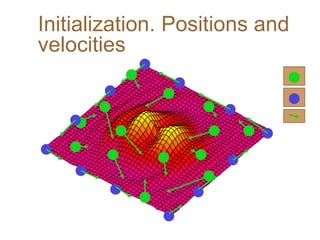

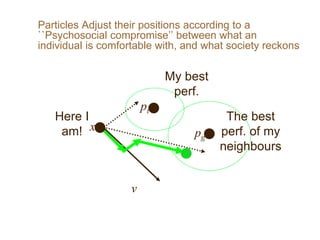

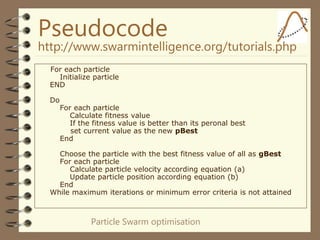

Particle Swarm Optimization (PSO) is an optimization technique introduced by Russ Eberhart and James Kennedy in 1995. Candidate solutions, called particles, move through the search space by repeatedly updating their velocities and positions. Each particle remembers the best position it has found so far and shares information with neighboring particles, which guides its movement toward potentially better solutions. The basic steps of PSO are to initialize the particles with random positions and velocities, then iteratively update each particle's velocity and position based on its personal best and its neighborhood best until a termination criterion (for example, a maximum number of iterations or function evaluations) is met.
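The steps above can be sketched in Python as a minimal global-best ("gbest") PSO. This is an illustration under stated assumptions, not a reference implementation. It assumes that the whole swarm is every particle's neighborhood, that termination is a fixed iteration budget, and that the coefficient values (`c0 = 0.7`, `c1 = c2 = 1.5`) are illustrative choices not taken from the text. The function name `pso` and its parameters are hypothetical.

```python
import random
from typing import Callable, List, Tuple


def pso(
    f: Callable[[List[float]], float],
    dim: int,
    bounds: Tuple[float, float],
    n_particles: int = 30,
    iterations: int = 200,
    c0: float = 0.7,  # inertia on the previous velocity (illustrative value)
    c1: float = 1.5,  # pull toward the particle's own best (illustrative value)
    c2: float = 1.5,  # pull toward the swarm's best (illustrative value)
    seed: int = 0,
) -> Tuple[List[float], float]:
    """Minimise f with a basic global-best PSO (hypothetical helper, a sketch only)."""
    rng = random.Random(seed)
    lo, hi = bounds
    span = hi - lo

    # 1. Initialise particles with random positions and small random velocities.
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.1 * rng.uniform(-span, span) for _ in range(dim)] for _ in range(n_particles)]

    # Each particle starts with its initial position as its personal best.
    pbest = [p[:] for p in pos]
    pbest_val = [f(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]

    # 2. Iterate until the termination criterion (here: a fixed number of iterations).
    for _ in range(iterations):
        for i in range(n_particles):
            for d in range(dim):
                # Velocity update: inertia + personal-best pull + global-best pull.
                vel[i][d] = (c0 * vel[i][d]
                             + c1 * rng.random() * (pbest[i][d] - pos[i][d])
                             + c2 * rng.random() * (gbest[d] - pos[i][d]))
                # Position update uses the freshly computed velocity.
                pos[i][d] += vel[i][d]
            val = f(pos[i])
            # The particle remembers its own best position...
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                # ...and the swarm shares the best position found so far.
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val


# Usage: minimise the 5-dimensional sphere function, whose optimum is 0 at the origin.
best, best_val = pso(lambda x: sum(v * v for v in x), dim=5, bounds=(-5.0, 5.0))
print(best_val)  # should be close to 0
```

The global best is updated as soon as any particle improves on it (an asynchronous update), so later particles in the same iteration already use the newer best. Replacing `gbest` with the best of a smaller neighborhood (for example, a ring of adjacent particles) would give the "neighborhood best" variant described in the paragraph.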

![Particle Swarm optimisation: pseudocode](https://image.slidesharecdn.com/bicpso-221117095638-25ade749/85/introduction-pso-ppt-13-320.jpg)

Particle Swarm optimisation pseudocode (from http://www.swarmintelligence.org/tutorials.php):

Equation (a), velocity update:

$$v[] = c_0 \, v[] + c_1 \cdot \mathrm{rand}() \cdot (\mathit{pbest}[] - \mathit{present}[]) + c_2 \cdot \mathrm{rand}() \cdot (\mathit{gbest}[] - \mathit{present}[])$$

In the original method $c_0 = 1$, but many researchers now experiment with this parameter.

Equation (b), position update:

$$\mathit{present}[] = \mathit{present}[] + v[]$$
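Equations (a) and (b) can be applied to a single particle as follows. The sketch assumes that `rand()` draws a fresh uniform number in [0, 1) for every term and every dimension, which the pseudocode leaves open. It also treats the defaults `c1 = c2 = 2.0` as illustrative assumptions, and `update_particle` is a hypothetical helper name. `c0` defaults to 1, as in the original method.

```python
import random
from typing import Callable, List, Tuple


def update_particle(
    present: List[float],
    v: List[float],
    pbest: List[float],
    gbest: List[float],
    c0: float = 1.0,  # c0 = 1 in the original method
    c1: float = 2.0,  # illustrative value (assumption)
    c2: float = 2.0,  # illustrative value (assumption)
    rand: Callable[[], float] = random.random,
) -> Tuple[List[float], List[float]]:
    """Apply equation (a), then equation (b), to one particle (hypothetical helper)."""
    # Equation (a): new velocity, one rand() draw per term per dimension.
    new_v = [
        c0 * v[d]
        + c1 * rand() * (pbest[d] - present[d])
        + c2 * rand() * (gbest[d] - present[d])
        for d in range(len(present))
    ]
    # Equation (b): move the particle by its new velocity.
    new_present = [present[d] + new_v[d] for d in range(len(present))]
    return new_present, new_v


# Worked example with rand() fixed at 0.5 so the arithmetic can be checked by hand:
# dim 0: v = 0.5 + 2*0.5*(0-1) + 2*0.5*(0-1) = -1.5, present = 1.0 - 1.5 = -0.5
# dim 1: v = -0.5 + 2*0.5*(2-2) + 2*0.5*(0-2) = -2.5, present = 2.0 - 2.5 = -0.5
pos, vel = update_particle([1.0, 2.0], [0.5, -0.5], [0.0, 2.0], [0.0, 0.0], rand=lambda: 0.5)
print(pos, vel)  # [-0.5, -0.5] [-1.5, -2.5]
```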

![Some typical PSO results](https://image.slidesharecdn.com/bicpso-221117095638-25ade749/85/introduction-pso-ppt-21-320.jpg)

Some typical results (best result after 40 000 evaluations; optimum = 0, dimension = 30):

| 30D function | Search range | PSO Type 1" | Evolutionary algo. (Angeline 98) |
|---|---|---|---|
| Griewank | [±300] | 0.003944 | 0.4033 |
| Rastrigin | [±5] | 82.95618 | 46.4689 |
| Rosenbrock | [±10] | 50.193877 | 1610.359 |
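The slide names the three test functions but does not give their formulas. The sketch below assumes the standard textbook definitions of Griewank, Rastrigin, and Rosenbrock. Each has a global minimum of 0, which matches "optimum = 0" above, but the exact variants used in the original benchmark are not confirmed by the source. The function names are hypothetical.

```python
import math
from typing import Sequence


def griewank(x: Sequence[float]) -> float:
    """Griewank: minimum 0 at the origin (searched in [-300, 300] per dimension above)."""
    s = sum(xi * xi for xi in x) / 4000.0
    p = math.prod(math.cos(xi / math.sqrt(i)) for i, xi in enumerate(x, start=1))
    return 1.0 + s - p


def rastrigin(x: Sequence[float]) -> float:
    """Rastrigin: minimum 0 at the origin (searched in [-5, 5] per dimension above)."""
    return 10.0 * len(x) + sum(xi * xi - 10.0 * math.cos(2.0 * math.pi * xi) for xi in x)


def rosenbrock(x: Sequence[float]) -> float:
    """Rosenbrock: minimum 0 at (1, ..., 1) (searched in [-10, 10] per dimension above)."""
    return sum(100.0 * (x[i + 1] - x[i] ** 2) ** 2 + (1.0 - x[i]) ** 2
               for i in range(len(x) - 1))


# Check the optimum of each function in 30 dimensions, as in the table.
zeros = [0.0] * 30
ones = [1.0] * 30
print(griewank(zeros), rastrigin(zeros), rosenbrock(ones))  # 0.0 0.0 0.0
```

Lower values are better in the table, so each entry can be read as the distance from the known optimum of 0 after the evaluation budget is spent.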