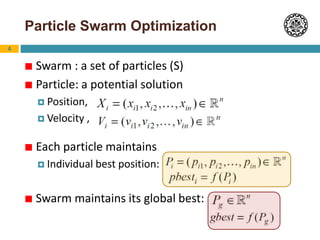

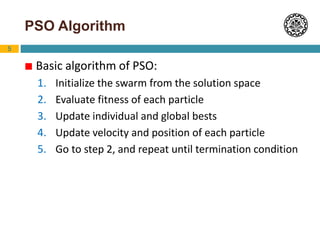

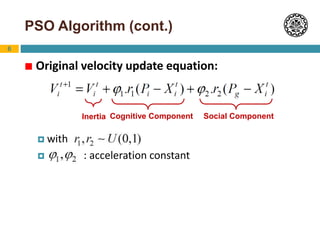

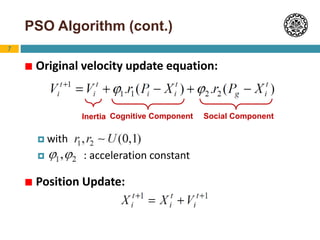

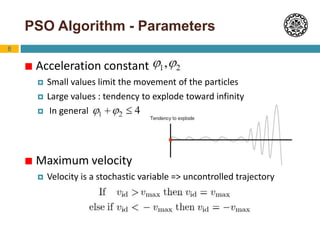

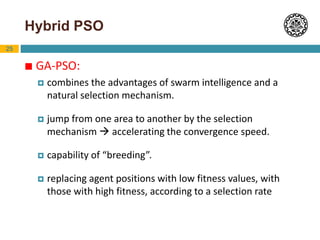

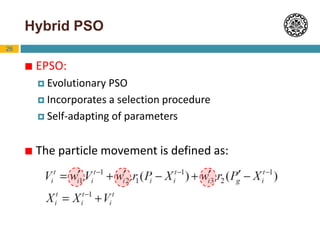

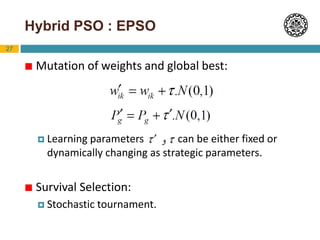

Particle Swarm Optimization (PSO) is an optimization technique inspired by the collective behavior of animal swarms such as bird flocks. It maintains a population (swarm) of candidate solutions (particles) and moves each particle according to the best position it has found so far (personal best) and the best position found by the whole swarm (global best). The basic algorithm initializes a swarm of random particles and then iteratively updates each particle's velocity and position with equations that combine three terms: inertia, a cognitive term pulling toward the personal best, and a social term pulling toward the global best. PSO has been shown to perform comparably to genetic algorithms while requiring fewer parameters to tune. Variants and hybrids of PSO have also been developed to improve performance on different problem types.
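The basic loop described above can be sketched as a global-best PSO in plain Python, minimizing a function over a box. The update applied per dimension is the inertia-weight form $v_{id} \leftarrow w\,v_{id} + c_1 r_1 (p_{id} - x_{id}) + c_2 r_2 (p_{gd} - x_{id})$ followed by $x_{id} \leftarrow x_{id} + v_{id}$. This is a minimal sketch under stated assumptions: the parameter values (w = 0.7298, c1 = c2 = 1.49618) are commonly used settings chosen here for illustration, and the velocity clamp, position clipping, zero initial velocities, and the names `pso` and `sphere` are all hypothetical choices, not taken from the slides.

```python
import random


def pso(f, dim, bounds, n_particles=30, iters=200,
        w=0.7298, c1=1.49618, c2=1.49618, seed=0):
    """Minimize f over the box [lo, hi]^dim with global-best PSO."""
    rng = random.Random(seed)
    lo, hi = bounds
    vmax = hi - lo  # assumed velocity clamp: one box width per step
    # Random initial positions, zero initial velocities (an assumption).
    xs = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vs = [[0.0] * dim for _ in range(n_particles)]
    # Personal bests start at the initial positions.
    pbest = [x[:] for x in xs]
    pbest_f = [f(x) for x in xs]
    # Global best is the best personal best in the swarm.
    g = min(range(n_particles), key=lambda i: pbest_f[i])
    gbest, gbest_f = pbest[g][:], pbest_f[g]

    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                v = (w * vs[i][d]                            # inertia
                     + c1 * r1 * (pbest[i][d] - xs[i][d])    # cognitive
                     + c2 * r2 * (gbest[d] - xs[i][d]))      # social
                vs[i][d] = max(-vmax, min(vmax, v))
                # Move, then clip the position back into the search box.
                xs[i][d] = max(lo, min(hi, xs[i][d] + vs[i][d]))
            fx = f(xs[i])
            if fx < pbest_f[i]:
                pbest[i], pbest_f[i] = xs[i][:], fx
                # Asynchronous update: gbest changes as soon as a particle beats it.
                if fx < gbest_f:
                    gbest, gbest_f = xs[i][:], fx
    return gbest, gbest_f


def sphere(x):
    """Test function with its minimum value 0 at the origin."""
    return sum(t * t for t in x)


best, best_f = pso(sphere, dim=5, bounds=(-5.0, 5.0))
print(best_f)
```

Updating the global best inside the particle loop (asynchronous PSO) is one design choice; a synchronous variant would recompute it once after all particles have moved.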

![15

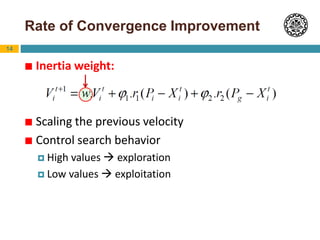

PSO with Inertia weight

can be decreased over time:

Linear [0.9 to 0.4]

Nonlinear

main disadvantage:

once the inertia weight is decreased, the swarm loses

its ability to search new areas (can not recover its

exploration mode).](https://image.slidesharecdn.com/pso-221102151817-2514413b/85/PSO-ppsx-15-320.jpg)
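The decreasing inertia weight can be written as a schedule evaluated once per iteration. The linear schedule below follows the 0.9 to 0.4 range given above. The slide does not specify which nonlinear form is meant, so the power-law schedule here is only one hypothetical example, and both function names are illustrative.

```python
def linear_inertia(t, t_max, w_start=0.9, w_end=0.4):
    """Inertia weight decreasing linearly from w_start (t = 0) to w_end (t = t_max)."""
    return w_start - (w_start - w_end) * t / t_max


def nonlinear_inertia(t, t_max, w_start=0.9, w_end=0.4, power=2.0):
    """One possible nonlinear schedule (an assumption):
    w_end + (w_start - w_end) * (1 - t / t_max) ** power."""
    return w_end + (w_start - w_end) * (1.0 - t / t_max) ** power


t_max = 100
for t in (0, 25, 50, 75, 100):
    print(t, round(linear_inertia(t, t_max), 3), round(nonlinear_inertia(t, t_max), 3))
```

Either function can replace a constant `w` in a PSO loop. Because both schedules only ever decrease, late iterations favor exploitation, which is exactly the disadvantage noted above.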

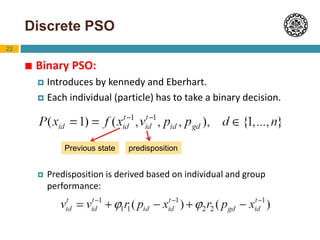

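![Slide 23: Binary PSO (cont.)](https://image.slidesharecdn.com/pso-221102151817-2514413b/85/PSO-ppsx-23-320.jpg)

Binary PSO (cont.)

The velocity $v_{id}$ determines a threshold in the probability function, so the value used as that threshold must lie in the range [0.0, 1.0]. The sigmoid function maps $v_{id}$ into this range:

$$S(v_{id}) = \frac{1}{1 + e^{-v_{id}}}$$

The state of the $d$th position in the bit string at time $t$ is then

$$x_{id}(t) = \begin{cases} 1 & \text{if } r_{id} < S\big(v_{id}(t)\big) \\ 0 & \text{otherwise} \end{cases}$$

where $r_{id}$ is a random number drawn from a uniform distribution on [0.0, 1.0].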
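The sigmoid-threshold rule for binary PSO can be sketched for a single particle as follows. This is a minimal illustration under assumptions: the velocity update has no inertia term, c1 = c2 = 2 and the clamp vmax = 4 are illustrative values, and the function name `binary_pso_step` is hypothetical.

```python
import math
import random


def sigmoid(v):
    """Map a real-valued velocity to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-v))


def binary_pso_step(x, v, pbest, gbest, rng, c1=2.0, c2=2.0, vmax=4.0):
    """One update of a single particle in binary PSO.

    x, pbest and gbest are lists of 0/1 bits; v is a list of real velocities.
    Returns the new (x, v).
    """
    new_x, new_v = [], []
    for d in range(len(x)):
        vd = (v[d]
              + c1 * rng.random() * (pbest[d] - x[d])
              + c2 * rng.random() * (gbest[d] - x[d]))
        # Clamp to [-vmax, vmax] so the bit probability stays away from 0 and 1.
        vd = max(-vmax, min(vmax, vd))
        # Bit d becomes 1 when a uniform random number falls below S(vd).
        new_x.append(1 if rng.random() < sigmoid(vd) else 0)
        new_v.append(vd)
    return new_x, new_v


rng = random.Random(42)
x, v = [0, 1, 0, 1], [0.0] * 4
x, v = binary_pso_step(x, v, pbest=[1, 1, 0, 0], gbest=[1, 1, 1, 1], rng=rng)
print(x, v)
```

Here the velocity is no longer a step size: it only sets how likely each bit is to be 1 on the next iteration.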

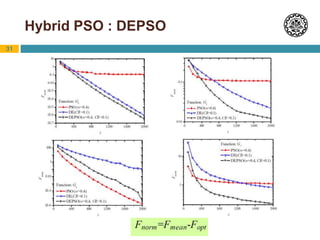

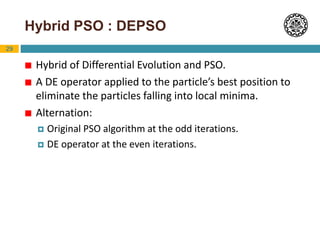

![Slide 30: Hybrid PSO: DEPSO](https://image.slidesharecdn.com/pso-221102151817-2514413b/85/PSO-ppsx-30-320.jpg)

Hybrid PSO: DEPSO

A differential evolution (DE) mutation is applied to the particles' best positions, where $k$ is a random integer in $[1, n]$ that ensures the mutation occurs in at least one dimension. The trial point is then formed dimension by dimension, for each $d$th dimension.
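A DE step on the personal bests, with a forced dimension $k$, can be sketched as below. The exact DEPSO mutation formula from the slide is not reproduced here: this sketch assumes the classic DE/rand/1 mutation with scale factor `F` and binomial crossover with rate `CR`, followed by greedy replacement of the personal best. The names `de_trial_point` and `de_update_pbest` and the values F = 0.5, CR = 0.9 are hypothetical.

```python
import random


def de_trial_point(pbests, i, rng, F=0.5, CR=0.9):
    """Build a DE trial point for particle i from the swarm's personal bests.

    Mutation (assumed DE/rand/1): pbests[a] + F * (pbests[b] - pbests[c]) for
    three distinct particles a, b, c other than i, so at least 4 particles
    are needed. Crossover (binomial): dimension d takes the mutant value if
    rand < CR or d == k, so at least one dimension comes from the mutant.
    """
    n = len(pbests[i])
    a, b, c = rng.sample([j for j in range(len(pbests)) if j != i], 3)
    k = rng.randrange(n)  # 0-based counterpart of the slide's k in [1, n]
    trial = []
    for d in range(n):
        if rng.random() < CR or d == k:
            trial.append(pbests[a][d] + F * (pbests[b][d] - pbests[c][d]))
        else:
            trial.append(pbests[i][d])
    return trial


def de_update_pbest(f, pbests, pbest_f, i, rng, F=0.5, CR=0.9):
    """Replace particle i's personal best with the trial point if it is better."""
    trial = de_trial_point(pbests, i, rng, F, CR)
    f_trial = f(trial)
    if f_trial < pbest_f[i]:
        pbests[i], pbest_f[i] = trial, f_trial


def sphere(x):
    return sum(t * t for t in x)


rng = random.Random(7)
pbests = [[rng.uniform(-5.0, 5.0) for _ in range(3)] for _ in range(6)]
pbest_f = [sphere(p) for p in pbests]
before = sum(pbest_f)
for _ in range(50):
    for i in range(len(pbests)):
        de_update_pbest(sphere, pbests, pbest_f, i, rng)
print(before, sum(pbest_f))
```

In a full hybrid, a step like this would be interleaved with the usual PSO velocity and position updates; the greedy replacement guarantees that no personal best ever gets worse.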