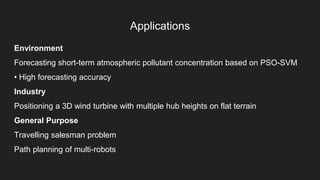

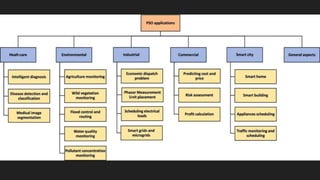

Particle swarm optimization (PSO) is a population-based stochastic optimization technique inspired by bird flocking and fish schooling. In PSO, each candidate solution, called a particle, moves through the search space and is evaluated by a fitness function. Each particle is attracted toward both its own best position found so far and the best position found by the whole swarm, and it updates its velocity and position until a termination criterion is met. PSO requires few parameters and is computationally inexpensive, which has made it a popular algorithm for problems such as smart city planning, healthcare diagnosis, and environmental modeling.

![Equations to update position and velocity

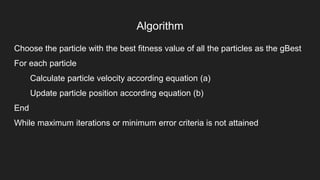

● v[] = v[] + c1 * rand() * (pbest[] - present[]) + c2 * rand() * (gbest[] - present[]) (a)

● present[] = persent[] + v[] (b)

v[] is the particle velocity,

persent[] is the current particle (solution),

pbest[] is the personal best,

gbest[] is the global best,

rand () is a random number between (0,1),

c1, c2 are learning factors.

usually c1 = c2 = 2](https://image.slidesharecdn.com/pso-240222100321-30b79582/85/Particle-swarm-optimization-PSO-ppt-presentation-9-320.jpg)
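
Update equations (a) and (b) can be sketched in Python roughly as follows. This is a minimal illustration and not the slide author's implementation. The function names `pso_step`, `minimize`, and `sphere`, the optional velocity clamp `v_max`, the search bound, the fixed iteration count used as the termination criterion, and the sphere test function are all assumptions introduced here for the example.

```python
import random


def pso_step(positions, velocities, pbest, gbest, c1=2.0, c2=2.0, v_max=None, rng=random):
    """Apply equations (a) and (b) in place to every particle and dimension."""
    for i in range(len(positions)):
        for d in range(len(positions[i])):
            # (a) v[] = v[] + c1*rand()*(pbest[] - present[]) + c2*rand()*(gbest[] - present[])
            velocities[i][d] += (
                c1 * rng.random() * (pbest[i][d] - positions[i][d])
                + c2 * rng.random() * (gbest[d] - positions[i][d])
            )
            # Optional clamp: an assumption added here, not part of equations (a) and (b).
            if v_max is not None:
                velocities[i][d] = max(-v_max, min(v_max, velocities[i][d]))
            # (b) present[] = present[] + v[]
            positions[i][d] += velocities[i][d]


def sphere(x):
    """Hypothetical fitness function to minimize: sum of squares."""
    return sum(xi * xi for xi in x)


def minimize(fitness, dim=2, n_particles=20, iterations=100, bound=5.0, v_max=1.0, seed=0):
    """Run a basic PSO loop and return (best position, best fitness)."""
    rng = random.Random(seed)
    positions = [[rng.uniform(-bound, bound) for _ in range(dim)] for _ in range(n_particles)]
    velocities = [[0.0] * dim for _ in range(n_particles)]
    # Each particle starts with its initial position as its personal best.
    pbest = [p[:] for p in positions]
    pbest_val = [fitness(p) for p in positions]
    best = min(range(n_particles), key=pbest_val.__getitem__)
    gbest, gbest_val = pbest[best][:], pbest_val[best]
    for _ in range(iterations):
        pso_step(positions, velocities, pbest, gbest, v_max=v_max, rng=rng)
        for i, p in enumerate(positions):
            val = fitness(p)
            if val < pbest_val[i]:  # new personal best
                pbest[i], pbest_val[i] = p[:], val
                if val < gbest_val:  # new global best
                    gbest, gbest_val = p[:], val
    return gbest, gbest_val


if __name__ == "__main__":
    best_position, best_value = minimize(sphere)
    print(best_position, best_value)
```

Passing `v_max=None` to `pso_step` gives exactly equations (a) and (b). The clamp is included only because velocities can grow without bound when c1 = c2 = 2 and nothing else limits them.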