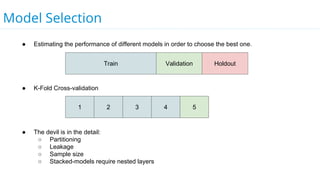

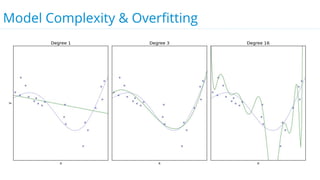

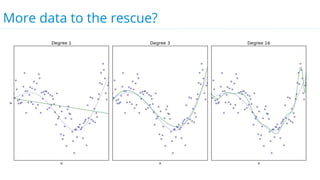

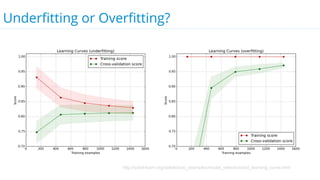

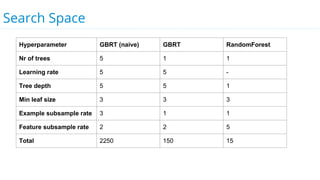

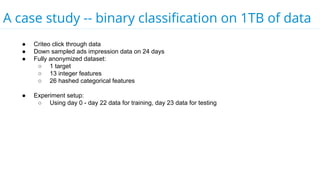

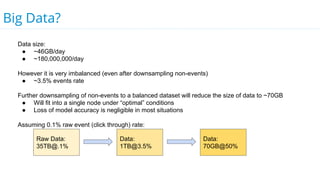

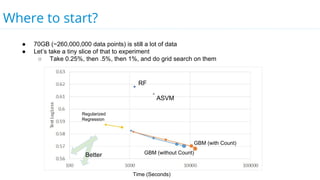

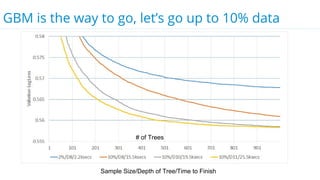

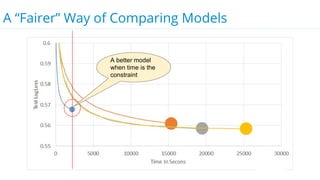

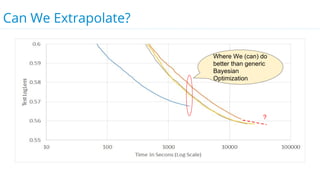

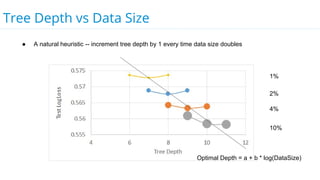

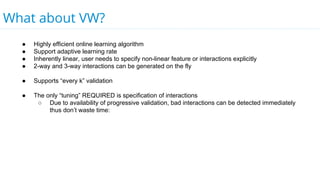

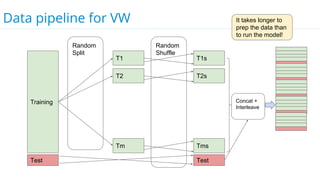

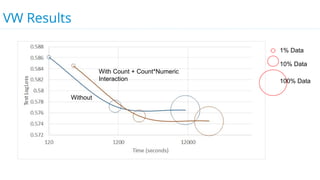

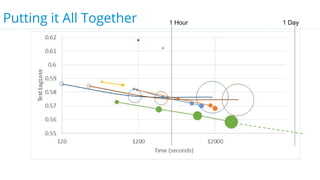

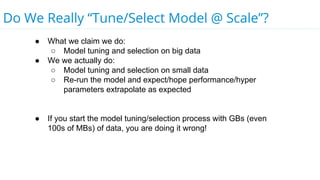

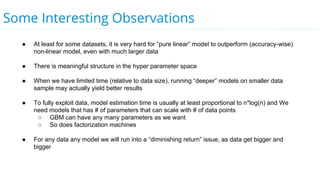

This document discusses model selection and tuning at scale on large datasets. It describes using different percentages of the 1 TB Criteo click-through dataset to test and tune gradient boosted trees (GBTs) and other models. Tests on small slices found that GBTs performed best. Tuning GBTs on larger slices, up to 10% of the data, showed that tree depth should increase logarithmically with data size. Online learning with Vowpal Wabbit (VW) was also efficient and needed minimal tuning. The document cautions that true model selection and tuning at scale means starting with data samples larger than gigabyte scale, so that conclusions are not extrapolated from small data.
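The logarithmic relationship between tree depth and data size can be illustrated with a small sketch. It assumes a model of the form depth = a + b * log10(n) and fits it by ordinary least squares. The function names `fit_log_depth` and `predict_depth` are hypothetical, and the sample sizes and depths are placeholder values chosen for illustration only. They are not results from the slides.

```python
import math


def fit_log_depth(sizes, depths):
    """Least-squares fit of depth = a + b * log10(n).

    sizes:  number of training rows in each tuning run
    depths: best tree depth found in each of those runs
    """
    xs = [math.log10(n) for n in sizes]
    k = len(xs)
    mean_x = sum(xs) / k
    mean_y = sum(depths) / k
    # Slope and intercept of simple linear regression on (log10(n), depth).
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, depths))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


def predict_depth(a, b, n):
    """Suggest an integer tree depth (at least 1) for a dataset of n rows."""
    return max(1, round(a + b * math.log10(n)))


# Placeholder observations (assumed, NOT taken from the source).
sizes = [10**5, 10**6, 10**7]
depths = [4, 6, 8]

a, b = fit_log_depth(sizes, depths)
suggested = predict_depth(a, b, 10**8)
print(f"a={a:.2f}, b={b:.2f}, suggested depth for 1e8 rows: {suggested}")
```

A fit like this only gives a starting point for the search on a larger slice. In line with the caution above, the depth should still be validated on the larger sample rather than trusted as an extrapolation from small data.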