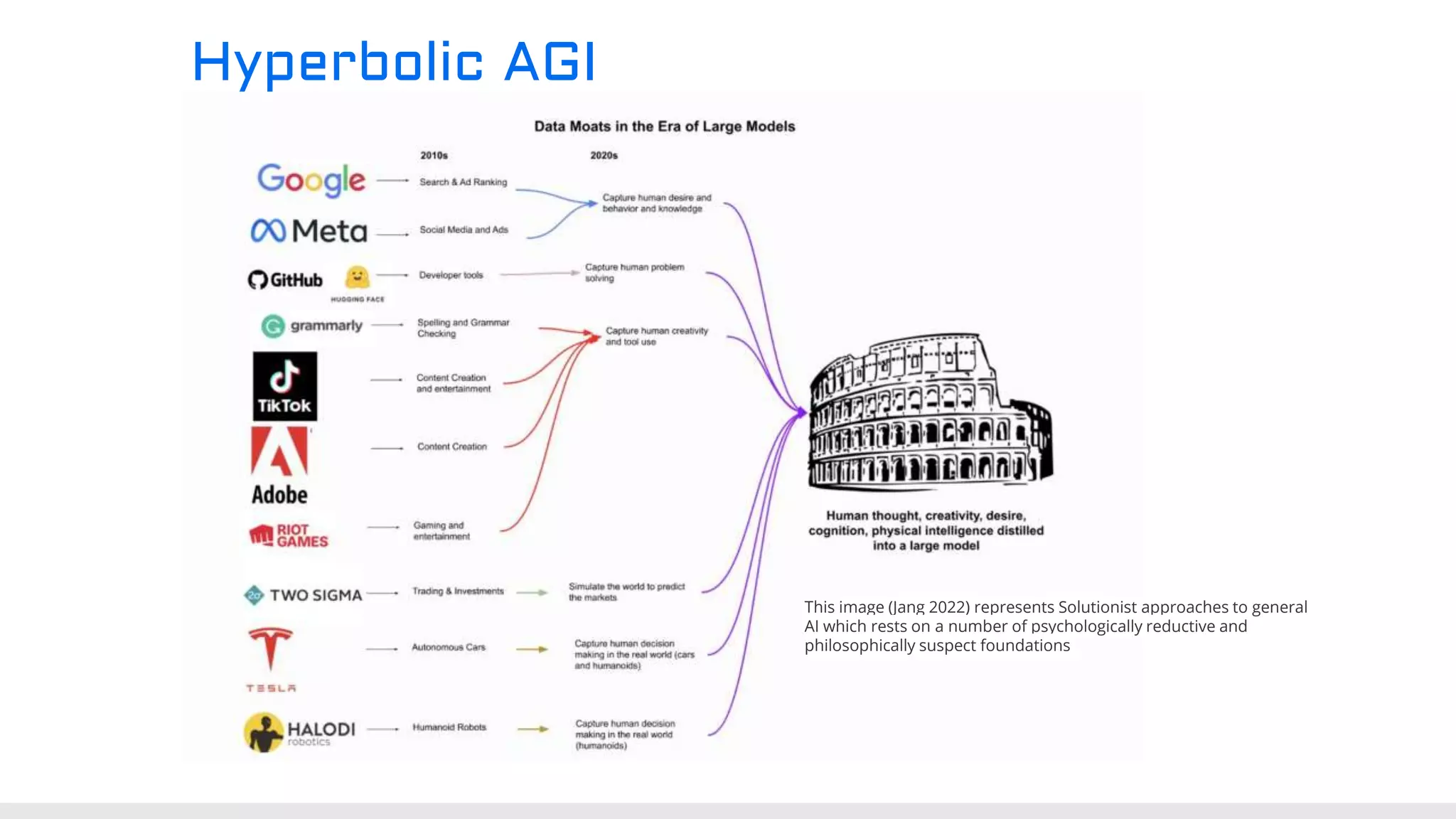

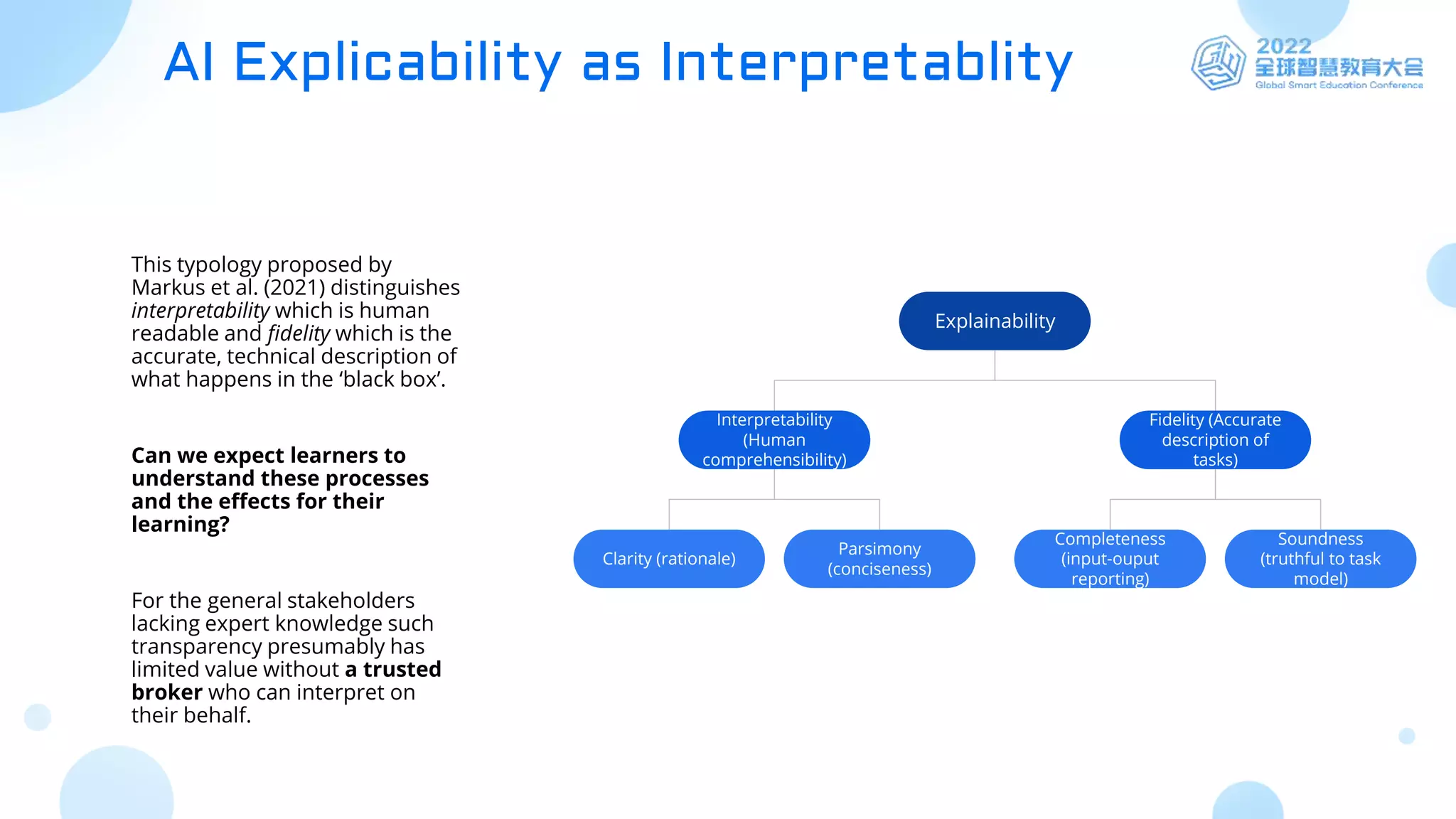

The document discusses the intersection of AI, ethics, and education, focusing on the implications of machine learning and data tracking in educational settings. It raises concerns about algorithmic bias, the shortcomings of current AI curricula, and the need for explainability in AI systems. The ongoing adoption of AI in education poses challenges for transparency and equity, and the human costs of AI development are often obscured.

![References](https://image.slidesharecdn.com/20220819openminingeducationethicsai-220819112716-a3c70543/75/Open-Mining-Education-Ethics-AI-18-2048.jpg)

References

Baker, R. S., & Hawn, A. (2021). Algorithmic Bias in Education. https://doi.org/10.35542/osf.io/pbmvz

Birhane, A. (2021). Algorithmic injustice: a relational ethics approach. Patterns, 2(2), 100205.

Birhane, A., Ruane, E., Laurent, T., Brown, M. S., Flowers, J., Ventresque, A., & Dancy, C. L. (2022). The Forgotten Margins of AI Ethics. FAccT '22: Proceedings of the 2022 ACM Conference on Fairness, Accountability, and Transparency (forthcoming). https://doi.org/10.1145/3531146.3533157 / https://arxiv.org/abs/2205.04221v1

Coghlan, S., Miller, T., & Paterson, J. (2021). Good Proctor or “Big Brother”? Ethics of Online Exam Supervision Technologies. Philosophy and Technology, 34, 1581–1606. https://doi.org/10.1007/s13347-021-00476-1

Floridi, L., & Cowls, J. (2019). A Unified Framework of Five Principles for AI in Society. Harvard Data Science Review, 1(1). https://doi.org/10.1162/99608f92.8cd550d1

Human Rights Watch (2022). “How Dare They Peep into My Private Life?” Children’s Rights Violations by Governments that Endorsed Online Learning During the Covid-19 Pandemic. Human Rights Watch. https://www.hrw.org/report/2022/05/25/how-dare-they-peep-my-private-life/childrens-rights-violations-governments

Lambert, S., & Czerniewicz, L. (2020). Approaches to Open Education and Social Justice Research. Journal of Interactive Media in Education, 2020(1), 1. http://doi.org/10.5334/jime.584

Lopez et al 2022)

Jang, E. (2022). All Roads Lead to Rome: The Machine Learning Job Market in 2022. Eric Jang. https://evjang.com/2022/04/25/rome.html

Luckin, R., Holmes, W., Griffiths, M., & Forcier, L. B. (2016). Intelligence Unleashed. An argument for AI in Education. London: Pearson. https://discovery.ucl.ac.uk/id/eprint/1475756/

Miao, F., & Holmes, W. (2022). International Forum on AI and Education: Ensuring AI as a Common Good to Transform Education, 7-8 December; synthesis report. https://discovery.ucl.ac.uk/id/eprint/10146850/1/381226eng.pdf

Mueller, S. T., Hoffman, R. R., Clancey, W., Emrey, A., & Klein, G. (2019). Explanation in human-AI systems: A literature meta-review, synopsis of key ideas and publications, and bibliography for explainable AI [Preprint]. DARPA XAI Literature Review. February 9. https://arxiv.org/pdf/1902.01876.pdf

Noble, S. U. (2018). Algorithms of Oppression. NYU Press.

Samuel, S. (2021). AI’s Islamophobia problem. Vox. https://www.vox.com/future-perfect/22672414/ai-artificial-intelligence-gpt-3-bias-muslim

Wachter, S. (forthcoming). The Theory of Artificial Immutability: Protecting Algorithmic Groups under Anti-Discrimination Law (February 15, 2022). Tulane Law Review. Available at SSRN: https://ssrn.com/abstract=4099100 or http://dx.doi.org/10.2139/ssrn.4099100

Zuboff, S. (2019). The Age of Surveillance Capitalism. Public Affairs Books.