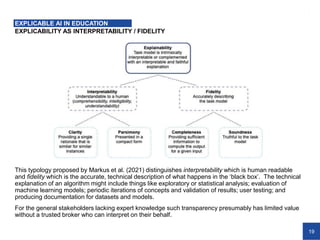

The document discusses explainable artificial intelligence in education (XAIED), emphasizing its growing influence and the ethical challenges that accompany AI technologies in educational contexts. It highlights the importance of transparency and explicability in algorithmic decision-making, the need for ethical accountability, and the bias and privacy concerns that arise when AI is used in learning environments. It also underscores the need for governance measures and for a balance between transparency and pedagogical values, and it advocates a holistic and inclusive approach to AI in education.