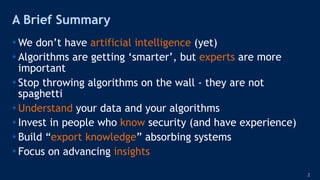

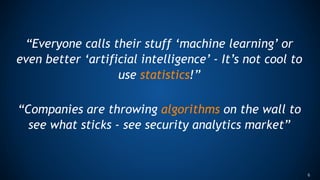

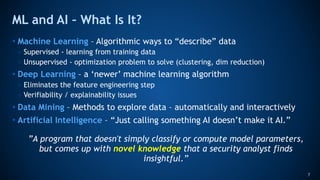

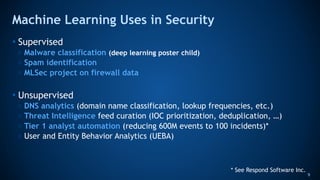

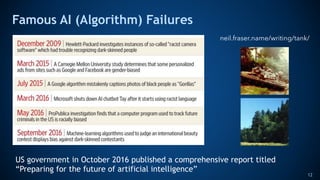

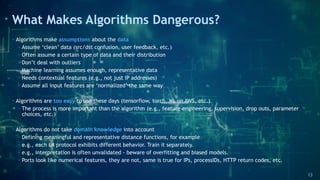

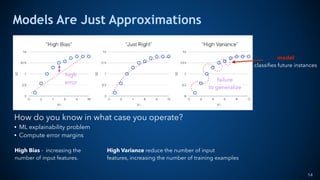

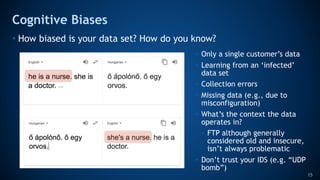

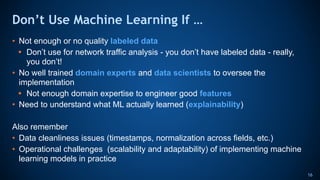

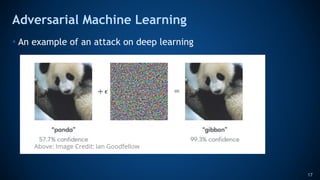

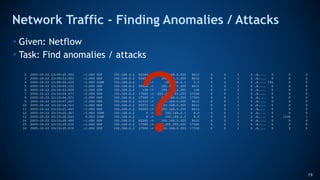

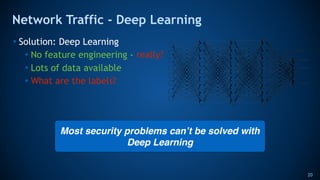

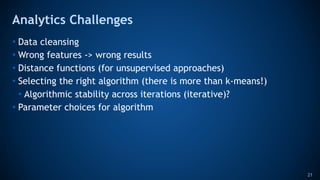

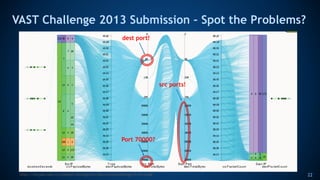

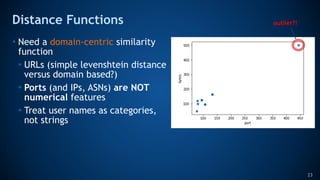

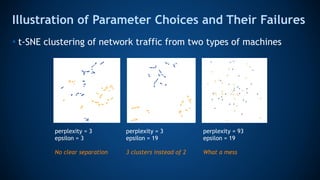

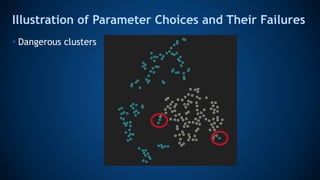

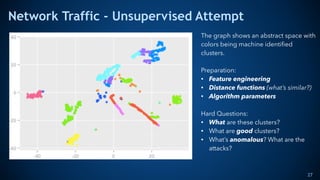

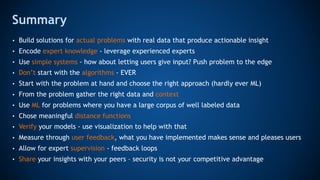

The document discusses the limitations and risks of using algorithms and machine learning in cybersecurity, emphasizing that experts are crucial for understanding data and its context. It warns against poorly designed algorithms that make assumptions about data, and urges a focus on practical knowledge and problem-solving rather than on algorithmic solutions alone. It highlights combining human expertise with data-driven insights as the way to improve security measures.