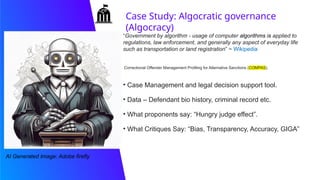

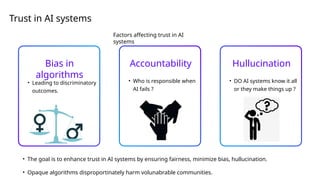

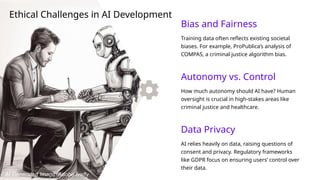

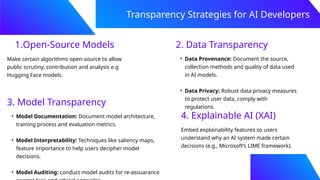

The document discusses the balance among transparency, trust, and regulation in artificial intelligence systems, highlighting challenges such as algorithmic bias, accountability, and the need for human oversight. It examines the implications of opaque algorithms and the ethical considerations that arise in AI development, and it advocates transparency strategies such as open-source models and explainable AI. It also emphasizes the need for regulatory frameworks and international cooperation to ensure ethical practices in AI deployment.