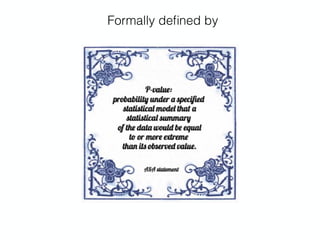

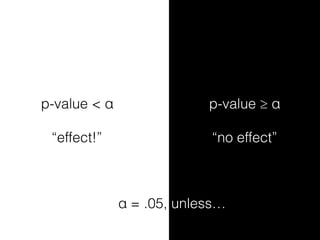

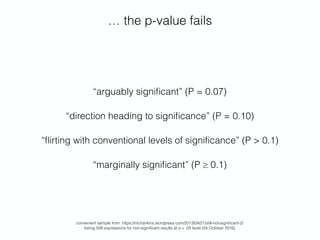

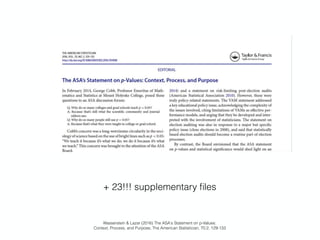

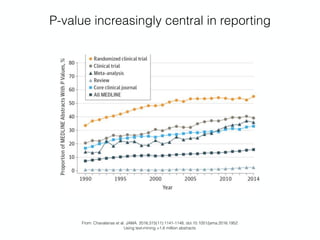

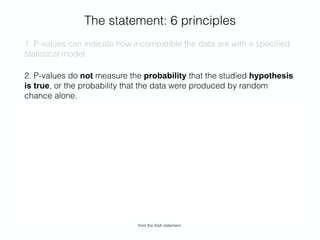

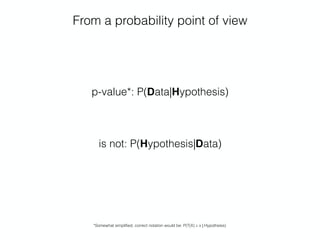

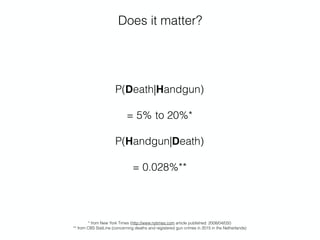

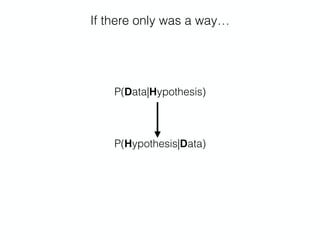

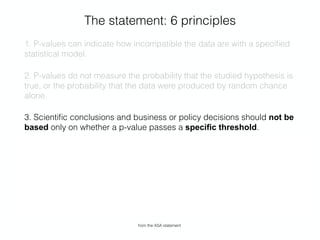

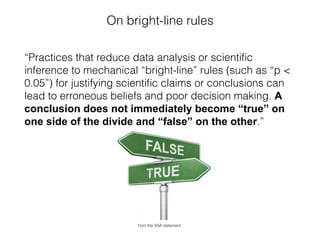

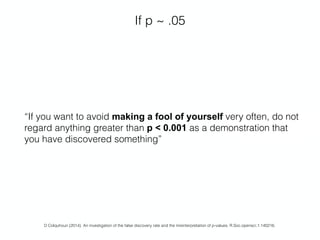

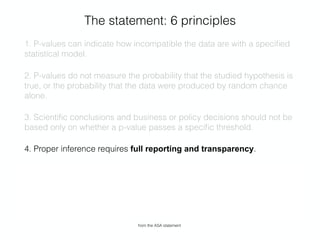

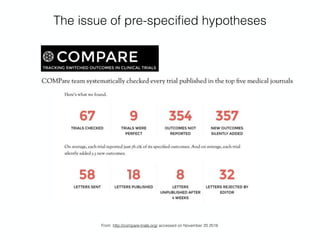

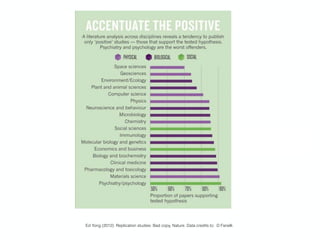

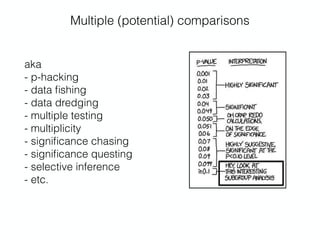

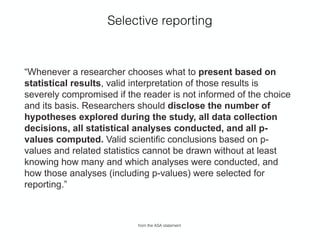

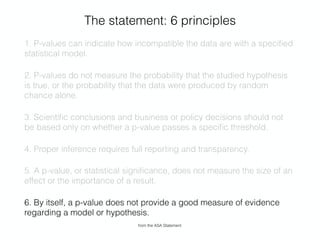

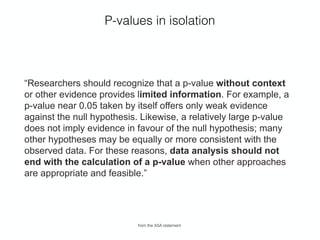

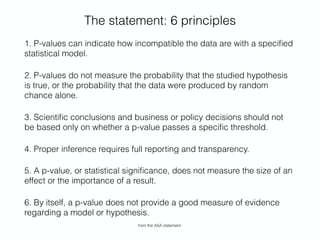

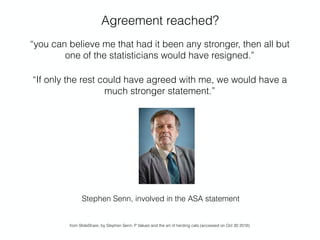

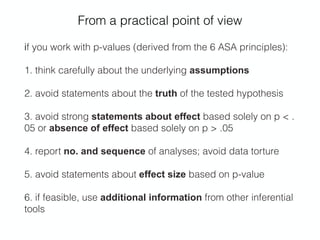

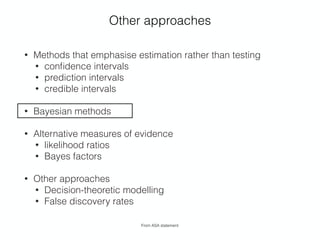

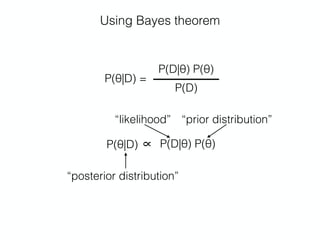

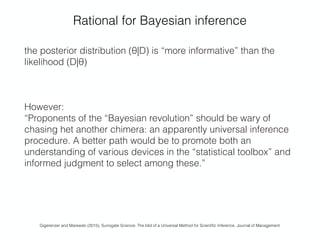

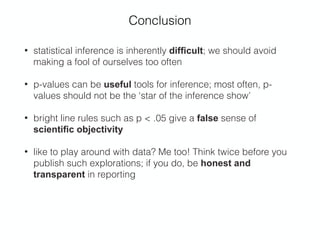

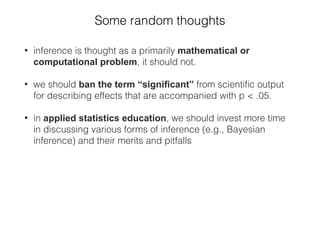

This workshop by Maarten van Smeden discusses the limitations of p-values in statistical inference and the common misconceptions about them, organized around the six principles in the American Statistical Association (ASA) statement on p-values. Those principles stress that a p-value does not measure the probability that a hypothesis is true, and that scientific conclusions and policy decisions should not rest only on whether a p-value passes a threshold. The workshop argues for better statistical practice, more transparent reporting, and consideration of alternative methods to make scientific research more reliable.
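
A small simulation can make the gap between a p-value and the truth of a hypothesis concrete. The sketch below is illustrative only and does not come from the workshop slides. It assumes a hypothetical research field in which 90% of tested null hypotheses are true, real effects have a standardized size of 0.5, each study has 20 observations with known unit variance, and a two-sided z-test is used at the 0.05 level. All function names and parameter values are assumptions chosen for the example.

```python
import math
import random


def two_sided_p(z):
    """Two-sided p-value for a standard normal test statistic z."""
    return math.erfc(abs(z) / math.sqrt(2))


def share_of_true_nulls_among_significant(
    n_studies=20000, prior_null=0.9, effect=0.5, n=20, alpha=0.05, seed=0
):
    """Simulate many studies and return the fraction of 'significant'
    results (p < alpha) for which the null hypothesis was actually true.

    All defaults are illustrative assumptions, not values from the workshop.
    """
    rng = random.Random(seed)
    significant = 0
    significant_and_null = 0
    for _ in range(n_studies):
        # Decide whether this study tests a true null or a real effect.
        null_is_true = rng.random() < prior_null
        mu = 0.0 if null_is_true else effect
        # Mean of n observations with unit variance has sd 1/sqrt(n).
        sample_mean = rng.gauss(mu, 1 / math.sqrt(n))
        z = sample_mean * math.sqrt(n)
        if two_sided_p(z) < alpha:
            significant += 1
            significant_and_null += null_is_true
    return significant_and_null / significant


if __name__ == "__main__":
    share = share_of_true_nulls_among_significant()
    print(f"Share of significant results with a true null: {share:.2f}")
```

Under these assumptions the share of significant results that come from true nulls is far larger than 0.05, which shows why `p < 0.05` cannot be read as "the null hypothesis has a 5% chance of being true". The exact share depends heavily on the assumed prior and power, which is part of the point: a p-value alone carries neither.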