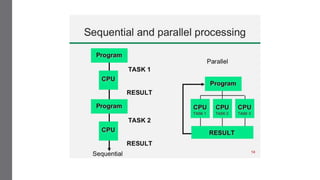

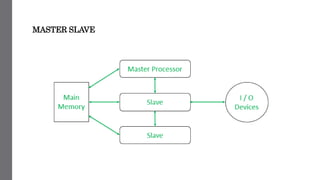

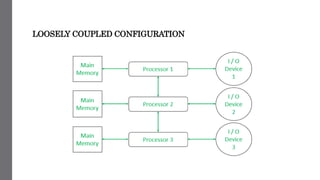

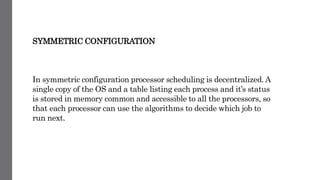

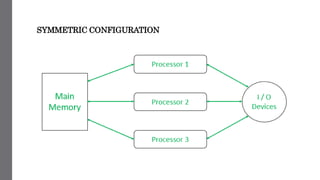

The document discusses parallel processing and typical multiprocessing configurations. Parallel processing divides a task across multiple CPUs so that the parts run at the same time, reducing the program's overall execution time. Architectures are classified by how many instruction streams and data streams they handle at once: single instruction single data (SISD, the conventional sequential case), multiple instruction single data (MISD), single instruction multiple data (SIMD), and multiple instruction multiple data (MIMD). Typical multiprocessing configurations include the master/slave configuration, in which one master processor manages the slave processors; loosely coupled configurations, in which independent computer systems work together; and symmetric configurations, in which scheduling is decentralized across all processors.
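
As a rough illustration of dividing a task across workers under a master-managed scheme, here is a minimal Python sketch. It is not taken from the document: the function names (`split_into_chunks`, `worker_sum_of_squares`, `master_sum_of_squares`) and the sum-of-squares task are hypothetical, chosen only for illustration. It also assumes a thread pool as a stand-in for separate processors; in CPython, threads do not give true CPU parallelism for this kind of work, so a real multi-CPU run would likely swap in `concurrent.futures.ProcessPoolExecutor`.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(data, n_chunks):
    """Split data into at most n_chunks contiguous slices of near-equal size."""
    size = max(1, -(-len(data) // n_chunks))  # ceiling division, at least 1
    return [data[i:i + size] for i in range(0, len(data), size)]


def worker_sum_of_squares(chunk):
    """Work done by one worker: the same operation applied to its own slice."""
    return sum(x * x for x in chunk)


def master_sum_of_squares(data, n_workers=4):
    """Master role: partition the data, hand chunks to workers, combine results."""
    chunks = split_into_chunks(data, n_workers)
    # Assumption: threads stand in for the worker processors of the text.
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partial_sums = list(pool.map(worker_sum_of_squares, chunks))
    return sum(partial_sums)


if __name__ == "__main__":
    print(master_sum_of_squares(list(range(10))))  # 0^2 + 1^2 + ... + 9^2 = 285
```

Every worker applies the same operation to a different slice of the data, which loosely mirrors the SIMD idea at the level of tasks, while the single coordinating function plays the part of the master. In a symmetric configuration there would be no such central coordinator; each processor would pick up its own work.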