![MPI_Allreduce: equivalent to doing MPI_Reduce followed by an MPI_Bcast (MPI_Reduce vs MPI_Allreduce). Source: https://mpitutorial.com/tutorials/mpi-reduce-and-allreduce/](https://image.slidesharecdn.com/mpicollectivecommunicationoperations-200309024343/85/Mpi-collective-communication-operations-2-320.jpg)
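
The equivalence stated on the slide can be illustrated without an MPI installation. The sketch below is a plain-Python model, not real MPI: each list index stands for a rank, and the helper names `mpi_reduce`, `mpi_bcast`, and `mpi_allreduce` are hypothetical stand-ins for the routines of the same name. The input values are made up for illustration.

```python
from functools import reduce as fold
import operator


def mpi_reduce(values, op, root=0):
    """Model of MPI_Reduce: only `root` ends up with the combined result.

    `None` marks ranks whose receive buffer holds no meaningful result.
    """
    combined = fold(op, values)
    return [combined if rank == root else None for rank in range(len(values))]


def mpi_bcast(values, root=0):
    """Model of MPI_Bcast: every rank ends up with the root's value."""
    return [values[root]] * len(values)


def mpi_allreduce(values, op):
    """Model of MPI_Allreduce: every rank ends up with the combined result."""
    combined = fold(op, values)
    return [combined] * len(values)


# One contribution per rank (ranks 0..3); illustrative values only.
per_rank = [5, 1, 2, 3]

after_reduce = mpi_reduce(per_rank, operator.add, root=0)
after_bcast = mpi_bcast(after_reduce, root=0)
after_allreduce = mpi_allreduce(per_rank, operator.add)

print(after_reduce)     # [11, None, None, None]
print(after_bcast)      # [11, 11, 11, 11]
print(after_allreduce)  # [11, 11, 11, 11]
```

In this model, reducing to rank 0 and then broadcasting from rank 0 leaves every rank with the same value as a single all-reduce. Real MPI implementations are free to use a different communication pattern to reach that result.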

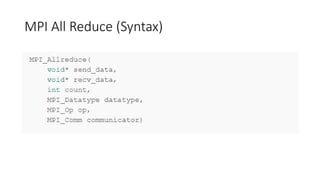

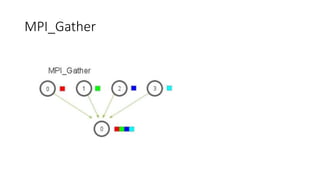

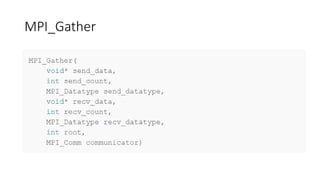

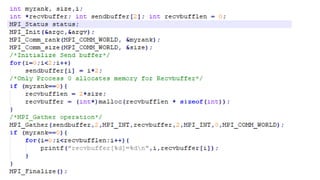

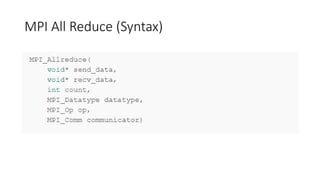

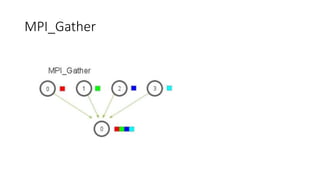

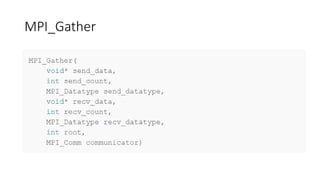

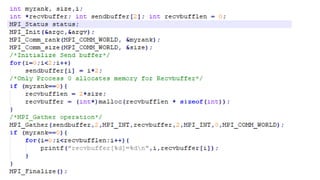

MPI collective communication routines such as MPI_Allreduce, MPI_Gather, and MPI_Scatter let the processes of a parallel program exchange data as a group. MPI_Allreduce combines a value from every process using a reduction operation such as sum or max and delivers the result to all processes. MPI_Gather collects each process's send buffer into a single receive buffer on the root process, ordered by rank. MPI_Scatter splits an array on the root process into equal-sized chunks and sends one chunk to each process, the root included. The exercises involve changing the input data and checking that these routines behave correctly across processes.
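
The scatter and gather data movement can be sketched in the same spirit. This is a plain-Python model under stated assumptions, not real MPI: list index stands for rank, `mpi_scatter` and `mpi_gather` are hypothetical helpers named after the routines they imitate, and the data and the "double each element" work step are invented for illustration.

```python
def mpi_scatter(sendbuf, nprocs):
    """Model of MPI_Scatter: the root's buffer is cut into equal chunks,
    and chunk i is delivered to rank i."""
    if len(sendbuf) % nprocs != 0:
        # MPI_Scatter sends the same count to every rank.
        raise ValueError("buffer length must be a multiple of nprocs")
    chunk = len(sendbuf) // nprocs
    return [sendbuf[r * chunk:(r + 1) * chunk] for r in range(nprocs)]


def mpi_gather(per_rank_bufs):
    """Model of MPI_Gather: the root receives every rank's buffer,
    concatenated in rank order."""
    return [x for buf in per_rank_bufs for x in buf]


root_data = [0, 1, 2, 3, 4, 5, 6, 7]    # array held by the root
chunks = mpi_scatter(root_data, nprocs=4)
print(chunks)    # [[0, 1], [2, 3], [4, 5], [6, 7]]

# Each rank works on its own chunk (here: doubles every element).
local_results = [[2 * x for x in chunk] for chunk in chunks]

gathered = mpi_gather(local_results)
print(gathered)  # [0, 2, 4, 6, 8, 10, 12, 14]
```

Changing `root_data` or the number of ranks and re-checking the printed lists mirrors the kind of exercise the summary describes.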
