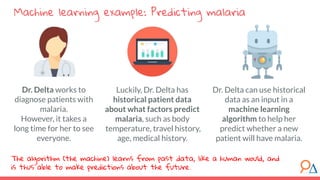

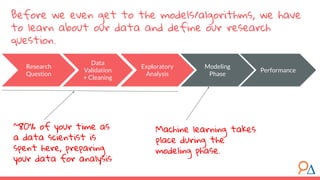

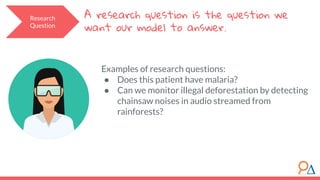

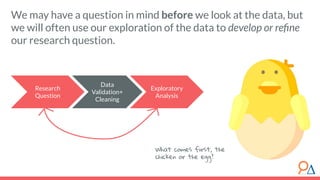

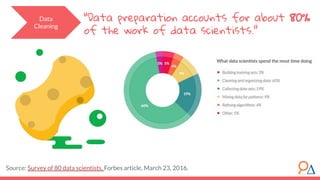

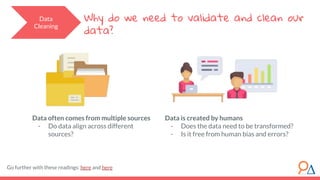

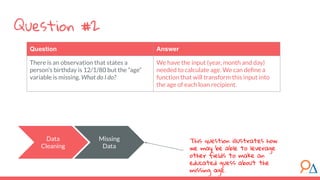

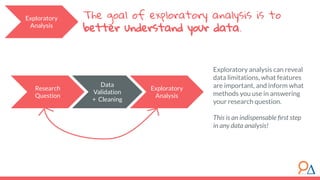

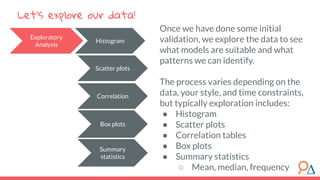

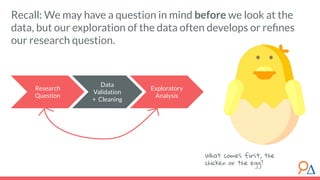

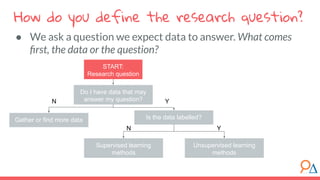

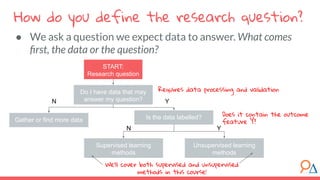

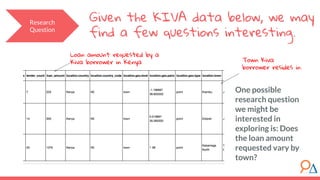

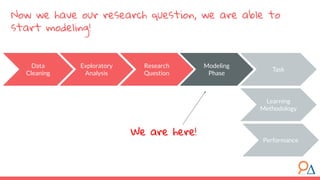

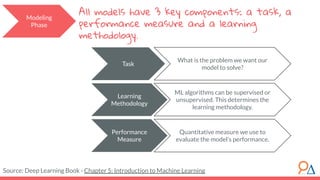

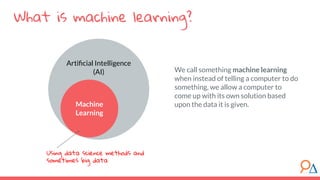

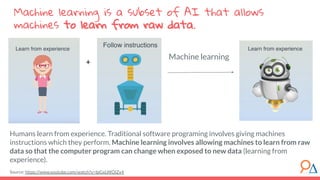

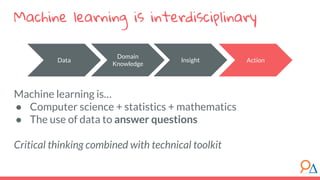

This document outlines a machine learning course developed by Delta Analytics, with modules on model selection, evaluation, and algorithms. It stresses defining research questions clearly and carrying out thorough data cleaning and exploratory analysis before starting any machine learning task. The course aims to empower communities to use data effectively for positive outcomes.

![There is a growing need for machine learning](https://image.slidesharecdn.com/module1introductiontomachinelearning4-190707185926/85/Module-1-introduction-to-machine-learning-10-320.jpg)

● There are huge amounts of data generated every day.

● Previously impossible problems are now solvable.

● Companies are increasingly demanding quantitative solutions.

“Every day, we create 2.5 quintillion bytes of data — so much that 90% of the data in the world today has been created in the last two years alone.” [1]

Sources:

[1] “What is Big Data,” IBM,