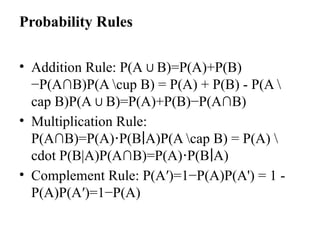

This unit explains the importance of statistical methods in analyzing and interpreting data in data science. It covers descriptive statistics to summarize data and probability concepts to model uncertainty. Inferential statistics, including sampling, hypothesis testing, confidence intervals, and regression analysis, are used to draw conclusions about populations from sample data