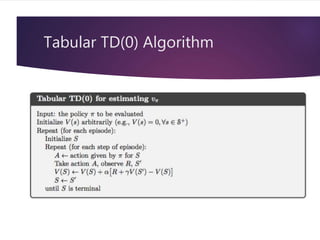

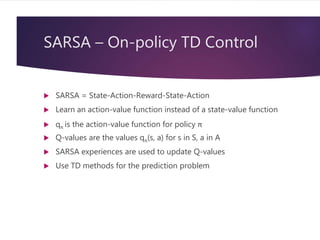

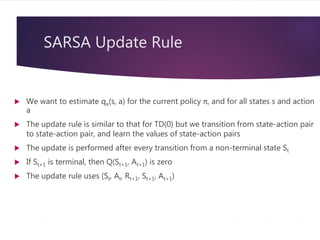

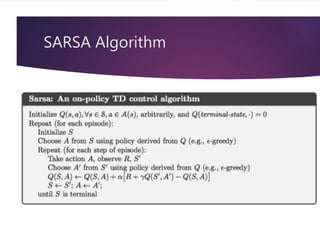

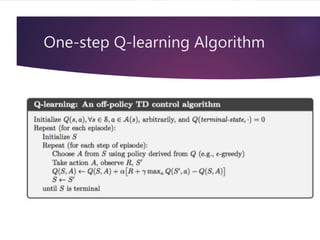

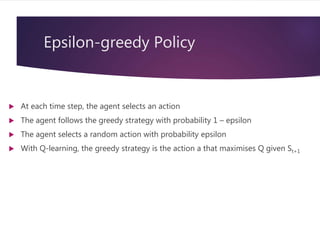

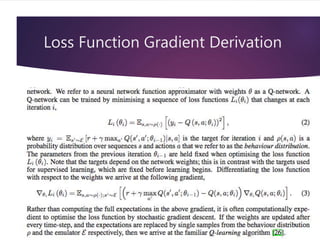

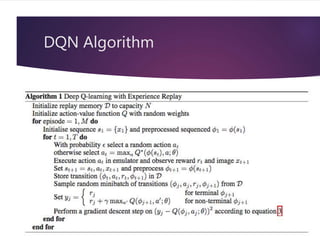

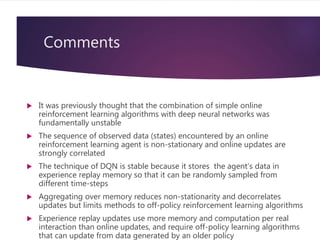

This document gives an overview of reinforcement learning and several of its key algorithms. It introduces core concepts such as Markov decision processes and value functions, temporal-difference learning methods such as Q-learning and SARSA, and policy gradient methods. It also describes deep reinforcement learning techniques such as deep Q-networks, which combine reinforcement learning with deep neural networks. Deep Q-networks use experience replay and fixed-length state representations so that a deep neural network can approximate the Q-function and learn successful policies from high-dimensional input such as images.

![Value Function (1)

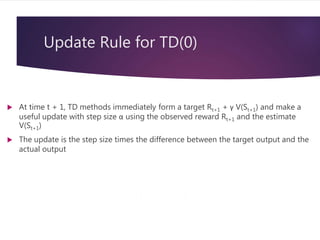

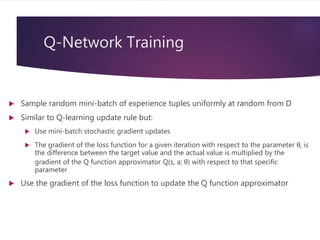

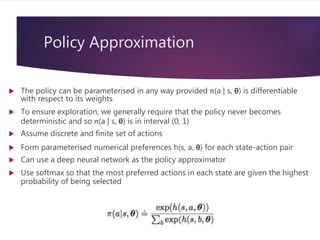

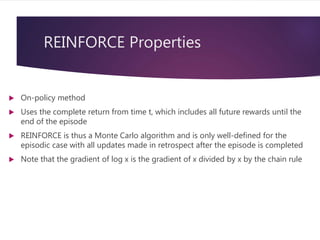

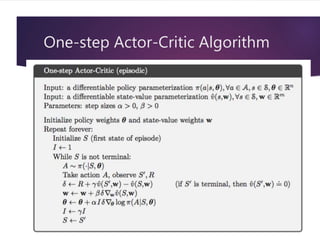

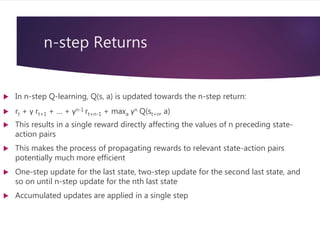

The reward signal indicates what is good in the short run while the value function

indicates what is good in the long run

The value of a state is the total amount of reward an agent can expect to

accumulate over the future, starting in that state

Compute the value using the states that are likely to follow the current state and

the rewards available in those states

Future rewards may be time-discounted with a factor in the interval [0, 1]](https://image.slidesharecdn.com/reinforcementlearningearning-220517080105-a405fdad/85/Reinforcement-Learning-10-320.jpg)
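To make the discounting idea concrete, here is a minimal sketch of the discounted sum of a finite reward sequence. The function name `discounted_return` and the sample rewards are assumptions chosen for illustration; they do not come from the slides.

```python
from typing import Sequence


def discounted_return(rewards: Sequence[float], gamma: float) -> float:
    """Return sum over t of gamma**t * rewards[t] for a finite sequence."""
    if not 0.0 <= gamma <= 1.0:
        raise ValueError("gamma must lie in the interval [0, 1]")
    total = 0.0
    # Work backwards so each step applies G_t = r_t + gamma * G_{t+1}.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


# Hypothetical reward sequence, invented for this example.
rewards = [1.0, 0.0, 2.0]
print(discounted_return(rewards, 0.5))  # 1 + 0.5*0 + 0.25*2 = 1.5
```

With a factor of 0 only the immediate reward counts, and with a factor of 1 all rewards count equally, which is the short-run versus long-run trade-off described above.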

![Markov Decision Process (MDP)](https://image.slidesharecdn.com/reinforcementlearningearning-220517080105-a405fdad/85/Reinforcement-Learning-18-320.jpg)

- Set of states S
- Set of actions A
- State transition probabilities p(s' | s, a). This is the probability distribution over the state space given we take action a in state s.
- Discount factor γ in [0, 1]
- Reward function R: S × A → ℝ. For simplicity, assume discrete rewards.
- The MDP is finite if both S and A are finite.
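The components of a finite MDP map naturally onto plain dictionaries. The sketch below is only an illustration: the two-state MDP (`cold`, `warm`), its probabilities, and its rewards are all invented, and the solver uses value iteration, a standard dynamic-programming method that the slide itself does not describe.

```python
from typing import Dict, List, Tuple

State = str
Action = str

# Hypothetical two-state MDP, invented purely for illustration.
STATES: List[State] = ["cold", "warm"]
ACTIONS: List[Action] = ["stay", "move"]
GAMMA = 0.9  # discount factor in [0, 1]

# P[(s, a)] maps each next state s' to p(s' | s, a); each row sums to 1.
P: Dict[Tuple[State, Action], Dict[State, float]] = {
    ("cold", "stay"): {"cold": 1.0},
    ("cold", "move"): {"warm": 0.8, "cold": 0.2},
    ("warm", "stay"): {"warm": 1.0},
    ("warm", "move"): {"cold": 1.0},
}

# R[(s, a)] is the reward for taking action a in state s.
R: Dict[Tuple[State, Action], float] = {
    ("cold", "stay"): 0.0,
    ("cold", "move"): -1.0,
    ("warm", "stay"): 1.0,
    ("warm", "move"): 0.0,
}


def value_iteration(tol: float = 1e-8, max_iters: int = 10_000) -> Dict[State, float]:
    """Repeat the Bellman optimality backup until values change by less than tol."""
    values = {s: 0.0 for s in STATES}
    for _ in range(max_iters):
        new_values = {
            s: max(
                R[(s, a)] + GAMMA * sum(p * values[s2] for s2, p in P[(s, a)].items())
                for a in ACTIONS
            )
            for s in STATES
        }
        delta = max(abs(new_values[s] - values[s]) for s in STATES)
        values = new_values
        if delta < tol:
            break
    return values


optimal_values = value_iteration()
print(optimal_values)
```

In this toy example the best choice in `warm` is to stay and collect a reward of 1 forever, giving a value of 1 / (1 - 0.9) = 10, while `cold` is worth less because reaching `warm` costs a reward of -1 and can fail.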