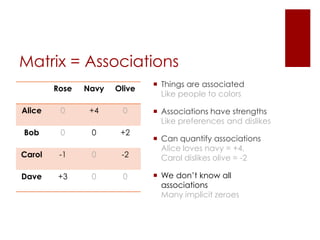

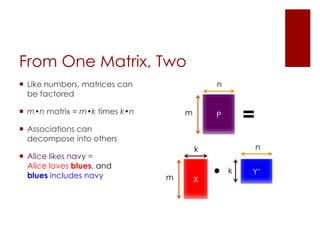

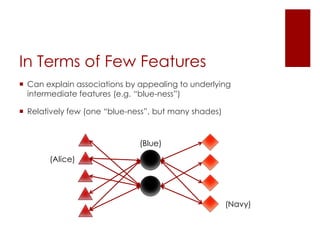

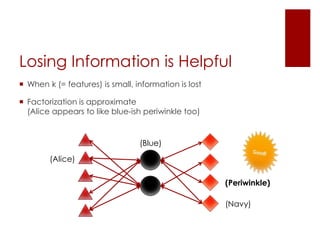

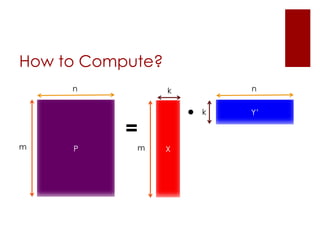

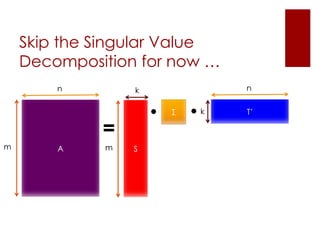

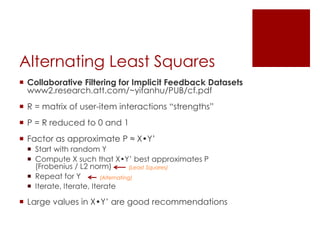

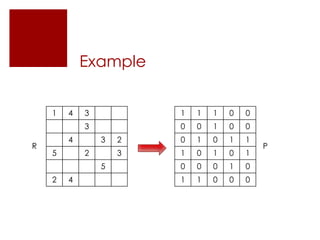

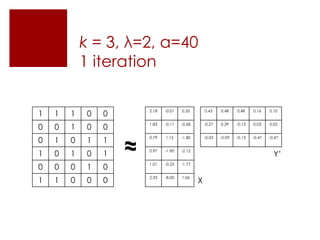

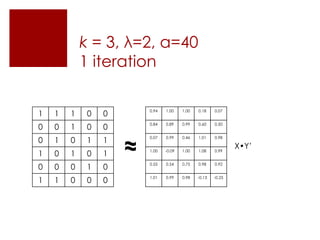

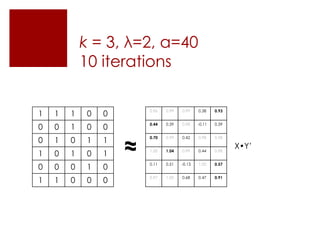

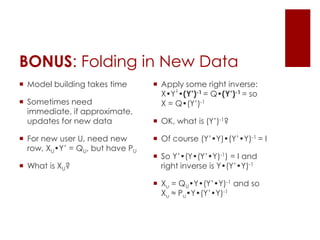

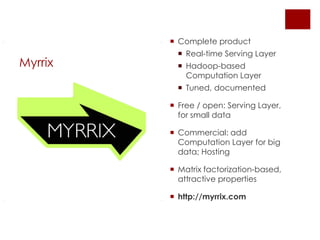

This document discusses matrix factorization techniques for recommendation systems. It explains that user-item interaction data can be represented as a matrix and approximated by the product of two low-rank matrices that capture latent features: one matrix holds a feature vector for each user, the other a feature vector for each item. The document outlines the alternating least squares (ALS) algorithm for computing the two factor matrices and discusses how the technique can be implemented in Apache Mahout and Myrrix for scalable recommendations.
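
To make the alternating least squares idea concrete, here is a minimal NumPy sketch. It is not the Mahout or Myrrix implementation: the function name `als`, its parameters (`rank`, `reg`, `iters`, `seed`), and the simplification that every entry of the interaction matrix is treated as observed are all assumptions made for illustration. Production implementations typically fit only the observed entries or weight them by confidence.

```python
import numpy as np

def als(R, rank=2, reg=0.1, iters=20, seed=0):
    """Approximate R (users x items) as X @ Y.T.

    X is (users x rank) and Y is (items x rank). Illustrative sketch:
    every entry of R is treated as observed, which real recommenders
    usually do not assume.
    """
    rng = np.random.default_rng(seed)
    n_users, n_items = R.shape
    X = rng.normal(scale=0.1, size=(n_users, rank))
    Y = rng.normal(scale=0.1, size=(n_items, rank))
    ridge = reg * np.eye(rank)  # L2 regularization keeps each solve well-posed

    for _ in range(iters):
        # Hold Y fixed and solve the regularized least-squares problem for X:
        # (Y^T Y + reg*I) X^T = Y^T R^T
        X = np.linalg.solve(Y.T @ Y + ridge, Y.T @ R.T).T
        # Hold X fixed and solve the same kind of problem for Y:
        # (X^T X + reg*I) Y^T = X^T R
        Y = np.linalg.solve(X.T @ X + ridge, X.T @ R).T
    return X, Y
```

Calling `X, Y = als(R, rank=2)` on a small interaction matrix and then computing `X @ Y.T` gives predicted scores for every user-item pair. Each half-step is an ordinary ridge regression with a closed-form solution, which is why the method alternates between the two factors rather than optimizing both at once.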