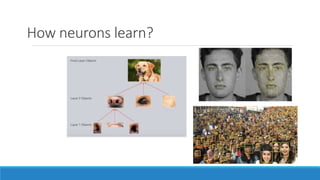

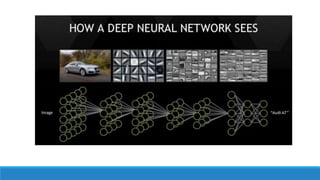

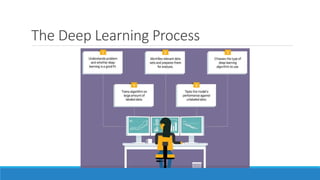

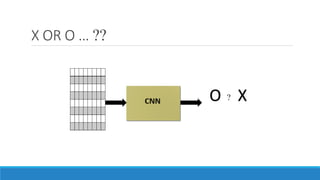

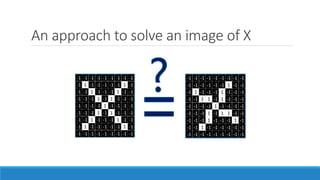

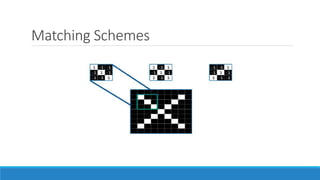

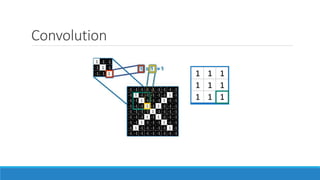

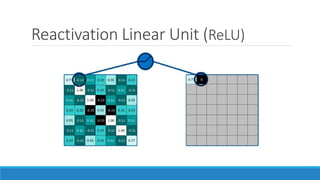

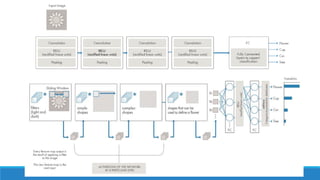

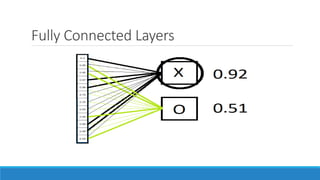

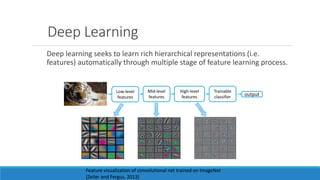

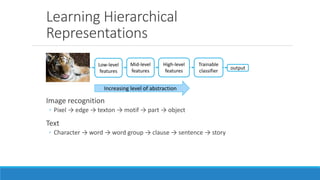

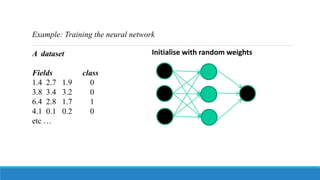

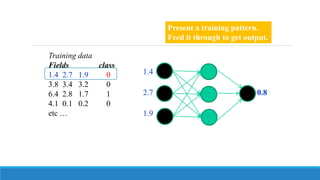

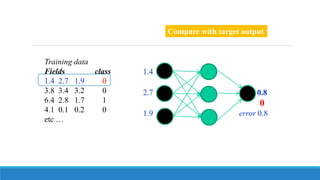

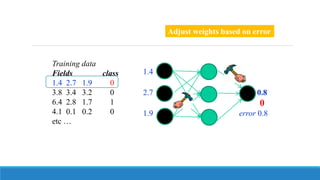

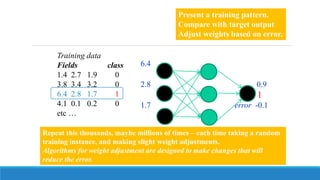

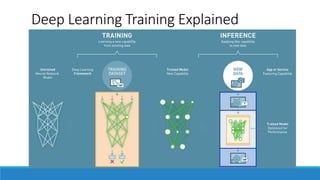

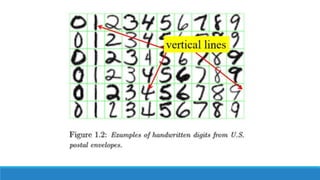

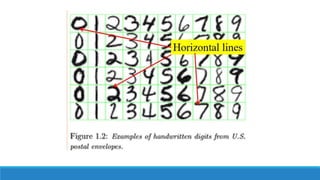

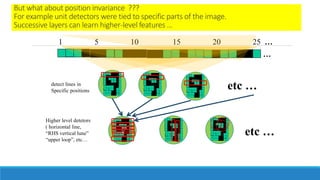

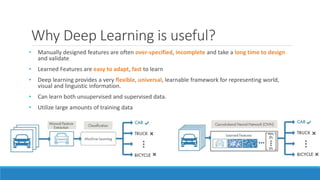

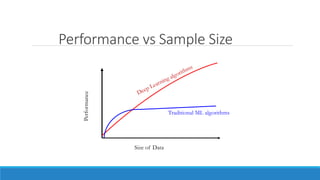

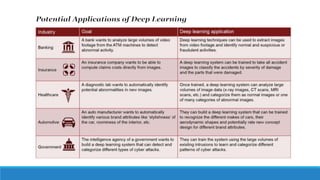

The document discusses deep neural networks (DNNs) and deep learning. It explains that deep learning stacks multiple layers to learn hierarchical representations from raw input data: lower layers identify low-level features, and higher layers combine these into more complex patterns. Deep learning models are trained on large datasets by adjusting their weights to minimize prediction error. The applications discussed include image recognition, natural language processing, drug discovery, and the analysis of satellite imagery. The document outlines both advantages, such as state-of-the-art performance, and drawbacks, such as high computational cost.
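
As a rough illustration of "adjusting weights to minimize error", the sketch below trains a tiny two-layer network with plain gradient descent. It is not taken from the slides: the XOR toy dataset, the function name `train_xor`, the layer sizes, and the learning rate are all assumptions chosen to keep the example small, and a real deep learning model would use far more layers, far more data, and a dedicated framework.

```python
import math
import random


def train_xor(hidden=4, steps=2000, lr=0.5, seed=0):
    """Train a 2-input, `hidden`-unit, 1-output network on XOR (toy data, assumed)."""
    rng = random.Random(seed)
    data = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0),
            ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]

    # Lower layer: maps raw inputs to hidden features.
    w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    # Higher layer: combines hidden features into one prediction.
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0

    def forward(x):
        h = [math.tanh(w1[j][0] * x[0] + w1[j][1] * x[1] + b1[j])
             for j in range(hidden)]
        z = sum(w2[j] * h[j] for j in range(hidden)) + b2
        return h, 1.0 / (1.0 + math.exp(-z))  # sigmoid output in (0, 1)

    losses = []
    n = len(data)
    for _ in range(steps):
        gw1 = [[0.0, 0.0] for _ in range(hidden)]
        gb1 = [0.0] * hidden
        gw2 = [0.0] * hidden
        gb2 = 0.0
        loss = 0.0
        for x, y in data:
            h, p = forward(x)
            loss += (p - y) ** 2 / n                      # mean squared error
            dz = 2.0 * (p - y) / n * p * (1.0 - p)        # gradient at output pre-activation
            gb2 += dz
            for j in range(hidden):
                gw2[j] += dz * h[j]
                dh = dz * w2[j] * (1.0 - h[j] ** 2)       # backpropagate through tanh
                gb1[j] += dh
                gw1[j][0] += dh * x[0]
                gw1[j][1] += dh * x[1]
        losses.append(loss)

        # Gradient descent step: move each weight against its error gradient.
        for j in range(hidden):
            w2[j] -= lr * gw2[j]
            b1[j] -= lr * gb1[j]
            w1[j][0] -= lr * gw1[j][0]
            w1[j][1] -= lr * gw1[j][1]
        b2 -= lr * gb2

    return losses, (lambda x: forward(x)[1])


if __name__ == "__main__":
    losses, predict = train_xor()
    print("first loss:", losses[0], "last loss:", losses[-1])
    for x in [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]:
        print(x, predict(x))
```

Comparing the first and last recorded loss shows whether the weight updates reduced the error. The hidden layer plays the role of the "lower-level features" and the output layer the role of the higher-level combination, though at this scale the hierarchy is only schematic.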