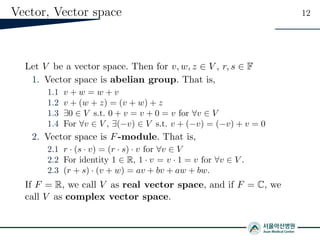

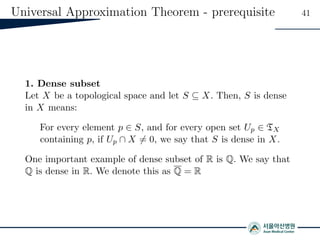

The document provides a comprehensive overview of mathematical concepts essential for deep learning, including linear algebra, manifolds, and the universal approximation theorem. It discusses various elements of vector spaces, linear maps, and their applications in neural networks. Additionally, it addresses the manifold hypothesis and conjecture, emphasizing their significance in understanding high-dimensional data structures.

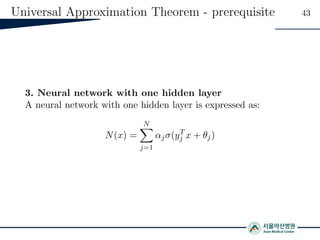

![Universal Approximation Theorem - prerequisite 42

2. Sigmoidal function

A sigmoidal function is a monotonically increasing continuous

function σ : R → [0, 1] s.t.

σ(x) =

1 x → +∞

0 x → −∞](https://image.slidesharecdn.com/20191016mi2rllabseminarmathematics-191017083301/85/Mathematics-for-Deep-Learning-1-42-320.jpg)