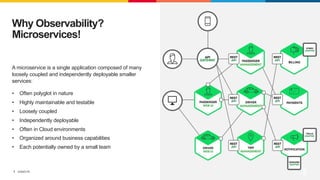

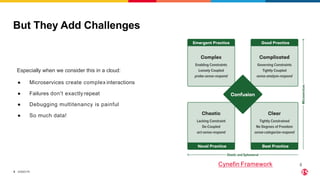

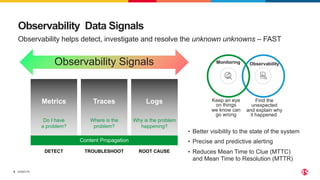

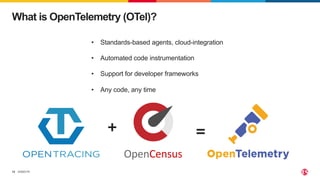

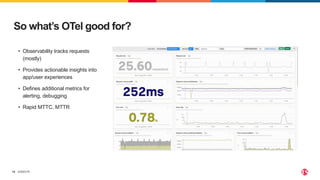

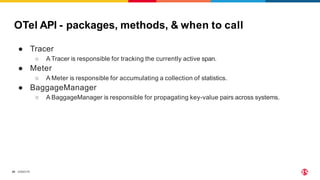

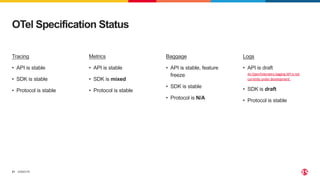

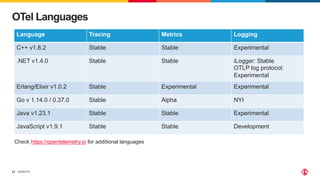

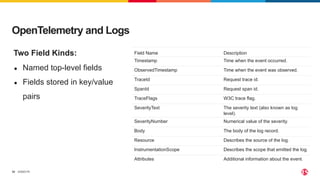

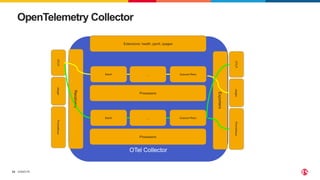

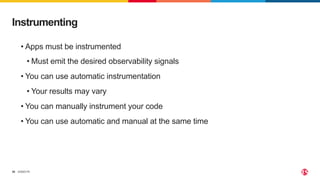

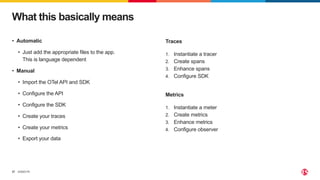

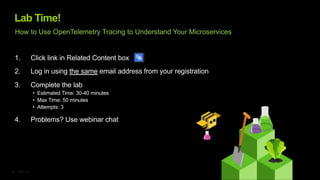

This document outlines a webinar on microservices and observability, with an emphasis on using OpenTelemetry to monitor microservice applications and collect data from them. It covers the challenges that microservices pose, the structure and benefits of OpenTelemetry, and guidance on implementing observability in applications. The document also includes an agenda, speaker information, and instructions for hands-on labs.