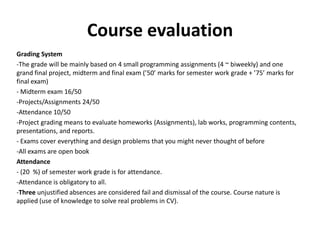

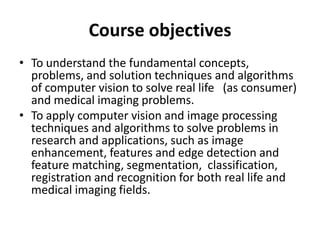

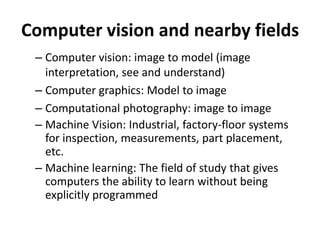

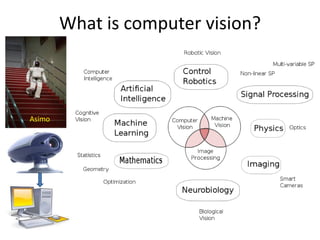

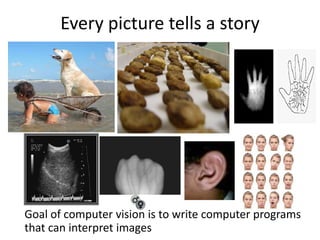

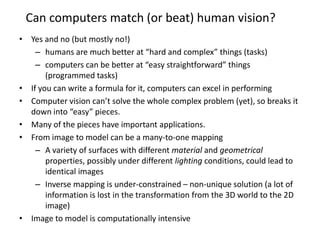

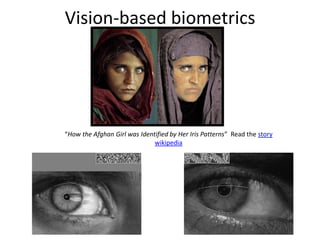

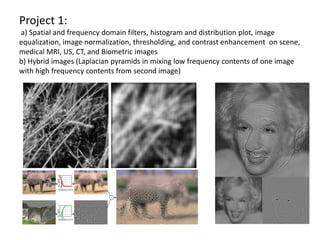

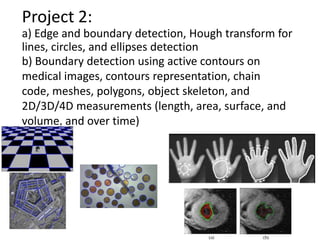

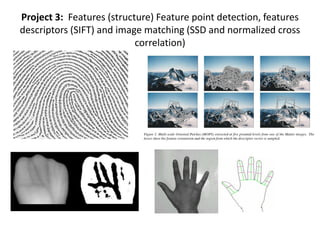

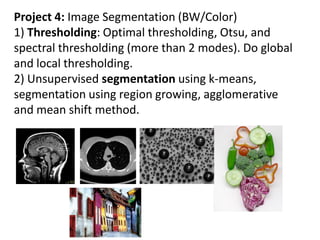

This document outlines a Computer Vision course taught by Professor Ahmed Badawi. It covers the course requirements, prerequisites, textbooks, communication methods, intended learning outcomes, evaluation criteria, and objectives. The course aims to teach the fundamental concepts and algorithms of computer vision and to apply them to real-life problems. Topics include image processing techniques, feature detection, segmentation, classification, and recognition. Students complete assignments applying these techniques and a final project involving face detection or recognition. Evaluation combines exams, assignments, projects, and attendance.