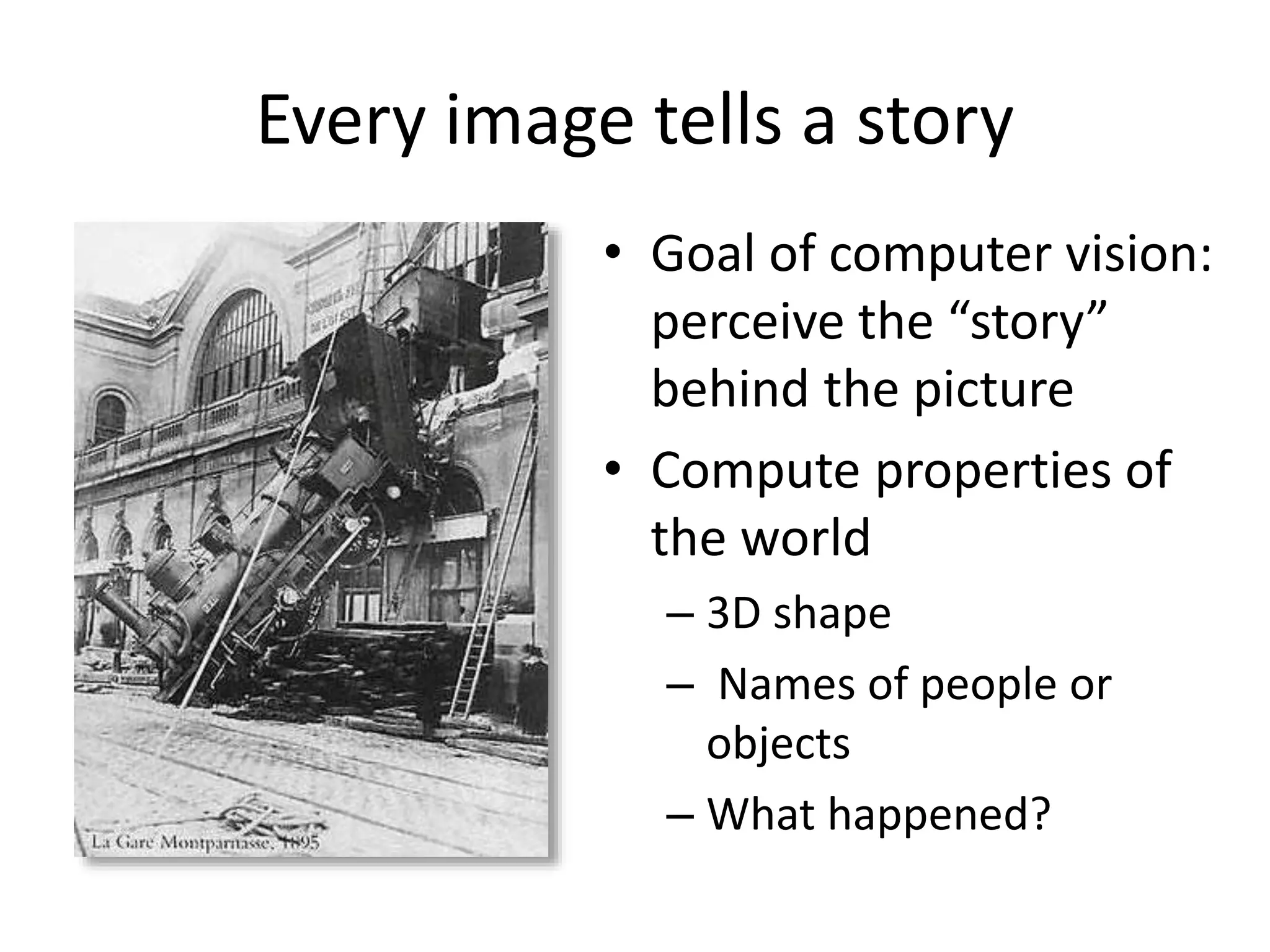

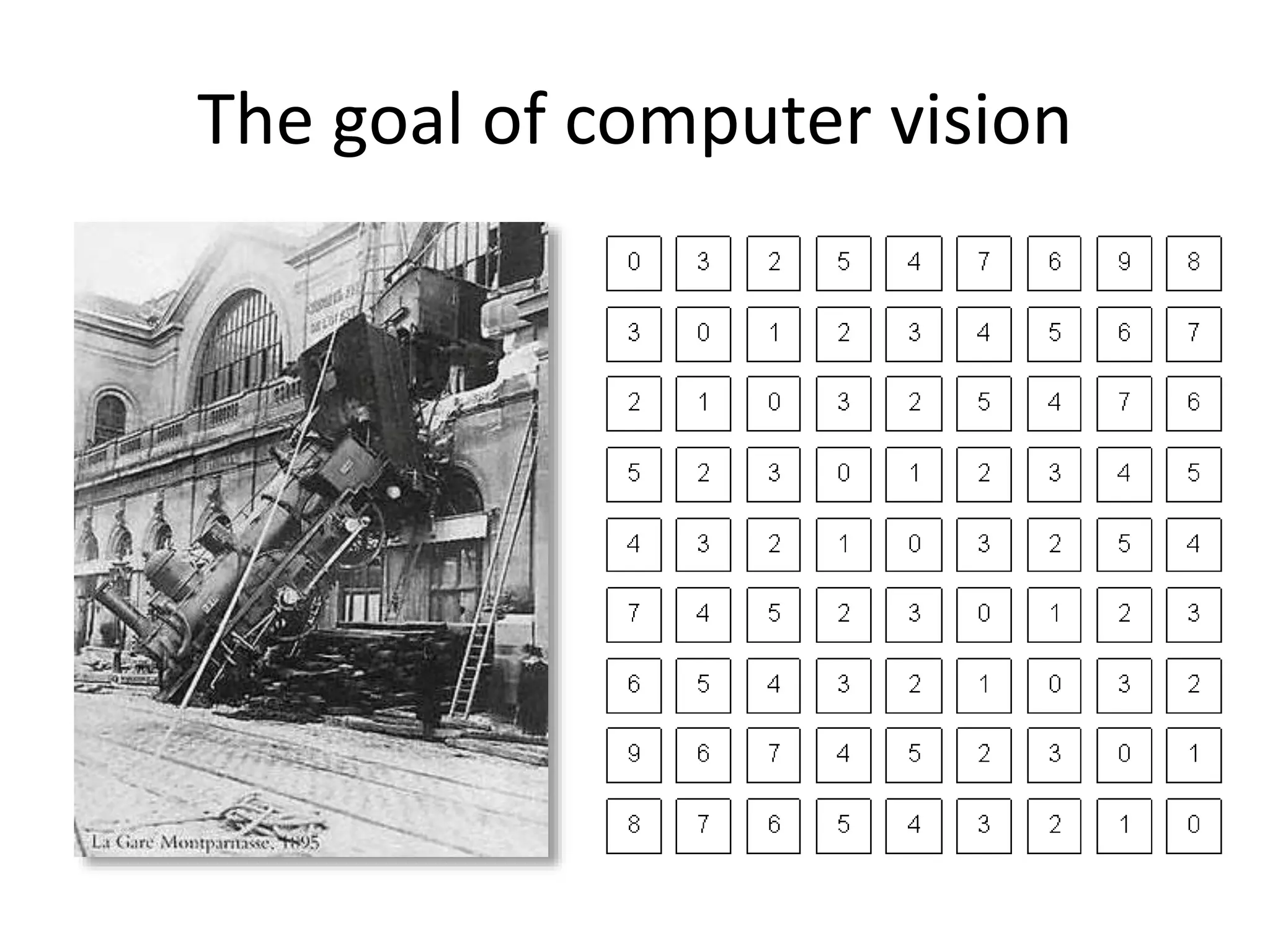

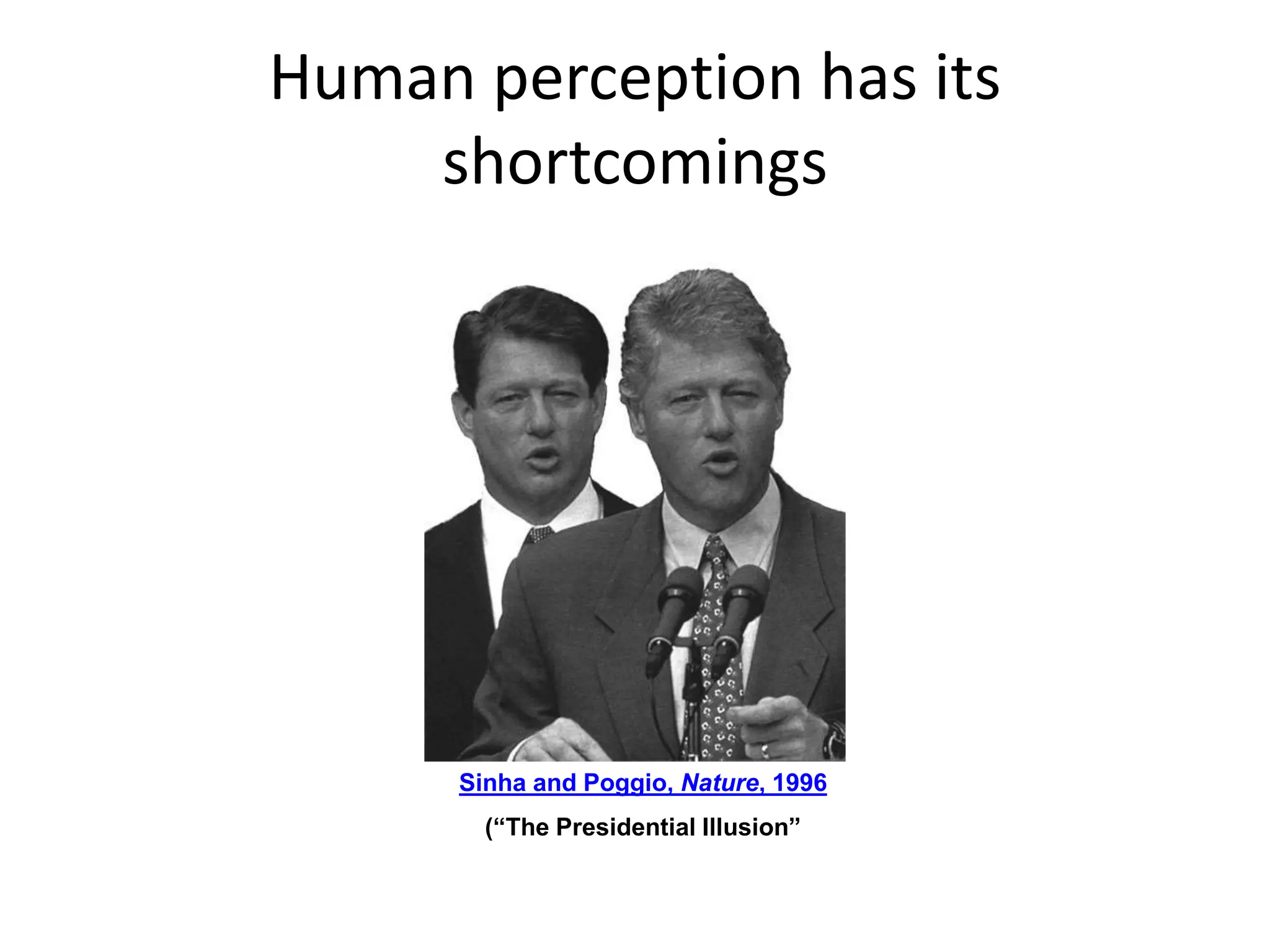

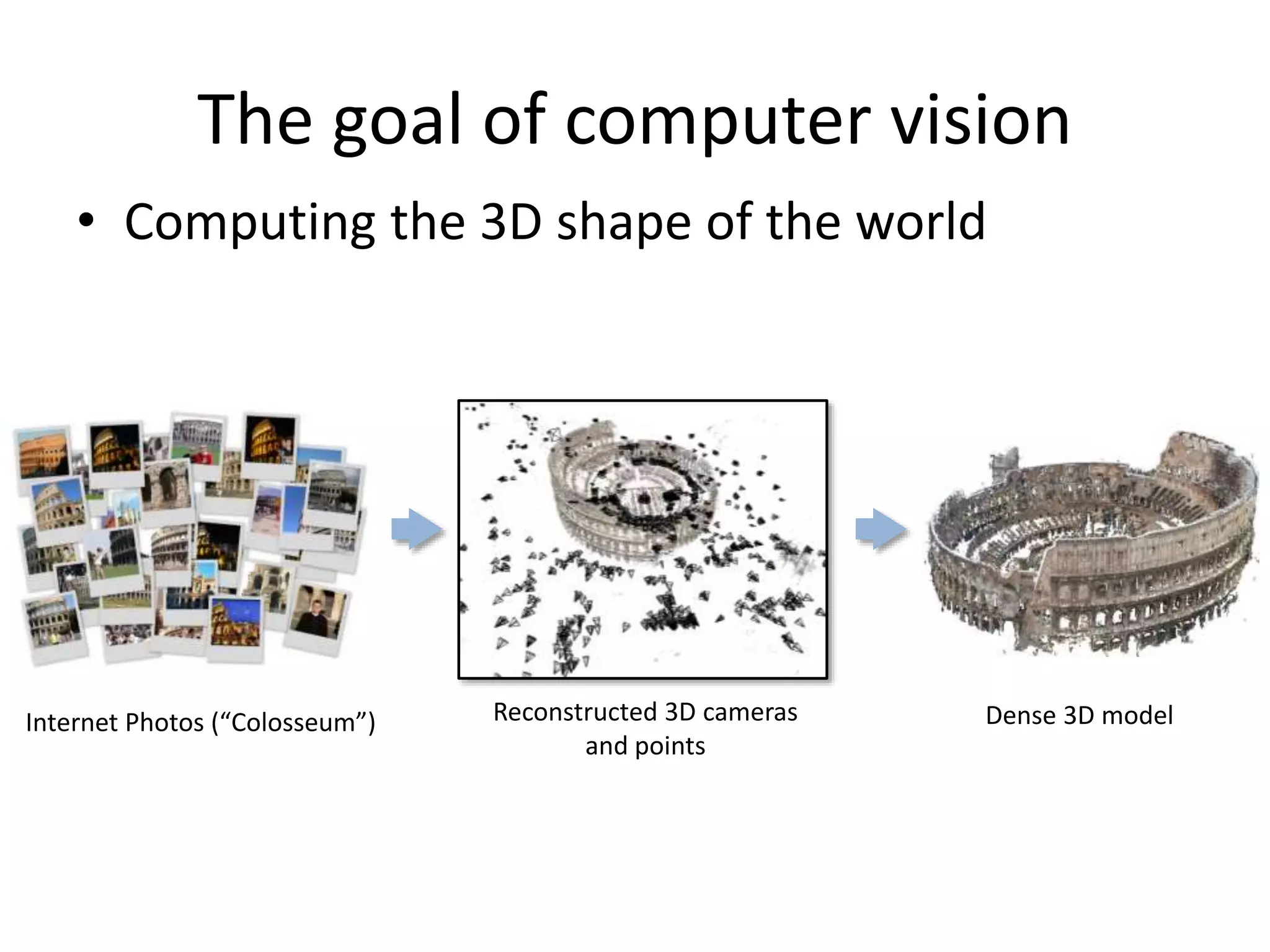

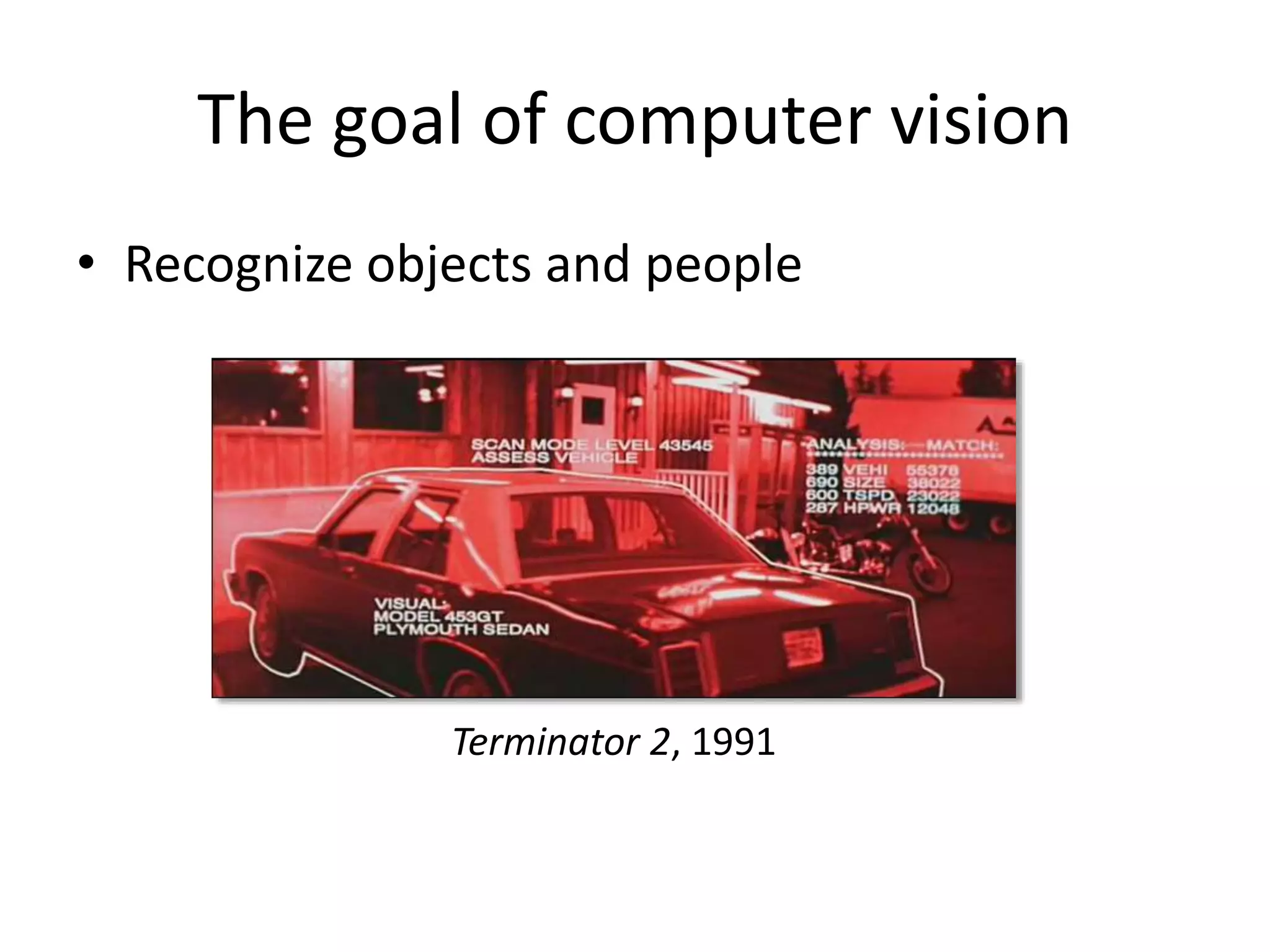

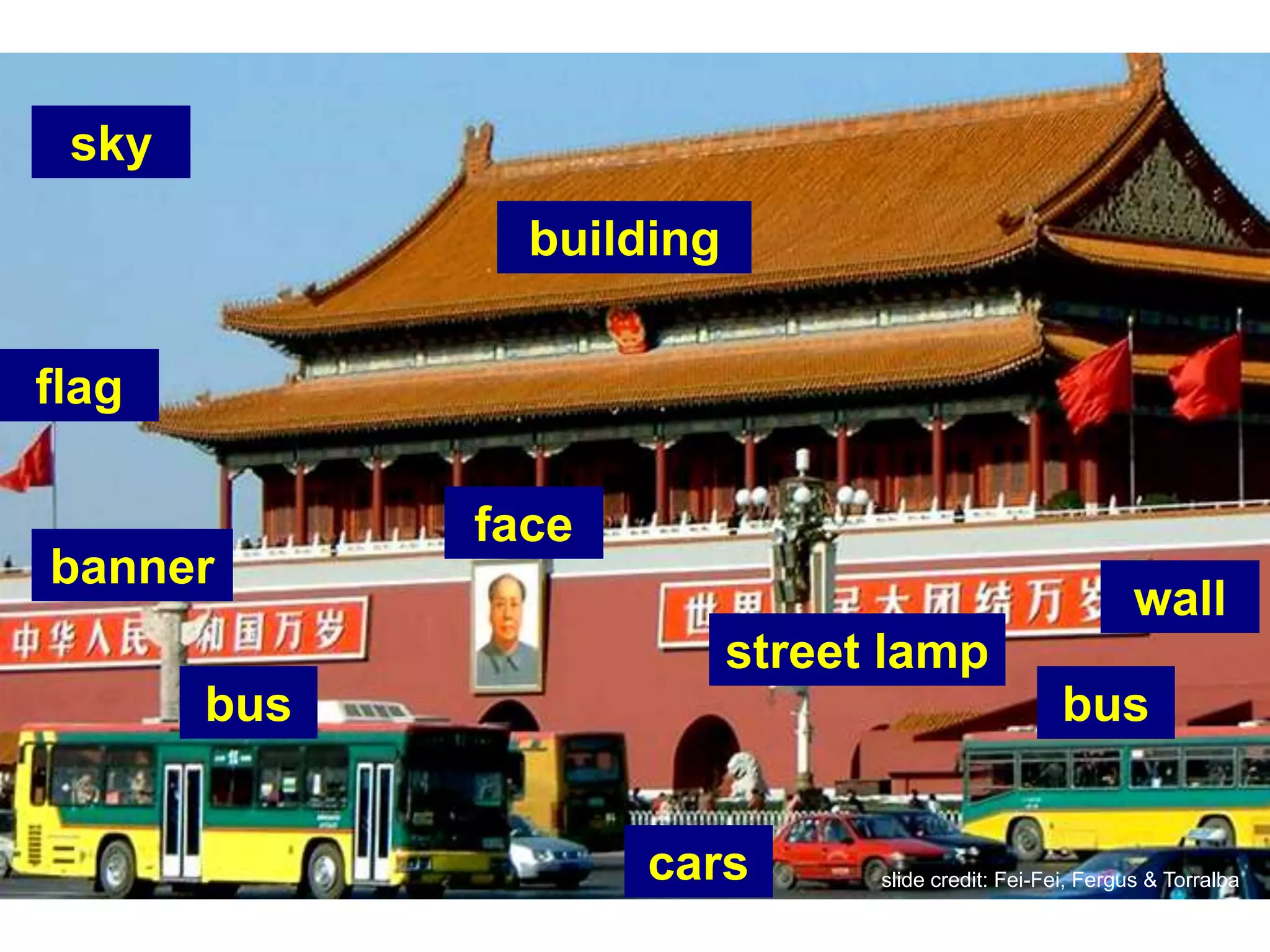

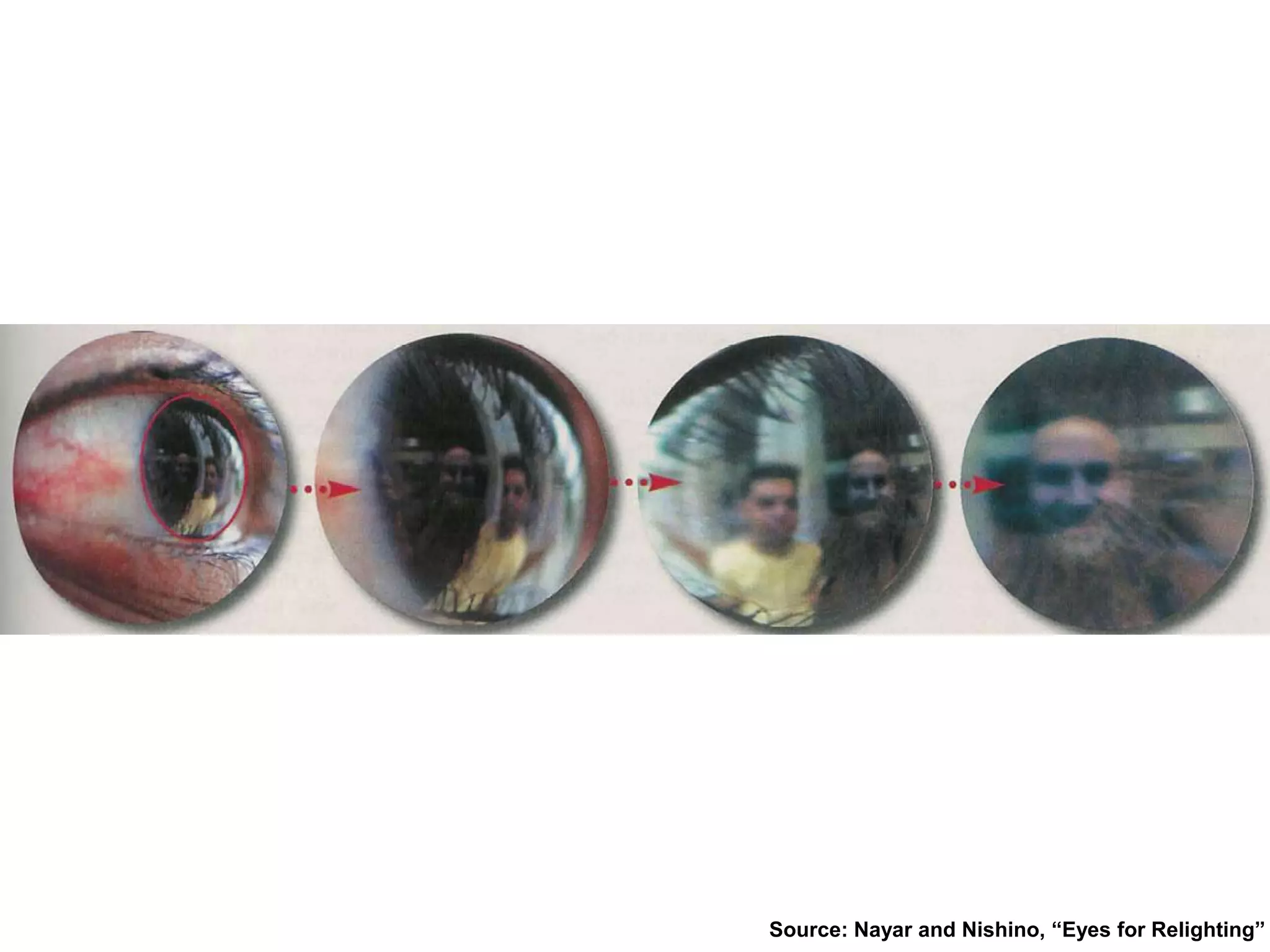

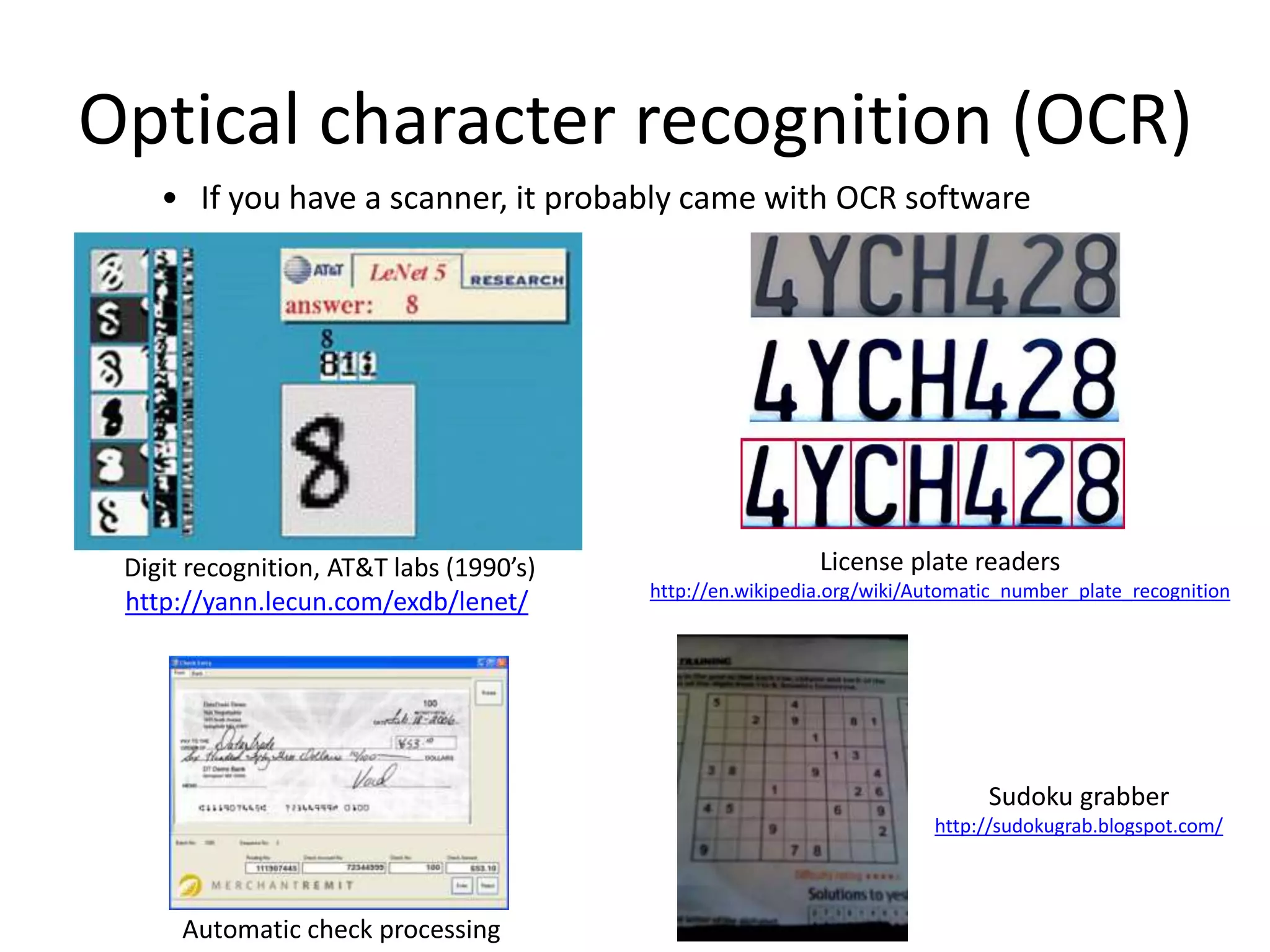

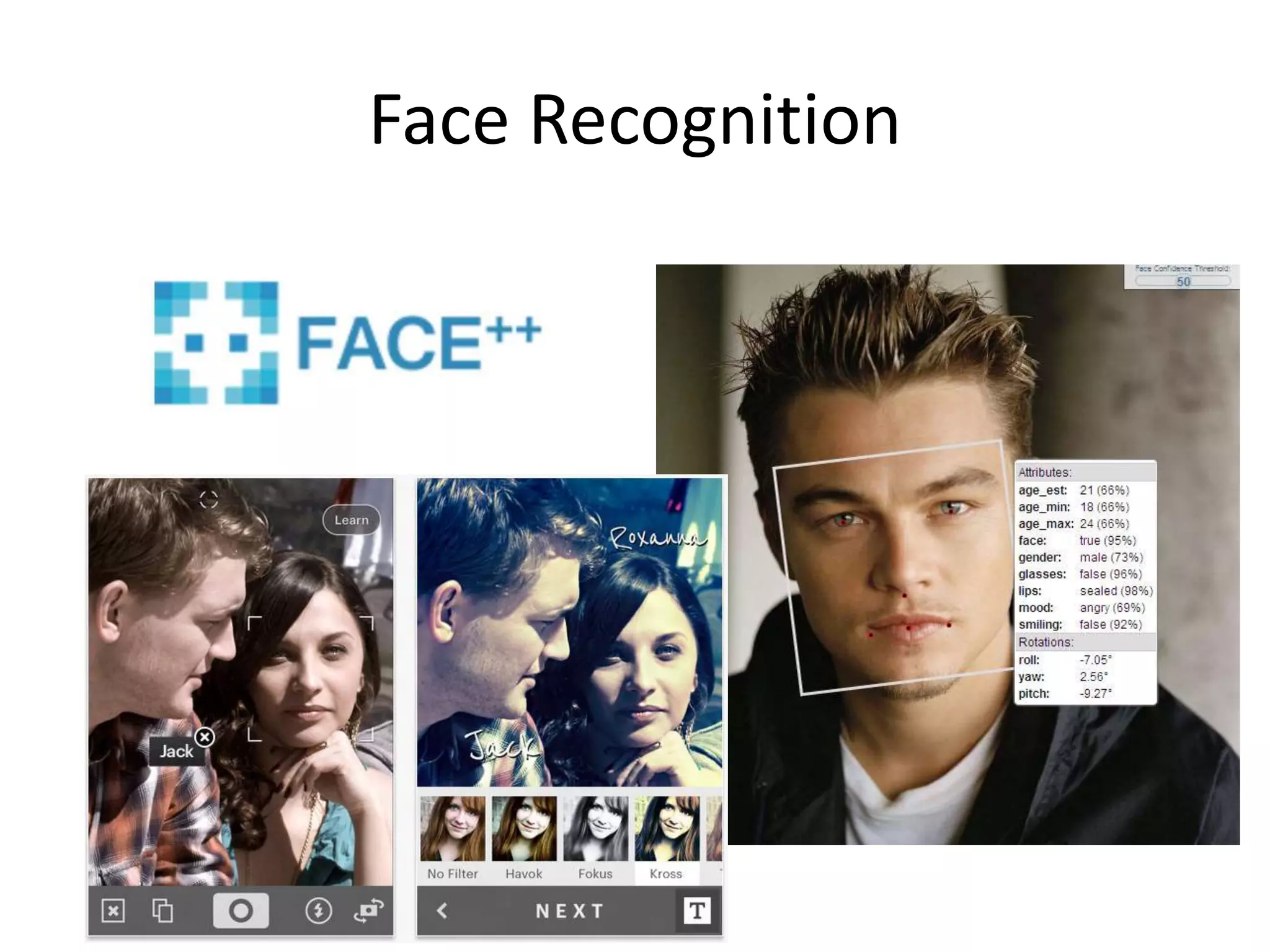

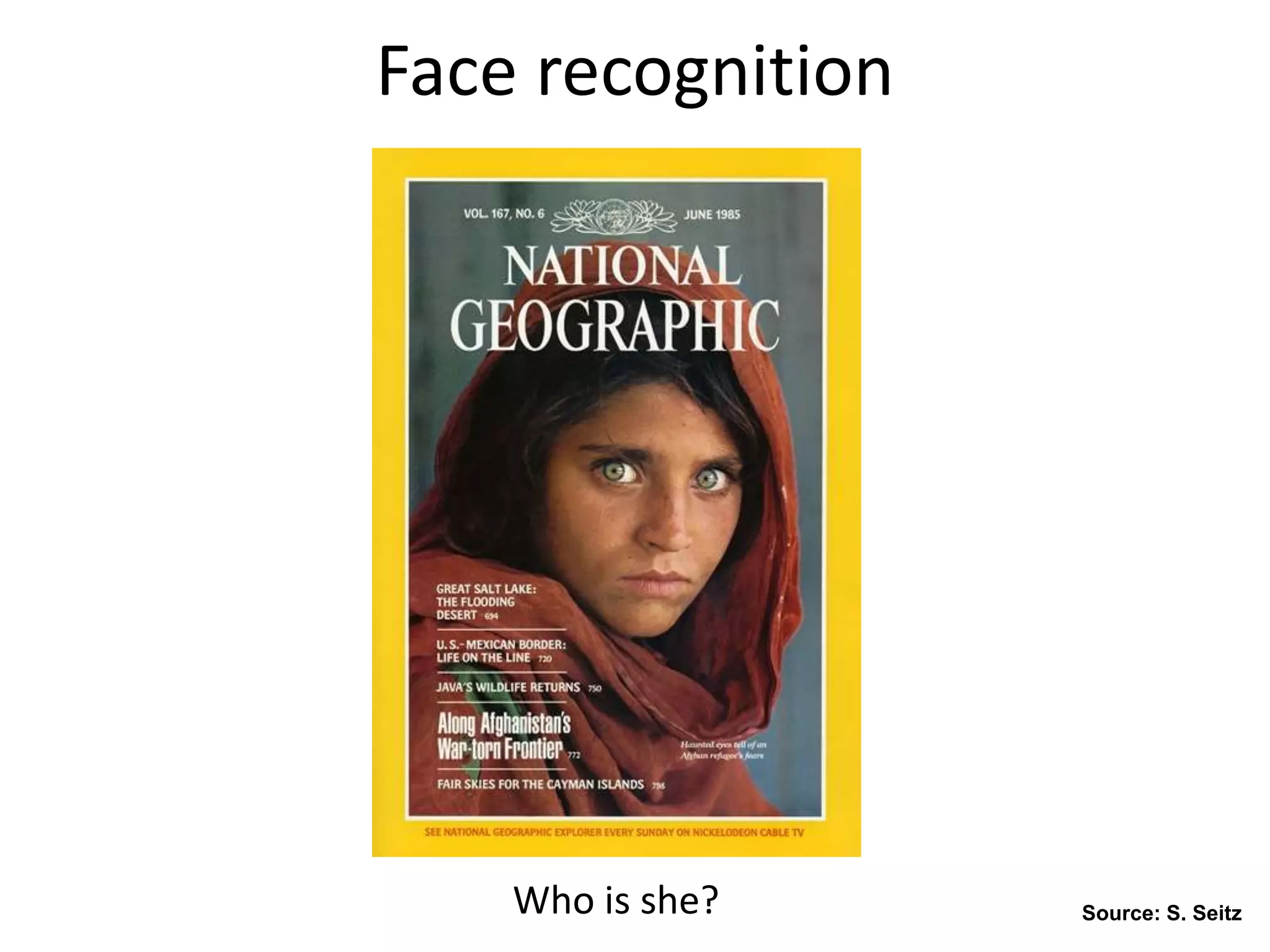

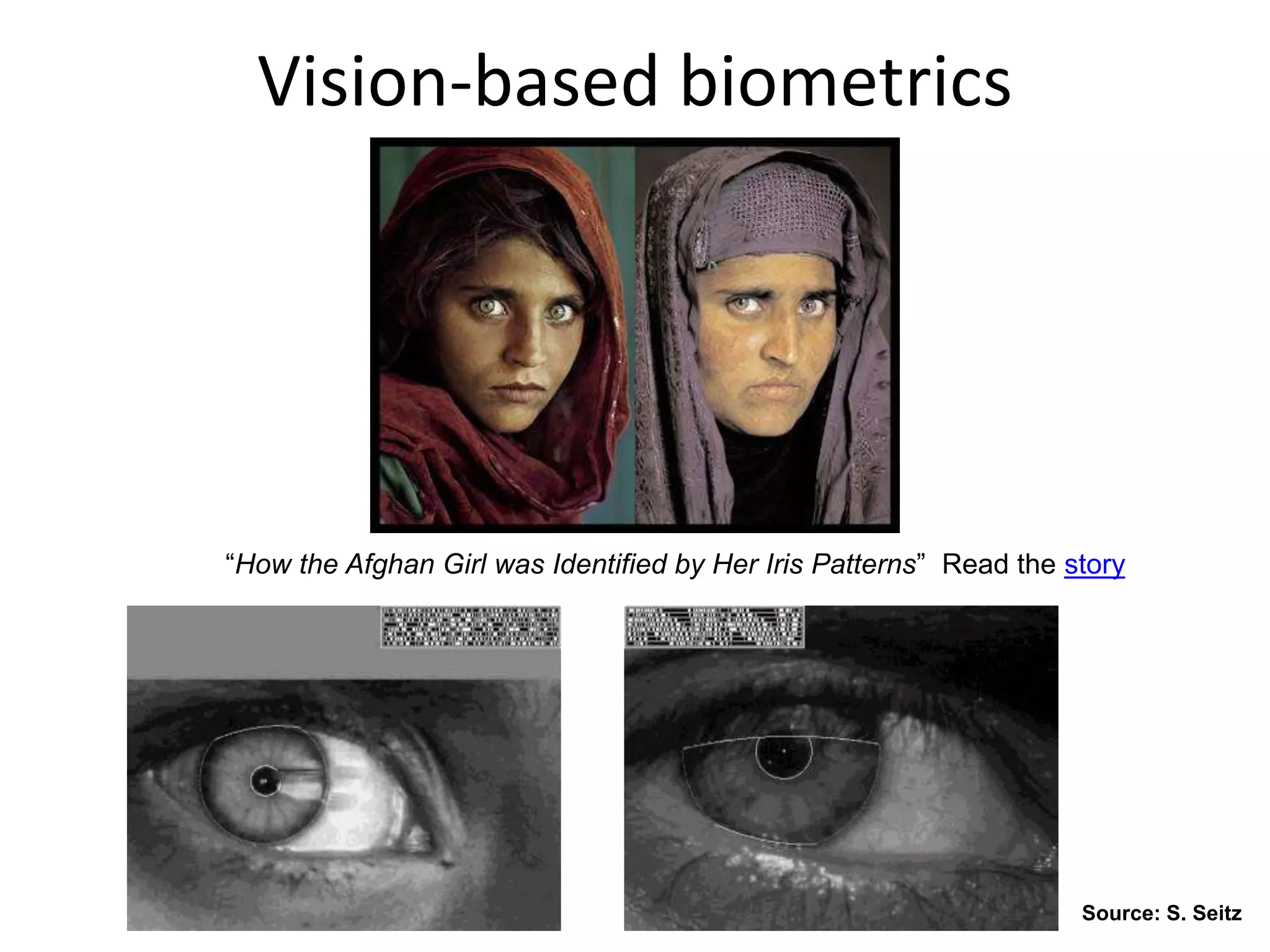

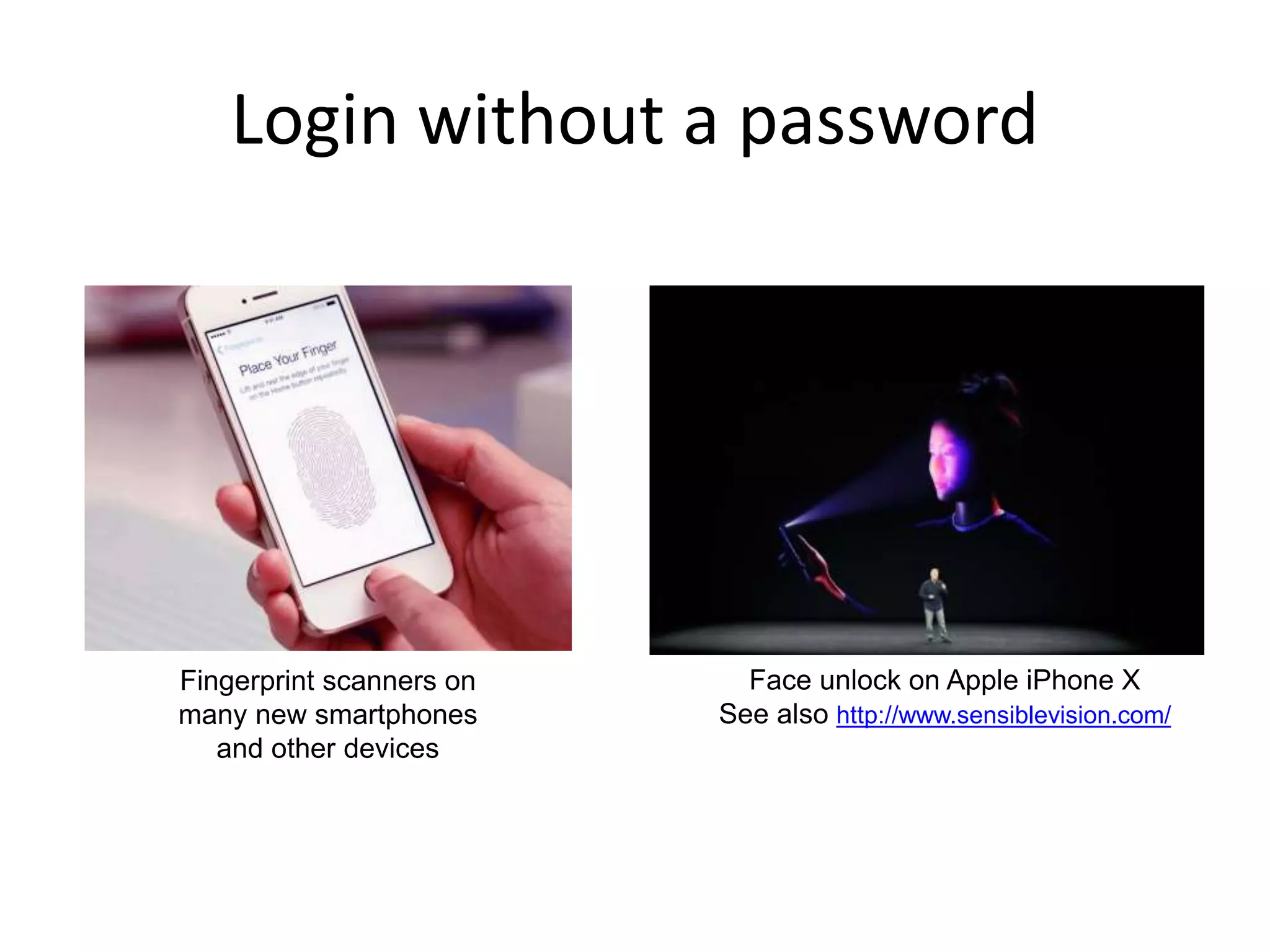

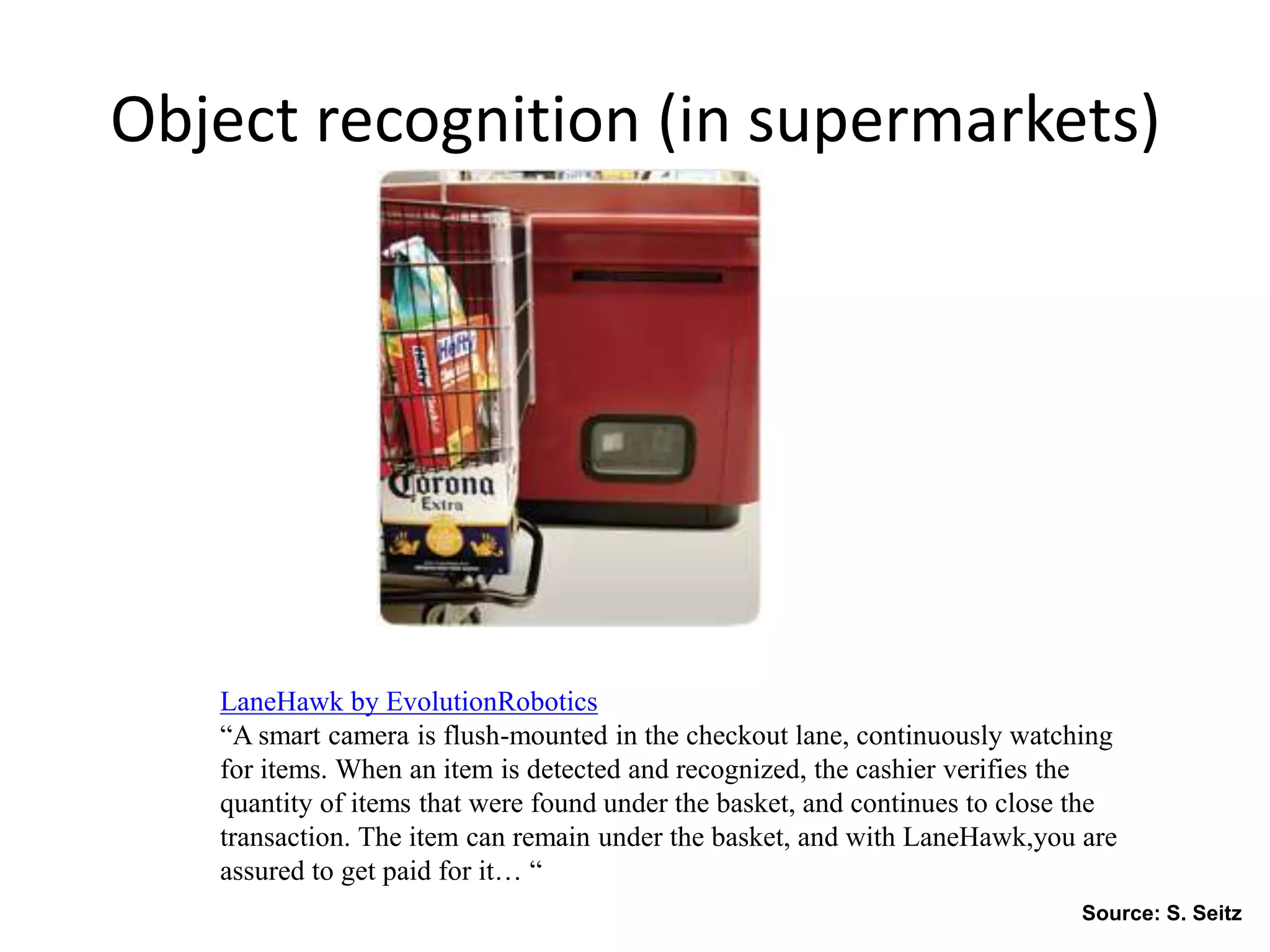

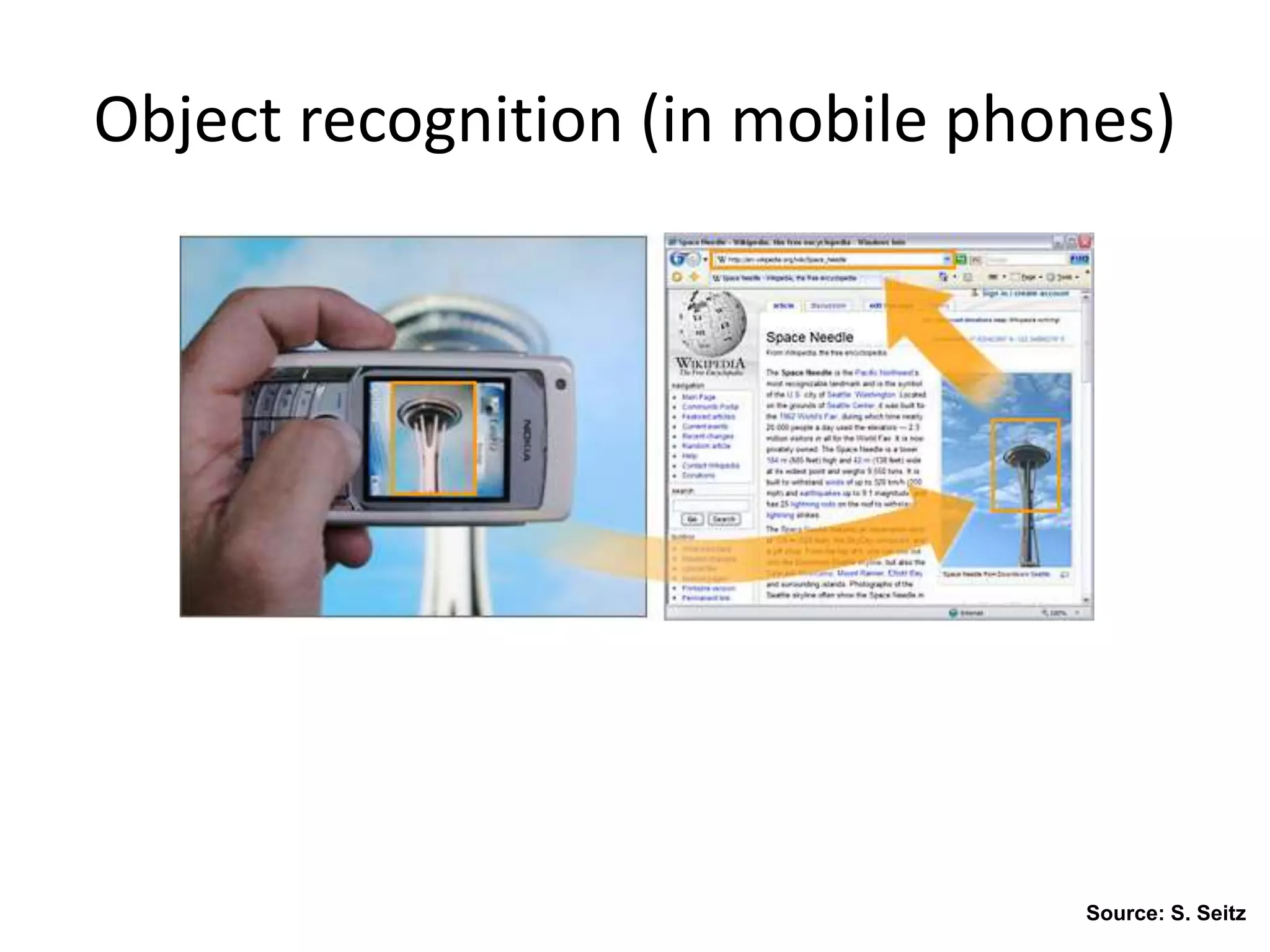

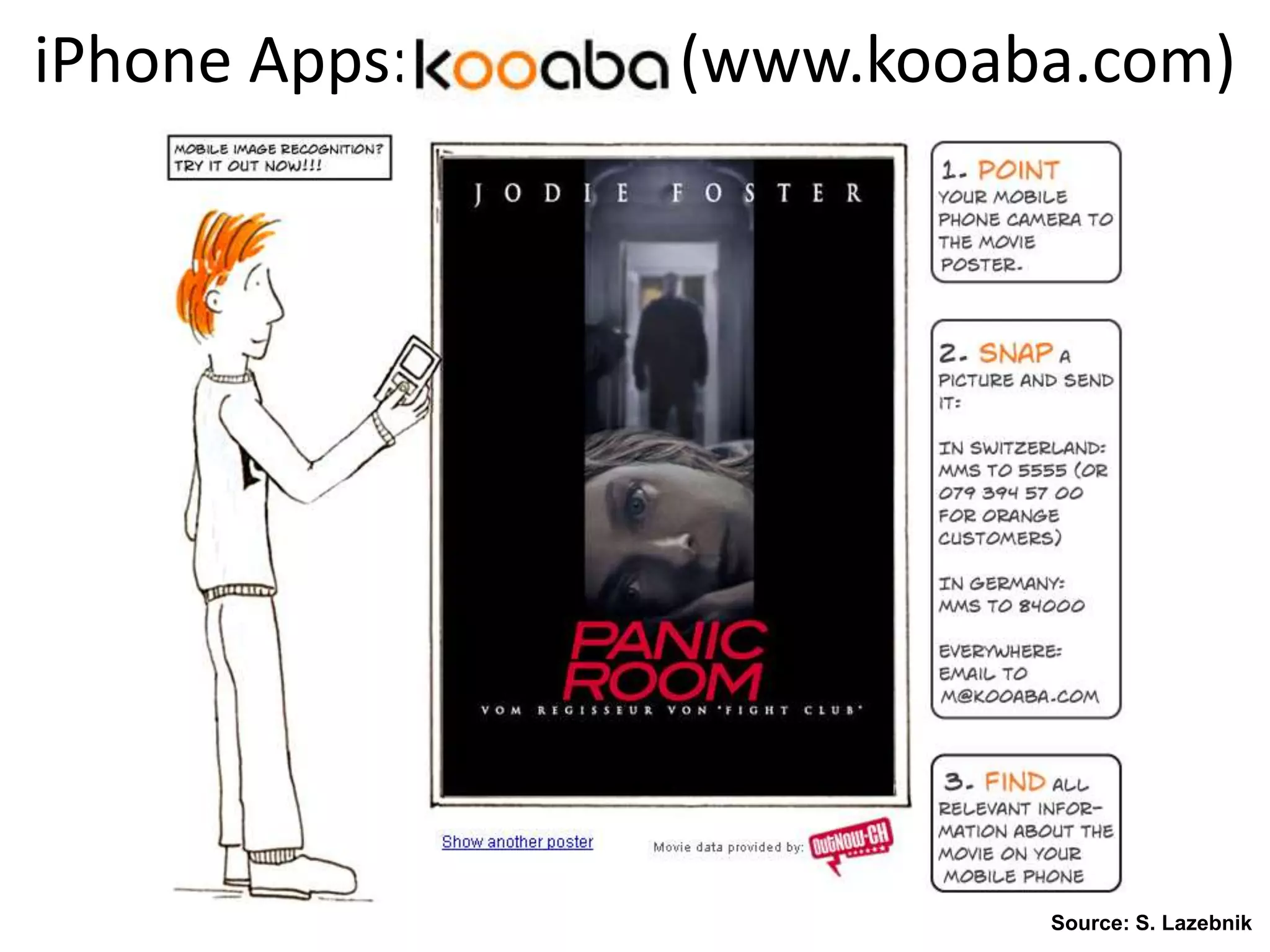

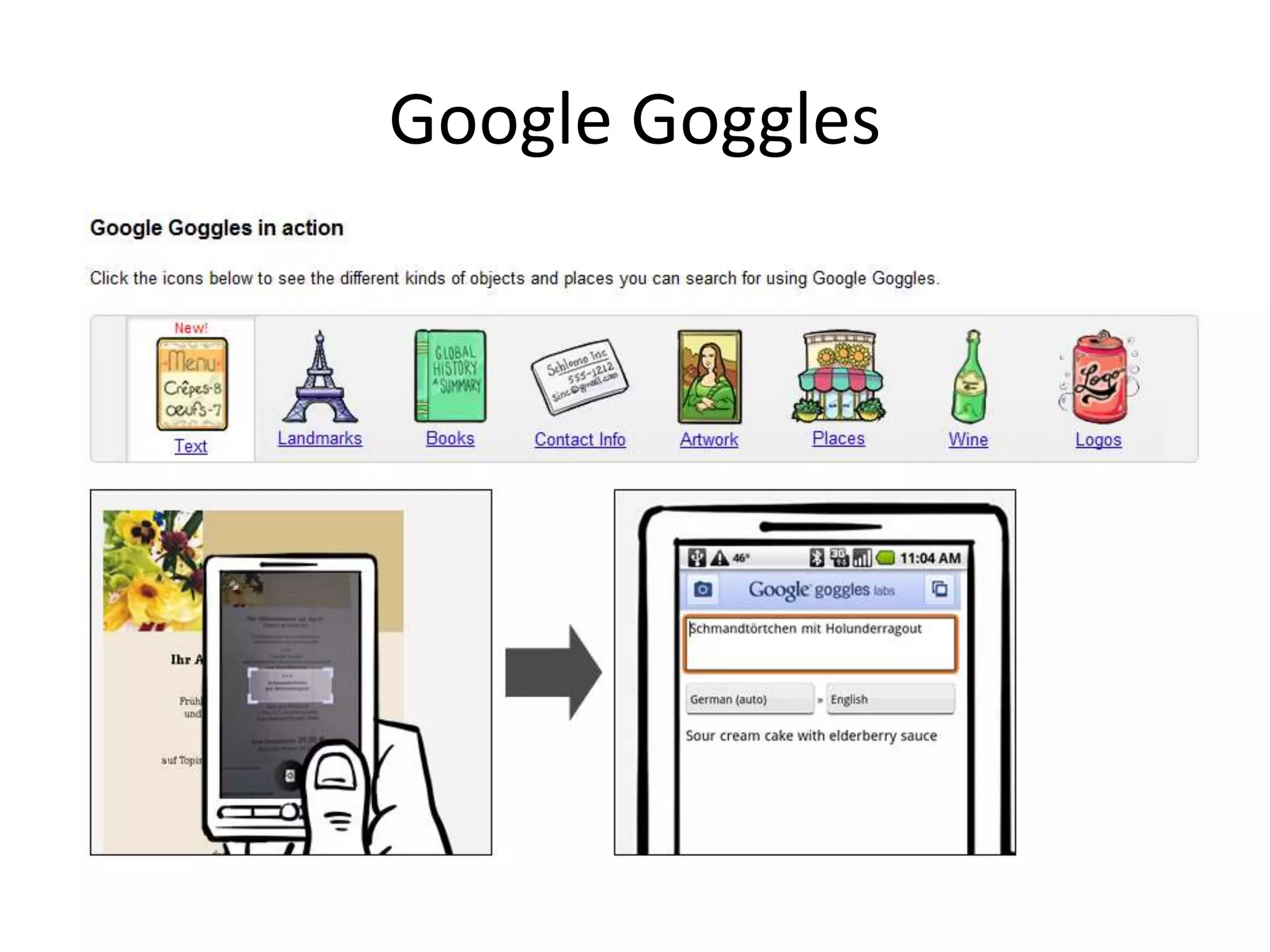

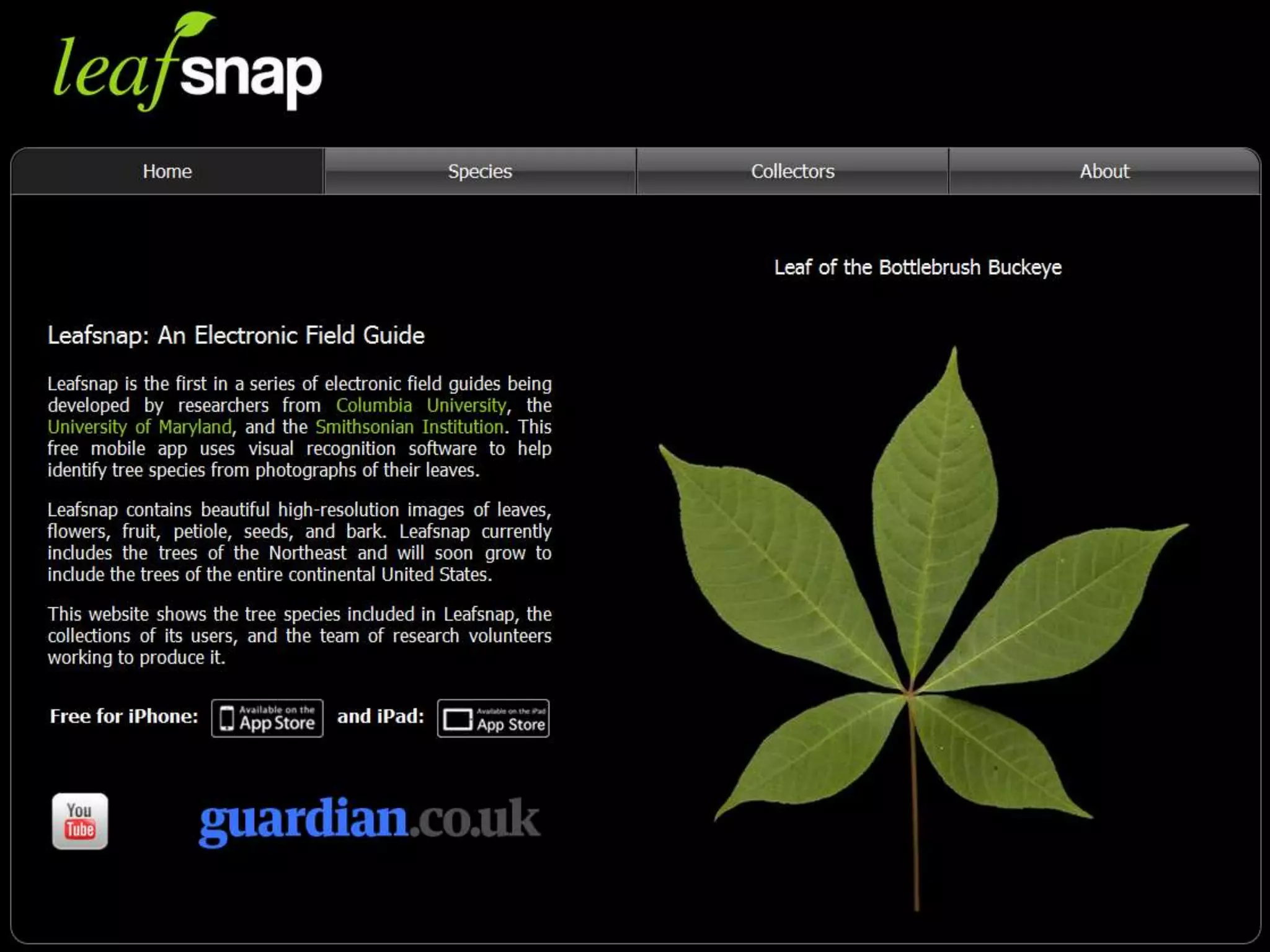

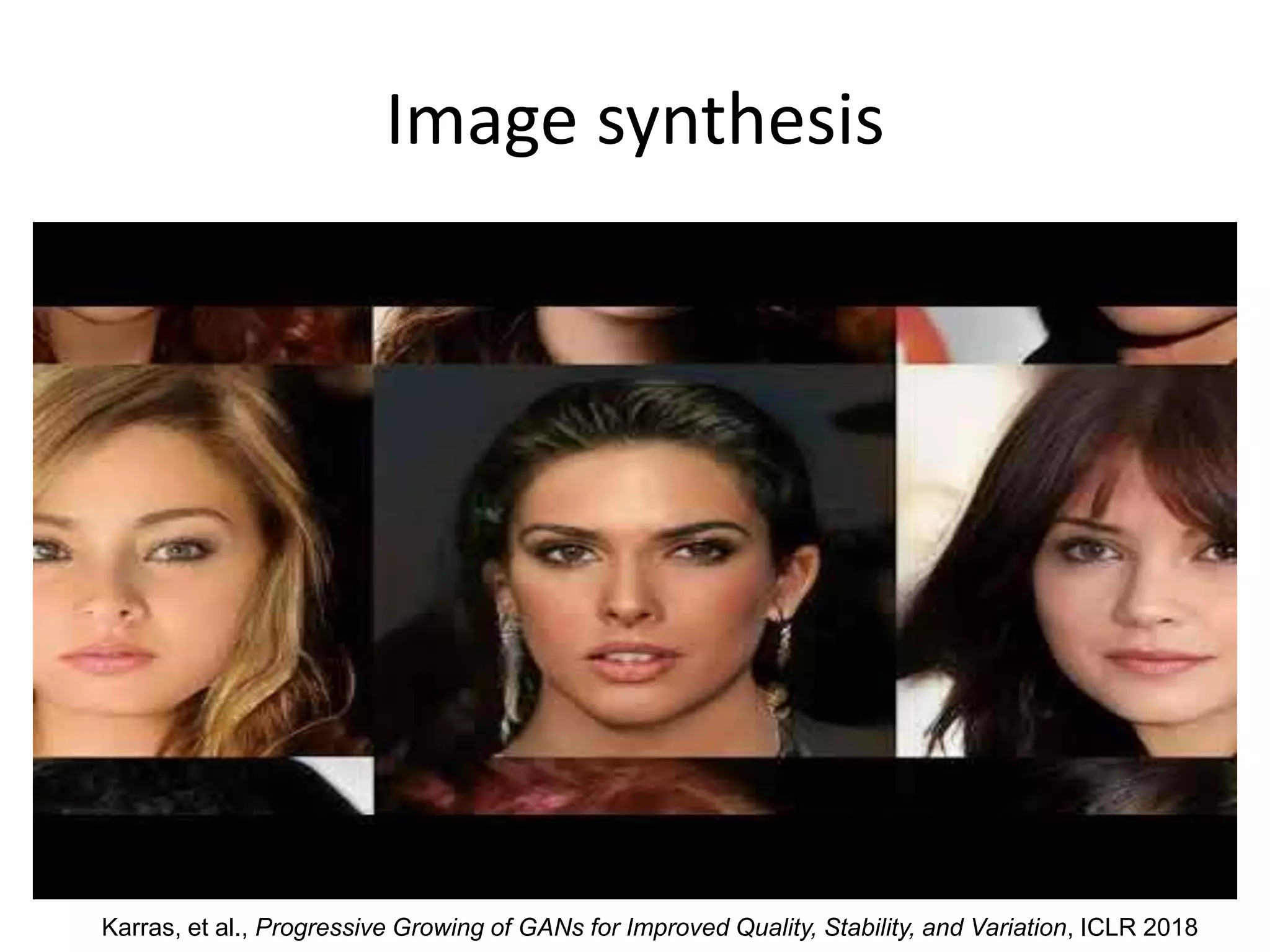

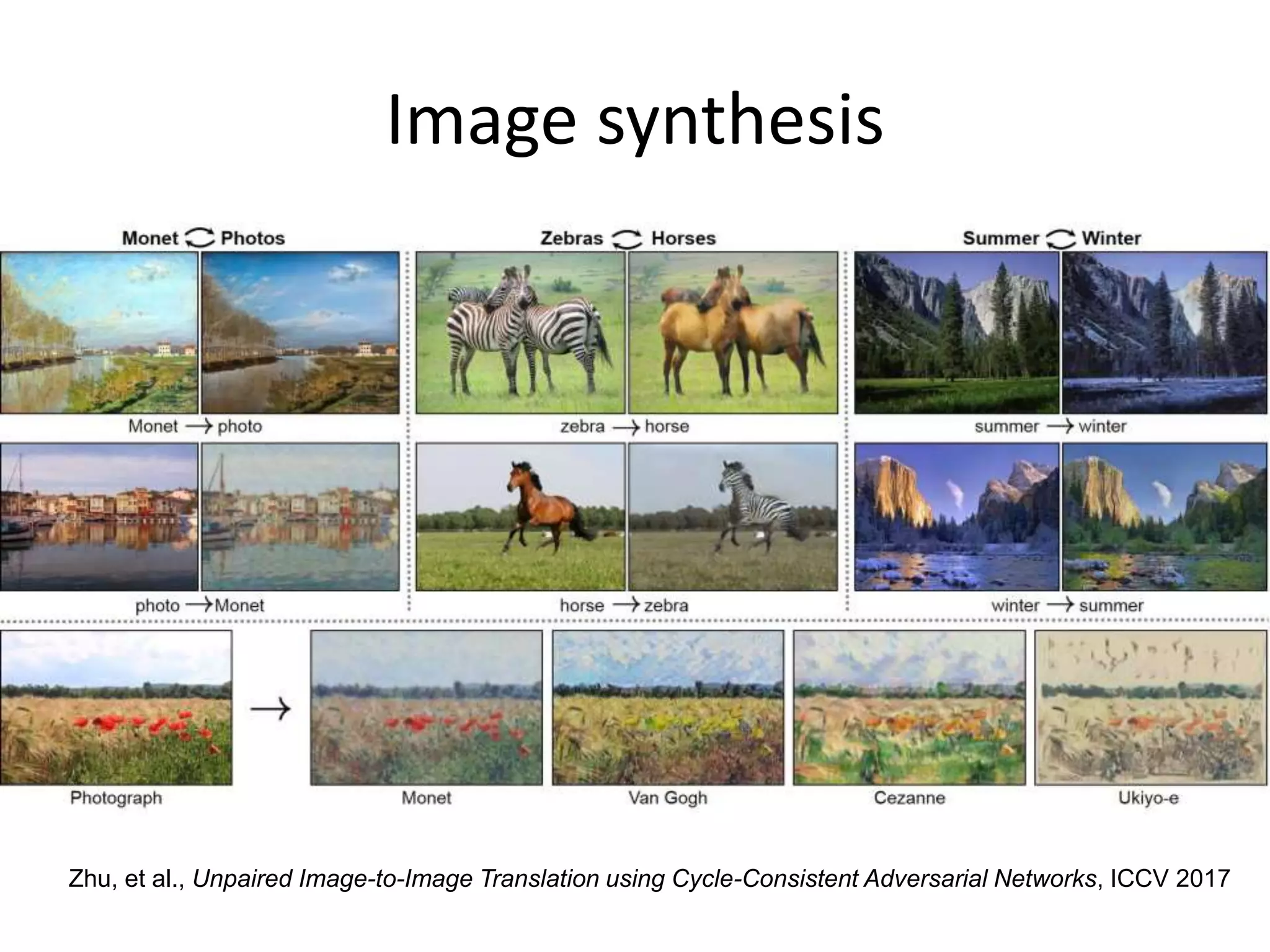

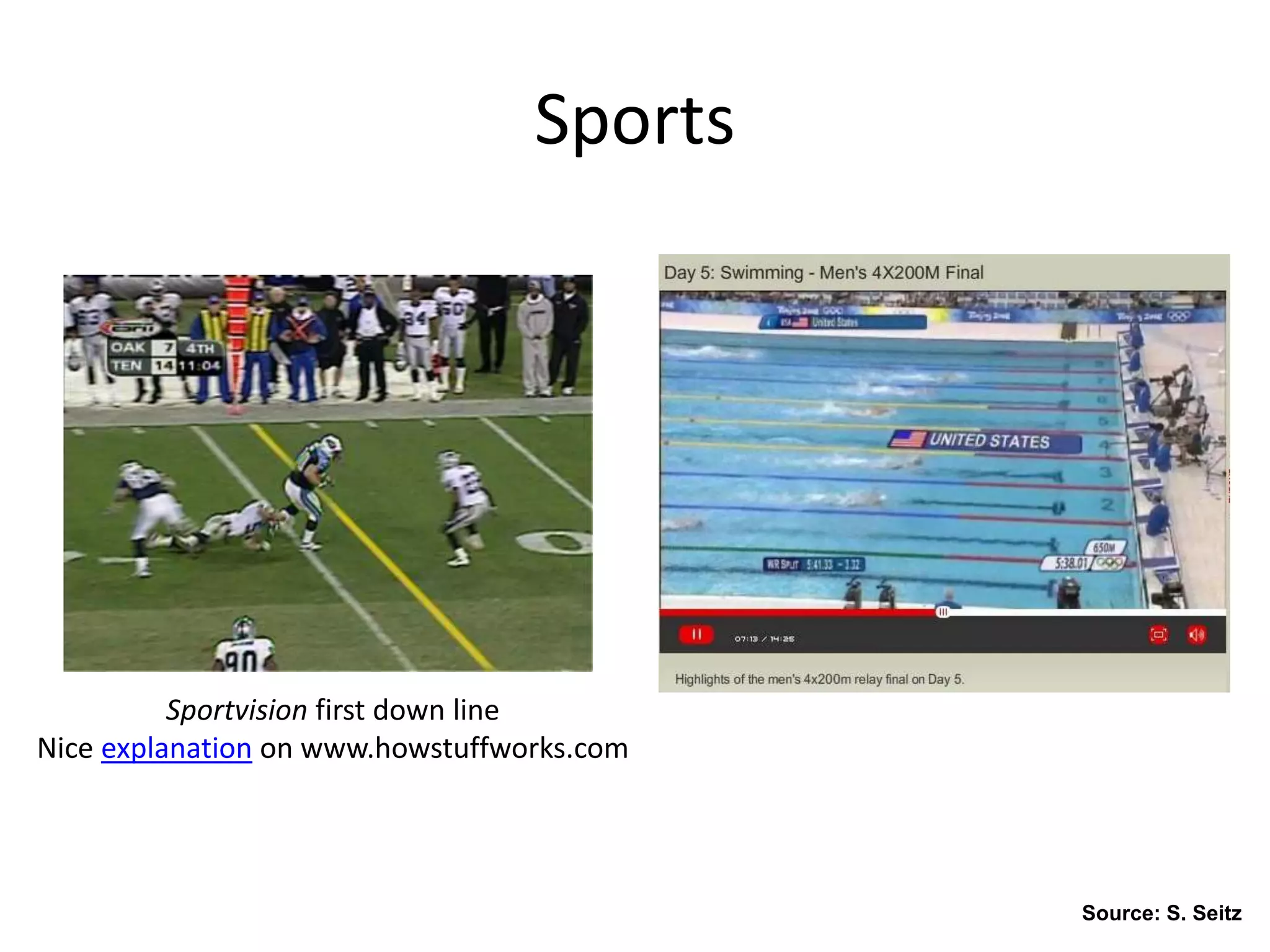

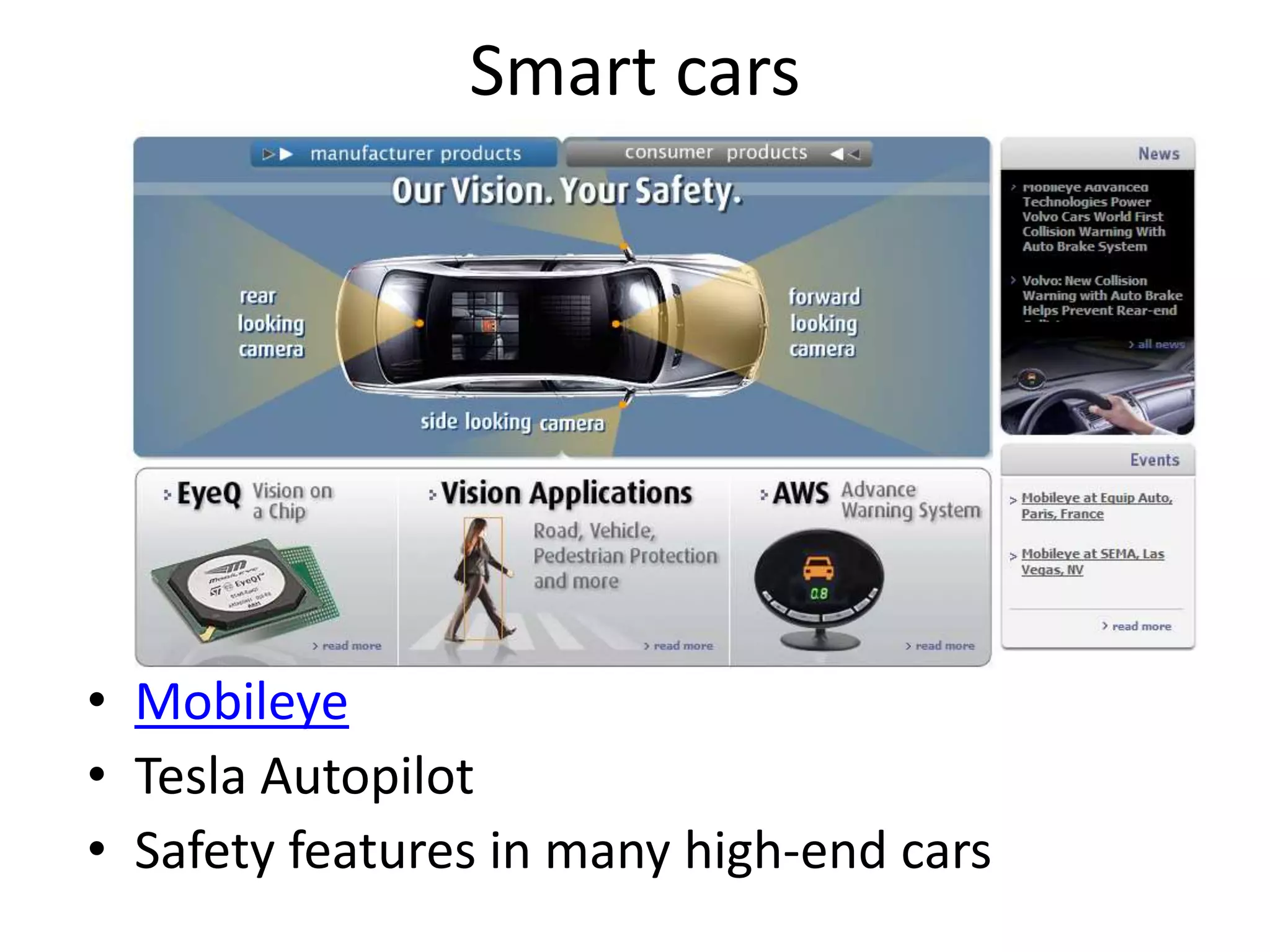

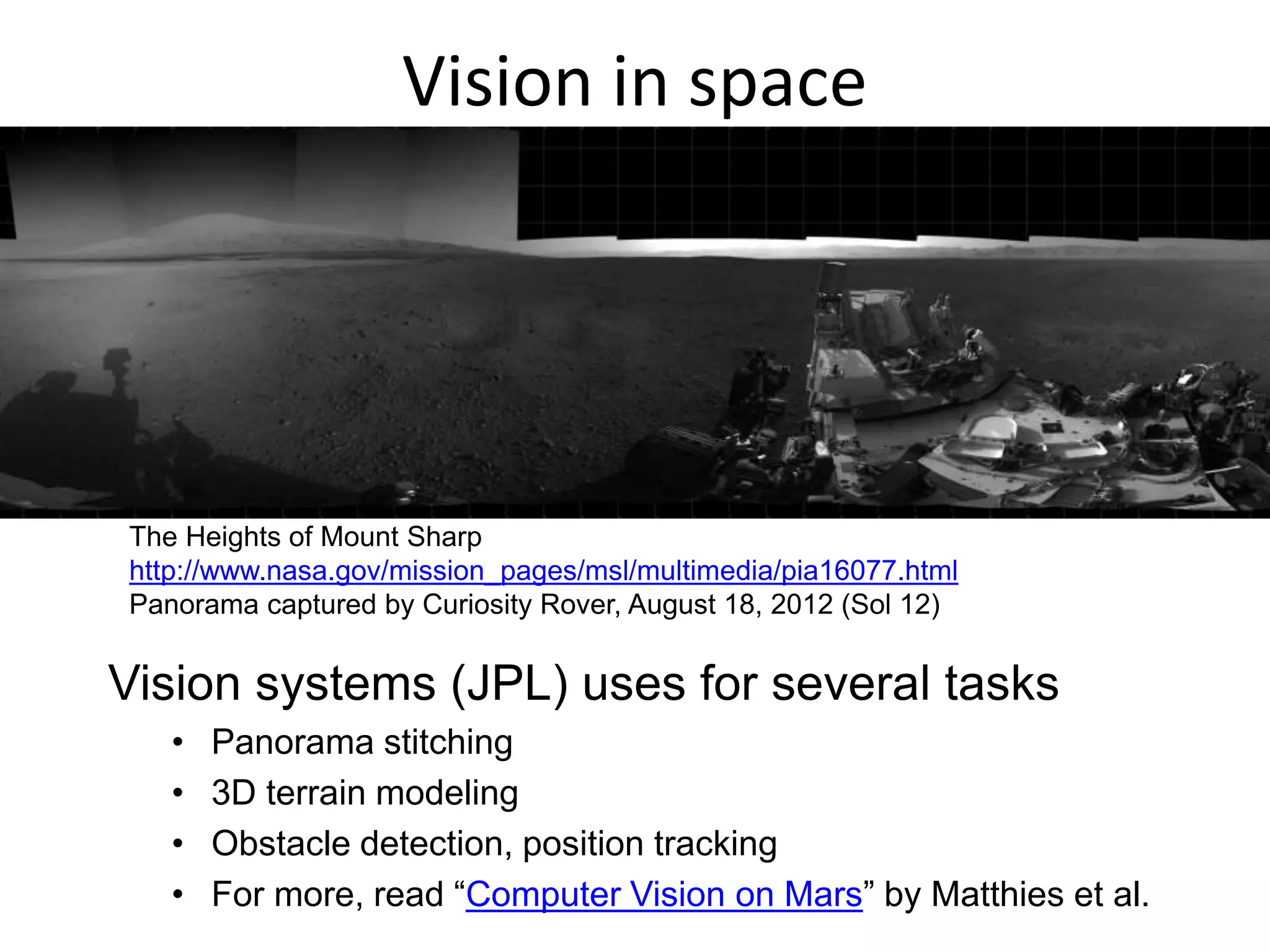

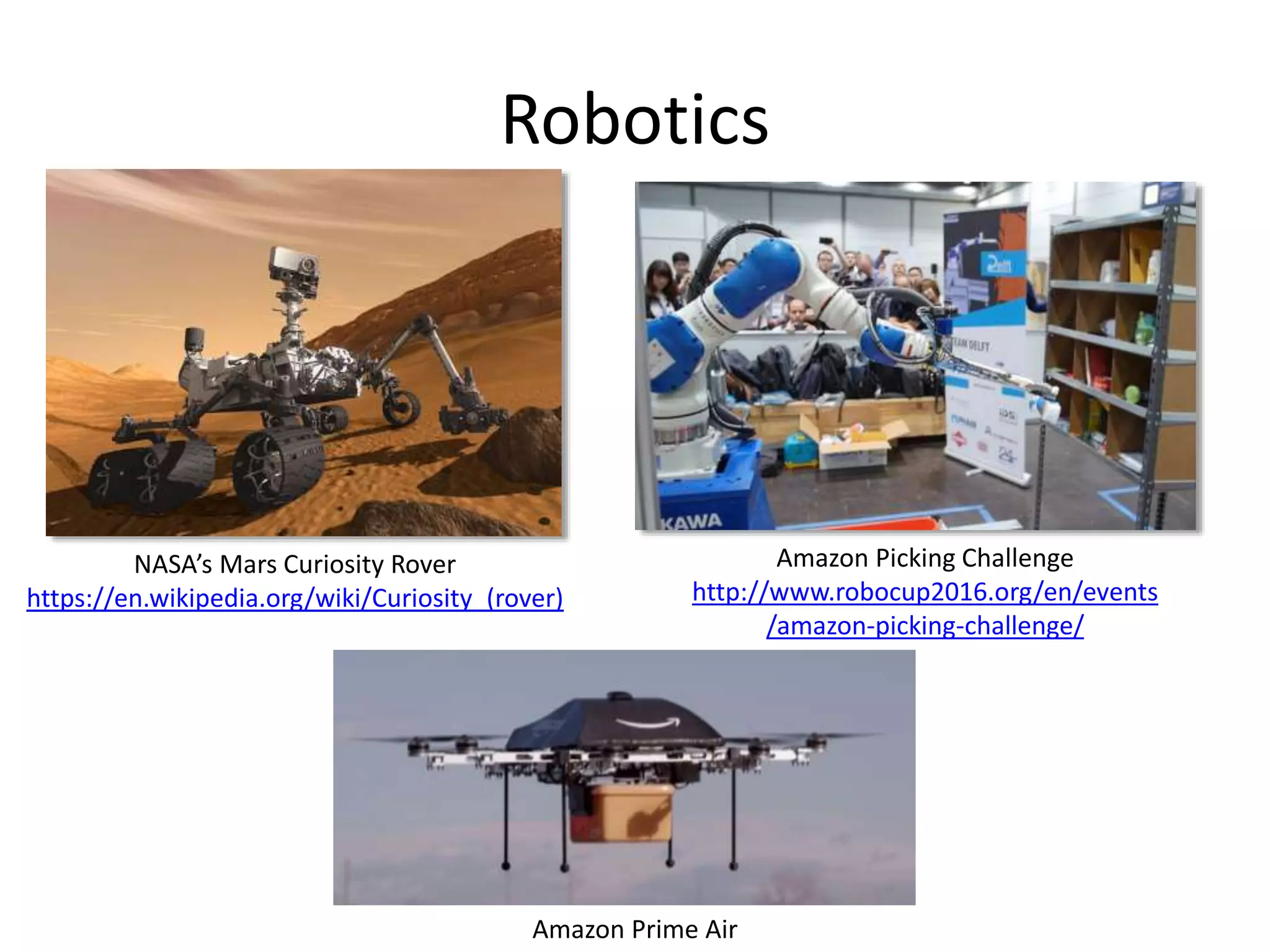

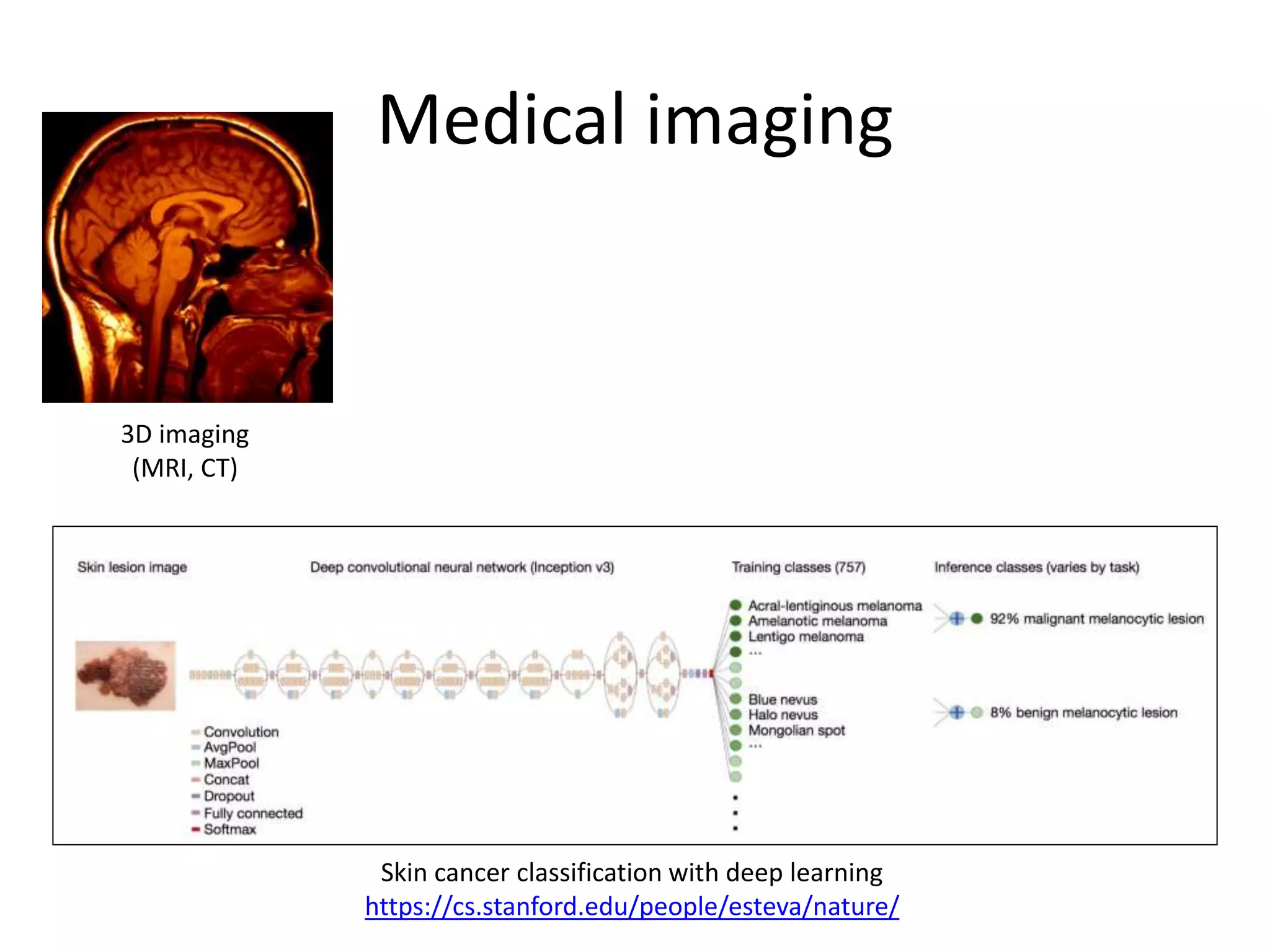

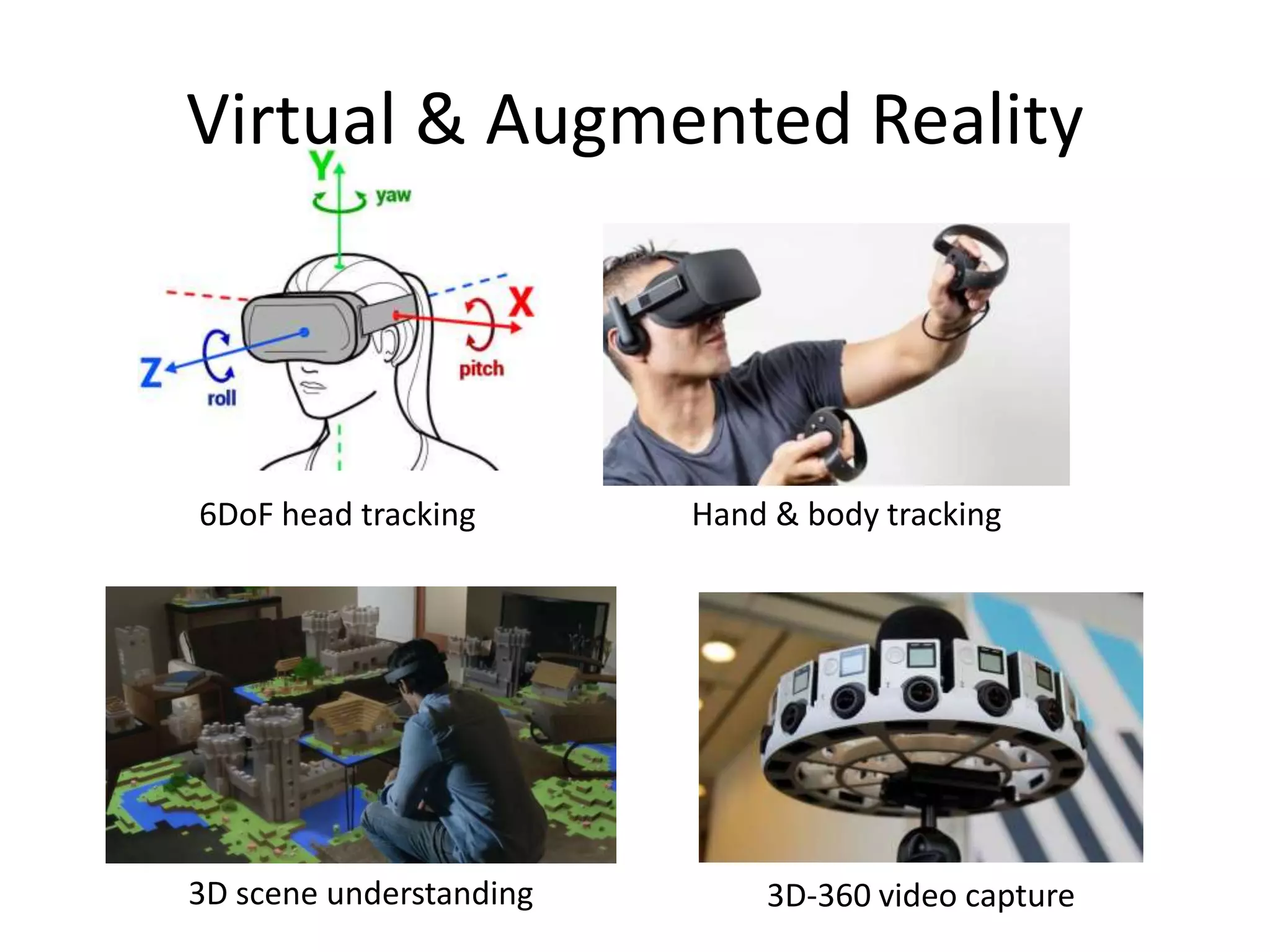

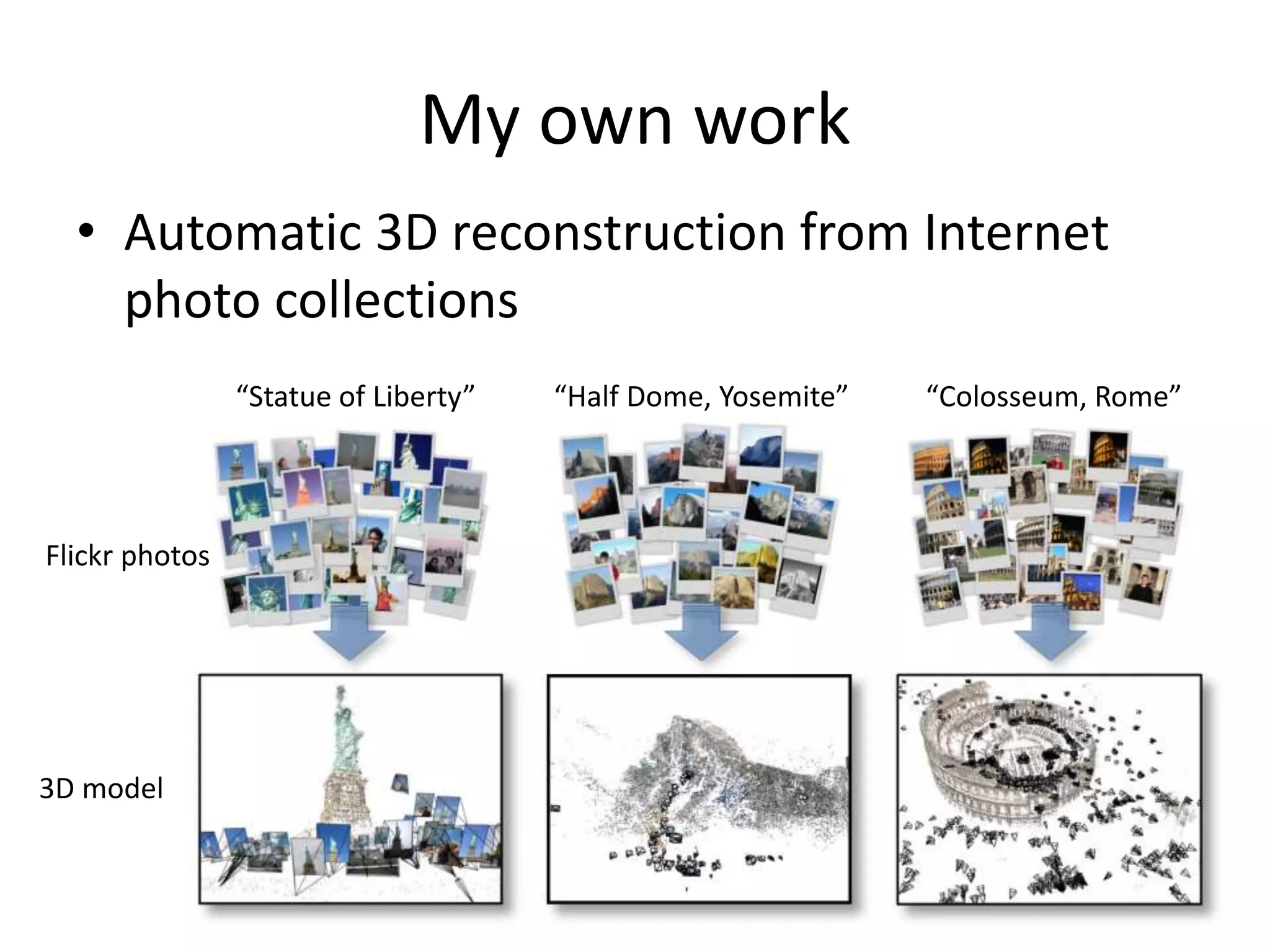

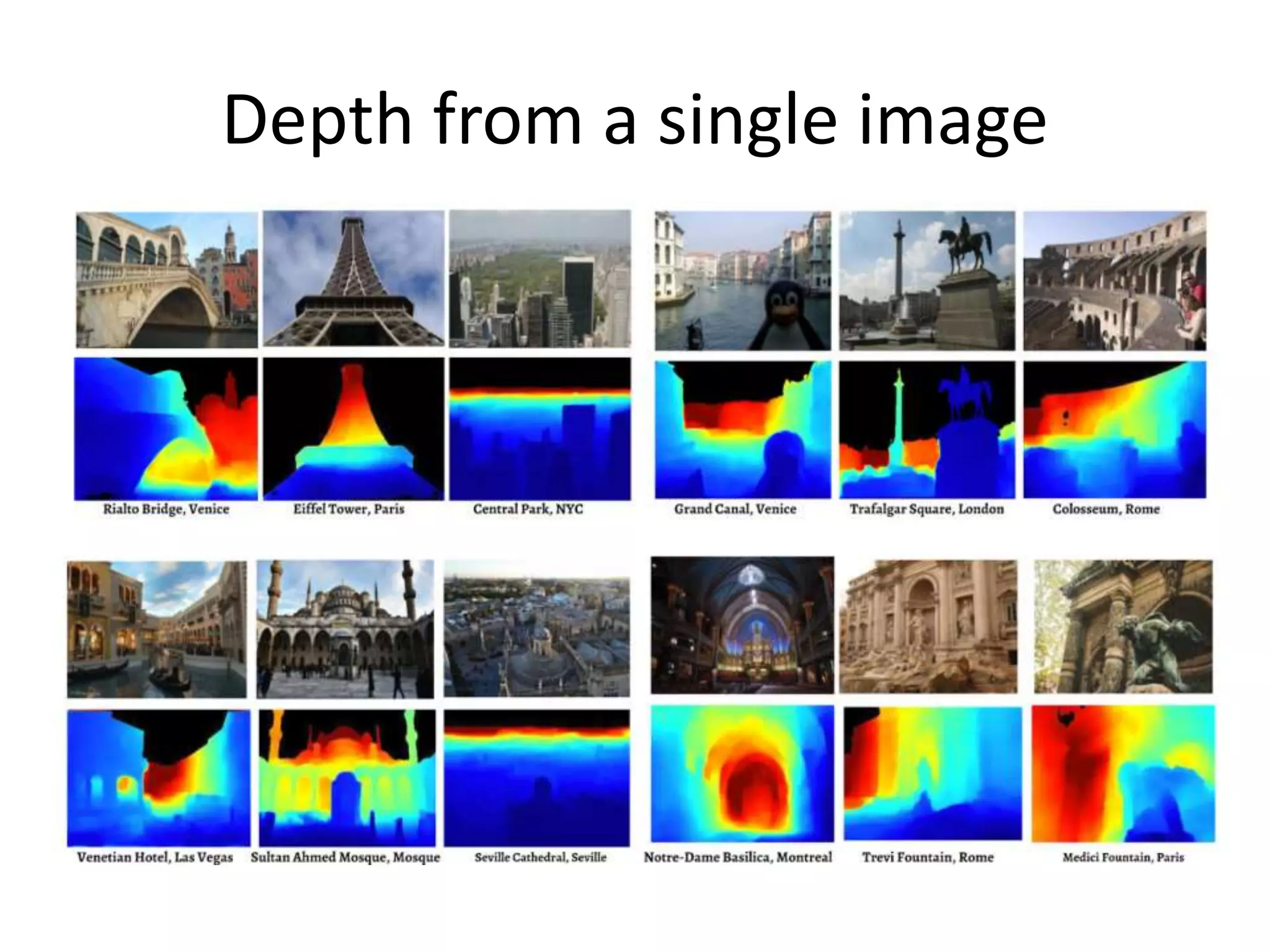

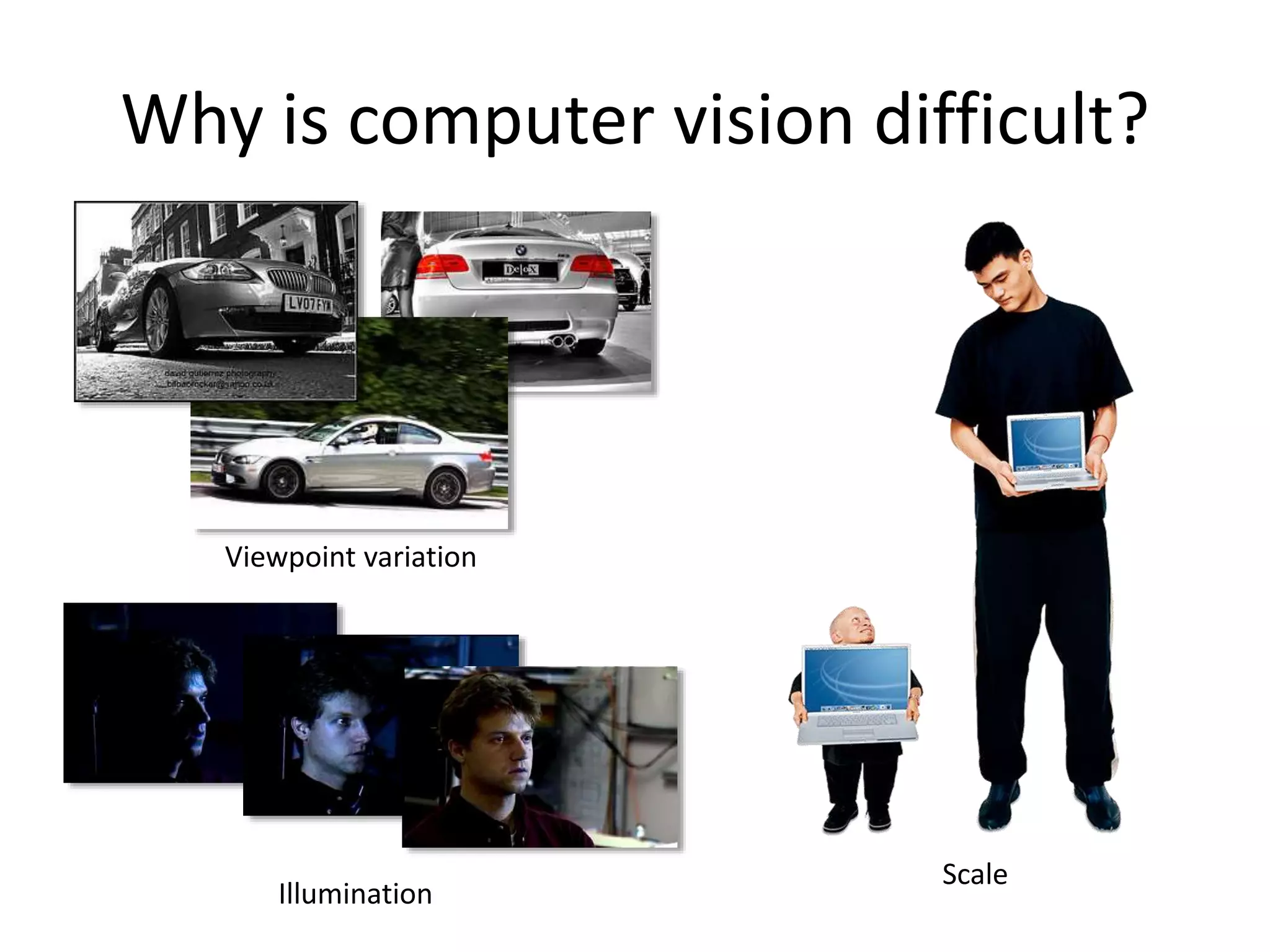

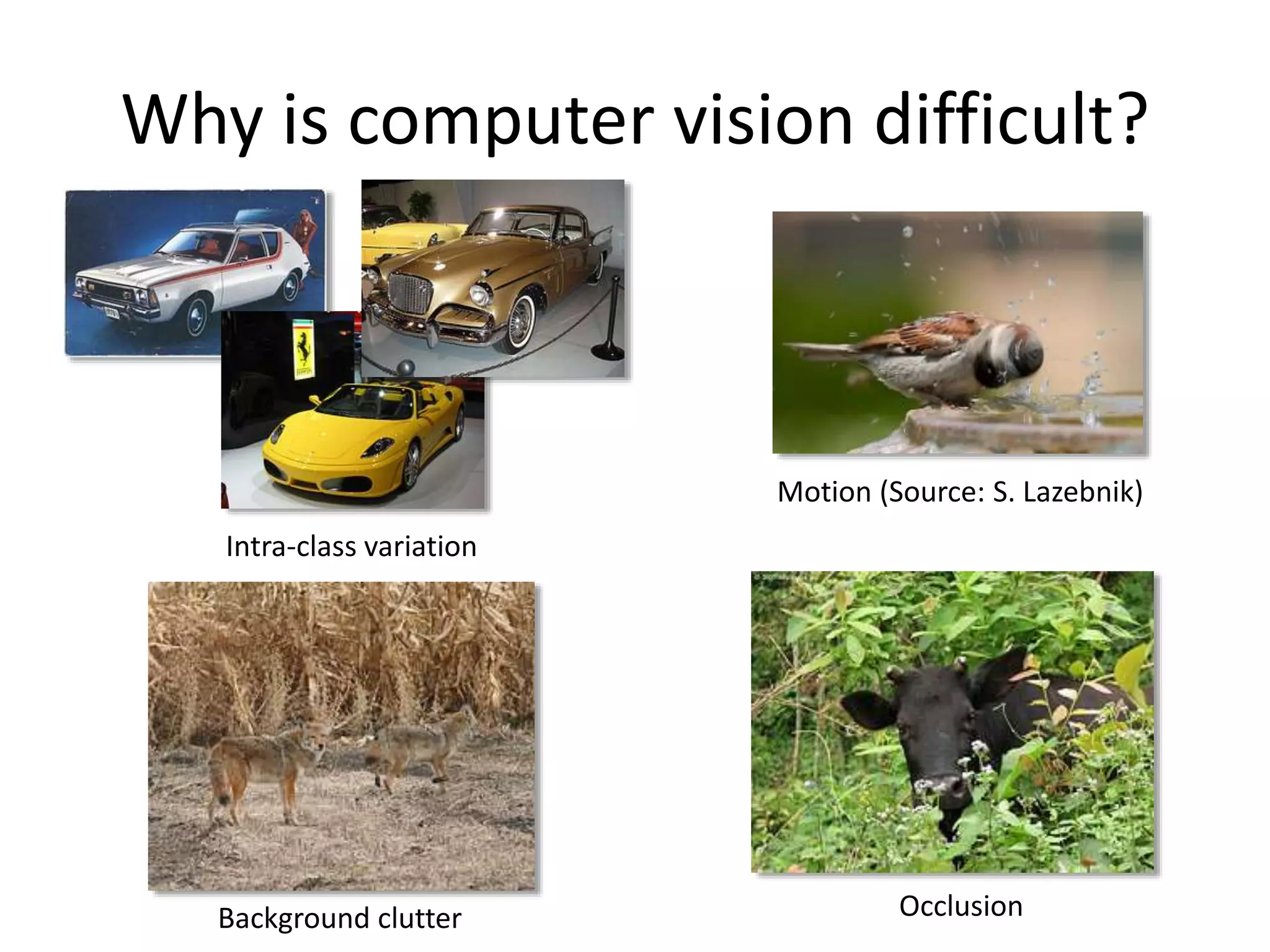

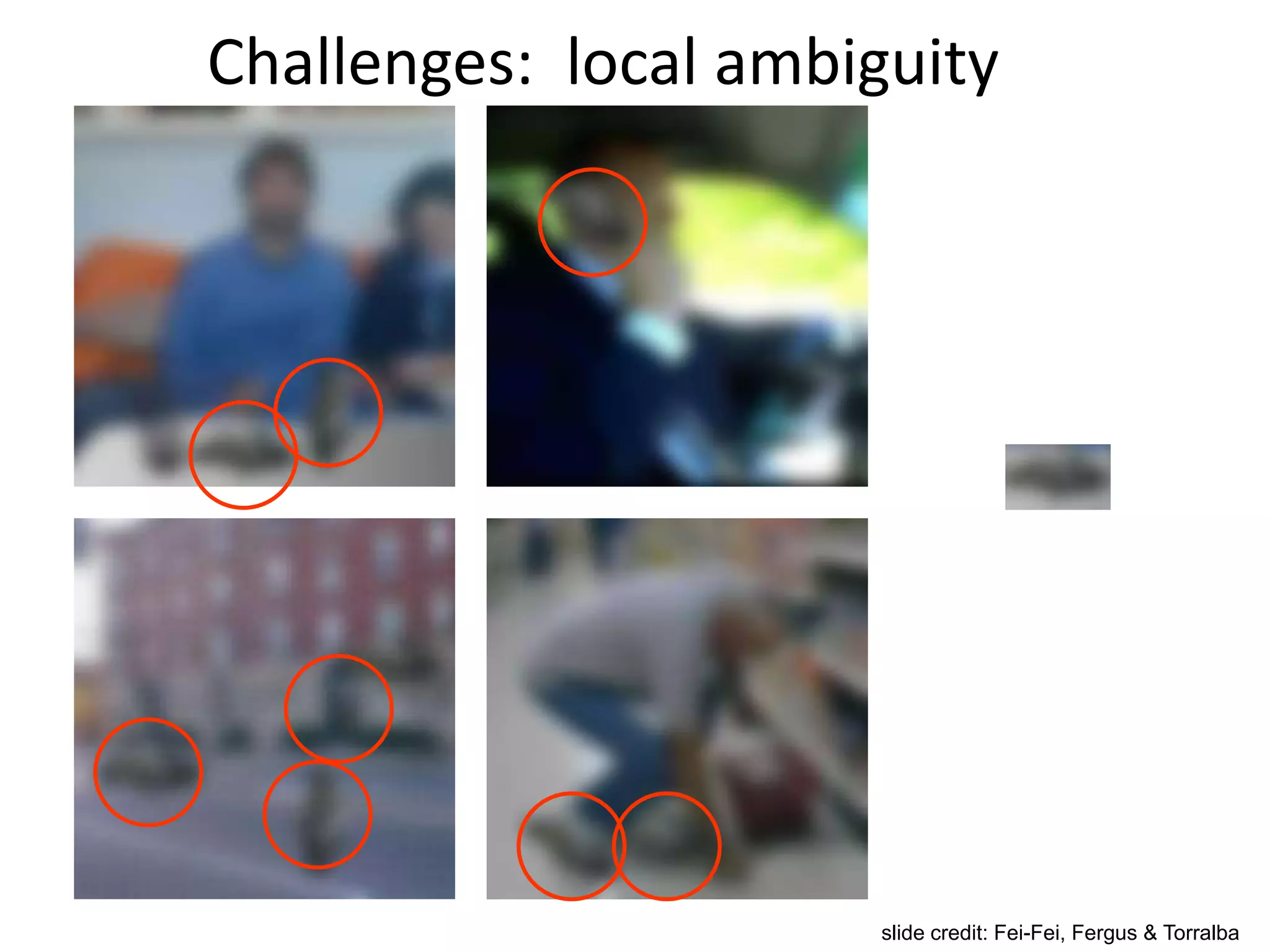

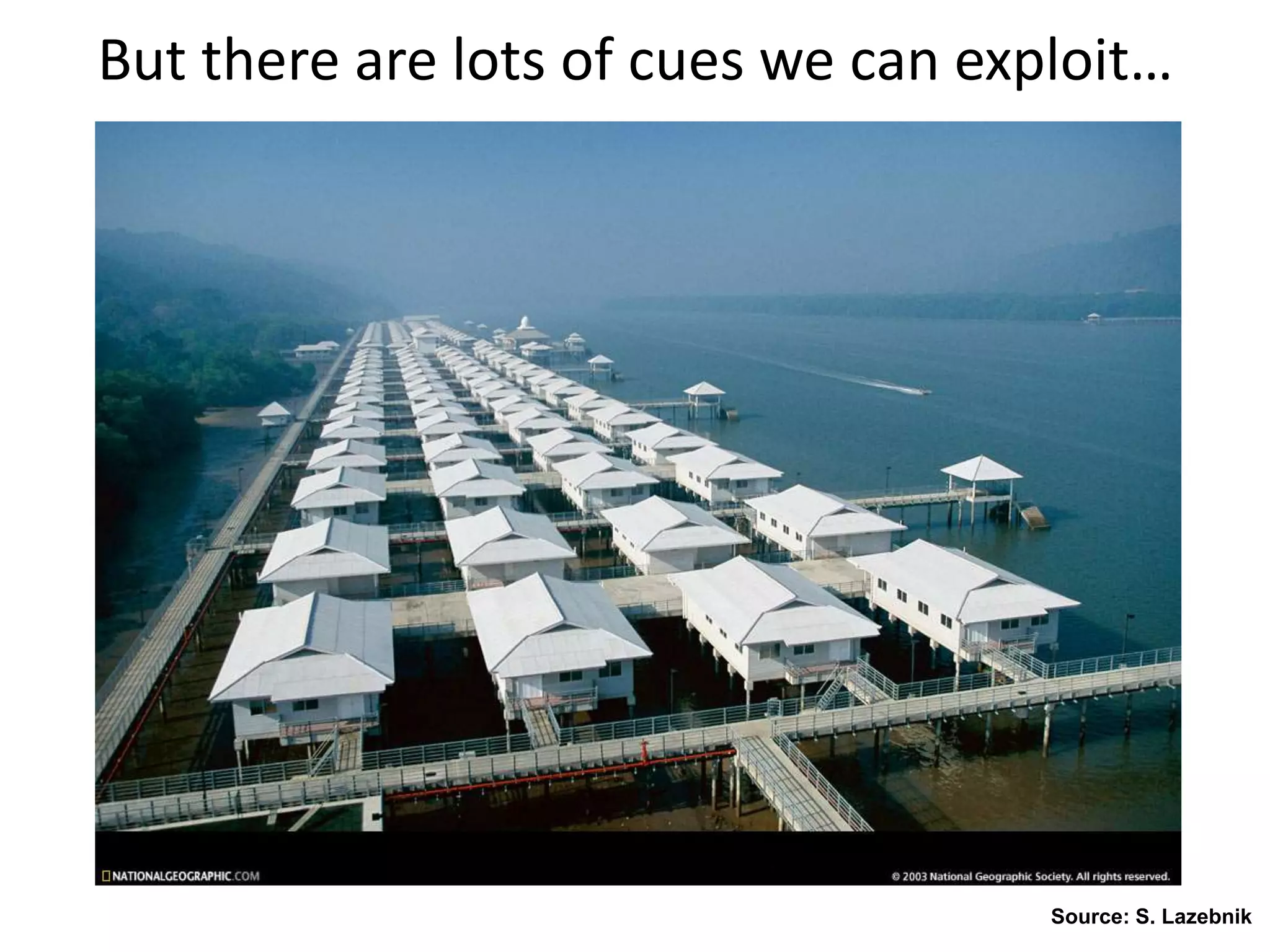

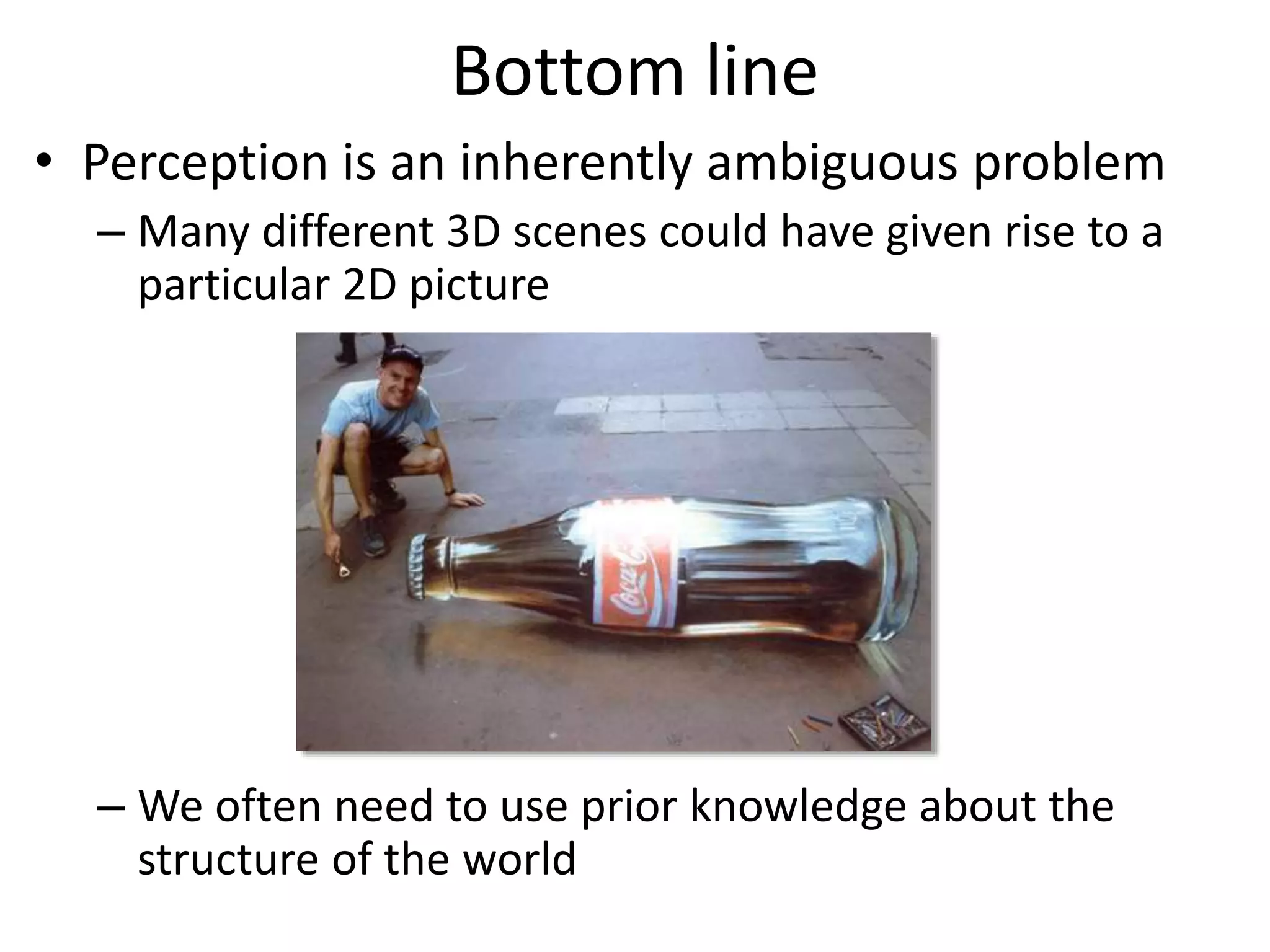

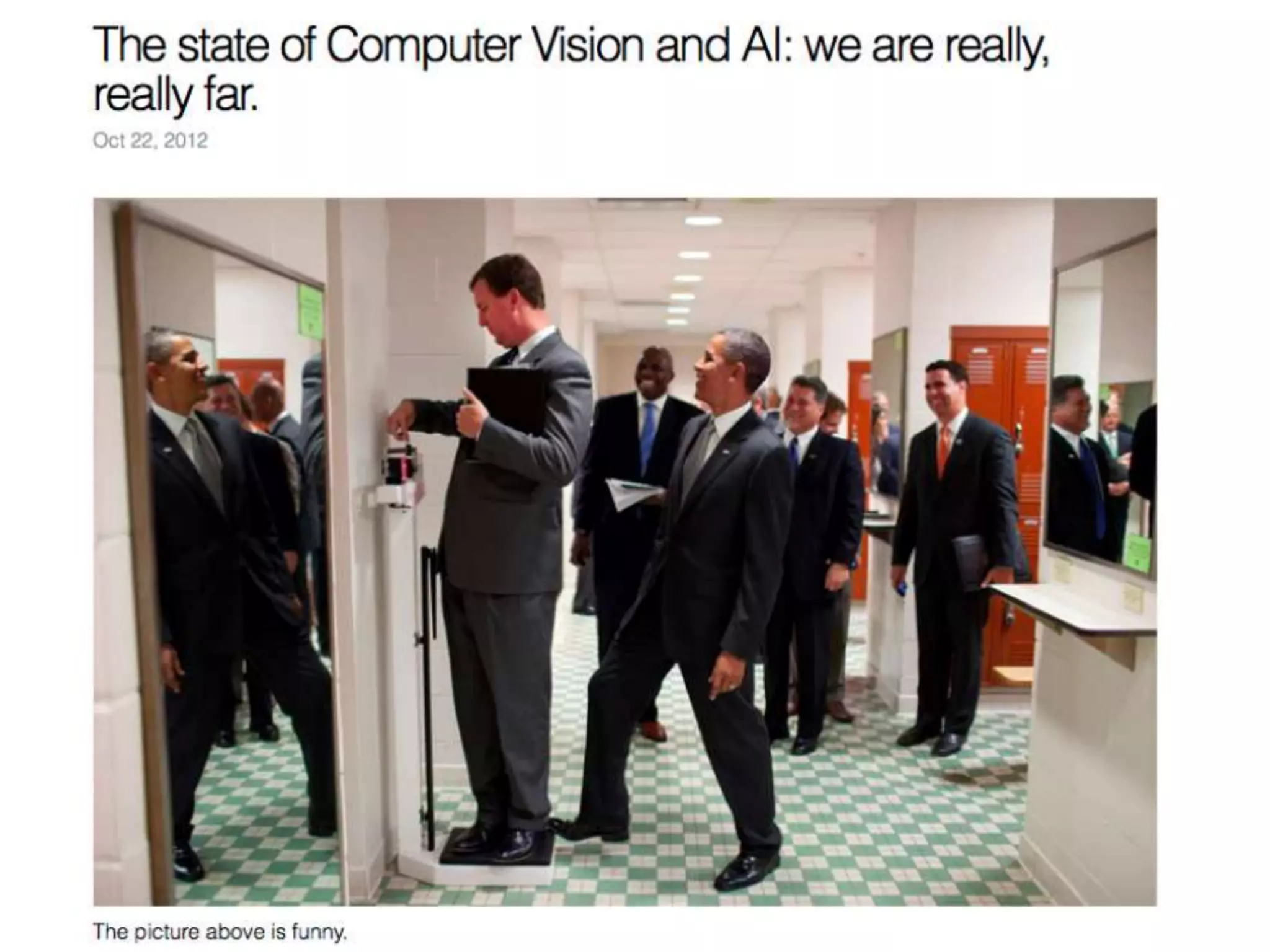

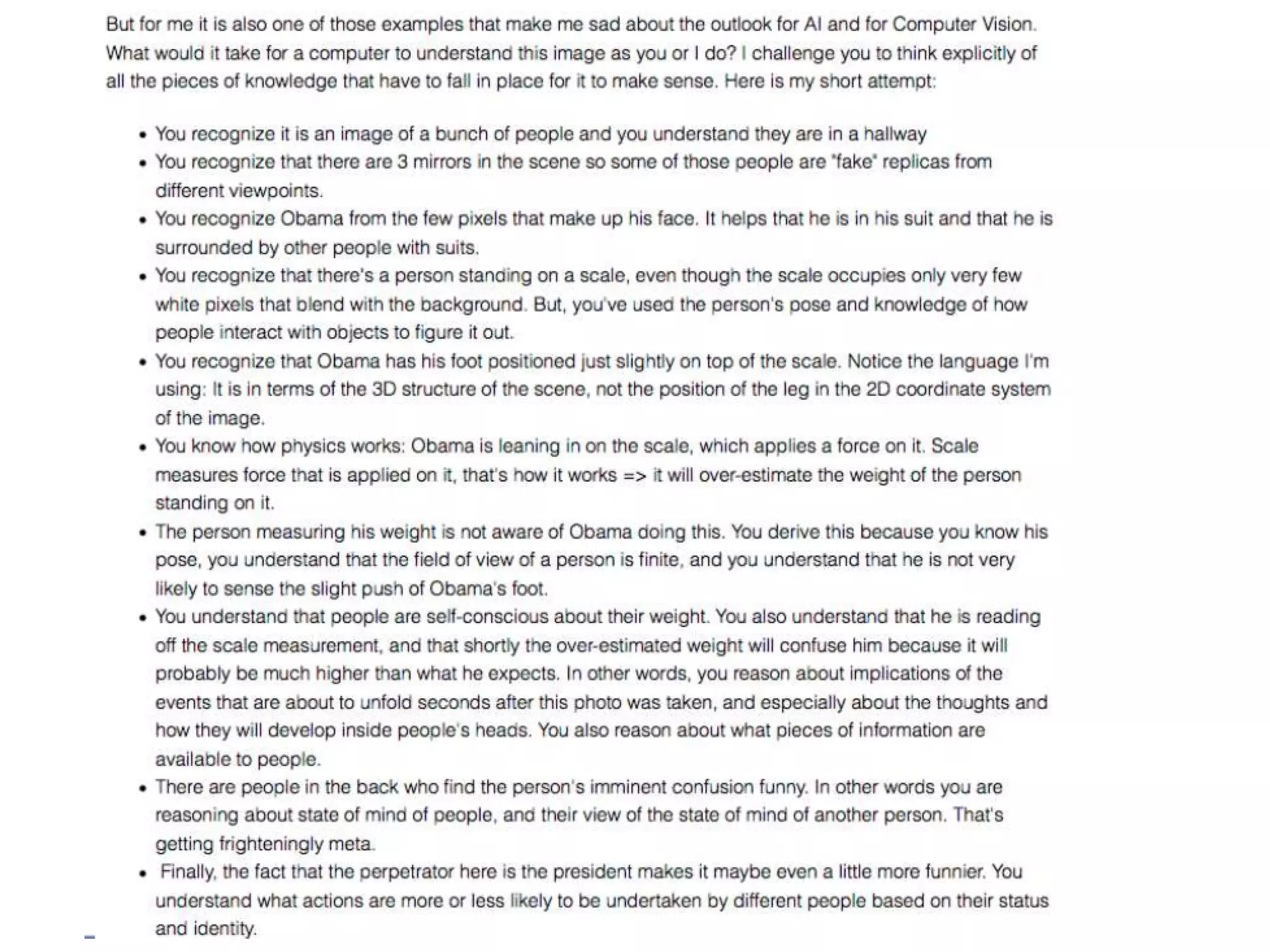

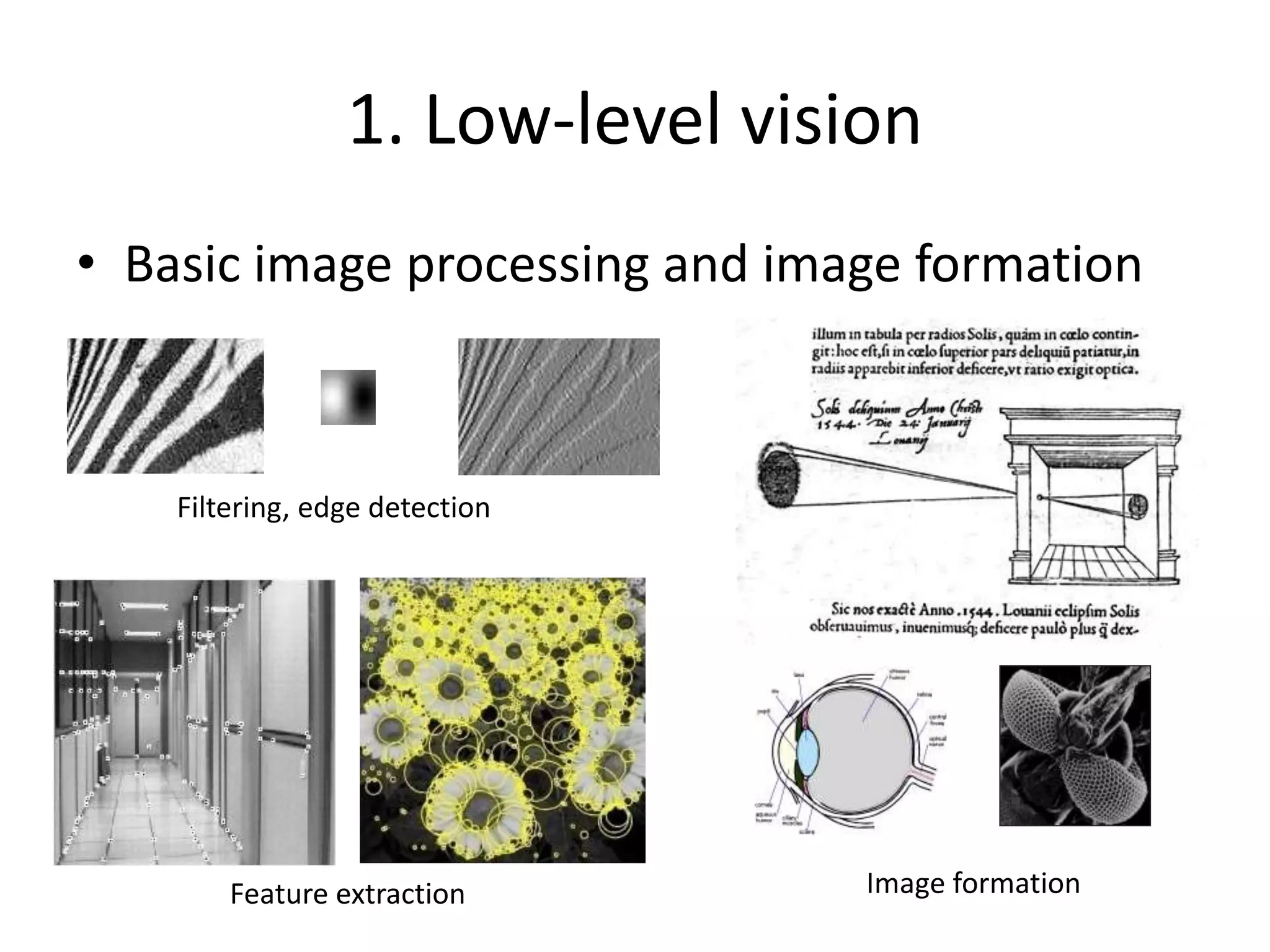

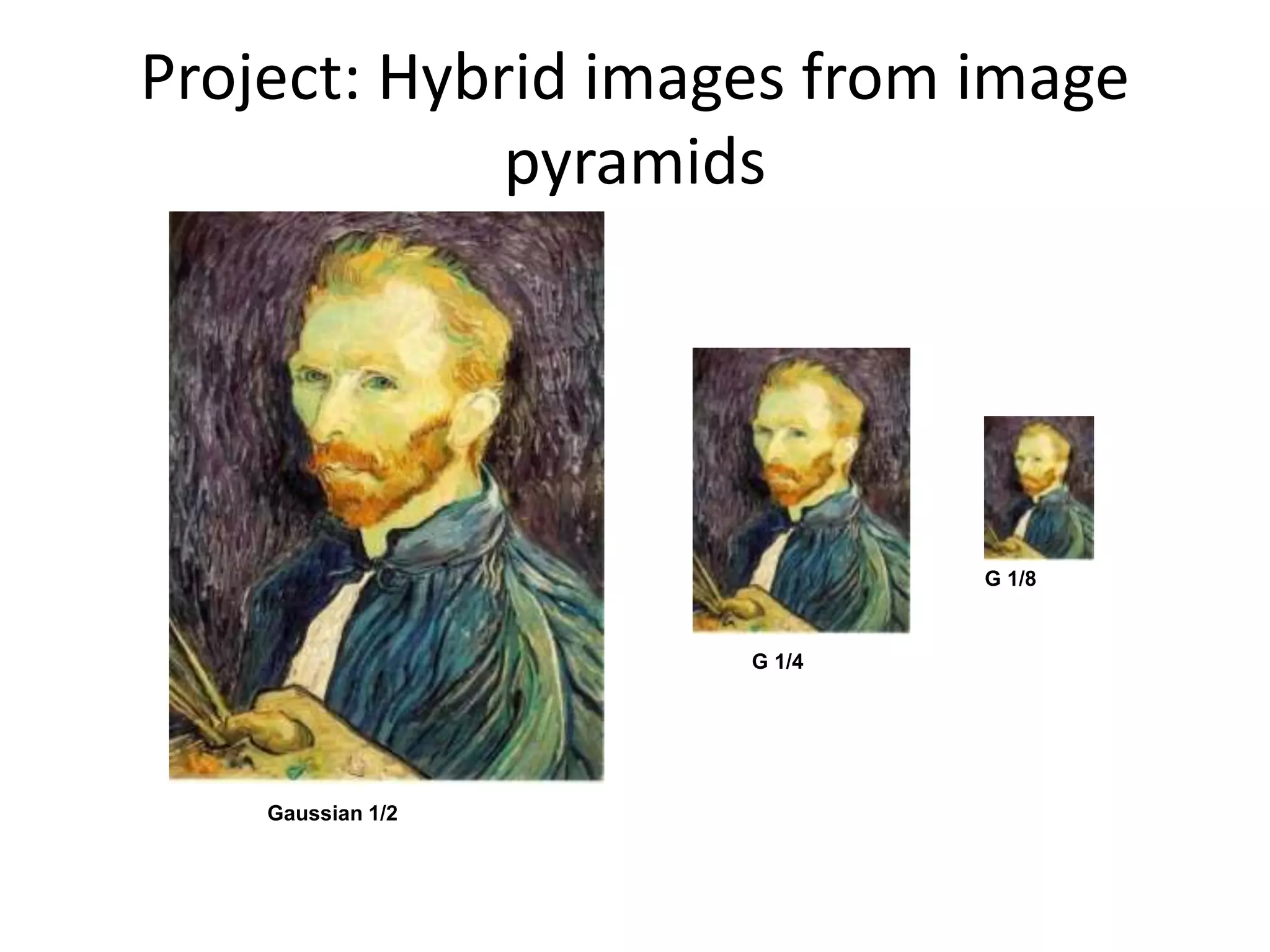

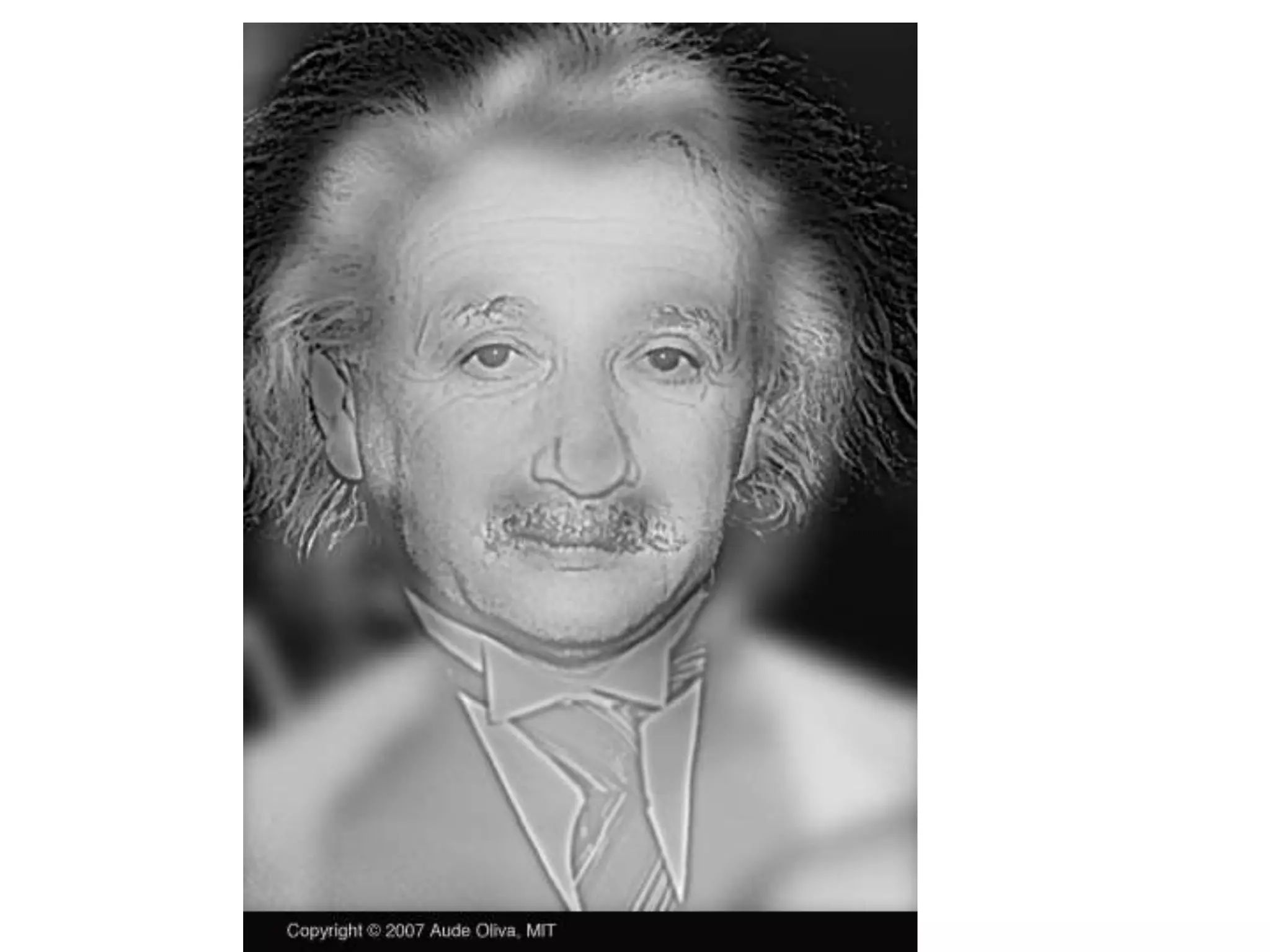

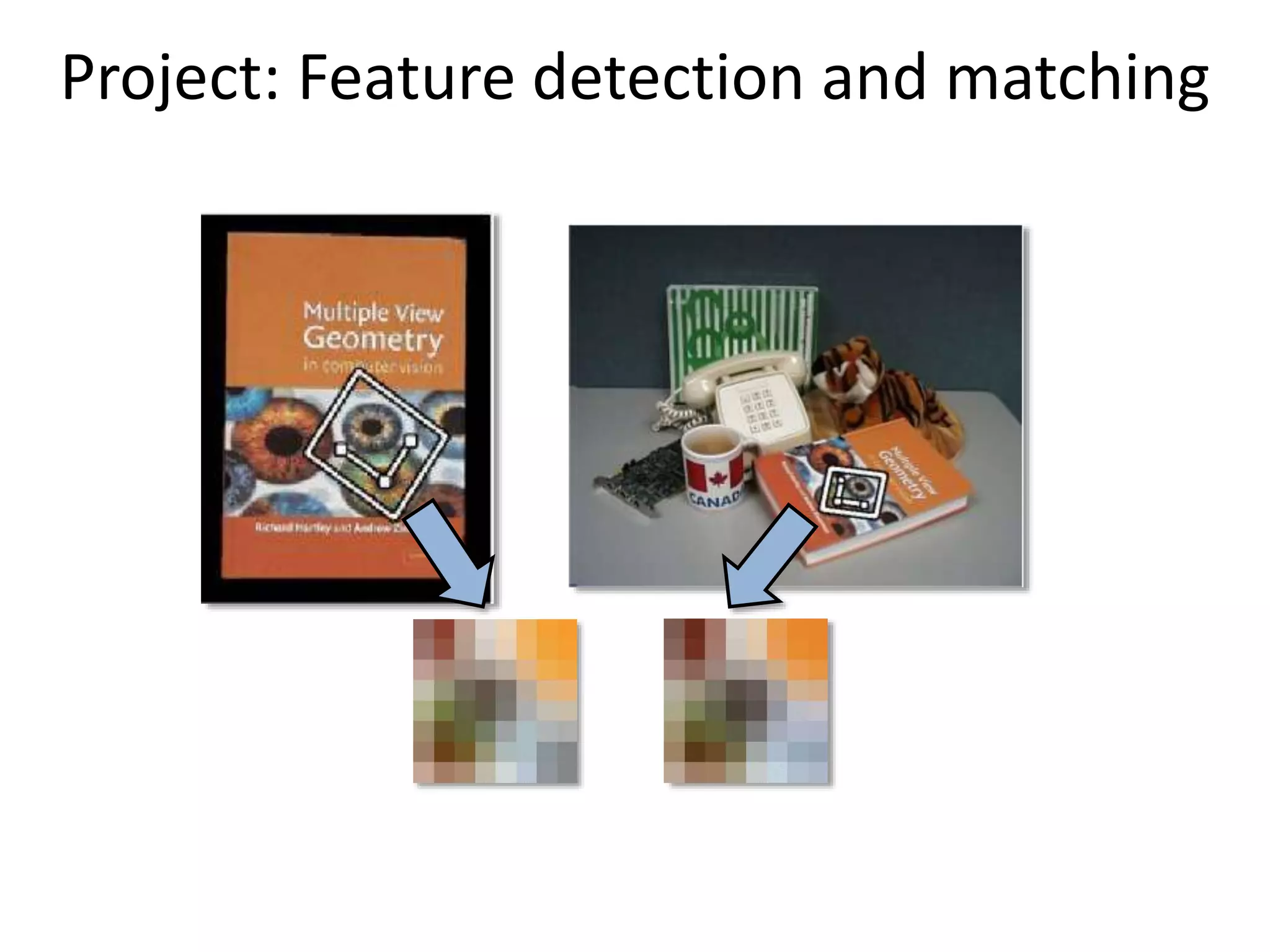

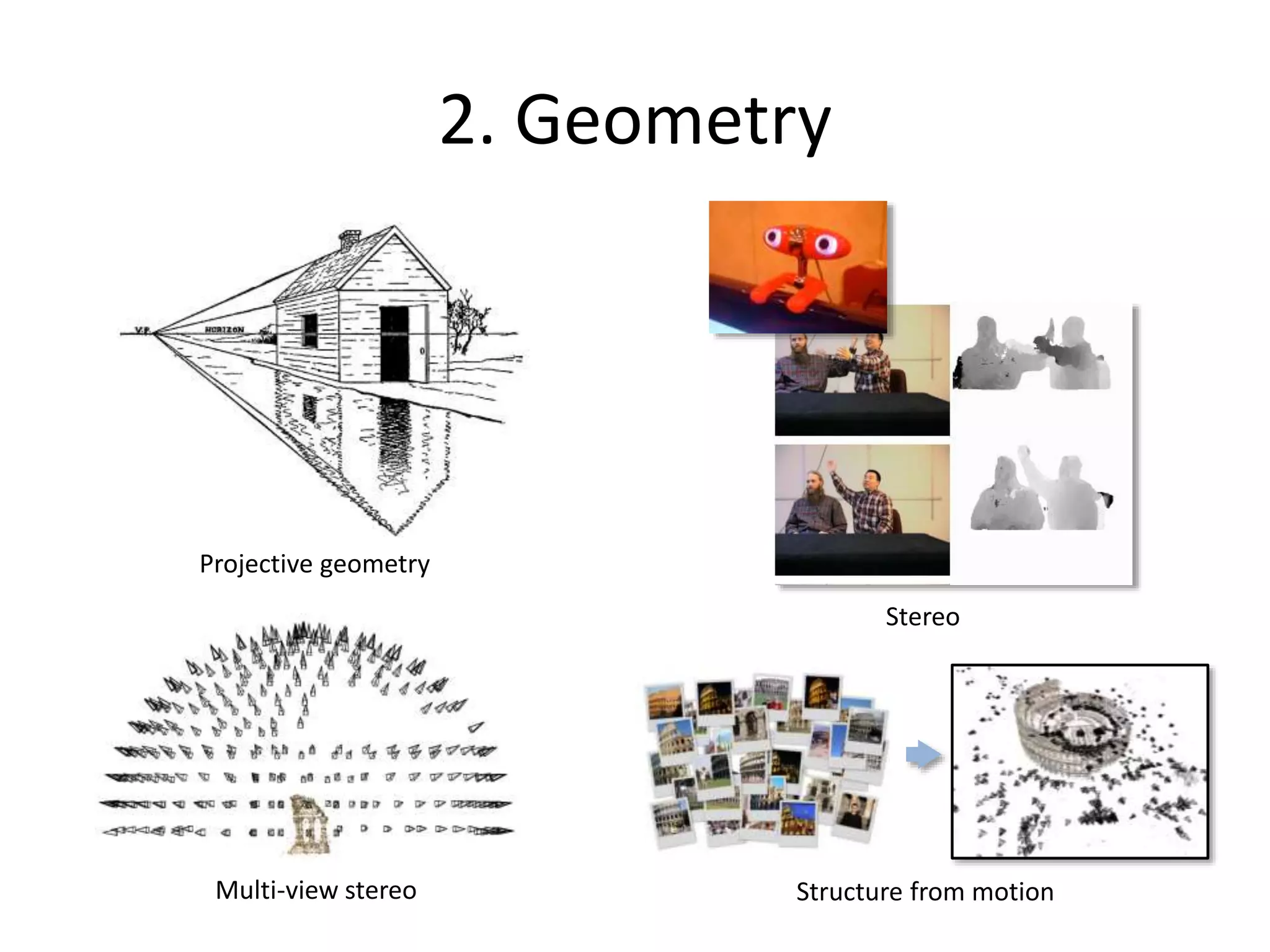

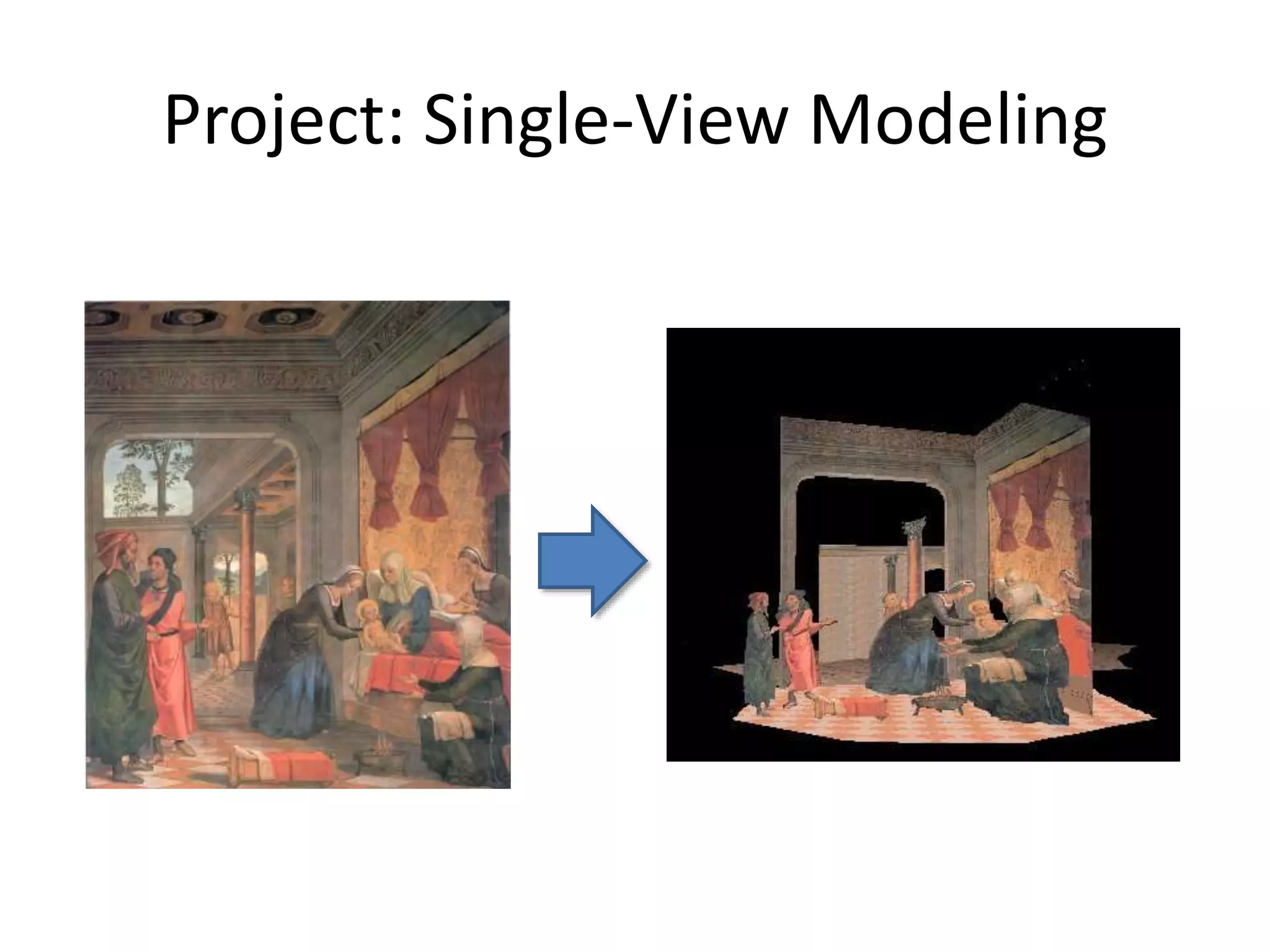

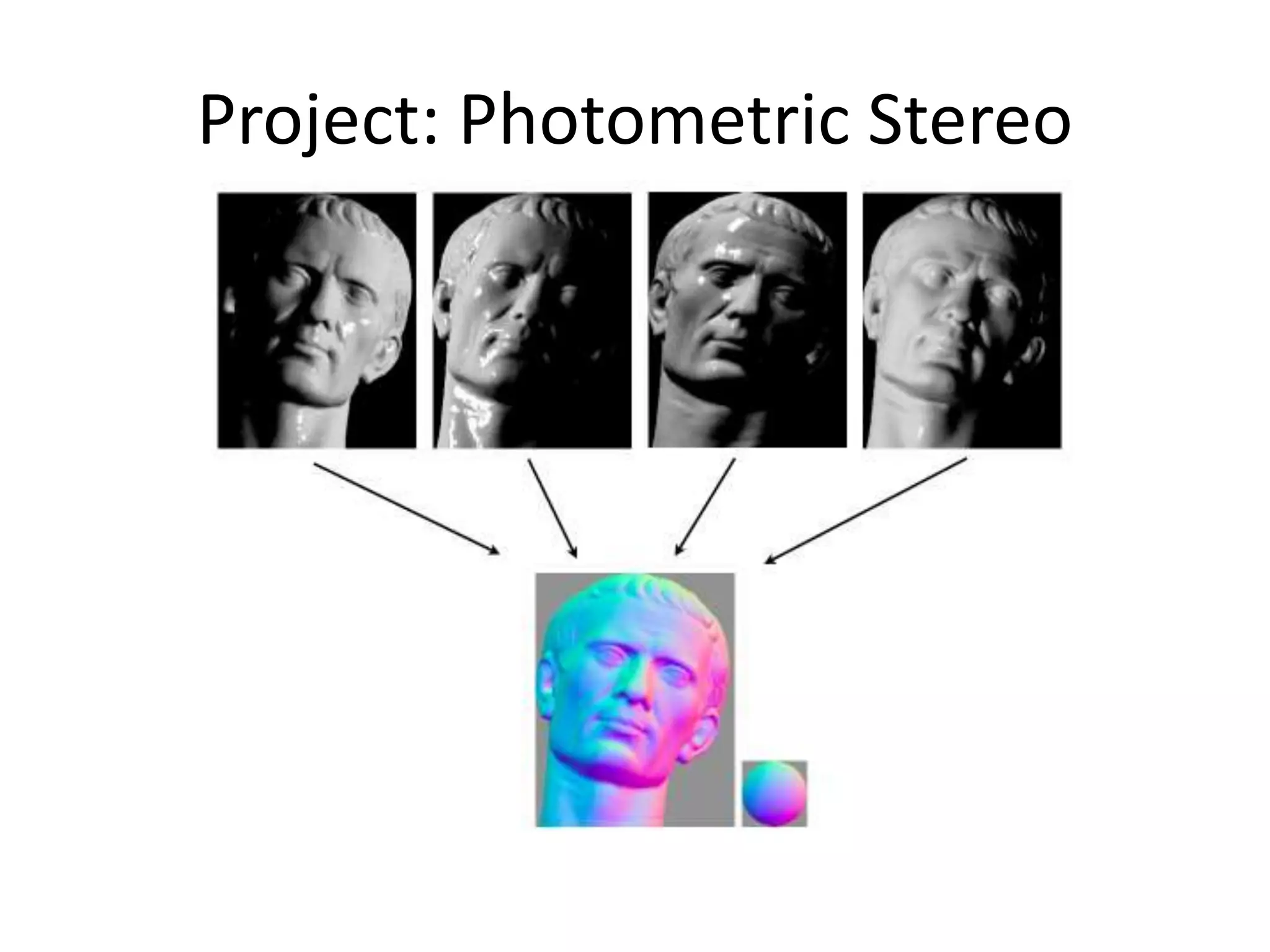

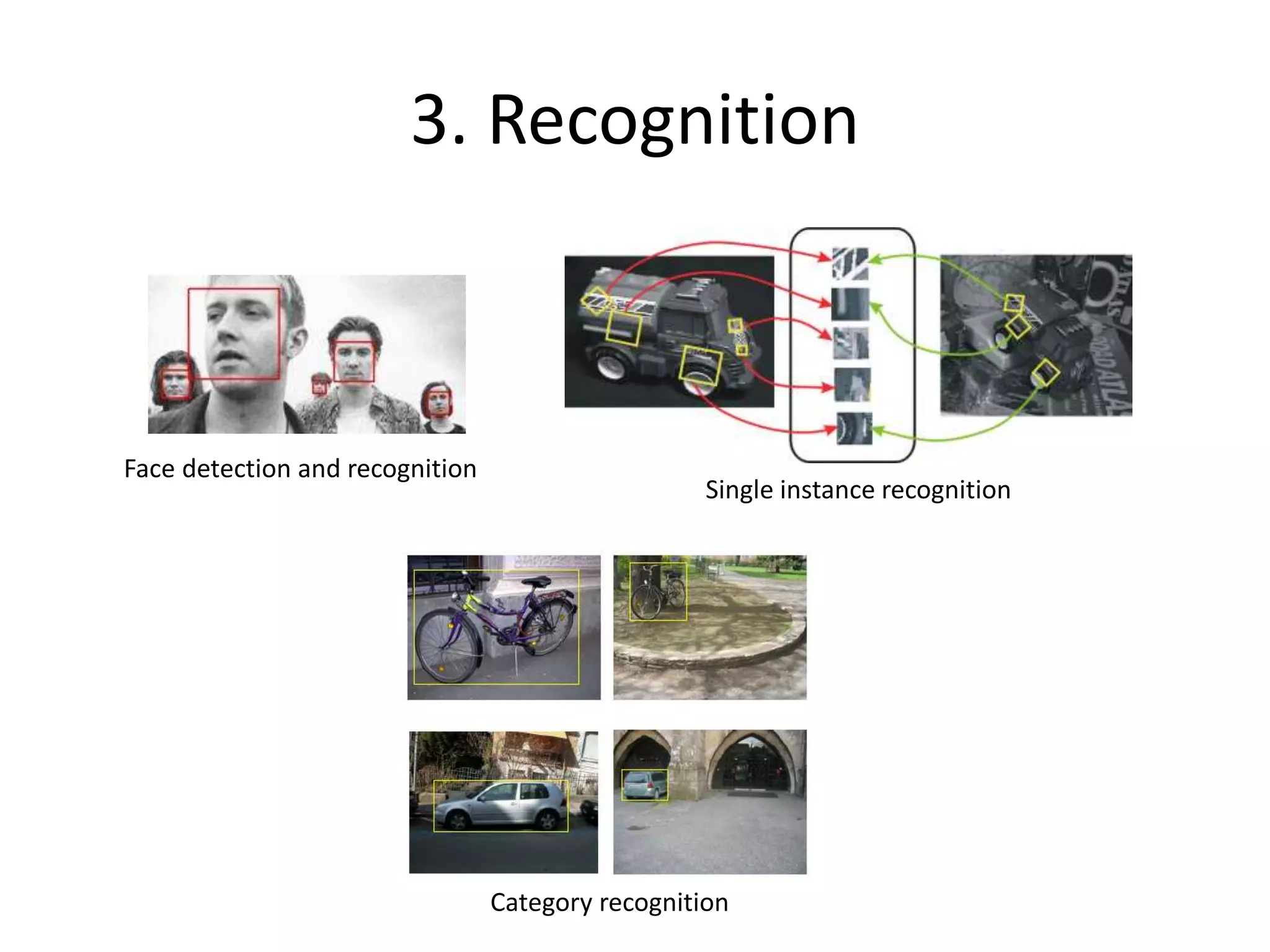

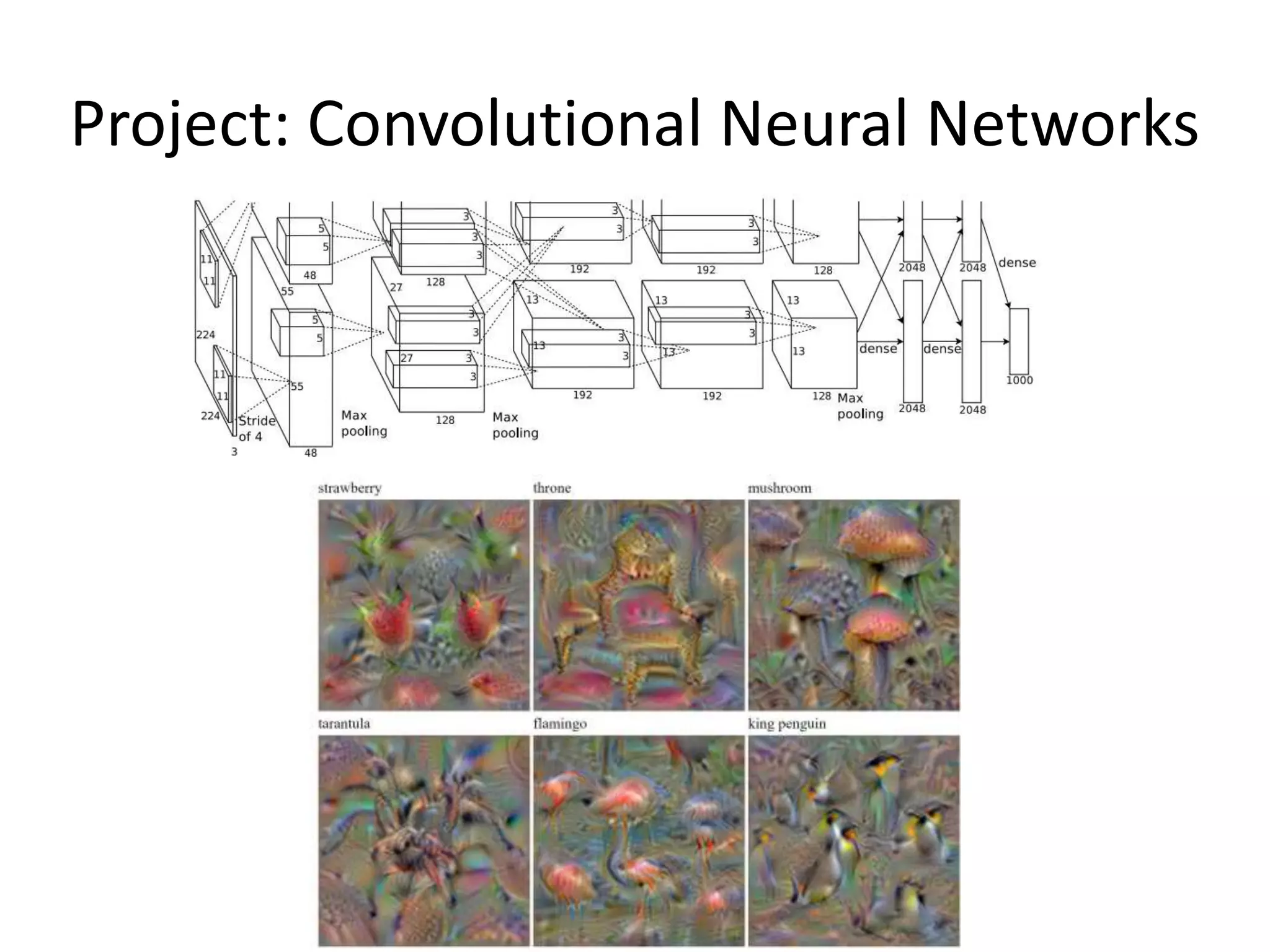

This document is an overview of CSCI 455: Intro to Computer Vision. It acknowledges that many of the slides were adapted from similar computer vision courses. The course covers image filtering, projective geometry, stereo vision, structure from motion, face detection, object recognition, and convolutional neural networks. It highlights current applications of computer vision, including biometrics, mobile apps, self-driving cars, and medical imaging. It also discusses challenges such as viewpoint and illumination variation, occlusion, and local ambiguity, and emphasizes that perception is an inherently ambiguous problem that can only be resolved by using prior knowledge about the world.