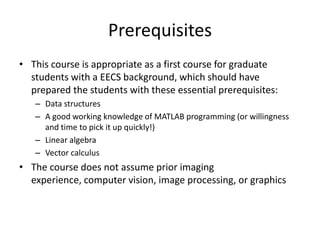

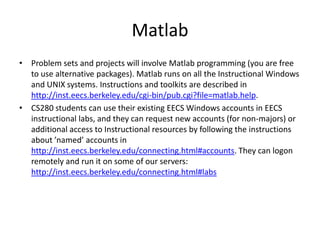

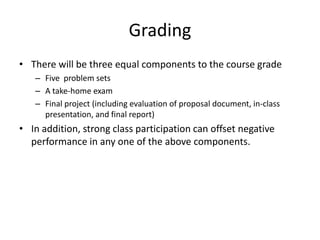

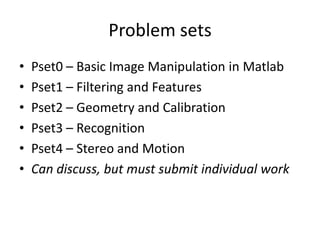

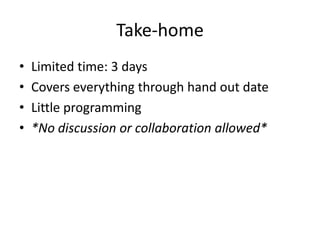

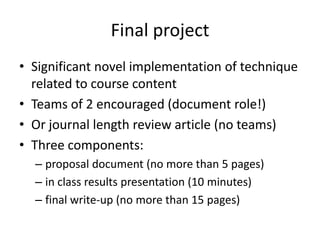

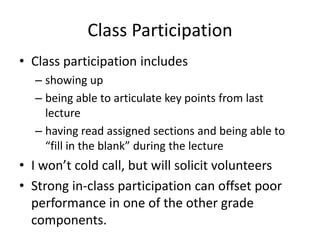

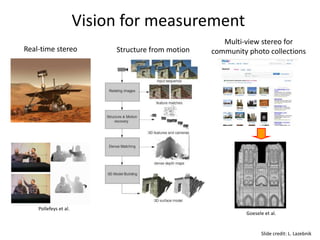

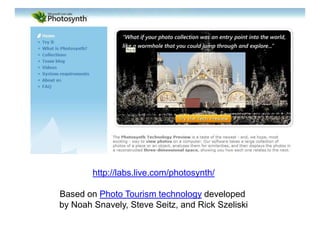

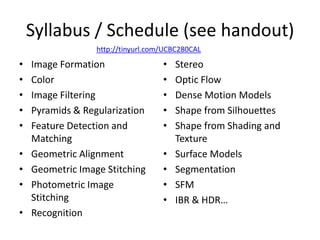

This document provides an overview of the C280 Computer Vision course: administrative details, prerequisites, textbooks, grading, and schedule, followed by a brief introduction to the field of computer vision. The course covers topics such as image formation, filtering, features, geometry, recognition, stereo, and motion. Graded work includes problem sets, a take-home exam, and a final project. The goal is to teach the principles and algorithms of computer vision through programming assignments and a project.