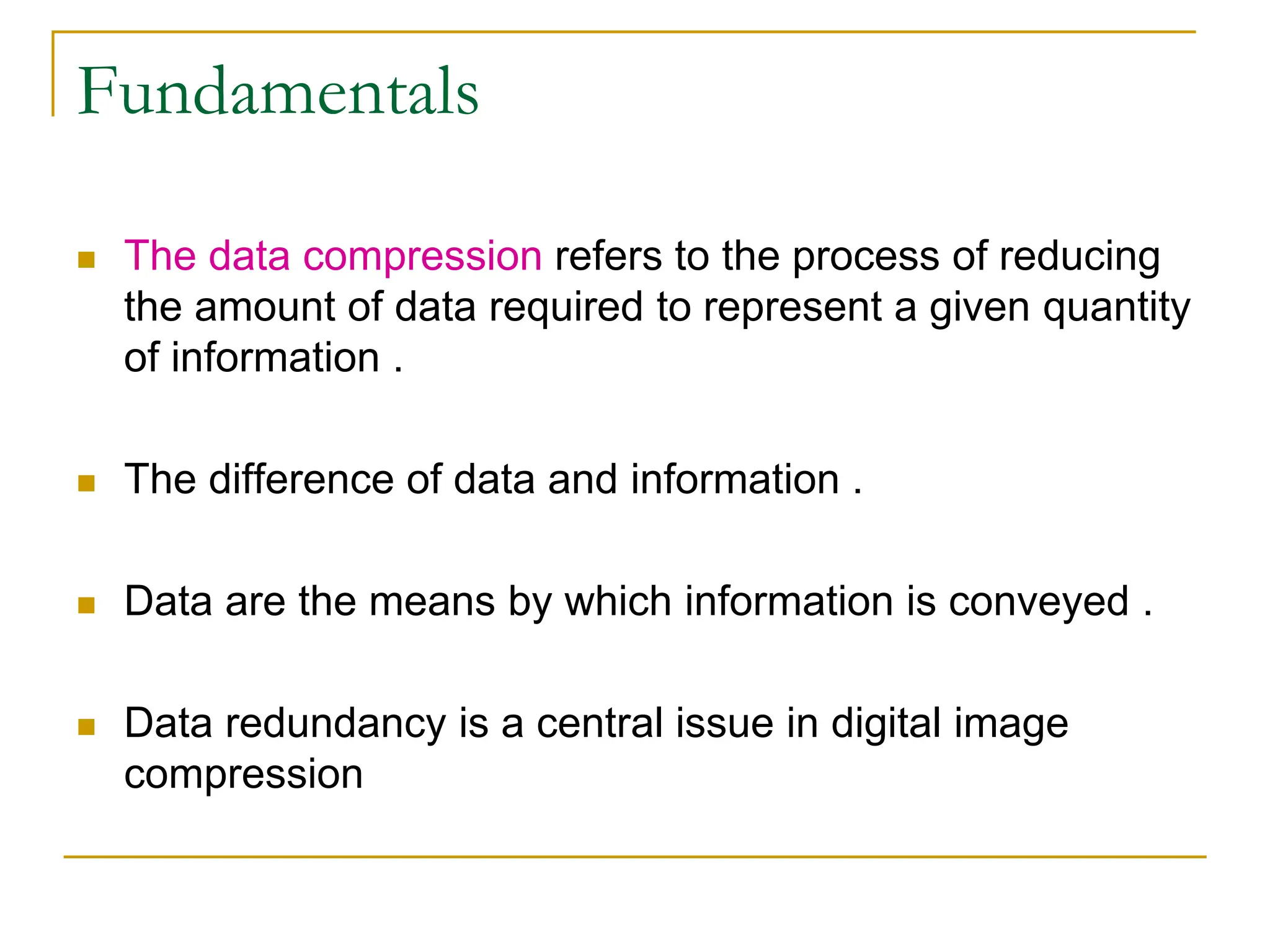

This document discusses various methods of image compression. It begins by defining image compression as reducing the amount of data required to represent a digital image. The main methods of compression are by removing redundant data from the image.

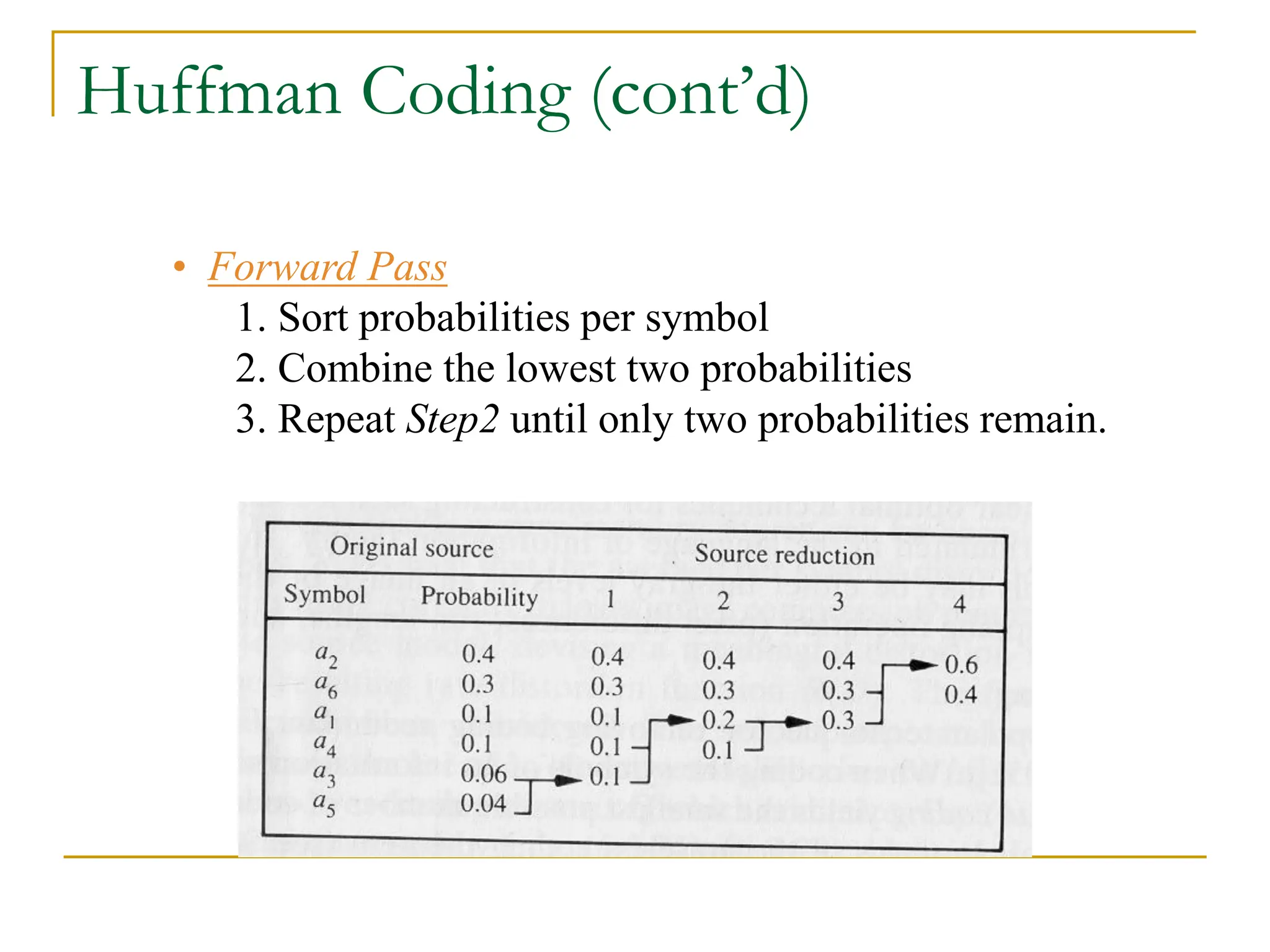

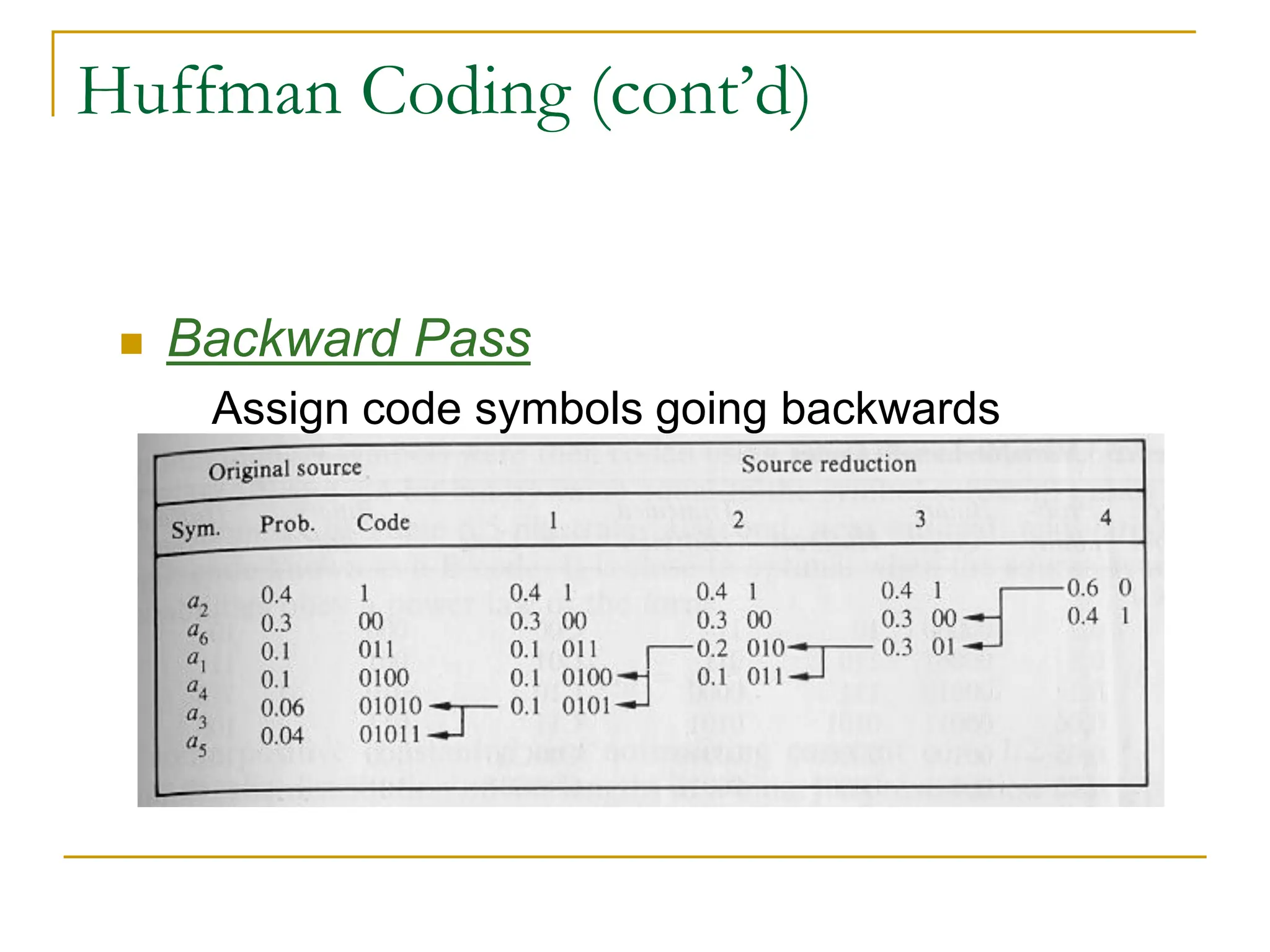

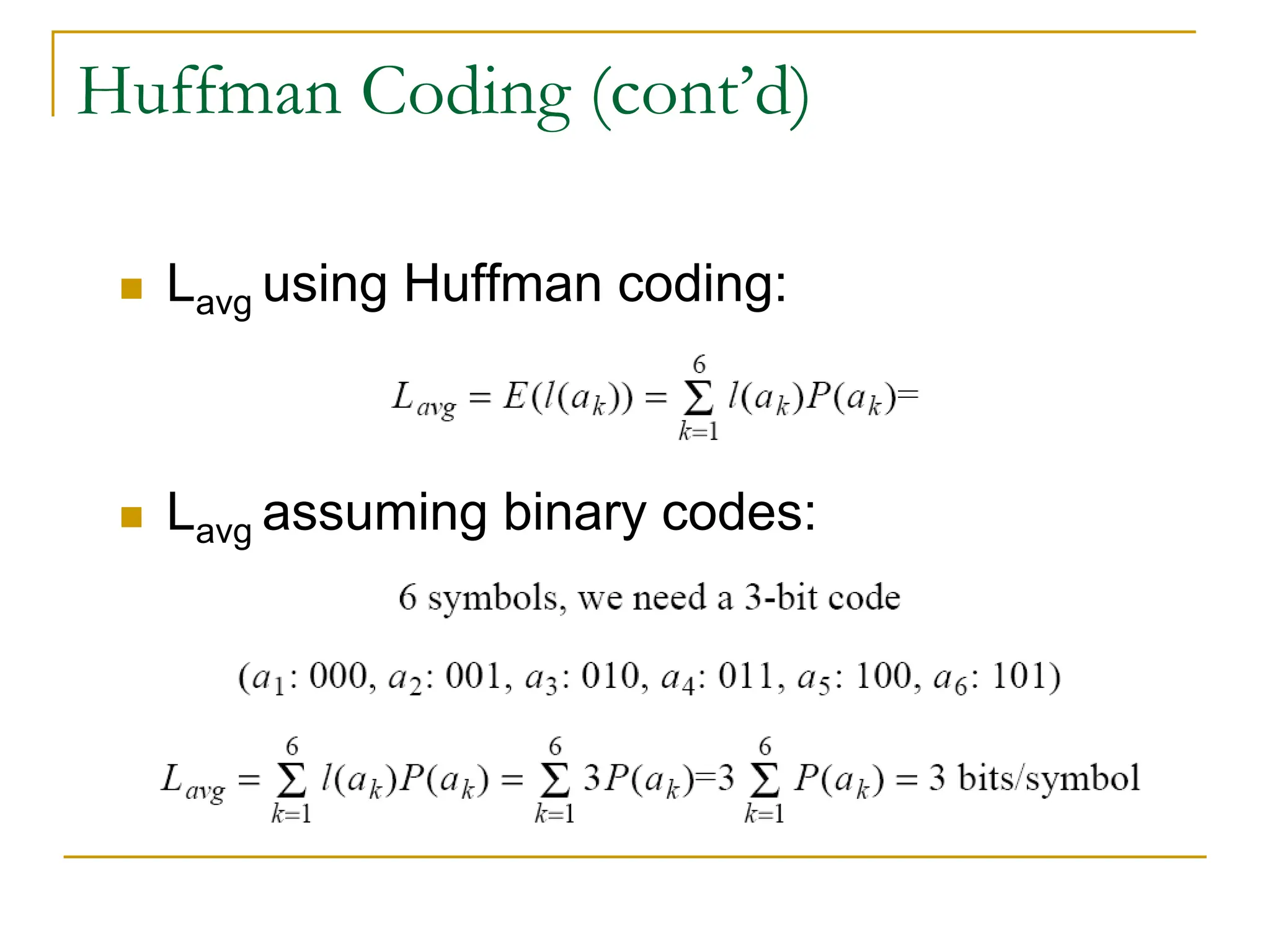

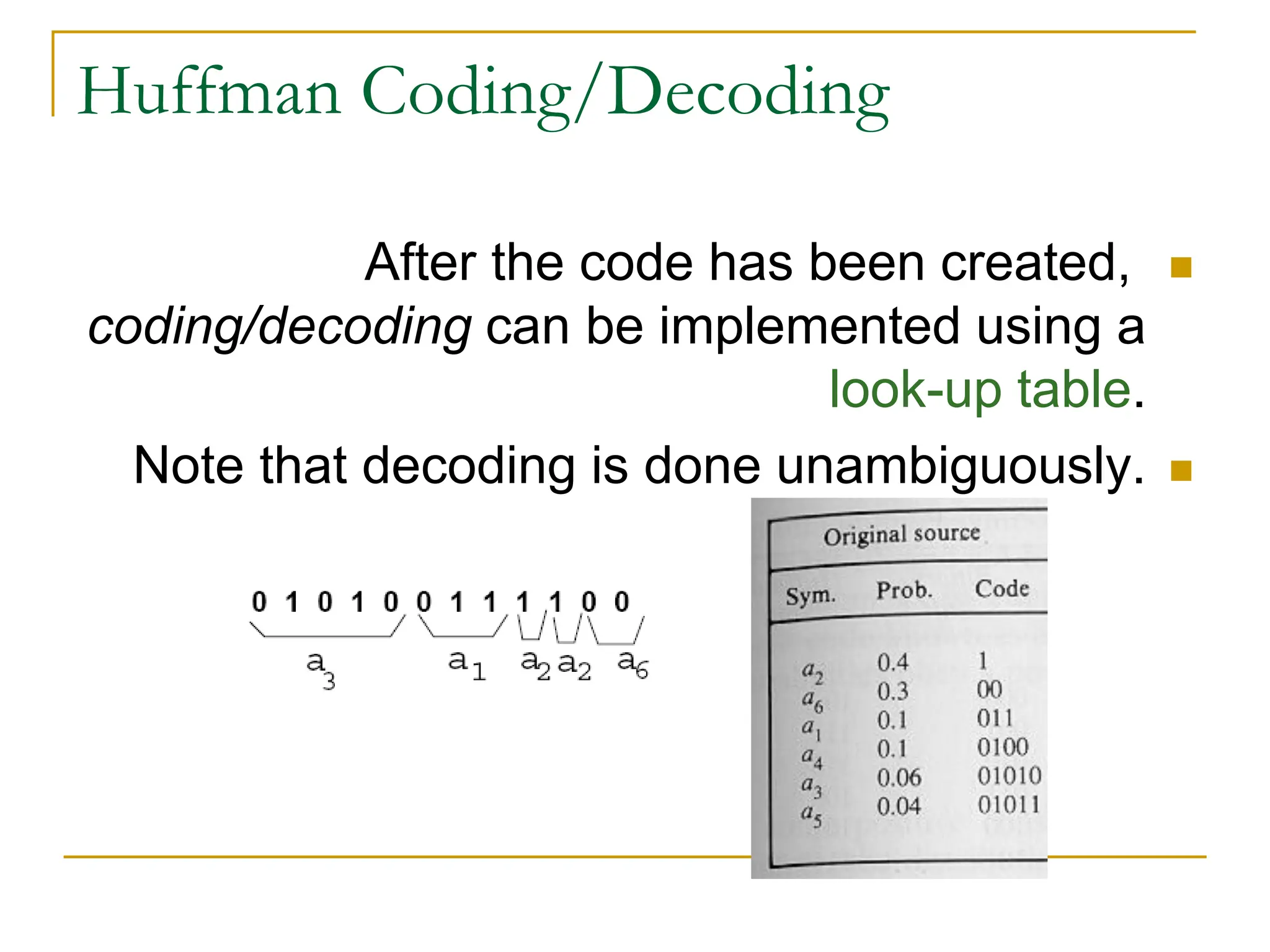

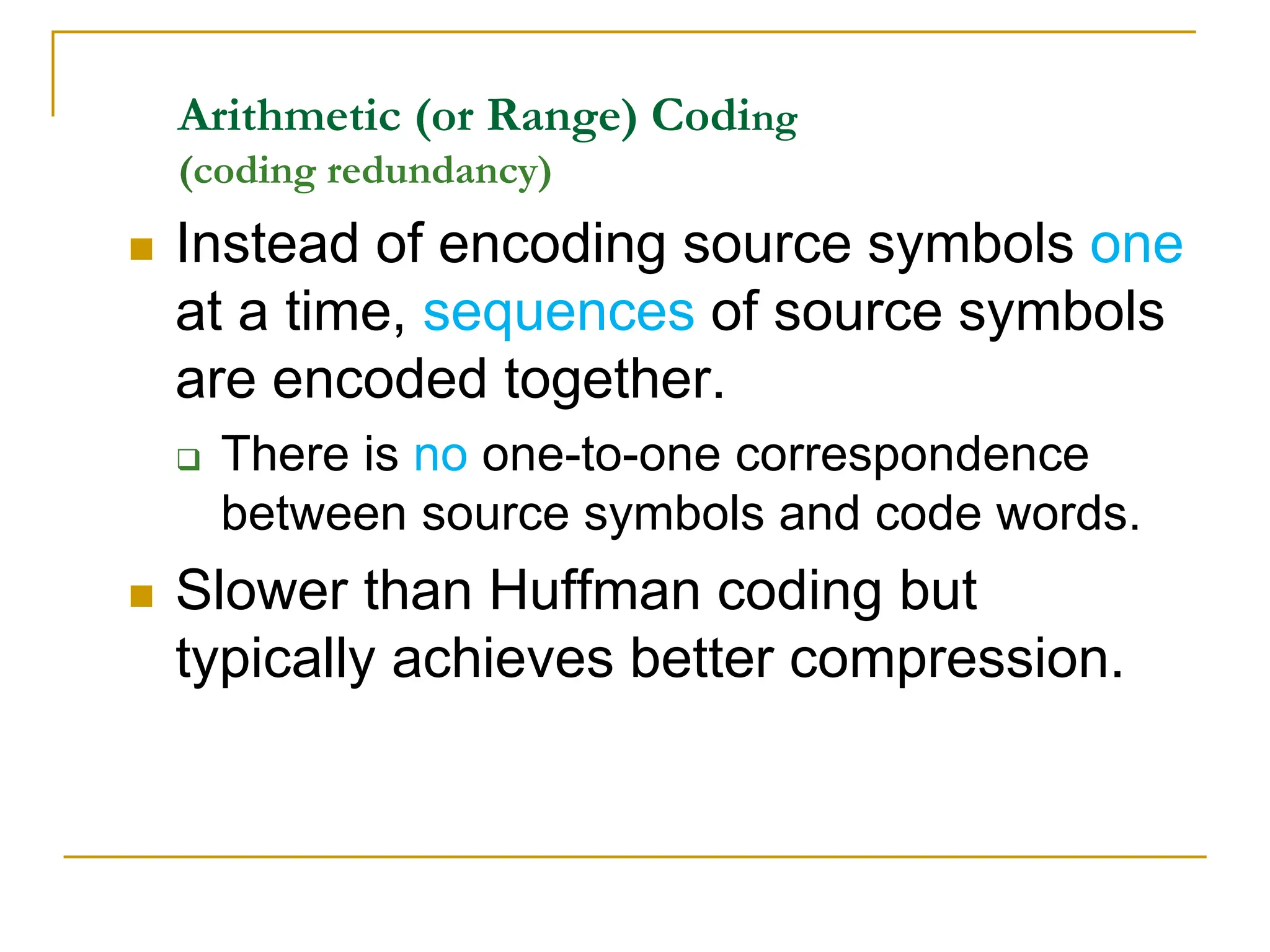

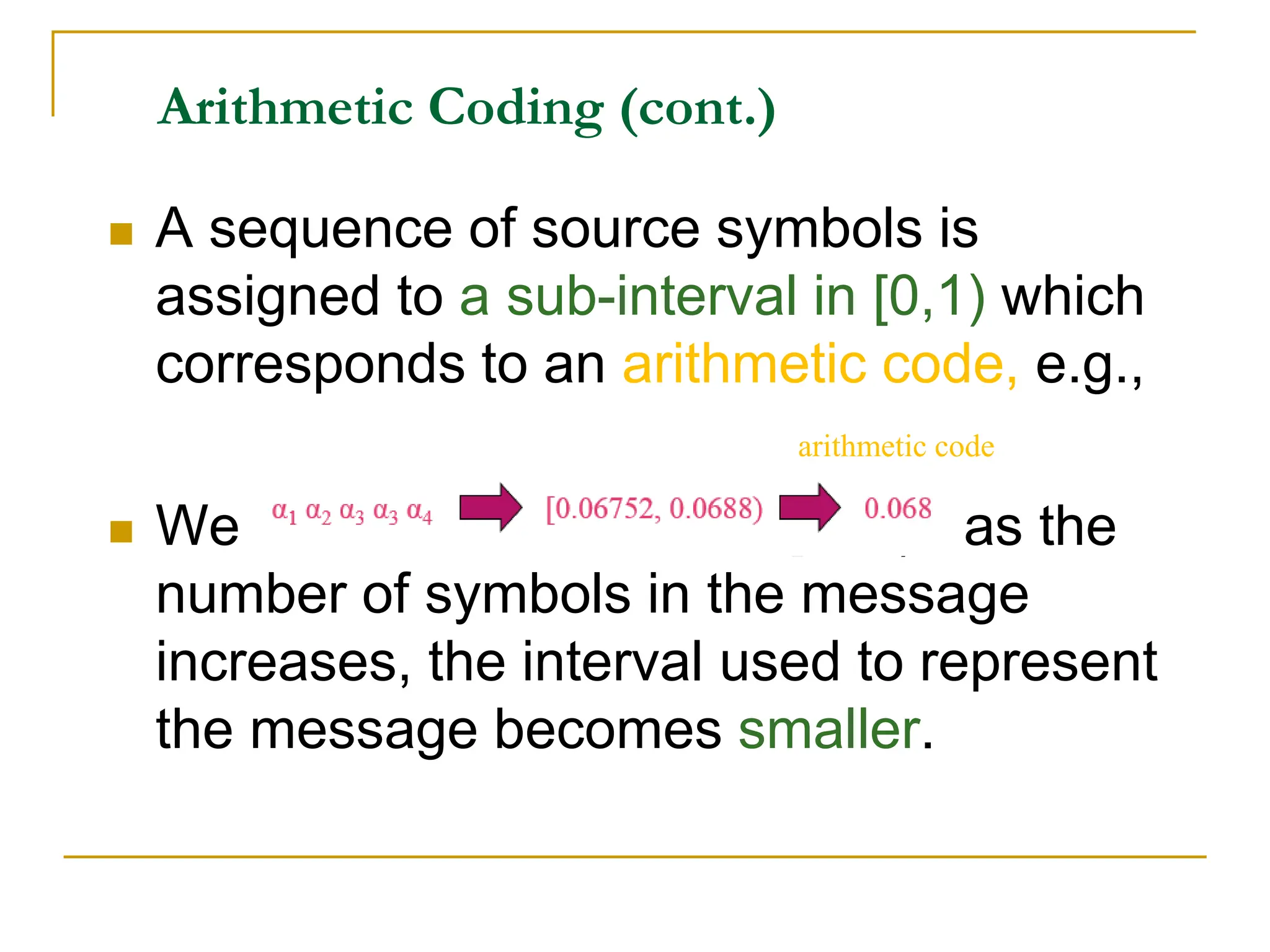

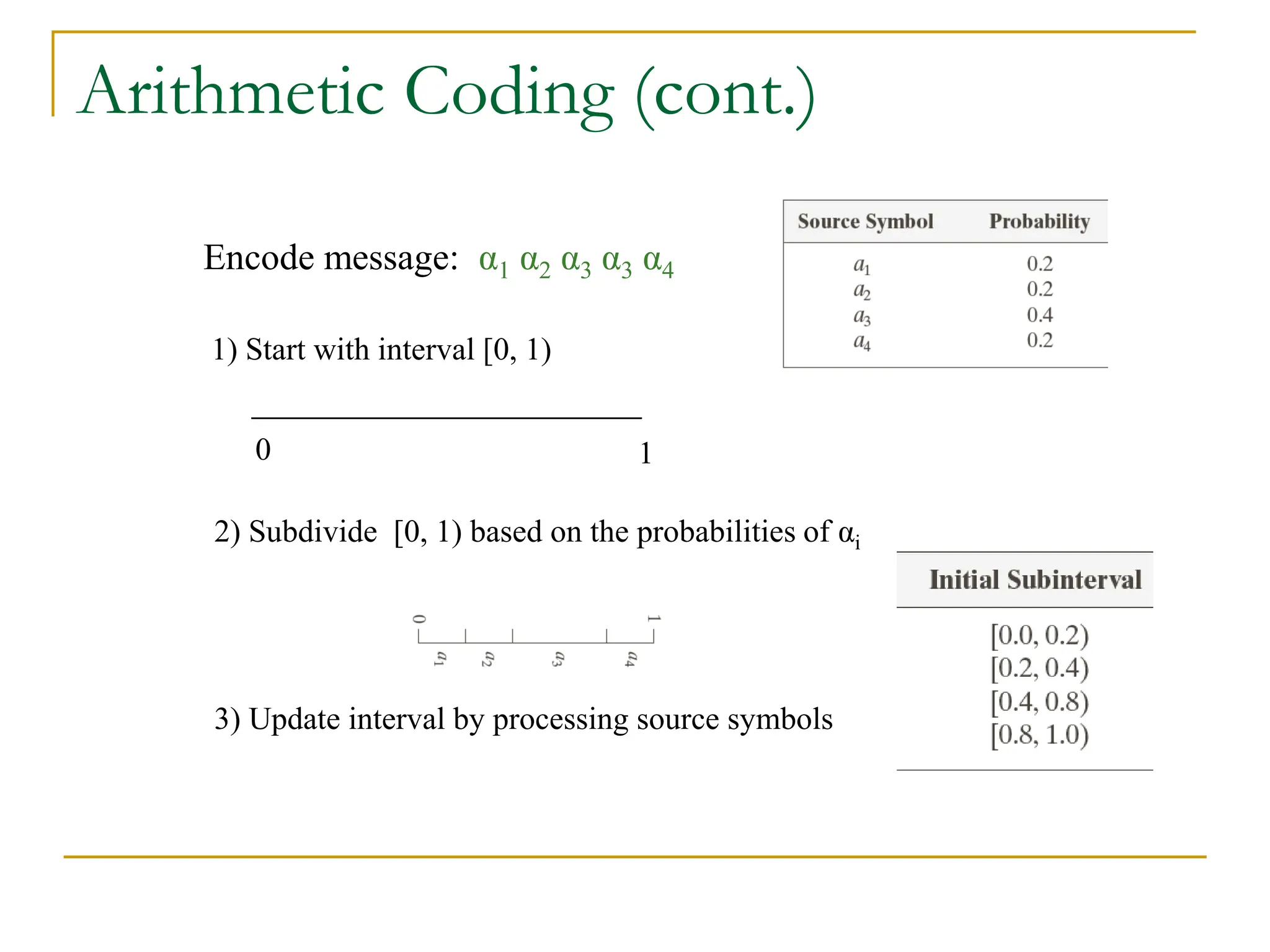

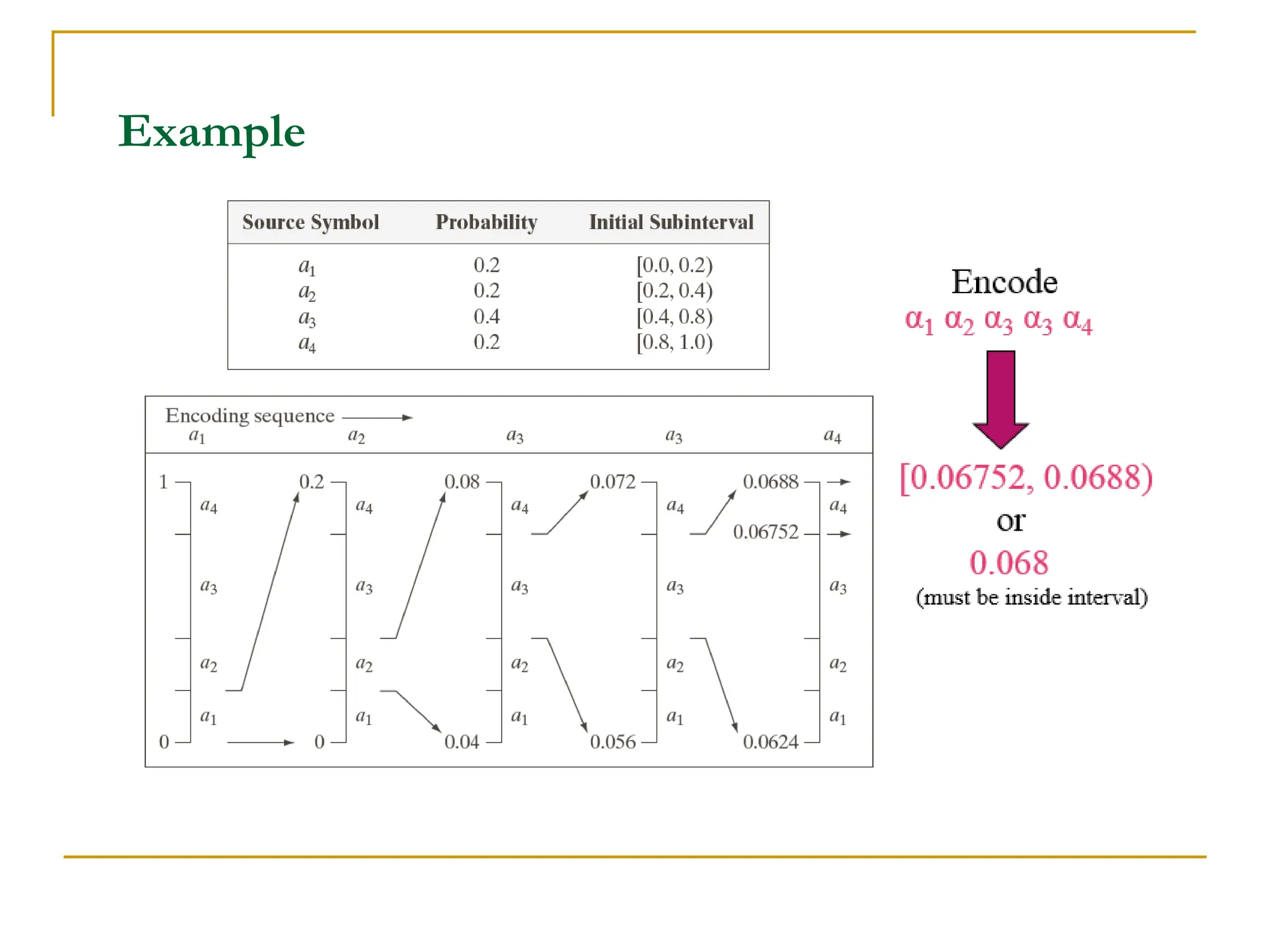

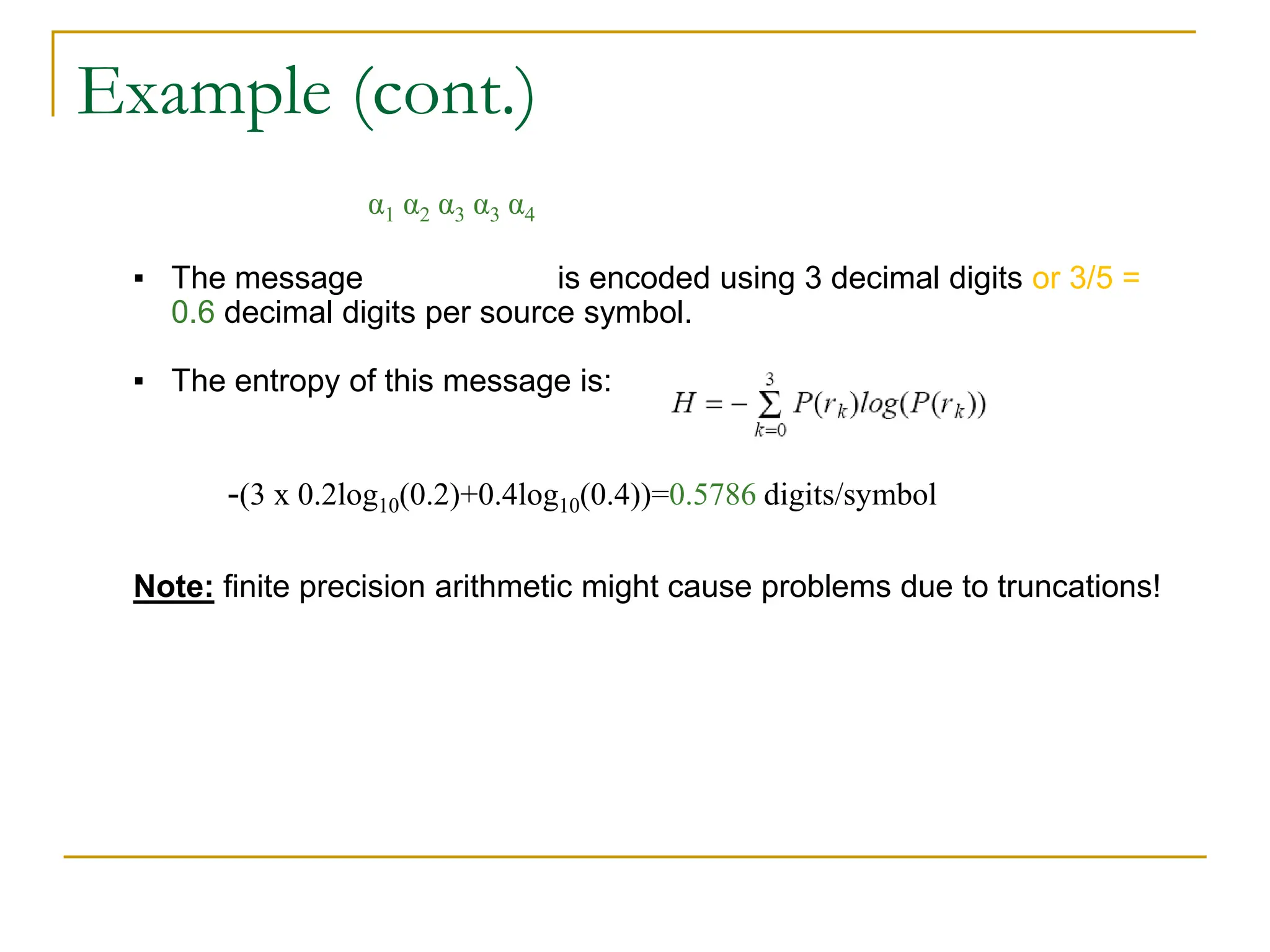

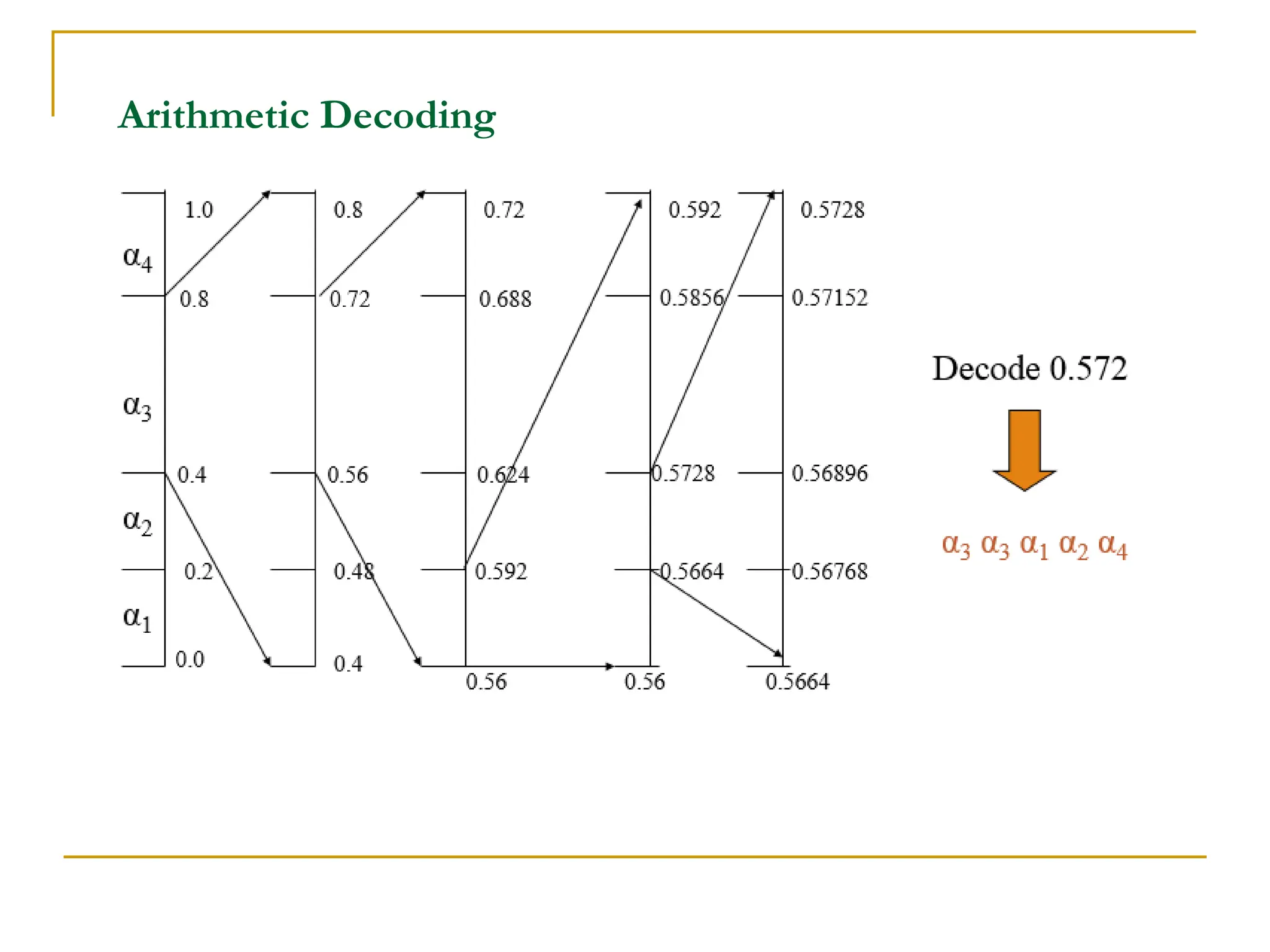

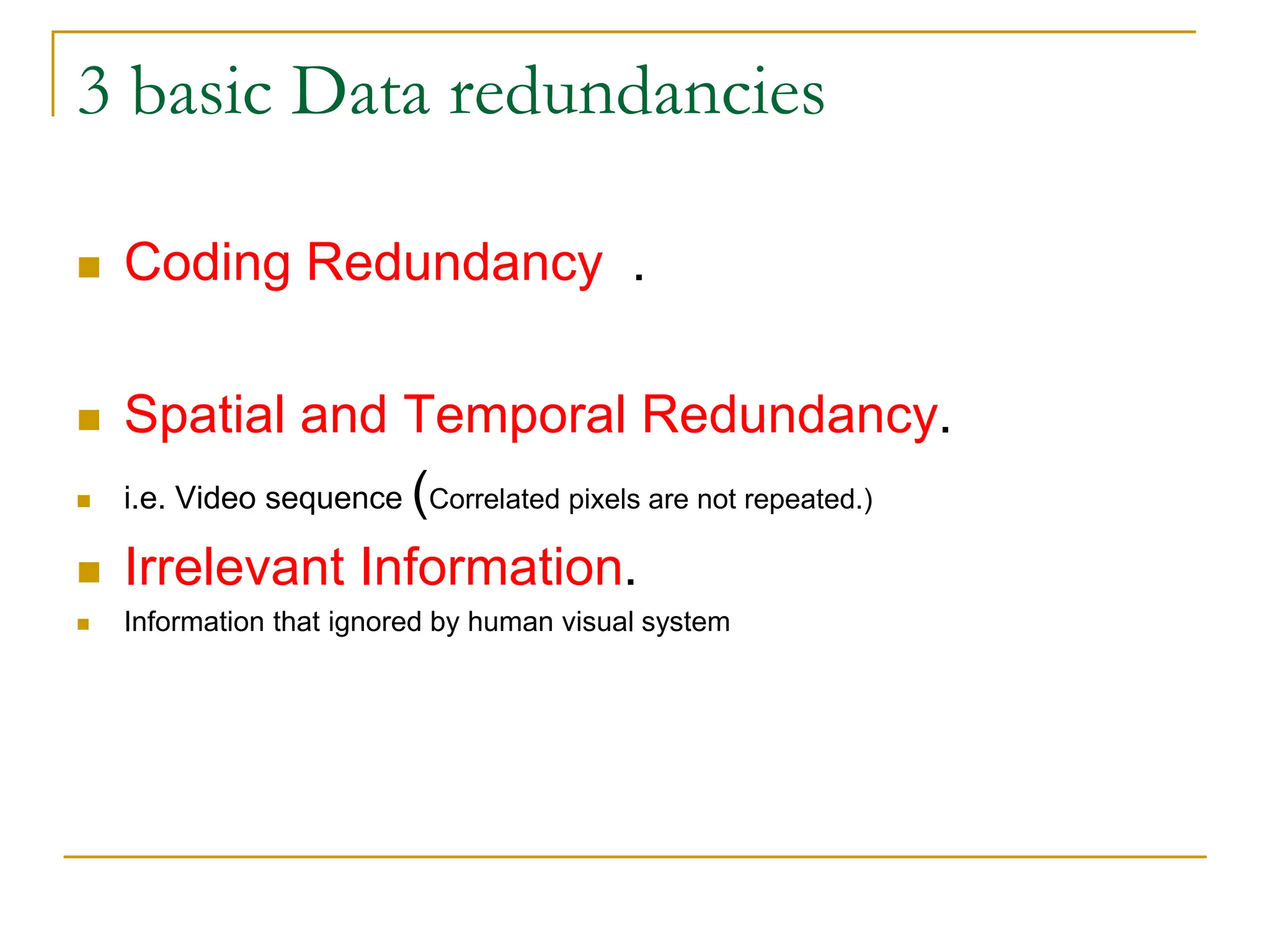

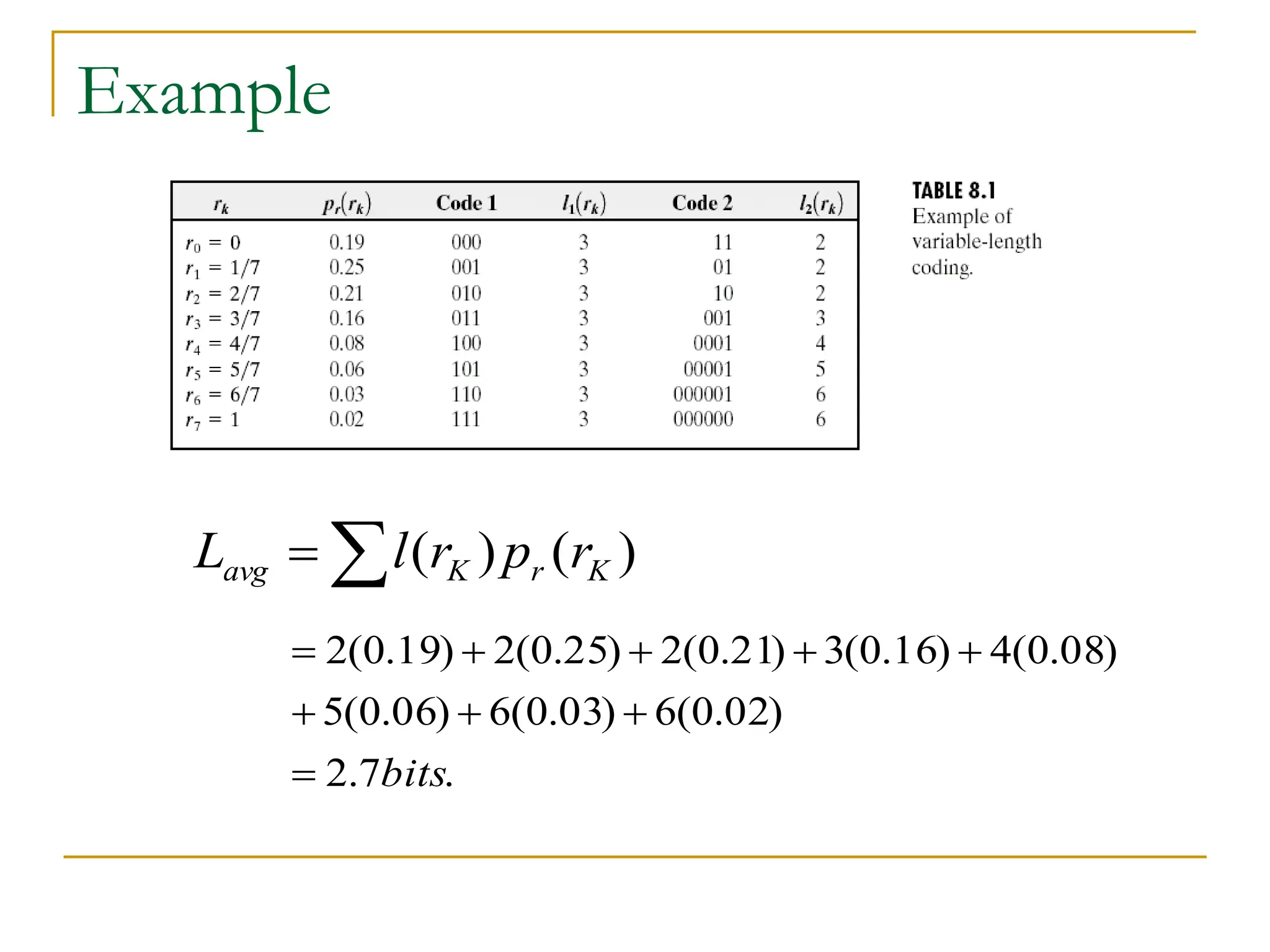

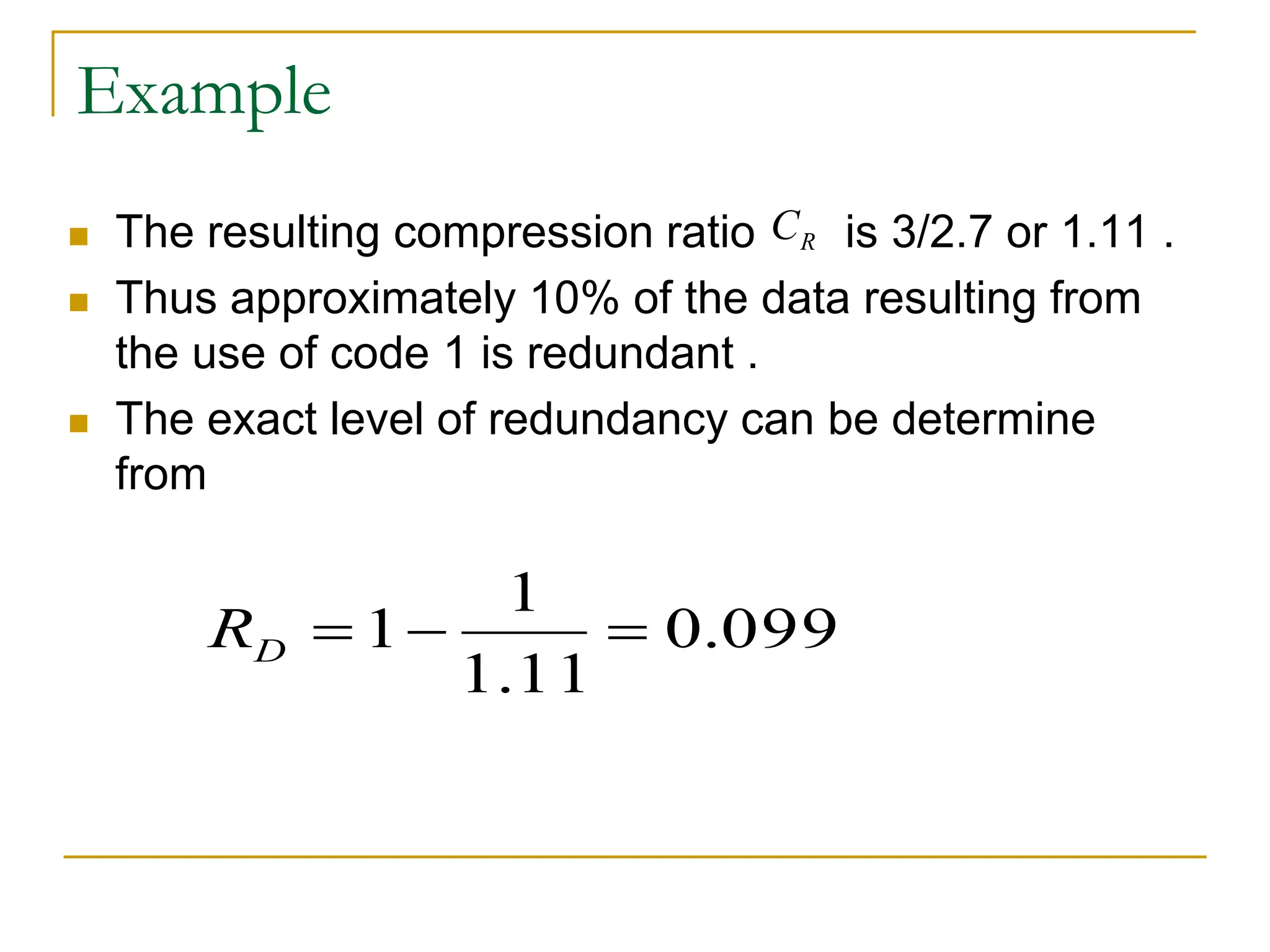

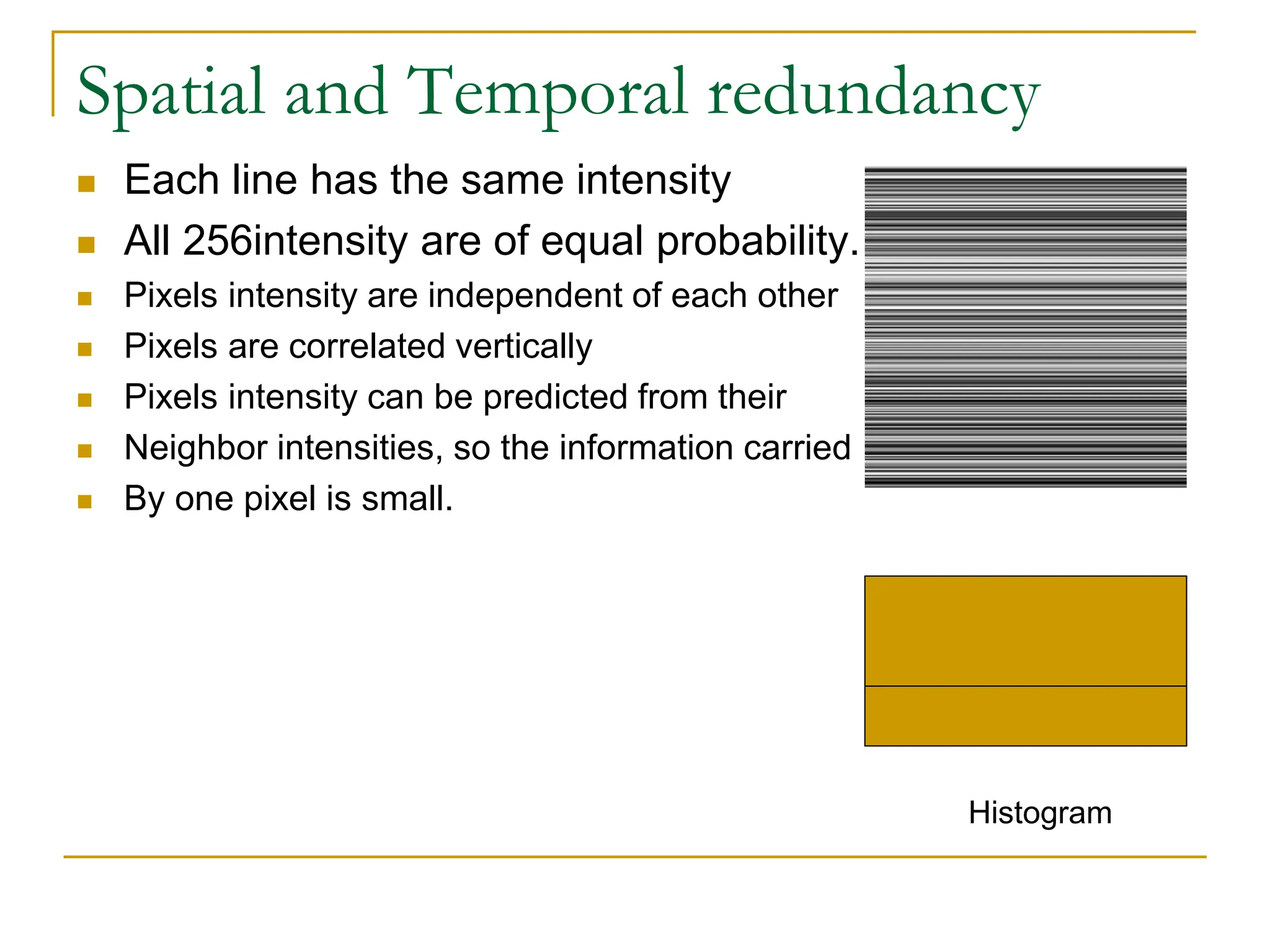

The document then discusses three main types of data redundancy that can be reduced: coding redundancy, spatial/temporal redundancy, and irrelevant information redundancy. Coding redundancy refers to inefficient coding of pixel values and can be reduced using techniques like Huffman coding or arithmetic coding. Spatial/temporal redundancy occurs from correlations between neighboring pixel values, while irrelevant information redundancy refers to data that is ignored by the human visual system.

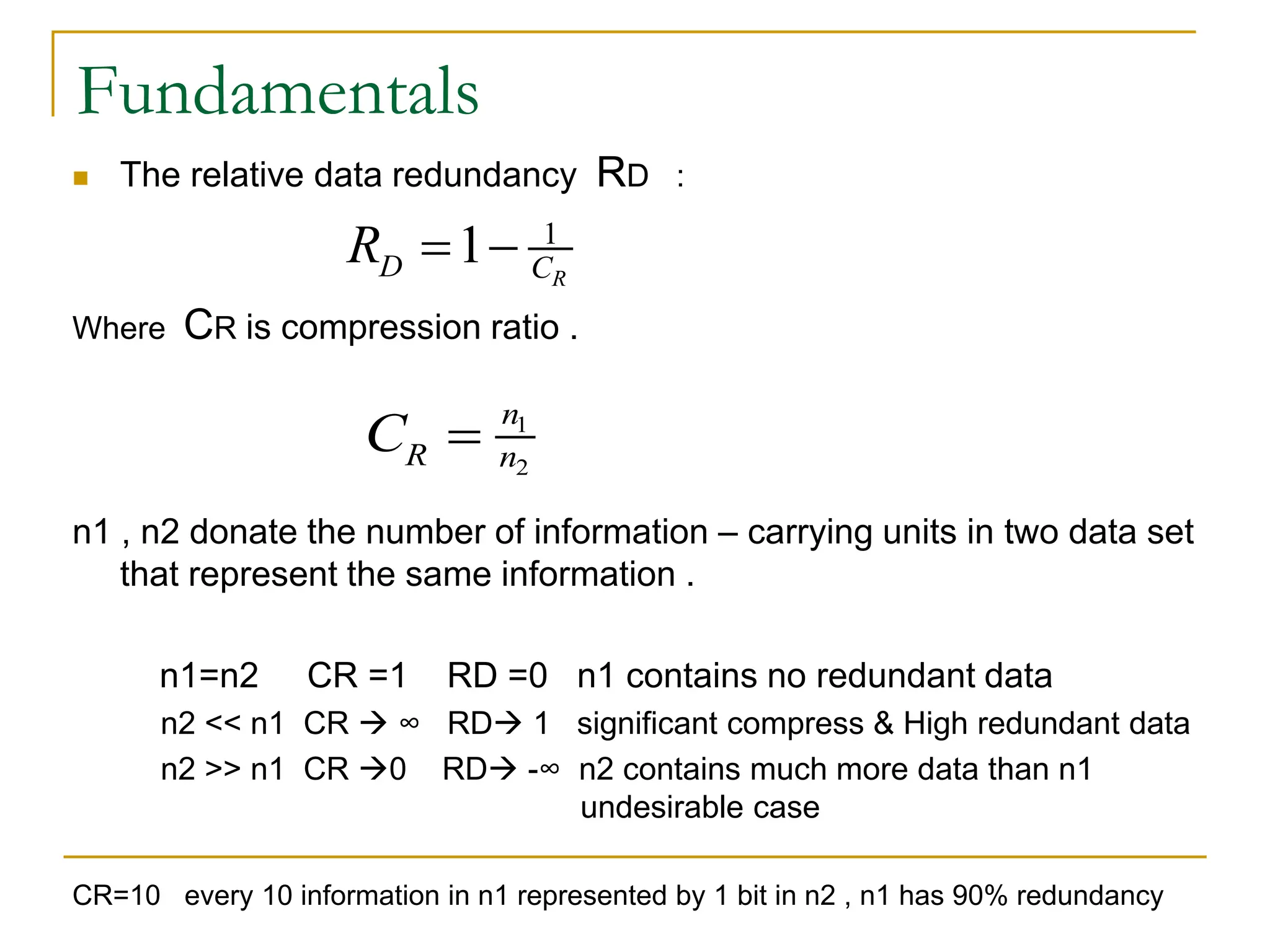

The document provides examples and formulas for measuring compression ratio, data redundancy, and estimating the information content of

![Coding Redundancy

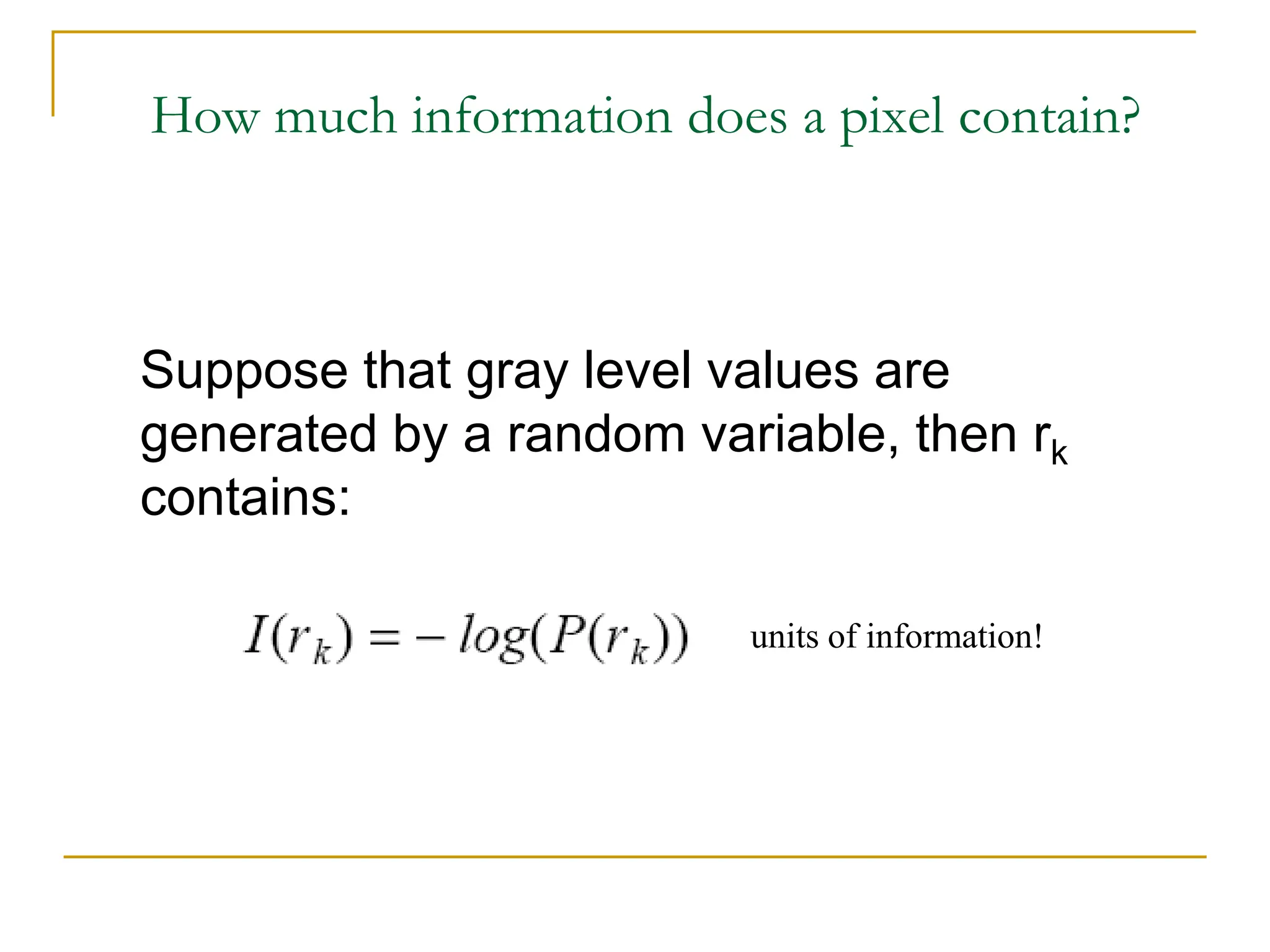

Lets assume , that a discrete random variable rK in the

interval [0 , 1] represents the gray levels of an image and

each rK occurs with probability

k=0,1,2,……L-1

Where L is the number gray levels ,

nK is the number of times that the Kth gray level appears in

image .

n is the total number of pixel in the image .

( ) K

n

r K n

P r

( )

r K

P r](https://image.slidesharecdn.com/lec8imagecompression-231015160653-5a904101/75/Lec_8_Image-Compression-pdf-6-2048.jpg)

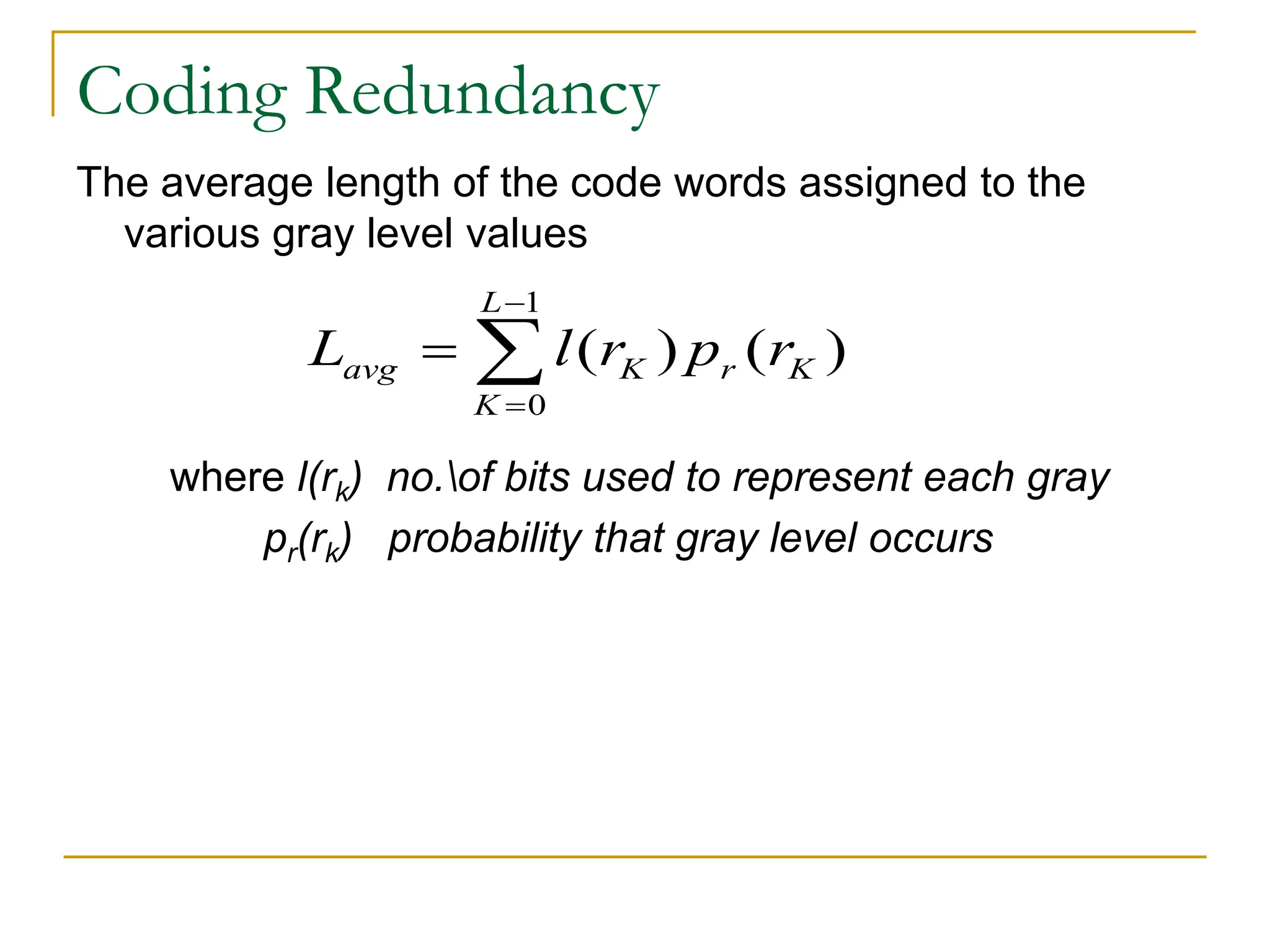

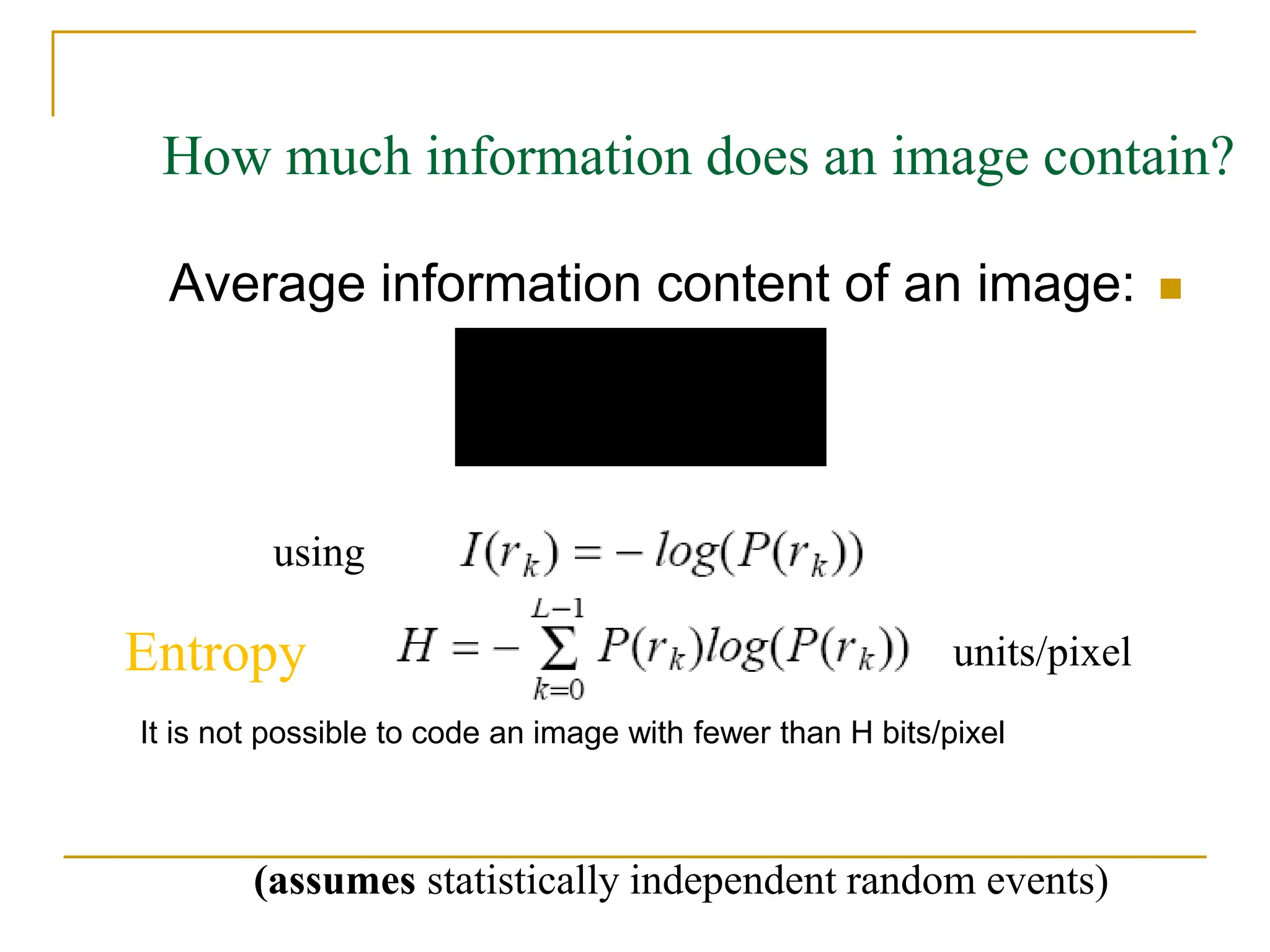

![Example:

H = -[.25 log2 0.25 +.47 log2 0.47 +

.25 log2 0.25 + .03 log2 0.03]

= [-0.25(-2) +.47(-1.09) + .25(-2) +.03(-5.06)]

= 1.6614 bits/pixel

What about H for the second type of redundancy?](https://image.slidesharecdn.com/lec8imagecompression-231015160653-5a904101/75/Lec_8_Image-Compression-pdf-17-2048.jpg)