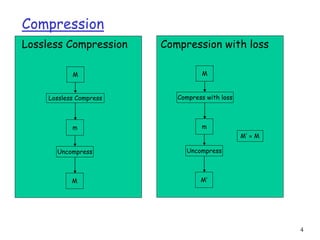

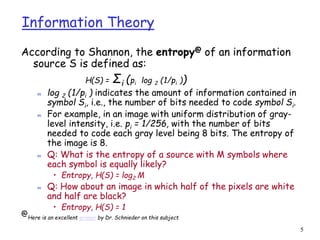

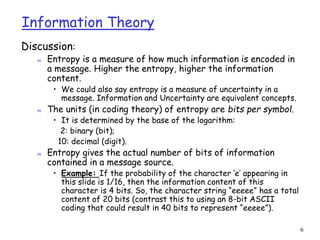

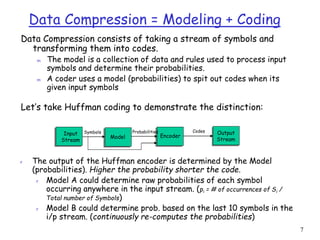

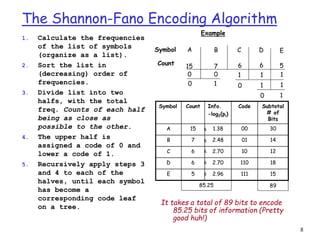

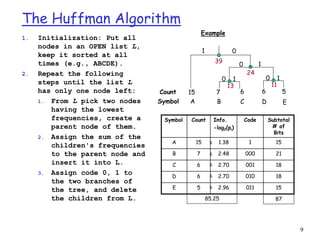

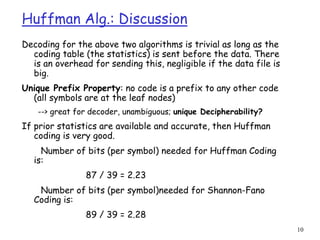

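This document discusses two lossless data compression techniques, Shannon-Fano coding and Huffman coding. Shannon-Fano coding works top-down: it sorts the symbols by frequency and recursively splits the list into two parts of roughly equal total frequency, appending a 0 to the codes in one part and a 1 to the codes in the other. Huffman coding works bottom-up: it builds a binary tree by repeatedly merging the two least frequent nodes, and each symbol's code is the path from the root to its leaf. The document also discusses entropy from information theory, which gives a lower bound on the average number of bits per symbol that such a code can achieve. Both algorithms aim to approach that bound; Huffman coding always produces an optimal prefix code, while Shannon-Fano coding does not always do so.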
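To make the comparison concrete, here is a minimal Python sketch of both algorithms together with the entropy calculation. It is an illustration under stated assumptions, not the document's own implementation: the function names (`entropy`, `huffman_codes`, `shannon_fano_codes`, `average_length`), the tie-breaking rules, and the sample frequency table are all chosen here for the example.

```python
import heapq
import math


def entropy(freqs):
    """Shannon entropy in bits per symbol for a symbol -> count mapping."""
    total = sum(freqs.values())
    return -sum((n / total) * math.log2(n / total)
                for n in freqs.values() if n > 0)


def huffman_codes(freqs):
    """Bottom-up: repeatedly merge the two least frequent nodes."""
    if len(freqs) == 1:
        return {sym: "0" for sym in freqs}
    # Heap entries are (weight, tie_breaker, {symbol: code_so_far}).
    # The unique tie_breaker keeps the dicts from ever being compared.
    heap = [(w, i, {sym: ""}) for i, (sym, w) in enumerate(freqs.items())]
    heapq.heapify(heap)
    counter = len(heap)
    while len(heap) > 1:
        w1, _, left = heapq.heappop(heap)
        w2, _, right = heapq.heappop(heap)
        # Prefix a bit: merging pushes both subtrees one level deeper.
        merged = {s: "0" + c for s, c in left.items()}
        merged.update({s: "1" + c for s, c in right.items()})
        heapq.heappush(heap, (w1 + w2, counter, merged))
        counter += 1
    return heap[0][2]


def shannon_fano_codes(freqs):
    """Top-down: sort by frequency, split into near-equal halves, recurse."""
    symbols = sorted(freqs.items(), key=lambda kv: -kv[1])
    codes = {sym: "" for sym, _ in symbols}
    if len(symbols) == 1:
        return {symbols[0][0]: "0"}

    def split(group):
        if len(group) < 2:
            return
        total = sum(w for _, w in group)
        running, best_i, best_diff = 0, 1, None
        # Pick the split point whose two halves have the closest totals.
        for i in range(1, len(group)):
            running += group[i - 1][1]
            diff = abs(total - 2 * running)
            if best_diff is None or diff < best_diff:
                best_i, best_diff = i, diff
        for sym, _ in group[:best_i]:
            codes[sym] += "0"
        for sym, _ in group[best_i:]:
            codes[sym] += "1"
        split(group[:best_i])
        split(group[best_i:])

    split(symbols)
    return codes


def average_length(freqs, codes):
    """Average code length in bits per symbol, weighted by frequency."""
    total = sum(freqs.values())
    return sum(freqs[s] * len(codes[s]) for s in freqs) / total


# Hypothetical frequency table, chosen only for illustration.
sample = {"a": 15, "b": 7, "c": 6, "d": 6, "e": 5}
print("entropy     :", entropy(sample))
print("Huffman     :", average_length(sample, huffman_codes(sample)))
print("Shannon-Fano:", average_length(sample, shannon_fano_codes(sample)))
```

On this sample table the Huffman code needs 87 bits in total and the Shannon-Fano code needs 89, against an entropy of about 2.19 bits per symbol (roughly 85.2 bits for the 39 symbols). This is a small case where the top-down split is slightly worse than the bottom-up merge. The exact code words depend on how ties are broken, but for Huffman coding the total length does not.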