This document introduces XGBoost and makes three main points:

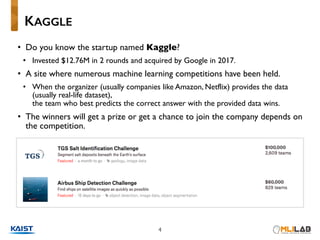

1. XGBoost is an important machine learning library that is commonly used by winners of Kaggle competitions.

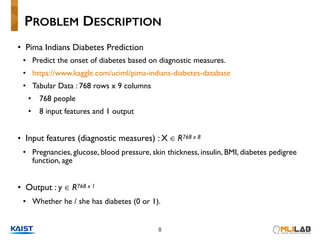

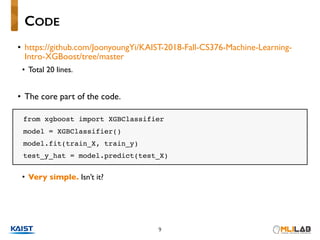

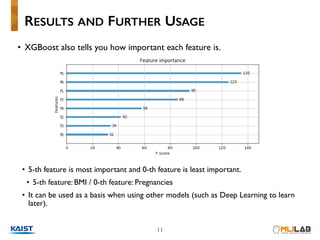

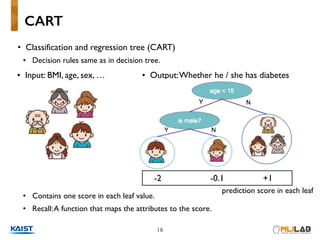

2. A quick example uses XGBoost to predict diabetes from patient data, achieving good results with only 20 lines of simple code.

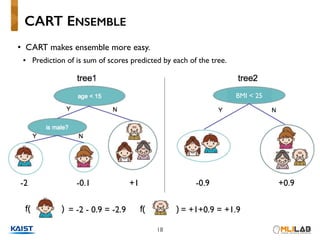

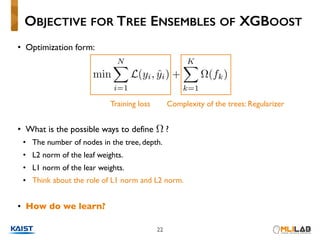

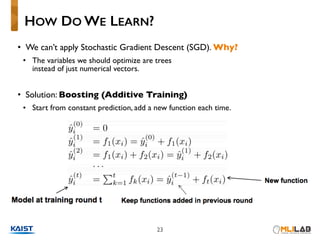

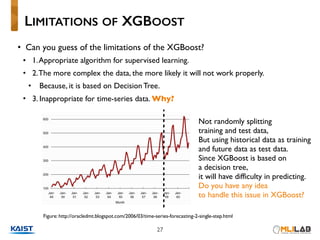

3. XGBoost works by building an ensemble of decision trees through boosting. The document explains these concepts at a high level rather than covering the algorithms in detail.
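
To make the boosting idea in point 3 concrete, here is a minimal sketch in plain Python. It is not XGBoost's implementation, which adds regularization, second-order gradient information, and many engineering optimizations. It only shows the core loop: each new tree (here a one-split "stump") is fit to the errors left by the ensemble so far, and its prediction is added with a small learning rate. All function names and the toy data are assumptions made up for illustration; they do not come from the original document.

```python
# Toy gradient boosting with decision stumps and squared-error loss.
# Illustration only: names and data are hypothetical, and this is
# not how XGBoost itself is implemented.

def fit_stump(xs, residuals):
    """Find the single threshold split that minimizes squared error."""
    best = None
    # Every distinct value except the largest is a valid threshold,
    # so both sides of the split are always non-empty.
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = (sum((r - left_mean) ** 2 for r in left)
                 + sum((r - right_mean) ** 2 for r in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return threshold, left_mean, right_mean


def predict_stump(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean


def fit_boosted(xs, ys, n_rounds=50, learning_rate=0.3):
    """Build an ensemble of stumps, each fit to the current residuals."""
    base = sum(ys) / len(ys)          # start from the mean prediction
    preds = [base] * len(xs)
    stumps = []
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        # Add a damped copy of the new stump's prediction to the ensemble.
        preds = [p + learning_rate * predict_stump(stump, x)
                 for p, x in zip(preds, xs)]
    return base, stumps, learning_rate


def predict_boosted(model, x):
    base, stumps, learning_rate = model
    return base + learning_rate * sum(predict_stump(s, x) for s in stumps)


def mean_squared_error(model, xs, ys):
    return sum((y - predict_boosted(model, x)) ** 2
               for x, y in zip(xs, ys)) / len(xs)


# Made-up one-feature data set, used only to exercise the code.
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.0, 1.2, 0.9, 3.1, 3.0, 5.2, 5.0, 5.1]

model_0 = fit_boosted(xs, ys, n_rounds=0)    # mean only, no trees
model_1 = fit_boosted(xs, ys, n_rounds=1)    # a single stump
model_50 = fit_boosted(xs, ys, n_rounds=50)  # a larger ensemble

for name, model in [("0 rounds", model_0), ("1 round", model_1), ("50 rounds", model_50)]:
    print(name, mean_squared_error(model, xs, ys))
```

Running the script prints the training error for ensembles of 0, 1, and 50 stumps; the error shrinks as more stumps are added, which is the behavior boosting relies on. A real model would also be evaluated on held-out data, since training error alone says nothing about overfitting.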