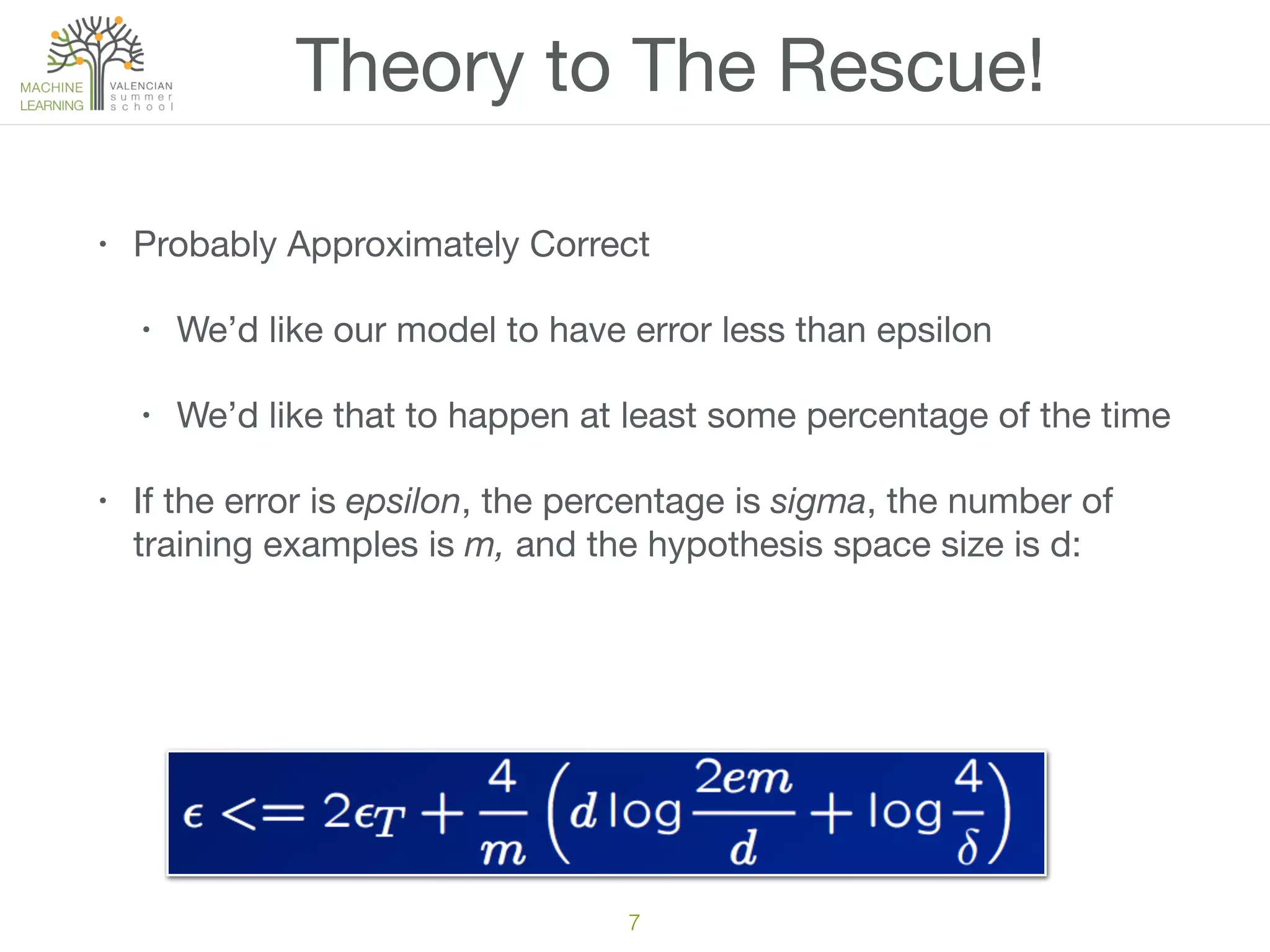

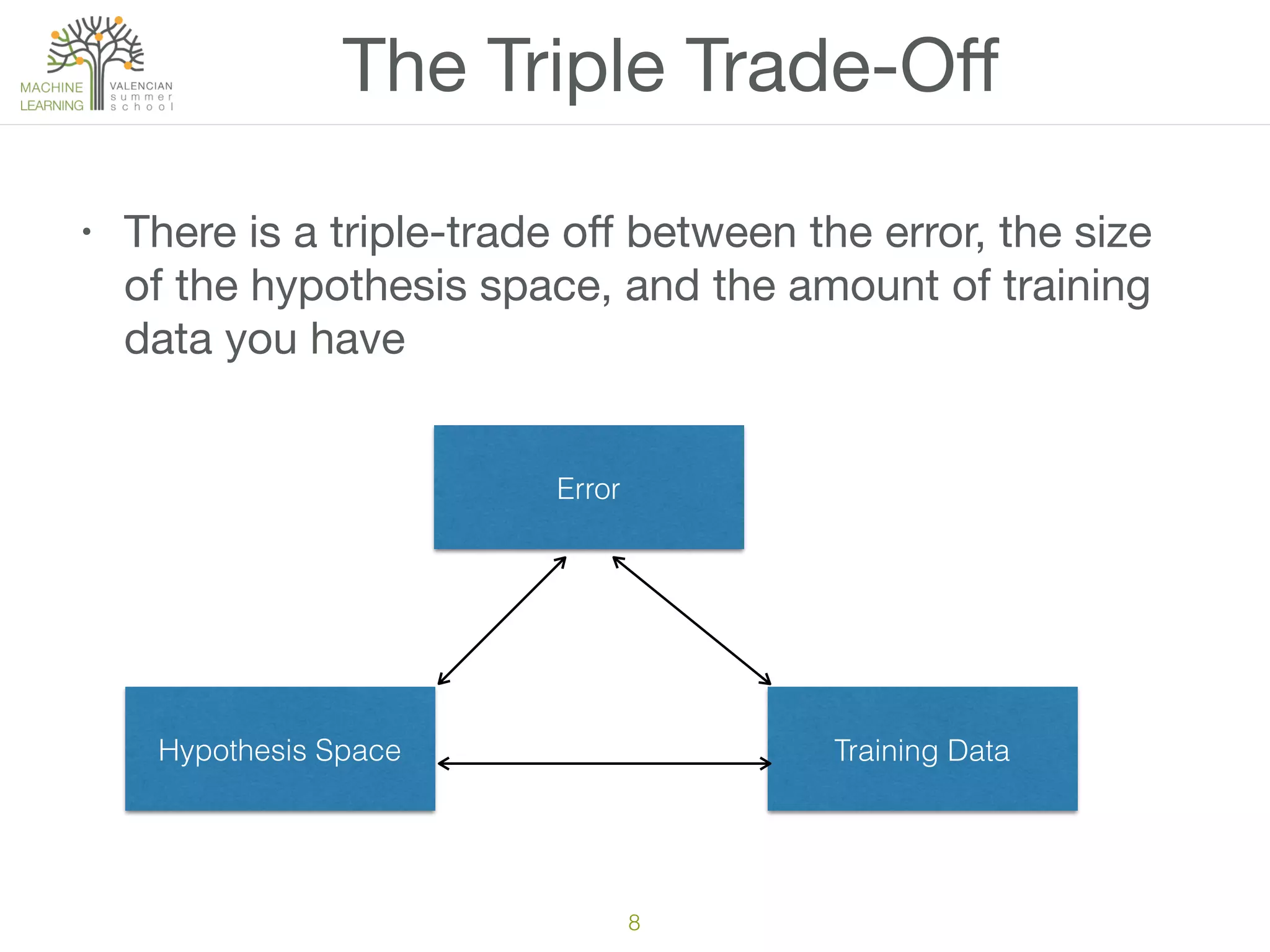

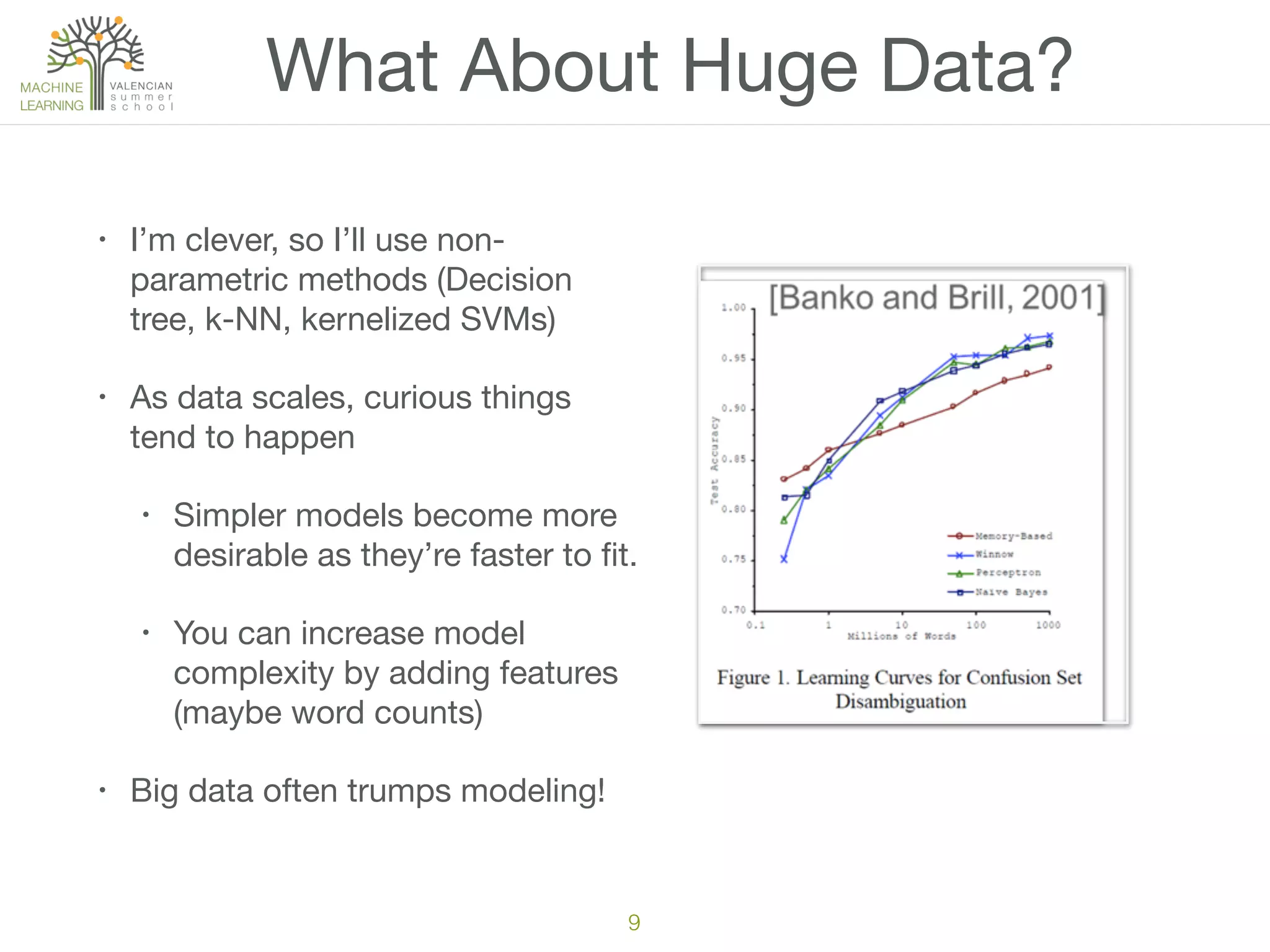

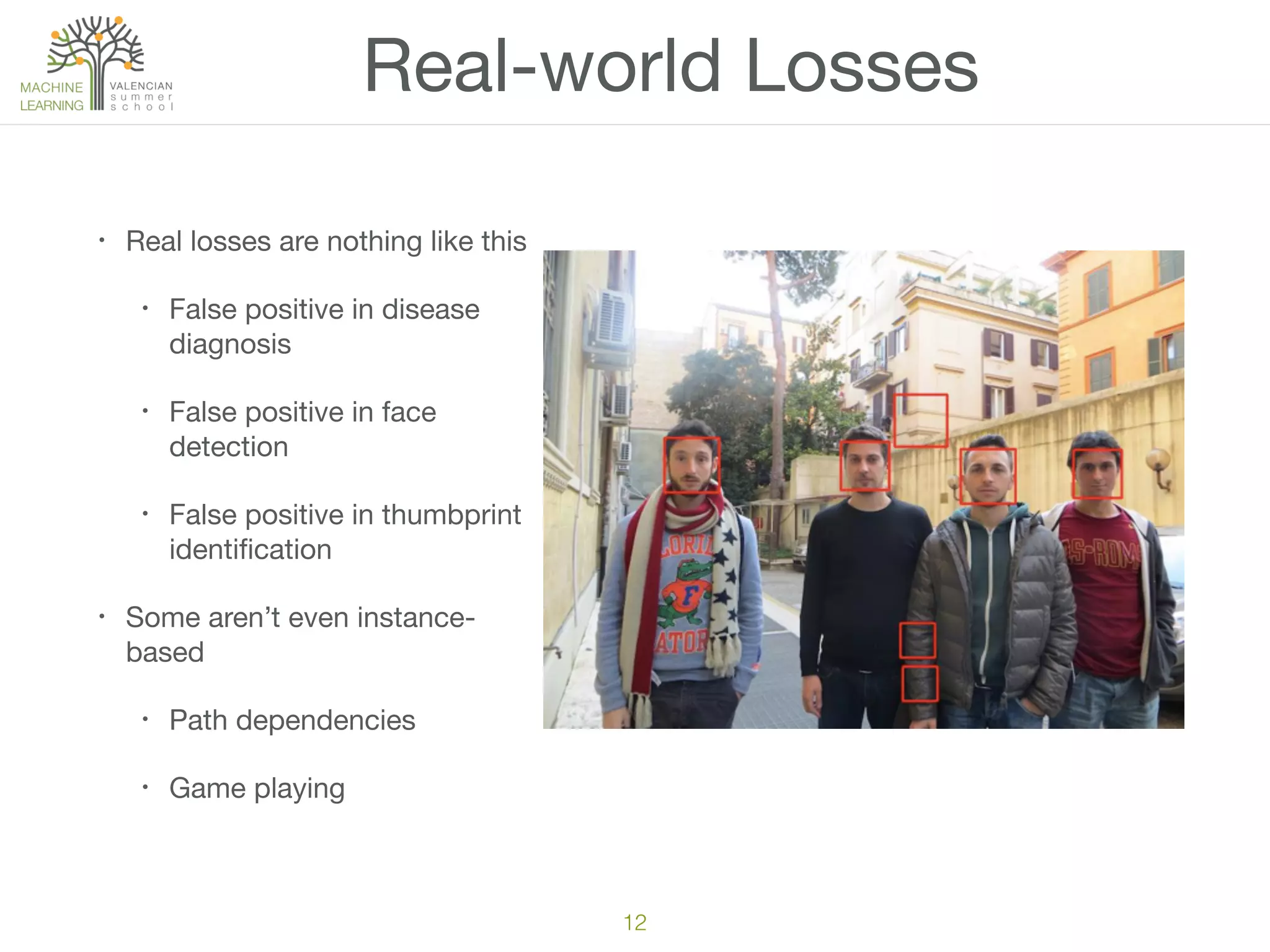

The document discusses the challenges and pitfalls of machine learning projects. It emphasizes how hard it is to get reliable outcomes in real-world settings because of factors such as the choice of hypothesis space, the choice of loss function, and data drift. It cautions against over-reliance on academic research, since models that look good in theory often do not carry over well to practical applications. The overall message is to approach machine learning with a mix of skepticism and optimism, recognizing both its complexity and its limitations.