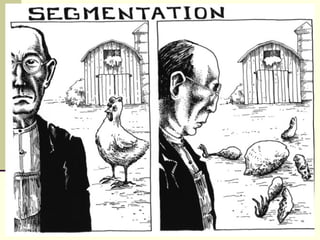

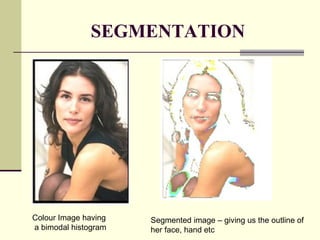

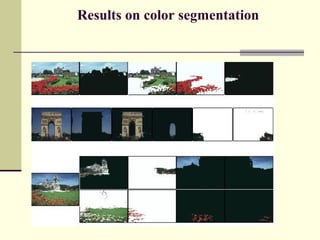

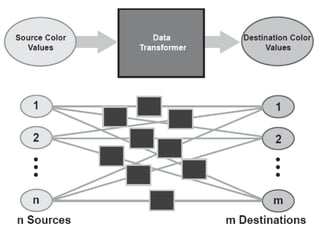

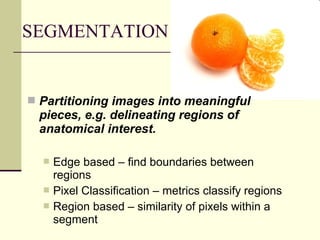

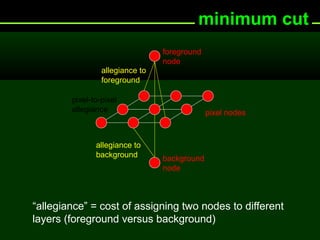

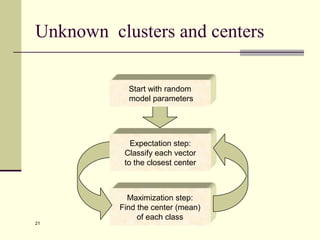

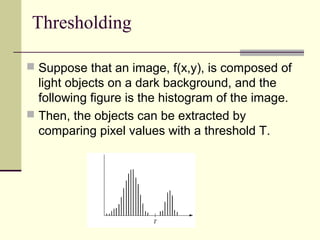

This document describes a research project on a computer vision system for robots that segments objects from a variety of natural backgrounds. It outlines several segmentation methods, reports their evaluation in the RGB color space, and introduces a pixel-based color segmentation approach that aims to separate foreground objects from the background in images. The findings indicate that this approach can address common segmentation challenges such as pose changes and occlusion.
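
To make the idea of pixel-based color segmentation in RGB space concrete, here is a minimal sketch. It is not the method from the project itself, whose details are not given in this summary. It assumes a simple rule: a pixel is foreground if its Euclidean distance in RGB space from a single reference background color exceeds a threshold. The function name `segment_foreground`, the reference color, and the threshold value are all hypothetical choices made for illustration.

```python
from math import dist

def segment_foreground(image, background_rgb, threshold=60.0):
    """Classify each pixel independently as foreground (True) or background (False).

    image: list of rows, each row a list of (R, G, B) tuples with values 0-255.
    background_rgb: assumed reference color of the background (hypothetical input).
    threshold: assumed RGB distance above which a pixel counts as foreground.
    """
    return [
        [dist(pixel, background_rgb) > threshold for pixel in row]
        for row in image
    ]

# Tiny illustrative image: a greenish background with two reddish object pixels.
image = [
    [(35, 150, 45), (200, 30, 30), (30, 160, 40)],
    [(28, 155, 38), (210, 25, 35), (33, 158, 42)],
]
mask = segment_foreground(image, background_rgb=(30, 160, 40))
```

Because each pixel is classified on its own color alone, a rule of this kind does not depend on the object's shape or orientation. A single fixed reference color is a strong simplification, though, and natural backgrounds would usually need a richer color model.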