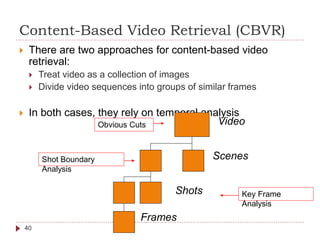

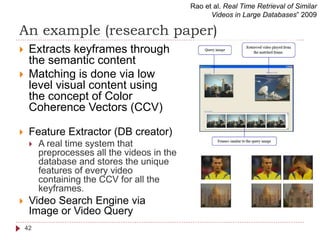

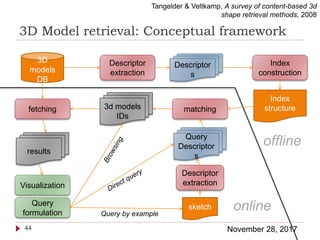

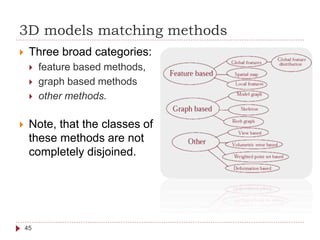

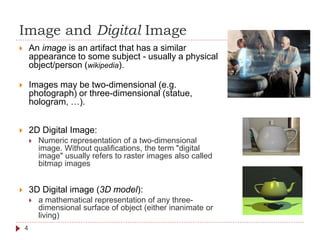

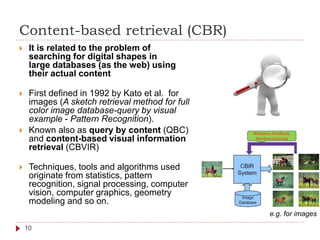

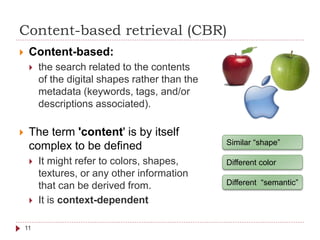

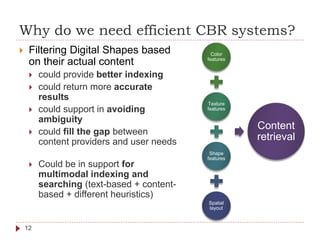

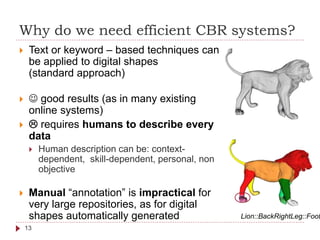

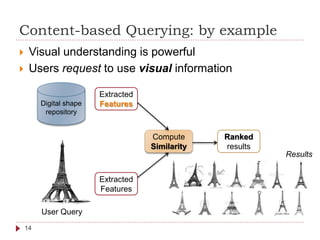

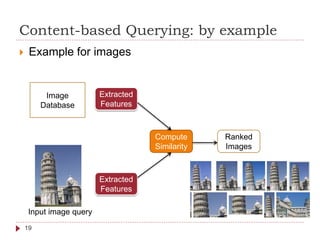

The document provides an overview of content-based retrieval (CBR) systems for digital shapes, images, videos, and 3D models. It explains why efficient CBR methods matter for searching and indexing digital content and emphasizes the relation between visual features and semantic understanding. The outline covers querying techniques, feature extraction, and the challenges specific to video and 3D model retrieval, and it points to ongoing research opportunities in the field.

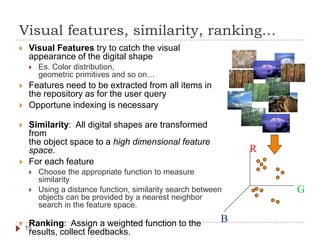

Similarity measures for images

All images are transformed from the object space into a high-dimensional feature space. In this space every image is a point whose coordinates represent its feature characteristics, so similar images are "near" each other. The definition of an appropriate distance function is therefore crucial for the success of the feature transformation.

Some examples of distance metrics are:

- the Euclidean distance [Niblack 1993],
- the Manhattan distance [Stricker and Orengo 1995], i.e. the distance between two points measured along axes at right angles,
- the maximum norm [Stricker and Orengo 1995],
- the quadratic-form distance [Hafner et al. 1995],
- the Earth Mover's Distance [Rubner, Tomasi, and Guibas 2000],
- deformation models [Keysers et al. 2007b].

![Similarity measures for images](https://image.slidesharecdn.com/multimedia-searching08-171128111514/85/Multimedia-searching-21-320.jpg)
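The first step on the slide, mapping an image from object space into a feature space, can be illustrated with a simple global color histogram. This is a minimal sketch, not the feature extraction used in the slides: it assumes RGB pixels with 8-bit channels, and the function name `color_histogram` and its `levels` parameter are hypothetical. Each channel is quantized into `levels` ranges, so every image becomes one point in a `levels**3`-dimensional space.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]  # (r, g, b), each assumed to be in 0..255


def color_histogram(pixels: Sequence[Pixel], levels: int = 2) -> List[float]:
    """Map an image (a list of RGB pixels) to a normalized levels**3-bin histogram.

    Hypothetical helper for illustration: a global color histogram is one
    simple choice of feature transformation, not the only one.
    """
    if not pixels:
        raise ValueError("image has no pixels")
    hist = [0.0] * (levels ** 3)
    for r, g, b in pixels:
        # Quantize each 8-bit channel into `levels` equal-width ranges.
        qr, qg, qb = (c * levels // 256 for c in (r, g, b))
        hist[(qr * levels + qg) * levels + qb] += 1
    # Normalize so that images of different sizes are comparable.
    return [count / len(pixels) for count in hist]


# A tiny made-up "image": two reddish pixels, one blue pixel, one black pixel.
image = [(255, 0, 0), (250, 10, 5), (0, 0, 255), (0, 0, 0)]
print(color_histogram(image))
# prints: [0.25, 0.25, 0.0, 0.0, 0.5, 0.0, 0.0, 0.0]
```

Richer descriptors (texture, shape, local features) follow the same pattern: whatever the extractor, its output is a fixed-length vector on which a distance function can operate.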
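Once images are points in a feature space, three of the listed metrics (Euclidean, Manhattan, and the maximum norm) are the L2, L1, and L-infinity distances between feature vectors, and "similar images are near" becomes a nearest-neighbour ranking. A minimal sketch, assuming features are plain lists of floats; the helper names and the three-bin histograms are invented for illustration and do not come from the cited papers.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

Vector = Sequence[float]


def _check_dims(x: Vector, y: Vector) -> None:
    if len(x) != len(y):
        raise ValueError("feature vectors must have the same dimension")


def euclidean(x: Vector, y: Vector) -> float:
    """L2 distance: square root of the sum of squared coordinate differences."""
    _check_dims(x, y)
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))


def manhattan(x: Vector, y: Vector) -> float:
    """L1 distance: sum of absolute differences measured along each axis."""
    _check_dims(x, y)
    return sum(abs(a - b) for a, b in zip(x, y))


def maximum_norm(x: Vector, y: Vector) -> float:
    """L-infinity distance: the largest absolute difference on any single axis."""
    _check_dims(x, y)
    return max(abs(a - b) for a, b in zip(x, y))


def rank_by_similarity(
    query: Vector,
    database: Dict[str, Vector],
    distance: Callable[[Vector, Vector], float],
) -> List[Tuple[str, float]]:
    """Return (image name, distance) pairs, nearest (most similar) first."""
    scored = [(name, distance(query, vec)) for name, vec in database.items()]
    return sorted(scored, key=lambda item: item[1])


# Made-up normalized 3-bin color histograms (share of red, green, blue).
database = {
    "sunset": [0.7, 0.2, 0.1],
    "forest": [0.1, 0.7, 0.2],
    "ocean": [0.1, 0.2, 0.7],
}
query = [0.6, 0.3, 0.1]
for metric in (euclidean, manhattan, maximum_norm):
    # For this query every metric ranks "sunset" first.
    print(metric.__name__, rank_by_similarity(query, database, metric)[0][0])
```

The metrics agree on this toy data, but they weigh differences differently: L1 sums every per-bin difference, while the maximum norm looks only at the single worst bin, so on real collections they can rank candidates differently.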
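The quadratic-form distance associated with Hafner et al. is commonly written as d_A(x, y) = sqrt((x - y)^T A (x - y)), where the matrix A encodes how similar bin i is to bin j, so mass in perceptually close bins (say red and orange) is not treated as a total mismatch. A sketch under stated assumptions: A is symmetric and positive semi-definite, and the bin labels and the 0.8 similarity value are made up for illustration. With A equal to the identity matrix the formula reduces to the Euclidean distance.

```python
import math
from typing import Sequence

Matrix = Sequence[Sequence[float]]


def quadratic_form_distance(x: Sequence[float], y: Sequence[float], a: Matrix) -> float:
    """Return sqrt((x - y)^T A (x - y)) for histograms x, y and bin-similarity matrix A."""
    n = len(x)
    if len(y) != n or len(a) != n or any(len(row) != n for row in a):
        raise ValueError("x, y and A must have matching dimensions")
    d = [xi - yi for xi, yi in zip(x, y)]
    # Cross terms d[i] * a[i][j] * d[j] let differences in similar bins partly cancel.
    value = sum(d[i] * a[i][j] * d[j] for i in range(n) for j in range(n))
    # A positive semi-definite A gives value >= 0; clamp tiny rounding errors.
    return math.sqrt(max(value, 0.0))


# Hypothetical 3-bin histograms with bins ordered (red, orange, blue).
red, orange, blue = [1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
# Assumed similarity: red and orange bins are 0.8 similar, blue is unrelated.
similar = [[1.0, 0.8, 0.0], [0.8, 1.0, 0.0], [0.0, 0.0, 1.0]]

# Identity matrix: red-orange and red-blue are both sqrt(2) apart.
print(quadratic_form_distance(red, orange, identity), quadratic_form_distance(red, blue, identity))
# Cross-bin similarity: red-orange shrinks to about 0.63, red-blue stays sqrt(2).
print(quadratic_form_distance(red, orange, similar), quadratic_form_distance(red, blue, similar))
```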
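The Earth Mover's Distance of Rubner, Tomasi, and Guibas measures the minimum cost of moving histogram mass from one distribution to the other, which in general requires solving a transportation problem. The sketch here covers only a special case that fits in a few lines: one-dimensional histograms with equal total mass and a ground distance of |i - j| between bins i and j, where the minimal work equals the sum of absolute differences between the two cumulative histograms. The function name `emd_1d` is hypothetical, and the example histograms are made up.

```python
from itertools import accumulate
from typing import Sequence


def emd_1d(x: Sequence[float], y: Sequence[float]) -> float:
    """EMD between two 1-D histograms with equal total mass and ground distance |i - j|."""
    if len(x) != len(y):
        raise ValueError("histograms must have the same number of bins")
    total = sum(x)
    if total <= 0 or abs(total - sum(y)) > 1e-9:
        raise ValueError("histograms must have equal, positive total mass")
    # Minimal transport work in 1-D: net mass that must cross each bin boundary.
    work = sum(abs(cx - cy) for cx, cy in zip(accumulate(x), accumulate(y)))
    # Rubner et al. normalize the work by the total flow (here, the total mass).
    return work / total


a = [1.0, 0.0, 0.0, 0.0]
b = [0.0, 1.0, 0.0, 0.0]  # same mass, shifted by one bin
c = [0.0, 0.0, 0.0, 1.0]  # same mass, shifted by three bins
print(emd_1d(a, b), emd_1d(a, c))  # prints: 1.0 3.0
```

A bin-by-bin Manhattan distance scores both pairs as 2.0 because it ignores how far the mass moved; the EMD separates them, which is why it suits histograms whose bins have a meaningful ordering or ground distance.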