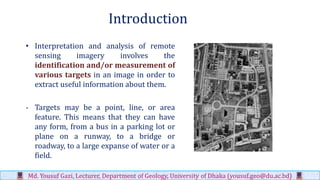

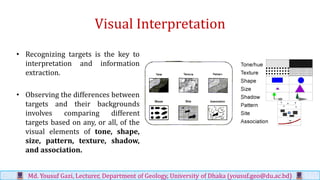

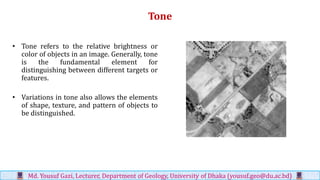

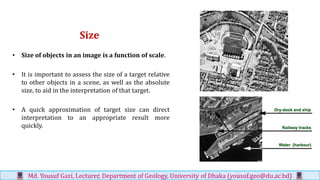

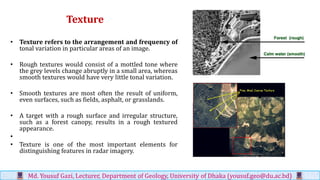

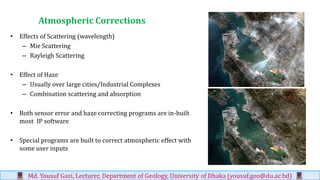

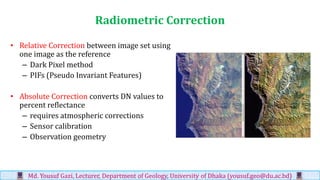

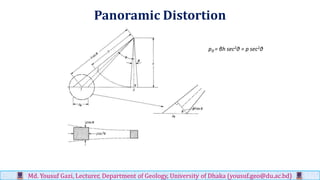

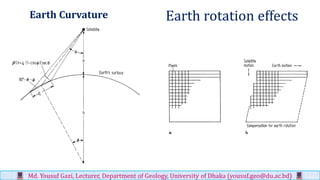

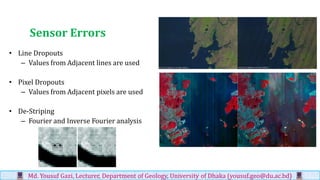

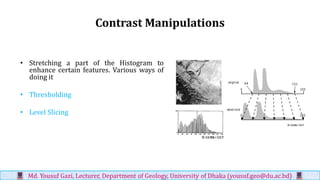

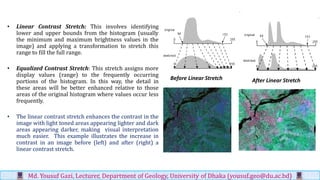

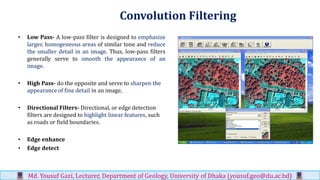

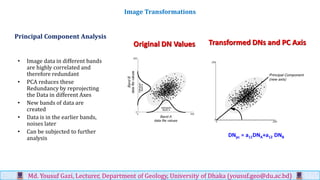

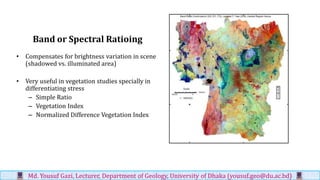

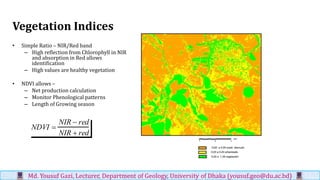

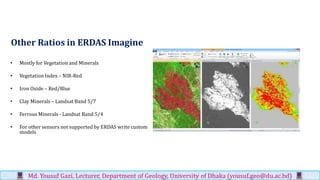

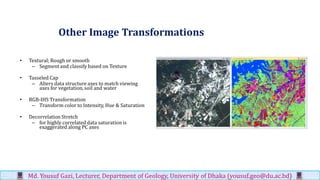

The document discusses techniques for extracting information from remote sensing imagery, both through visual interpretation and through digital processing. It outlines visual image interpretation using elements such as tone, shape, size, pattern, texture, shadow, and association. It also covers digital image processing, including pre-processing steps such as radiometric and geometric correction, and enhancement methods such as filtering, transformations, and indices. The goal is to turn the imagery into information that can be used for analysis.
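To make two of the enhancement methods mentioned above concrete, here is a minimal sketch in plain Python. The summary does not name a specific index or filter, so both choices are assumptions made for illustration: the Normalized Difference Vegetation Index (NDVI) stands in for "indices", and a 3x3 mean (low-pass) filter stands in for "filtering". The function names `ndvi` and `mean_filter_3x3` are hypothetical, and images are represented as nested lists of pixel values rather than real raster data.

```python
def ndvi(nir, red):
    """Per-pixel NDVI: (NIR - Red) / (NIR + Red).

    `nir` and `red` are equally sized 2D lists of reflectance values.
    Pixels where NIR + Red is zero are assigned 0.0 (an assumption made
    here to avoid division by zero).
    """
    result = []
    for nir_row, red_row in zip(nir, red):
        row = []
        for n, r in zip(nir_row, red_row):
            total = n + r
            row.append((n - r) / total if total != 0 else 0.0)
        result.append(row)
    return result


def mean_filter_3x3(image):
    """Smooth a 2D list with a 3x3 mean filter.

    Edge and corner pixels are averaged over only the neighbours that
    exist inside the image (no padding).
    """
    rows, cols = len(image), len(image[0])
    out = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            window = [
                image[y][x]
                for y in range(max(0, i - 1), min(rows, i + 2))
                for x in range(max(0, j - 1), min(cols, j + 2))
            ]
            out[i][j] = sum(window) / len(window)
    return out


if __name__ == "__main__":
    # Tiny made-up bands, purely for demonstration.
    nir_band = [[0.5, 0.6], [0.4, 0.0]]
    red_band = [[0.1, 0.2], [0.4, 0.0]]
    print(ndvi(nir_band, red_band))

    noisy = [[1, 1, 1], [1, 10, 1], [1, 1, 1]]
    print(mean_filter_3x3(noisy))
```

In practice these operations would normally be run on array data with a raster library; the nested-list version is only meant to show the arithmetic behind each step.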