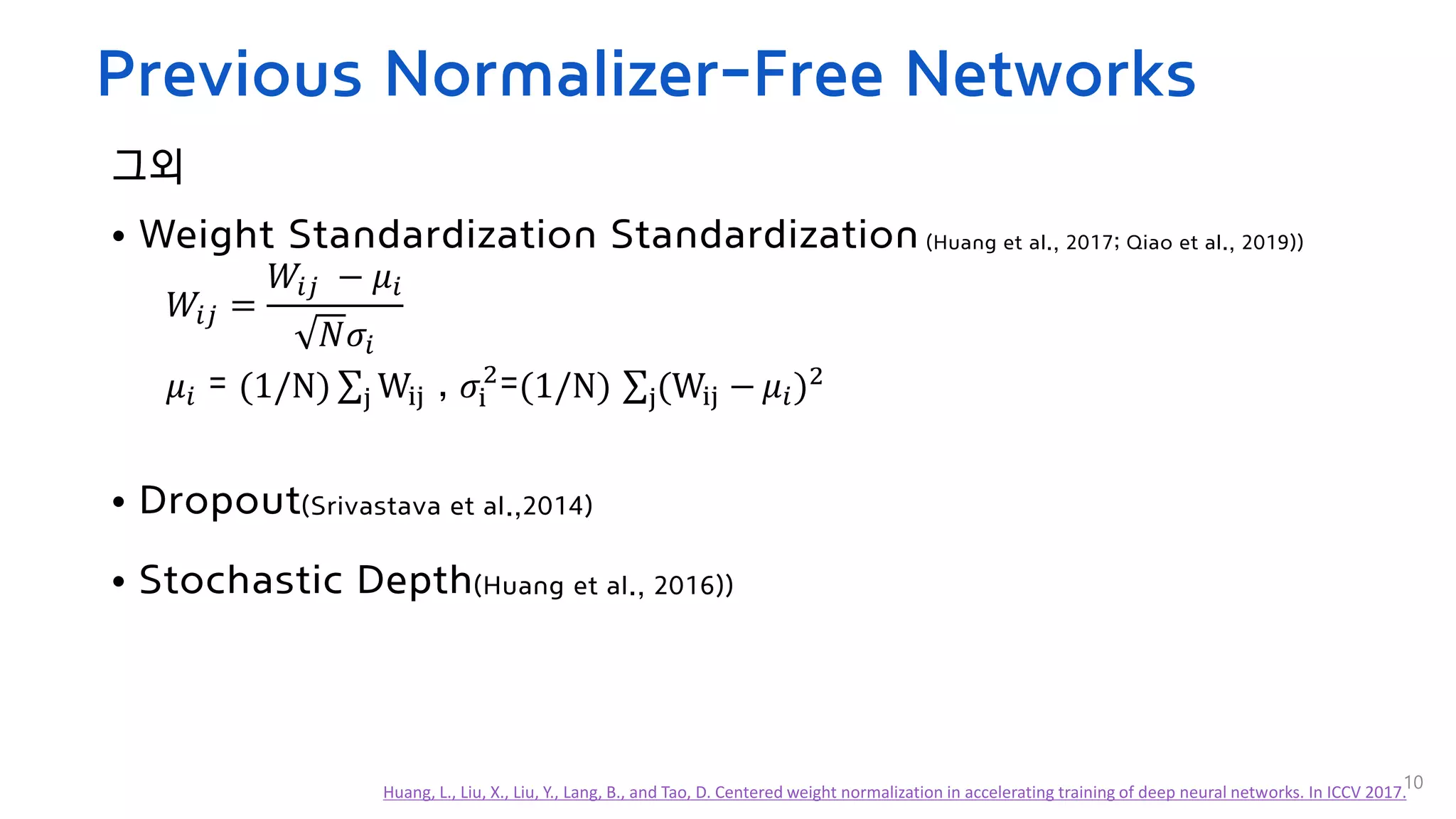

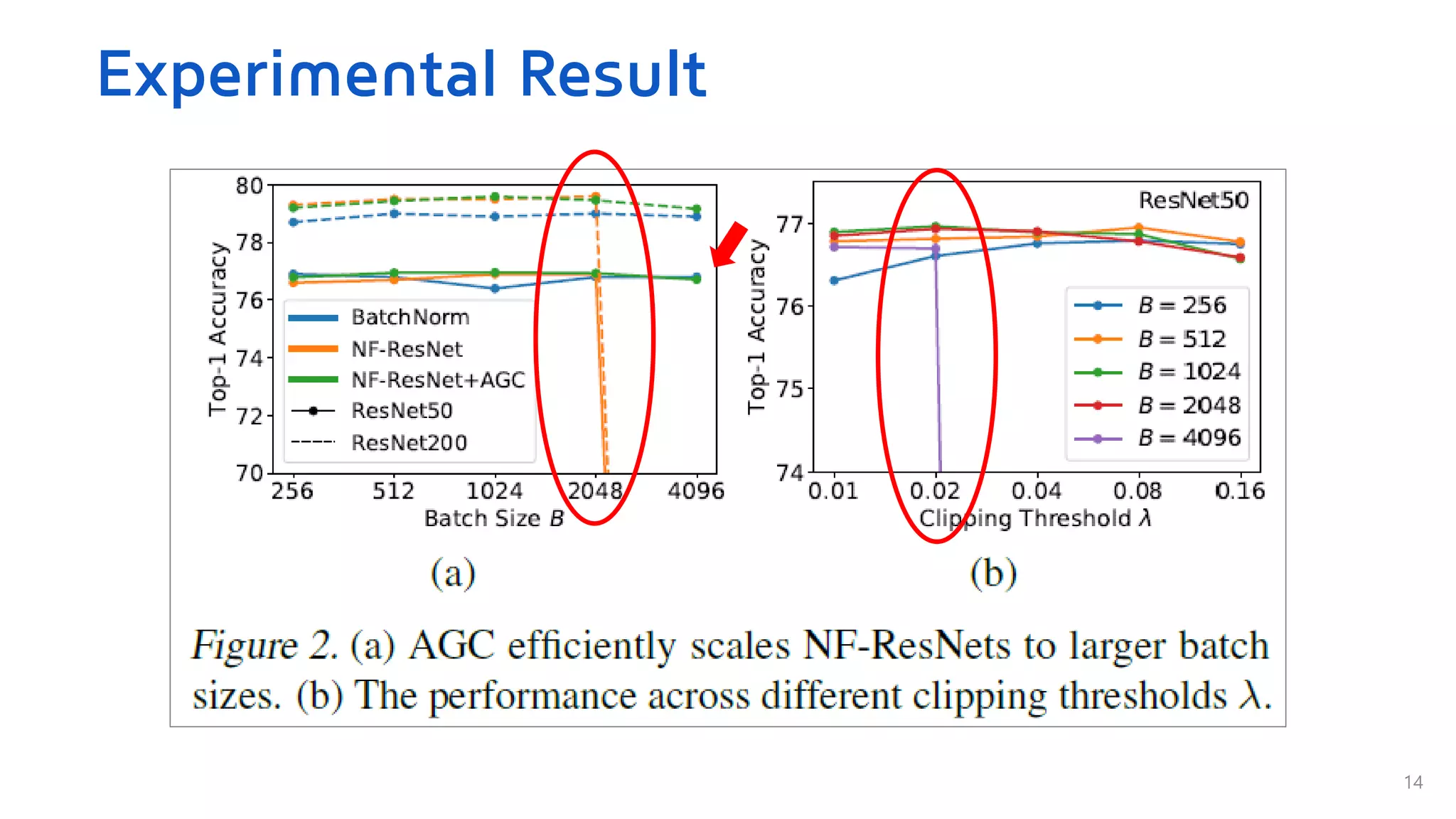

DeepMind's research presents a method for high-performance, large-scale image recognition on ImageNet without batch normalization, reaching a state-of-the-art top-1 accuracy of 86.5%. The proposed adaptive gradient clipping (AGC) technique enables stable training at larger batch sizes, and the resulting models match the accuracy of EfficientNet-B7 while training up to 8.7x faster. The study shows that normalization-free networks can be trained effectively, and that they reach 89.2% top-1 accuracy when fine-tuned on ImageNet after pretraining on 300 million labeled images.

![Performance

• Matches the test accuracy of EfficientNet-B7 on ImageNet while being up to 8.7x faster to train

• New state-of-the-art top-1 accuracy of 86.5%

• After fine-tuning on ImageNet following pretraining on 300 million labeled images, it achieves 89.2% top-1 accuracy

• Image Classification on ImageNet [1]

[1] https://paperswithcode.com/sota/image-classification-on-imagenet](https://image.slidesharecdn.com/high-performancelarge-scaleimagerecognitionwithoutnormalization-210329070952/75/High-performance-large-scale-image-recognition-without-normalization-3-2048.jpg)

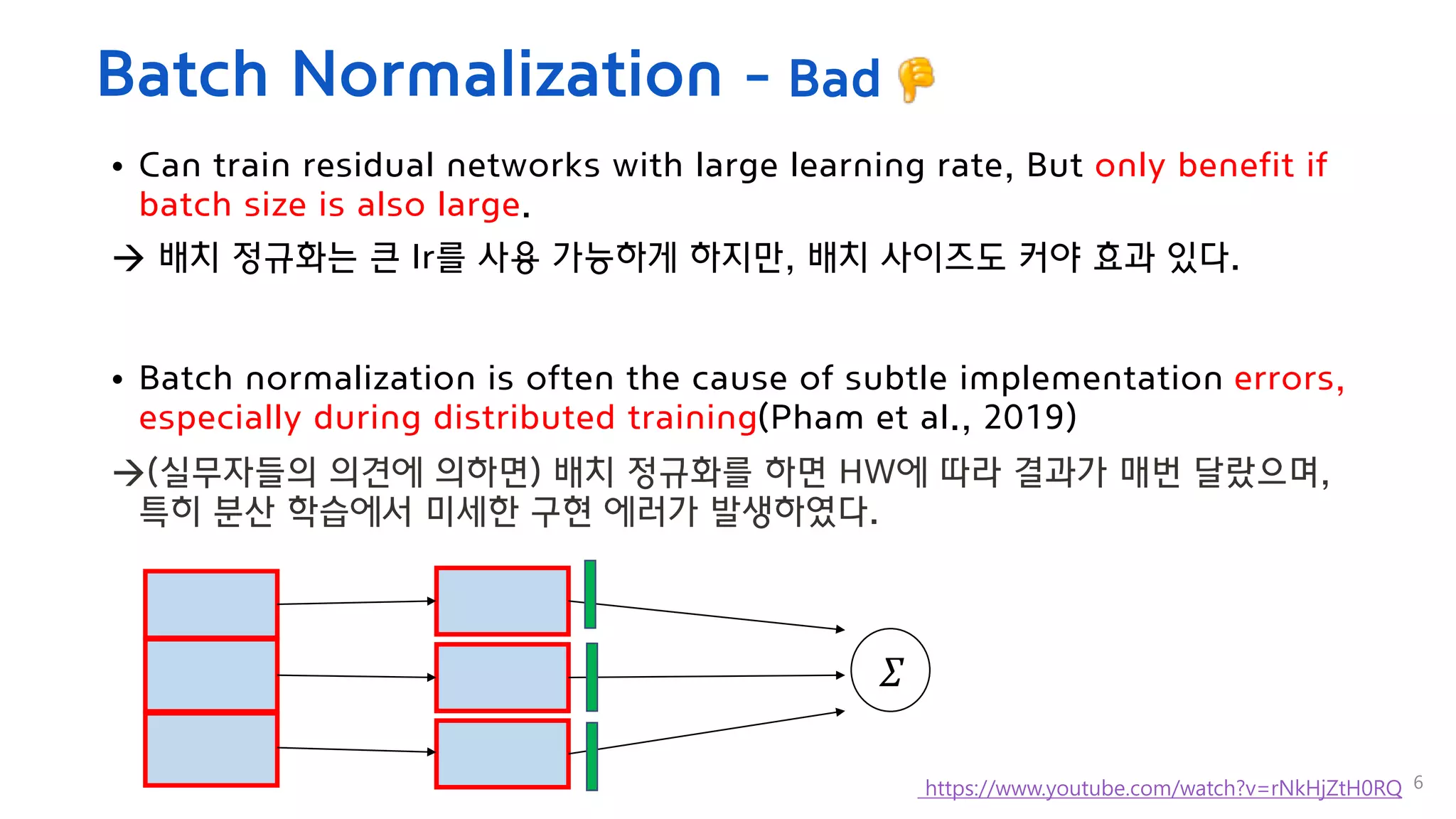

![Batch Normalization (previous knowledge)

• The change in the distribution of each layer's inputs during training is called "internal covariate shift" [2]

Effects of Batch Normalization

· Downscales the residual branch

· Regularizing effect

· Eliminates mean-shift

· Efficient large-batch training

[2] Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift

http://cs231n.stanford.edu/slides/2018/cs231n_2018_lecture07.pdf

Train

Also calculate the exponential moving average of the mean and variance, where $\mu_{\beta}$ and $\sigma_{\beta}^2$ are the statistics of the current mini-batch:

$\mu \leftarrow (1 - \alpha)\mu + \alpha\mu_{\beta}$

$\sigma^2 \leftarrow (1 - \alpha)\sigma^2 + \alpha\sigma_{\beta}^2$

Test

$\mathrm{BN}_{\text{test}}(x) = \dfrac{x - \mu}{\sqrt{\sigma^2 + \epsilon}}$](https://image.slidesharecdn.com/high-performancelarge-scaleimagerecognitionwithoutnormalization-210329070952/75/High-performance-large-scale-image-recognition-without-normalization-4-2048.jpg)
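
The train/test split on the slide above can be sketched in a few lines of plain Python. This is a minimal illustration for a single scalar feature, not the code of any particular framework: the function names, the initial running statistics (0 and 1), `alpha=0.1`, and `eps=1e-5` are all assumptions chosen for the example, and the learnable scale and shift are left out because the slide omits them.

```python
import math

def batch_stats(batch):
    """Mean and (biased) variance of a list of scalars."""
    n = len(batch)
    mean = sum(batch) / n
    var = sum((x - mean) ** 2 for x in batch) / n
    return mean, var

def update_running(running_mean, running_var, batch, alpha=0.1):
    """Training-time EMA update: mu <- (1 - alpha) * mu + alpha * mu_batch,
    and the same rule for the variance. alpha is an assumed value."""
    mean_b, var_b = batch_stats(batch)
    new_mean = (1 - alpha) * running_mean + alpha * mean_b
    new_var = (1 - alpha) * running_var + alpha * var_b
    return new_mean, new_var

def bn_test(x, running_mean, running_var, eps=1e-5):
    """Test-time normalization with the stored running statistics."""
    return (x - running_mean) / math.sqrt(running_var + eps)

# Two hypothetical mini-batches seen during training.
running_mean, running_var = 0.0, 1.0
for batch in ([1.0, 2.0, 3.0], [2.0, 4.0, 6.0]):
    running_mean, running_var = update_running(running_mean, running_var, batch)

# At test time a single input is normalized without looking at any batch.
normalized = bn_test(2.0, running_mean, running_var)
```

The point of the sketch is that the test-time output depends only on statistics accumulated during training, which is one source of the train/test discrepancy that motivates removing batch normalization.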

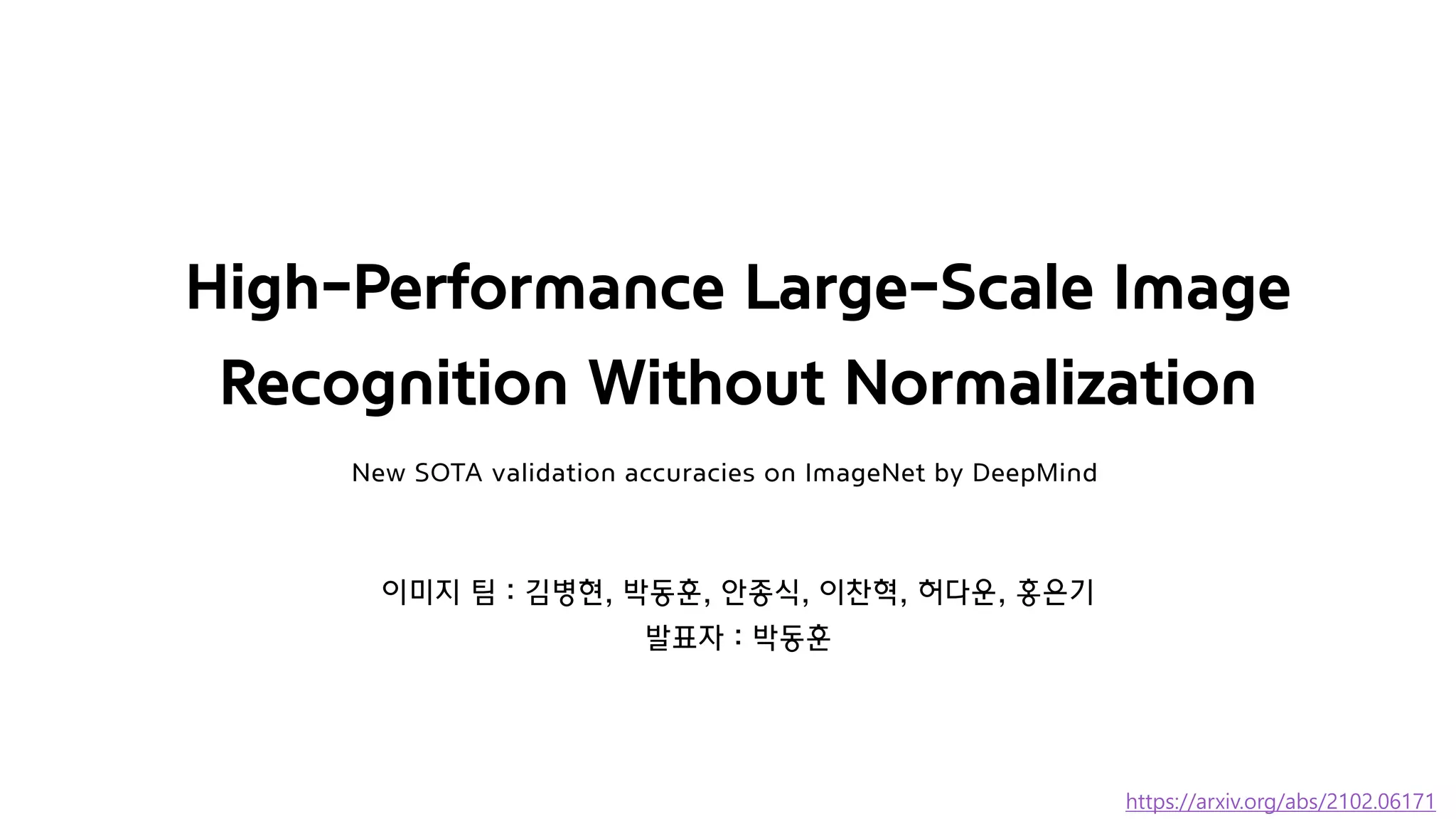

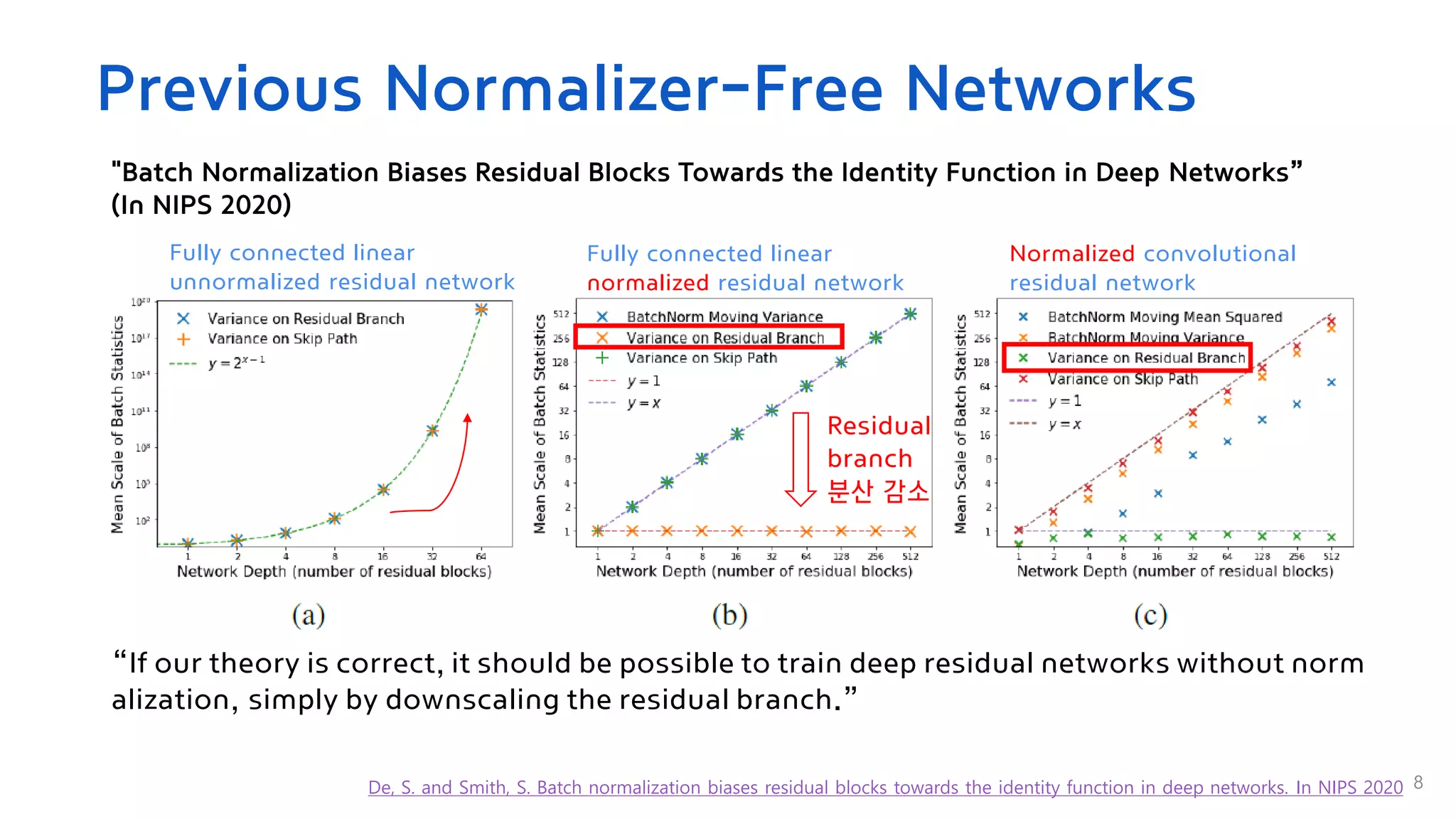

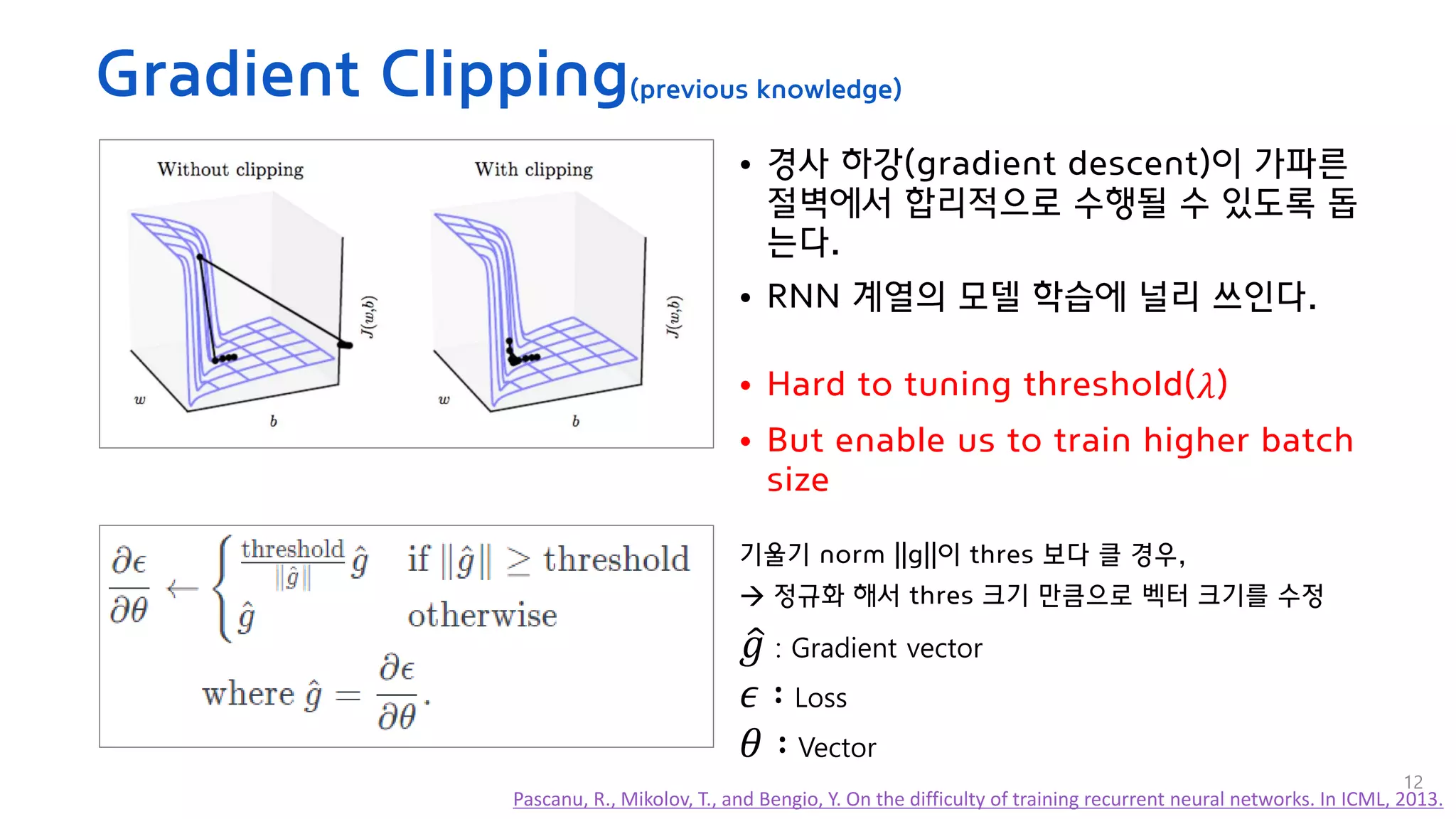

![Adaptive Gradient Clipping

• Inspired by the idea that the ratio $\|G^l\| / \|W^l\|$ can serve as a unit of learning.

• $W^l \in \mathbb{R}^{N \times M}$: weight matrix of the $l$-th layer

• $G^l \in \mathbb{R}^{N \times M}$: gradient corresponding to $W^l$

• $\|W^l\|_F = \sqrt{\sum_{i}^{N} \sum_{j}^{M} (W^l_{i,j})^2}$, $\|W^l\| = \max(\|W^l\|_F, \epsilon)$, $\epsilon = 10^{-3}$

• $\lambda \in \{0.01, 0.02, 0.04, 0.08, 0.16\}$

$\Delta W^l = -hG^l$

$\dfrac{\|\Delta W^l\|}{\|W^l\|} = h\dfrac{\|G^l\|}{\|W^l\|}$

Figure labels: Training [1], $1/\|W^l\|$, Adaptive

[1] https://www.youtube.com/watch?v=o_peo6U7IRM](https://image.slidesharecdn.com/high-performancelarge-scaleimagerecognitionwithoutnormalization-210329070952/75/High-performance-large-scale-image-recognition-without-normalization-13-2048.jpg)
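
The quantities defined on the slide above can be turned into a small clipping routine. The sketch below is a simplified, assumption-laden illustration rather than the reference implementation in the nfnets repository: it applies the clipping rule from the NFNet paper (rescale the gradient so that the ratio equals $\lambda$ whenever it exceeds $\lambda$), which is not spelled out on this slide, and it uses the whole-matrix Frobenius norm as defined here, whereas the paper computes the norms unit-wise (per row). The names `frobenius_norm` and `adaptive_gradient_clip` are hypothetical.

```python
import math

def frobenius_norm(matrix):
    """Frobenius norm of a matrix given as a list of rows."""
    return math.sqrt(sum(v * v for row in matrix for v in row))

def adaptive_gradient_clip(weights, grads, lam=0.01, eps=1e-3):
    """Rescale grads so that ||G|| / max(||W||_F, eps) never exceeds lam.

    Simplification (assumption): norms are taken over the whole matrix,
    following the slide's definitions, not per output unit.
    """
    w_norm = max(frobenius_norm(weights), eps)  # eps keeps zero-initialized weights trainable
    g_norm = frobenius_norm(grads)
    if g_norm == 0.0 or g_norm / w_norm <= lam:
        return grads  # ratio already within the threshold: leave untouched
    scale = lam * w_norm / g_norm
    return [[scale * g for g in row] for row in grads]

# Hypothetical layer: ||W||_F = 5 and ||G||_F = 1, so the ratio is 0.2.
W = [[3.0, 0.0], [0.0, 4.0]]
G = [[0.6, 0.0], [0.0, 0.8]]
clipped = adaptive_gradient_clip(W, G, lam=0.08)
ratio_after = frobenius_norm(clipped) / frobenius_norm(W)
```

Because the threshold is relative to the weight norm, a layer with small weights is clipped more aggressively than a layer with large weights, which is the sense in which the clipping is adaptive.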

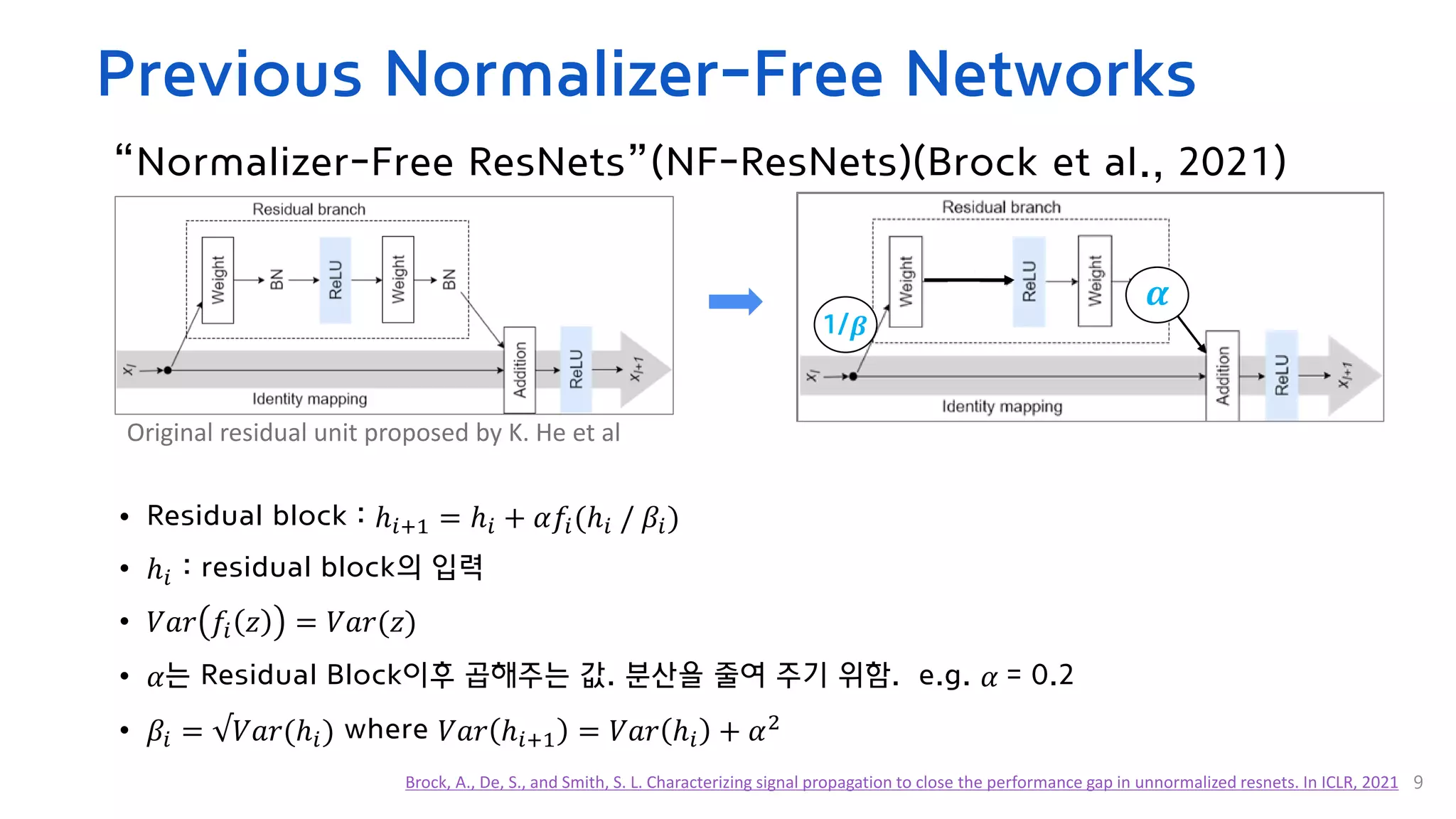

![Model Detail

https://github.com/deepmind/deepmind-research/tree/master/nfnets

[Training Detail]

• Softmax cross-entropy loss with label smoothing of 0.1

• Stochastic gradient descent with Nesterov's momentum 0.9

• Weight decay coefficient $2 \times 10^{-5}$

• Dropout, Stochastic Depth (0.25)](https://image.slidesharecdn.com/high-performancelarge-scaleimagerecognitionwithoutnormalization-210329070952/75/High-performance-large-scale-image-recognition-without-normalization-15-2048.jpg)
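
To make the first training detail concrete, here is a minimal sketch of softmax cross-entropy with label smoothing of 0.1 in plain Python. It assumes one common smoothing convention, in which the true class receives `1 - smoothing` and the `smoothing` mass is spread uniformly over all K classes; the slide does not say which variant the authors use, and the function names and example logits are hypothetical.

```python
import math

def log_softmax(logits):
    """Numerically stable log-softmax over a list of logits."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_z for z in logits]

def smoothed_cross_entropy(logits, target, smoothing=0.1):
    """Cross-entropy against a smoothed one-hot target.

    Assumed convention: target_i = smoothing / K + (1 - smoothing) * [i == target].
    """
    k = len(logits)
    log_probs = log_softmax(logits)
    soft = [smoothing / k + (1.0 - smoothing) * (1.0 if i == target else 0.0)
            for i in range(k)]
    return -sum(t * lp for t, lp in zip(soft, log_probs))

# Hypothetical logits for a 3-class problem where class 0 is correct.
logits = [2.0, 0.5, -1.0]
loss_plain = smoothed_cross_entropy(logits, target=0, smoothing=0.0)
loss_smooth = smoothed_cross_entropy(logits, target=0, smoothing=0.1)
```

With smoothing, a confident correct prediction is still penalized slightly, which discourages the network from pushing logits to extreme values.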