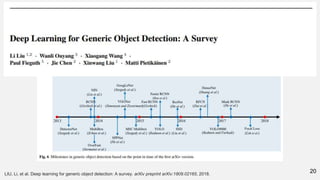

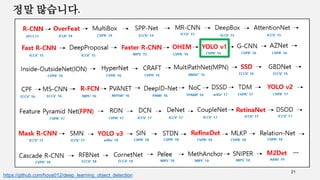

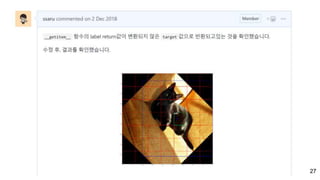

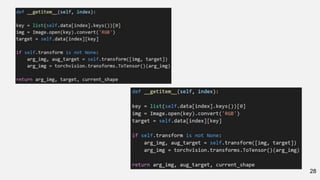

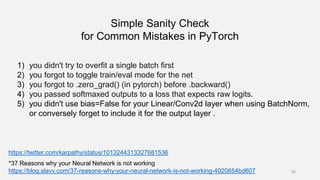

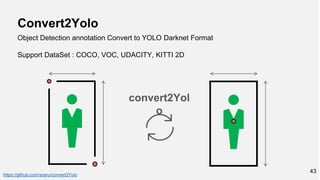

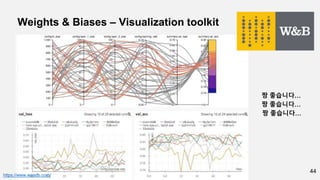

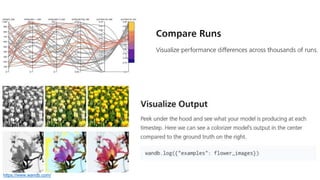

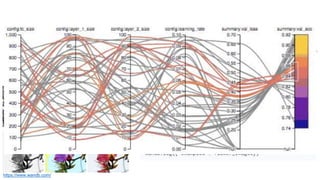

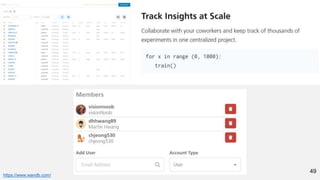

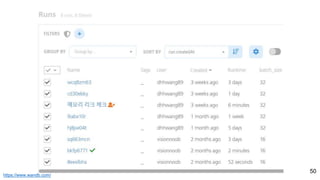

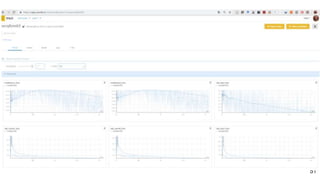

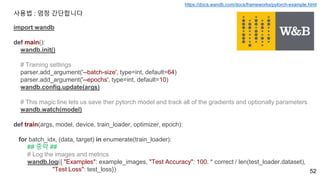

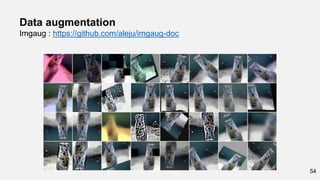

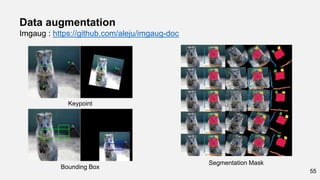

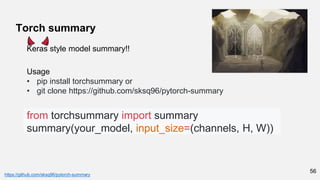

This document summarizes a presentation on implementing deep learning papers from scratch in PyTorch. The presentation describes two ways of understanding a paper: theoretically, by reading it thoroughly, and empirically, by implementing it. It argues that implementing object detection models from scratch is the way to truly understand them. The presenters set out to implement popular object detection methods such as YOLO from the ground up and share their experiences, including the bugs they ran into during implementation. Their advice includes taking a long-term view and checking for common PyTorch mistakes. They also introduce useful implementation tools: Weights and Biases, imgaug, and Torch Summary.

![Slide 57: output of summary(model, (1, 28, 28))](https://image.slidesharecdn.com/pytorchkrdevcon-190122011212/85/Pytorch-kr-devcon-57-320.jpg)

Slide 57 shows the output of `summary(model, (1, 28, 28))`:

| Layer (type) | Output Shape | Param # |
| --- | --- | --- |
| Conv2d-1 | [-1, 10, 24, 24] | 260 |
| Conv2d-2 | [-1, 20, 8, 8] | 5,020 |
| Dropout2d-3 | [-1, 20, 8, 8] | 0 |
| Linear-4 | [-1, 50] | 16,050 |
| Linear-5 | [-1, 10] | 510 |

- Total params: 21,840
- Trainable params: 21,840
- Non-trainable params: 0
- Input size (MB): 0.00
- Forward/backward pass size (MB): 0.06
- Params size (MB): 0.08
- Estimated Total Size (MB): 0.15
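
The numbers in the layer summary above can be checked by hand. The sketch below is not taken from the slides. It assumes 5x5 convolution kernels (inferred from the 28 to 24 change in spatial size), 320 flattened features feeding the first linear layer (inferred from its parameter count), a bias term in every layer, and 4-byte float32 values. The helper names are hypothetical.

```python
# Hand check of the parameter counts shown in the summary.
# Assumptions (not stated on the slide): 5x5 kernels, 320 input
# features for the first Linear layer, bias enabled everywhere.

def conv2d_params(in_channels, out_channels, kernel_size):
    """Weights (in * out * k * k) plus one bias per output channel."""
    return in_channels * out_channels * kernel_size * kernel_size + out_channels

def linear_params(in_features, out_features):
    """Weights (in * out) plus one bias per output feature."""
    return in_features * out_features + out_features

layer_params = {
    "Conv2d-1": conv2d_params(1, 10, 5),    # 260
    "Conv2d-2": conv2d_params(10, 20, 5),   # 5,020
    "Dropout2d-3": 0,                       # dropout has no parameters
    "Linear-4": linear_params(320, 50),     # 16,050
    "Linear-5": linear_params(50, 10),      # 510
}
total_params = sum(layer_params.values())   # 21,840

# Memory estimates, assuming float32 (4 bytes) and that activations
# are counted twice (once for forward, once for backward).
BYTES_PER_VALUE = 4
MB = 1024 ** 2

# Per-sample output elements of each listed layer, from "Output Shape".
output_elements = [10 * 24 * 24, 20 * 8 * 8, 20 * 8 * 8, 50, 10]

params_mb = total_params * BYTES_PER_VALUE / MB
forward_backward_mb = 2 * sum(output_elements) * BYTES_PER_VALUE / MB

for name, count in layer_params.items():
    print(f"{name:<12} {count:>7,}")
print(f"Total params: {total_params:,}")
print(f"Params size (MB): {params_mb:.2f}")
print(f"Forward/backward pass size (MB): {forward_backward_mb:.2f}")
```

Under these assumptions the per-layer counts, the total, and the two size estimates agree with the values reported on the slide, which is a quick way to confirm that a model is wired as intended.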