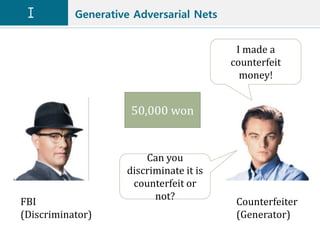

The document discusses neural networks, generative adversarial networks (GANs), and image-to-image translation. It begins by explaining how neural networks learn: forward propagation produces a prediction, a loss measures its error, and backpropagation uses that loss to update the weights. GANs are introduced as a game between a generator and a discriminator, in which the generator tries to fool the discriminator while the discriminator tries to tell real samples from generated ones. Image-to-image translation uses conditional GANs to translate images from one domain to another, such as maps to aerial photos.
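The learning loop described above (forward propagation, loss, backpropagation, weight update) can be sketched on the smallest possible network. This is an illustrative example, not code from the slides: the single sigmoid neuron, the binary cross-entropy loss, the learning rate, and the names `train_step`, `train`, and `TOY_DATA` are all assumptions chosen to keep the sketch short.

```python
import math


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def train_step(w, b, x, y, lr=0.5):
    """One forward/backward pass for a single sigmoid neuron (hypothetical toy model)."""
    # Forward propagation: compute the prediction.
    p = sigmoid(w * x + b)
    # Loss: binary cross-entropy between prediction p and label y.
    loss = -(y * math.log(p) + (1 - y) * math.log(1 - p))
    # Backpropagation: for sigmoid + cross-entropy, dL/d(pre-activation) = p - y.
    grad_z = p - y
    grad_w = grad_z * x
    grad_b = grad_z
    # Weight update: step against the gradient.
    return w - lr * grad_w, b - lr * grad_b, loss


def train(data, epochs=200, lr=0.5):
    """Run plain stochastic gradient descent and record the mean loss per epoch."""
    w, b = 0.0, 0.0
    history = []
    for _ in range(epochs):
        total = 0.0
        for x, y in data:
            w, b, loss = train_step(w, b, x, y, lr)
            total += loss
        history.append(total / len(data))
    return w, b, history


# Toy, linearly separable data: negative inputs are class 0, positive are class 1.
TOY_DATA = [(-2.0, 0), (-1.0, 0), (1.0, 1), (2.0, 1)]

if __name__ == "__main__":
    w, b, history = train(TOY_DATA)
    print("first epoch loss:", history[0], "last epoch loss:", history[-1])
```

A deeper network repeats the same three stages; only the backward pass grows, since the chain rule has to be applied through every layer.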

![Training Tip](https://image.slidesharecdn.com/ganseminar-181220131648/85/Gan-seminar-34-320.jpg)

$$\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{data}(x)}[\log D(x)] + \mathbb{E}_{z \sim p_z(z)}[\log(1 - D(G(z)))]$$

$$\max_G V(D, G) = \mathbb{E}_{z \sim p_z(z)}[\log D(G(z))]$$

$$\max_D V(D, G) = \mathbb{E}_{x \sim p_{data}(x)}[\log D(x)] + \mathbb{E}_{z \sim p_z(z)}[\log(1 - D(G(z)))]$$

$$\min_G V(D, G) = -\left(\mathbb{E}_{z \sim p_z(z)}[\log D(G(z))]\right)$$

$$\min_D V(D, G) = -\left(\mathbb{E}_{x \sim p_{data}(x)}[\log D(x)] + \mathbb{E}_{z \sim p_z(z)}[\log(1 - D(G(z)))]\right)$$
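The objectives above can be evaluated directly from discriminator outputs, which makes the difference between the two generator formulations easier to see. The sketch below is an illustration, not code from the slides: the function names are hypothetical, and inputs are plain lists of probabilities D(·) in (0, 1). The slide does not state why the generator objective is swapped; the usual explanation, given in the original GAN paper, is that log(1 − D(G(z))) saturates when the discriminator confidently rejects generated samples, and `logit_gradient` checks that numerically.

```python
import math


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def discriminator_loss(d_real, d_fake):
    """Negated discriminator objective: -(E[log D(x)] + E[log(1 - D(G(z)))])."""
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)


def generator_loss_saturating(d_fake):
    """Generator term of the minimax objective, E[log(1 - D(G(z)))], to be minimized."""
    return sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)


def generator_loss_non_saturating(d_fake):
    """Negated alternative objective, -E[log D(G(z))], to be minimized."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


def logit_gradient(loss_fn, logit, eps=1e-5):
    """Central-difference gradient of a one-sample generator loss with respect
    to the discriminator's pre-sigmoid output (logit)."""
    up = loss_fn([sigmoid(logit + eps)])
    down = loss_fn([sigmoid(logit - eps)])
    return (up - down) / (2 * eps)


if __name__ == "__main__":
    # A discriminator that confidently rejects a fake sample: D(G(z)) is close to 0.
    logit = -5.0
    print("saturating grad:    ", logit_gradient(generator_loss_saturating, logit))
    print("non-saturating grad:", logit_gradient(generator_loss_non_saturating, logit))
```

Both generator losses push D(G(z)) upward; they differ in how much gradient reaches the generator while the discriminator is winning.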

![Training Tip](https://image.slidesharecdn.com/ganseminar-181220131648/85/Gan-seminar-35-320.jpg)

![Method](https://image.slidesharecdn.com/ganseminar-181220131648/85/Gan-seminar-39-320.jpg)

Objective function for cGAN:

$$\mathcal{L}_{cGAN}(G, D) = \mathbb{E}_{x,y}[\log D(x, y)] + \mathbb{E}_{x,z}[\log(1 - D(x, G(x, z)))]$$

Objective function for GAN:

$$\mathcal{L}_{GAN}(G, D) = \mathbb{E}_{y}[\log D(y)] + \mathbb{E}_{x,z}[\log(1 - D(G(x, z)))]$$

L1 term:

$$\mathcal{L}_{L1}(G) = \mathbb{E}_{x,y,z}[\lVert y - G(x, z) \rVert_1]$$

Final objective function:

$$G^* = \arg\min_G \max_D \mathcal{L}_{cGAN}(G, D) + \lambda \mathcal{L}_{L1}(G)$$
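To make the combined objective concrete, the sketch below evaluates the cGAN term, the L1 term, and the generator's share of the final objective on hand-written numbers. It is a minimal illustration under stated assumptions, not the authors' implementation: the function names are hypothetical, discriminator outputs are given as lists of probabilities, images are flat lists of pixel values, and the default `lam=100.0` is an assumed weight that the slide does not specify.

```python
import math


def mean(values):
    values = list(values)
    return sum(values) / len(values)


def cgan_value(d_real_pairs, d_fake_pairs):
    """L_cGAN = E[log D(x, y)] + E[log(1 - D(x, G(x, z)))].

    d_real_pairs: D's outputs on (input, real target) pairs.
    d_fake_pairs: D's outputs on (input, generated output) pairs.
    """
    return (mean(math.log(p) for p in d_real_pairs)
            + mean(math.log(1.0 - p) for p in d_fake_pairs))


def l1_loss(targets, outputs):
    """L_L1 = E[||y - G(x, z)||_1], with the L1 norm taken as a sum over pixels."""
    return mean(
        sum(abs(t - o) for t, o in zip(y, g))
        for y, g in zip(targets, outputs)
    )


def generator_objective(d_fake_pairs, targets, outputs, lam=100.0):
    """The part of L_cGAN + lam * L_L1 that depends on G, which G minimizes.

    The E[log D(x, y)] term is omitted because it does not depend on G.
    lam is an assumed weight; the slide gives no value for lambda.
    """
    adversarial = mean(math.log(1.0 - p) for p in d_fake_pairs)
    return adversarial + lam * l1_loss(targets, outputs)


if __name__ == "__main__":
    targets = [[1.0, 2.0]]   # one ground-truth "image" with two pixels
    outputs = [[0.5, 2.5]]   # the generator's output for the same input
    print("L_cGAN:", cgan_value([0.9], [0.2]))
    print("L_L1:  ", l1_loss(targets, outputs))
    print("G objective:", generator_objective([0.2], targets, outputs))
```

Many implementations average the absolute differences over pixels instead of summing them; that only rescales the L1 term and can be absorbed into λ.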
