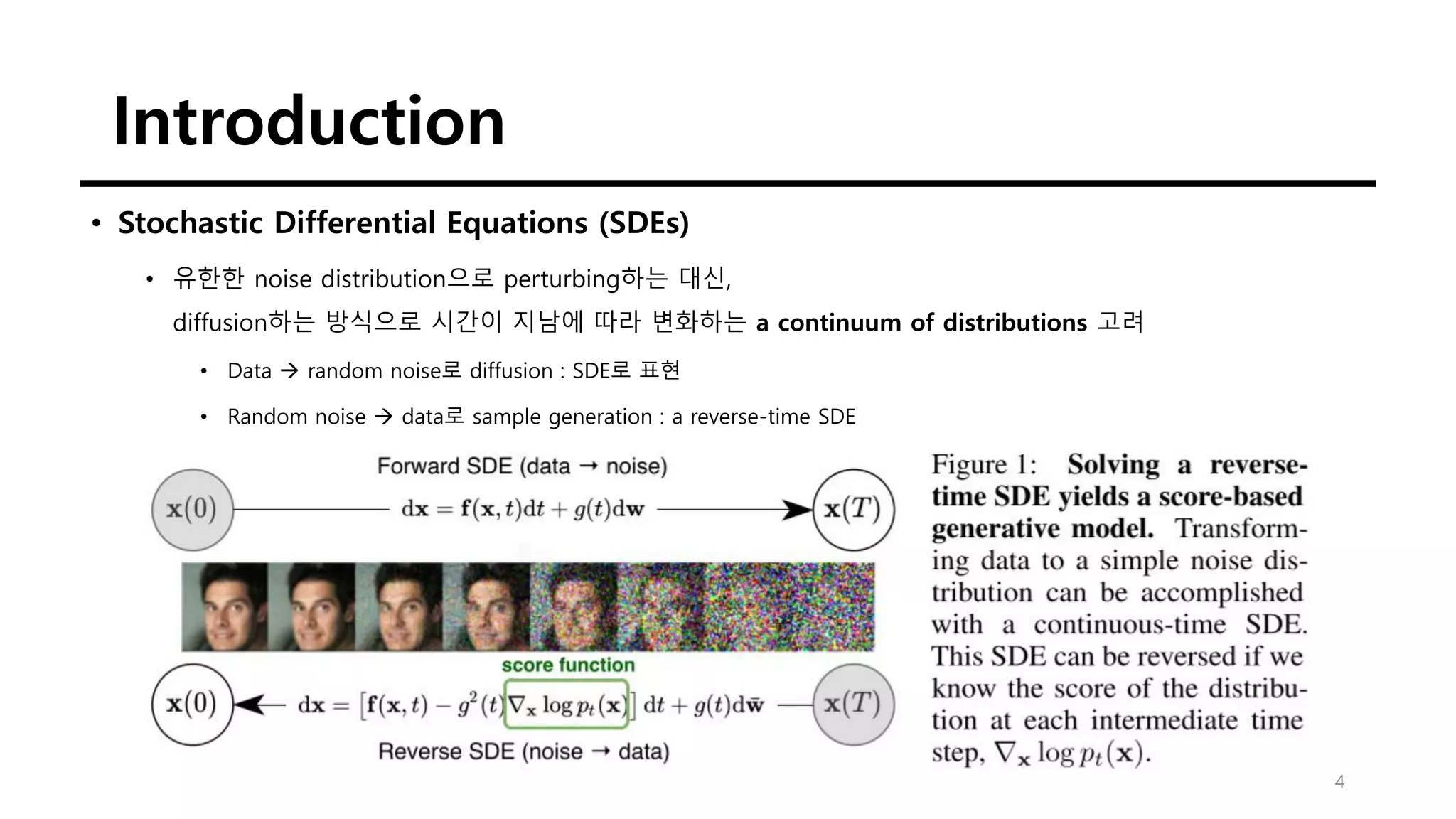

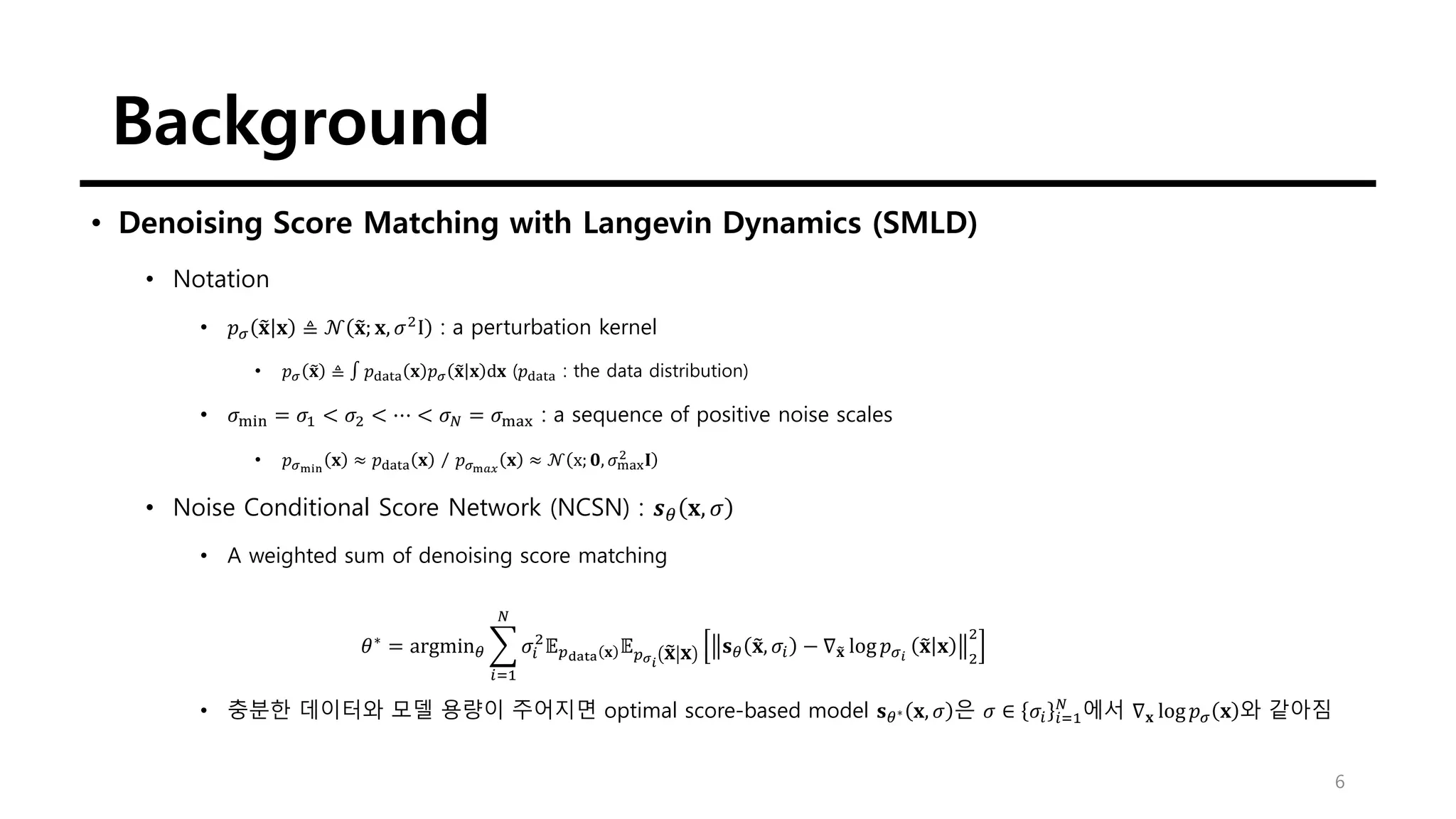

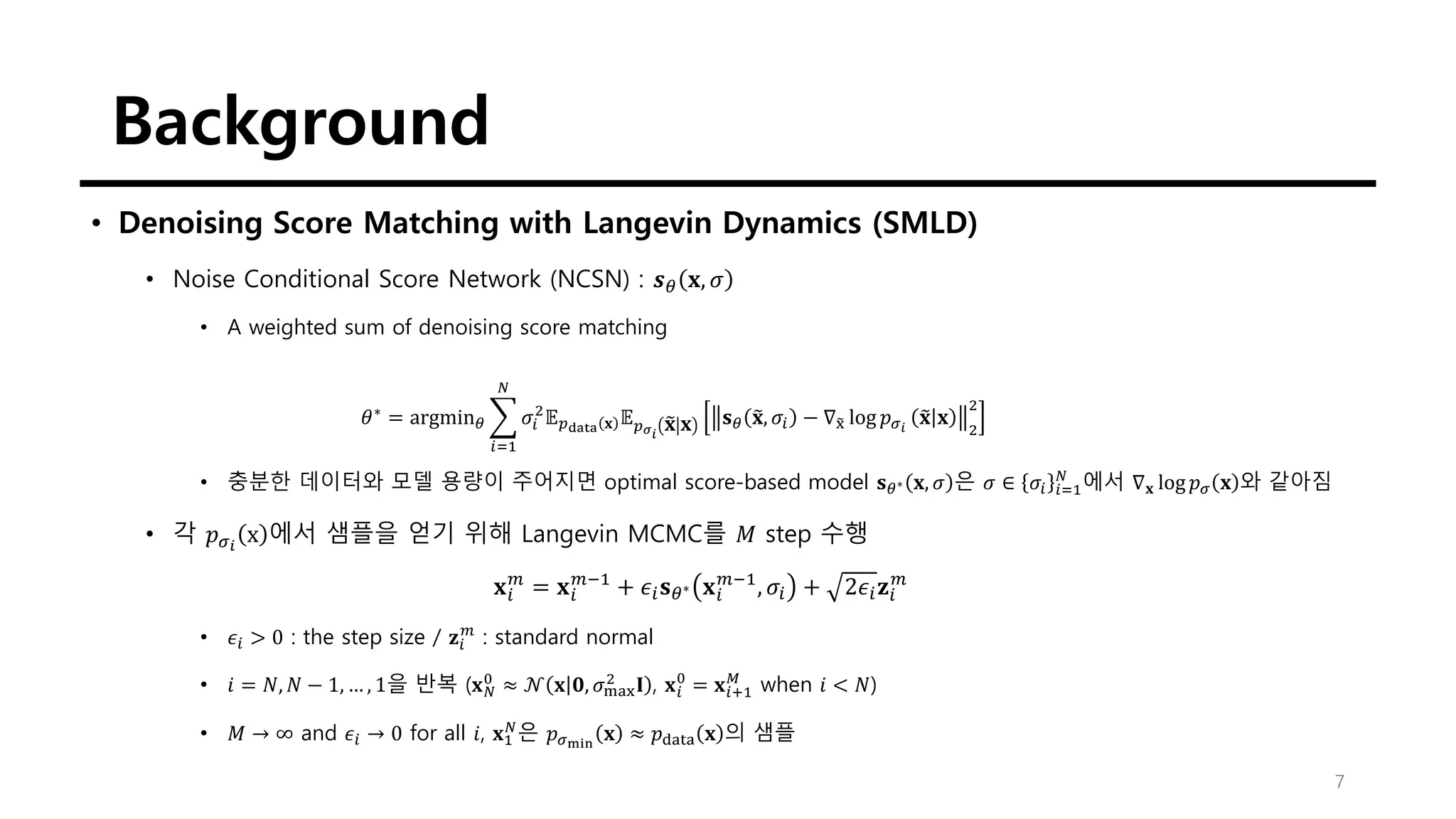

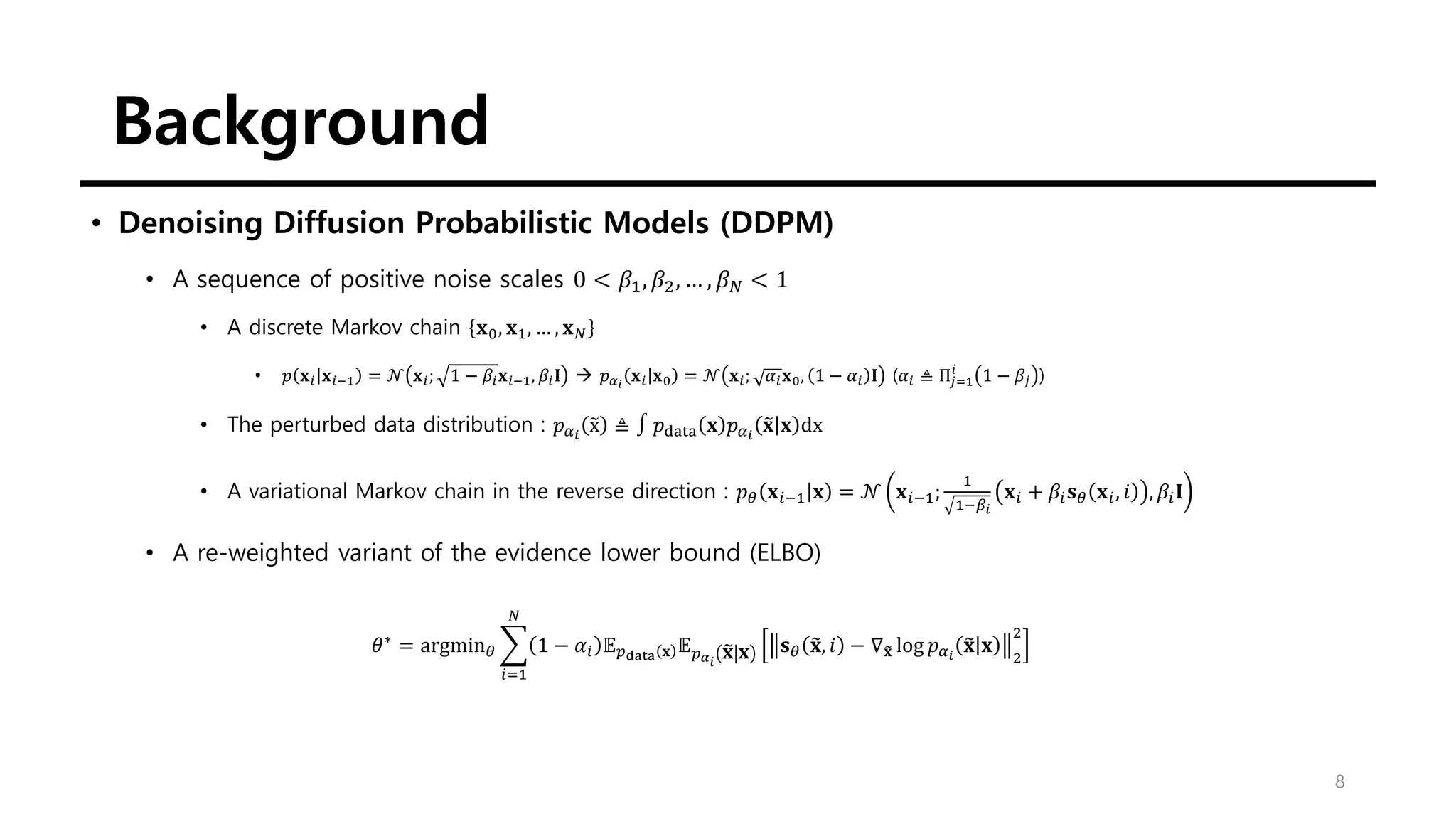

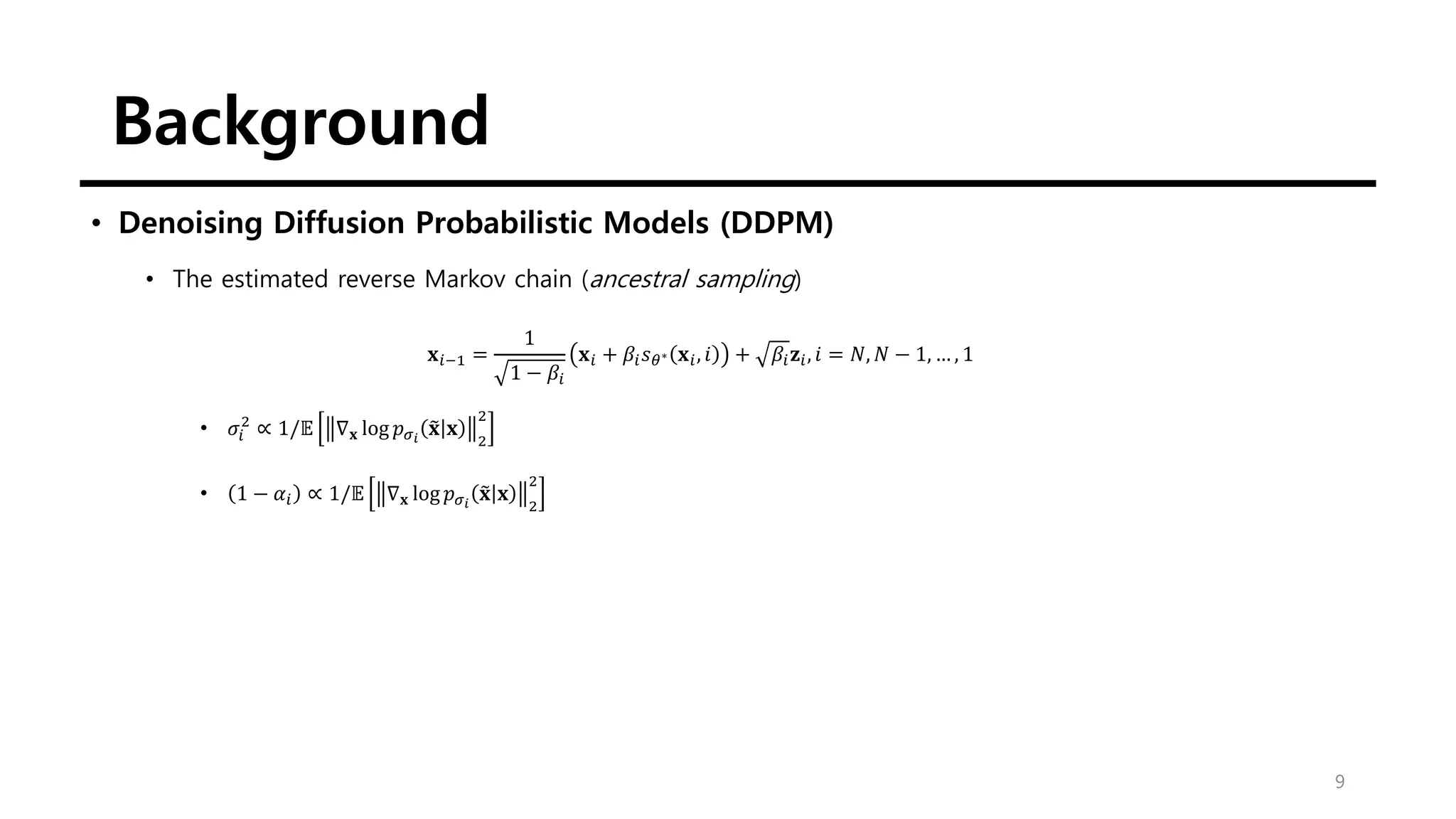

This document discusses score-based generative modeling through stochastic differential equations (SDEs). It models the gradual corruption of data as a forward SDE that diffuses the data distribution into a simple prior, and it generates samples by running the corresponding reverse-time SDE from that prior back to the data distribution. The reverse process depends on the score, the gradient of the log probability density of the perturbed data, which is estimated with score matching. Finally, it notes that noise perturbation models such as NCSN and DDPM can be viewed as discretizations of two specific SDEs: the variance exploding (VE) SDE and the variance preserving (VP) SDE, respectively.
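The reverse-diffusion idea summarized above can be illustrated with a minimal sketch. It makes several assumptions that are not from the document: the data are a toy 1-D Gaussian, so the score is available in closed form instead of being learned by score matching; the forward process is a VP SDE, dx = -½β(t)x dt + √β(t) dw, with a linear β(t) schedule whose endpoints are picked for illustration; and the reverse-time SDE is integrated with a plain Euler-Maruyama scheme. All names (`beta`, `marginal`, `score`, `reverse_sample`) are hypothetical.

```python
import math
import random

# Assumed linear noise schedule beta(t) on t in [0, 1] (illustrative values).
BETA_MIN, BETA_MAX = 0.1, 20.0
# Assumed toy data distribution: 1-D Gaussian N(DATA_MEAN, DATA_STD^2).
DATA_MEAN, DATA_STD = 2.0, 0.5


def beta(t):
    """Noise rate beta(t) of the VP SDE."""
    return BETA_MIN + t * (BETA_MAX - BETA_MIN)


def int_beta(t):
    """Integral of beta(s) for s from 0 to t."""
    return BETA_MIN * t + 0.5 * (BETA_MAX - BETA_MIN) * t * t


def marginal(t):
    """Mean and variance of the perturbed data distribution p_t (Gaussian here)."""
    decay = math.exp(-int_beta(t))
    mean = DATA_MEAN * math.sqrt(decay)
    var = DATA_STD ** 2 * decay + (1.0 - decay)
    return mean, var


def score(x, t):
    """Exact score d/dx log p_t(x); a learned network would replace this."""
    mean, var = marginal(t)
    return -(x - mean) / var


def reverse_sample(n_steps=500, rng=random):
    """Draw one sample by integrating the reverse-time SDE from t=1 to t=0."""
    dt = 1.0 / n_steps
    x = rng.gauss(0.0, 1.0)  # start from the prior, approximately N(0, 1)
    for i in range(n_steps):
        t = 1.0 - i * dt
        b = beta(t)
        # Reverse drift: f(x, t) - g(t)^2 * score, with f = -b*x/2 and g^2 = b.
        drift = -0.5 * b * x - b * score(x, t)
        # Step backward in time by dt and add fresh Gaussian noise.
        x = x - drift * dt + math.sqrt(b * dt) * rng.gauss(0.0, 1.0)
    return x
```

Drawing many samples with `reverse_sample()` should give an empirical mean and standard deviation close to `DATA_MEAN` and `DATA_STD`, up to discretization and sampling error. In a real setting the closed-form `score` would be replaced by a model trained with score matching.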