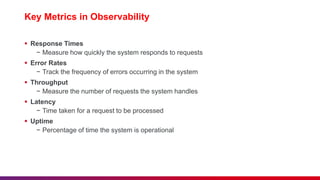

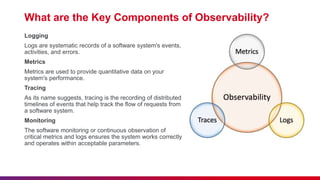

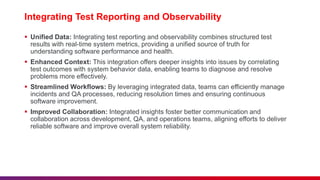

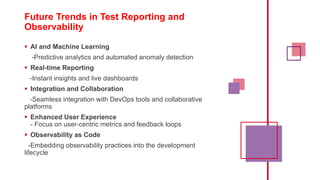

The document discusses the significance of test reporting and observability in software development, covering best practices, types of reports, and essential tools for effective implementation. It emphasizes integrating test reporting with observability to give teams a comprehensive understanding of system performance, address challenges, and improve collaboration. Among future trends, it highlights the role of AI, real-time reporting, and user-centric approaches in advancing these practices.