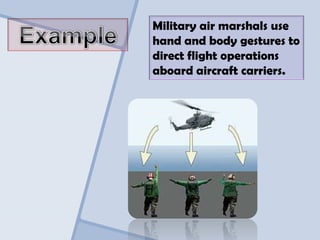

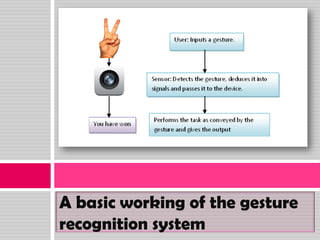

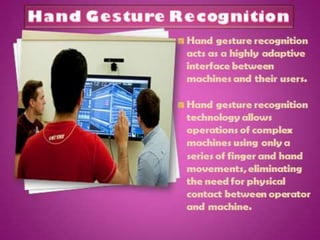

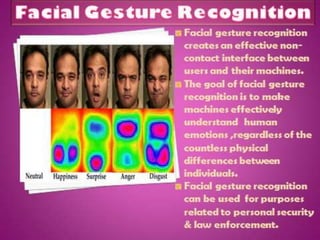

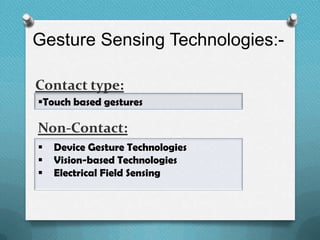

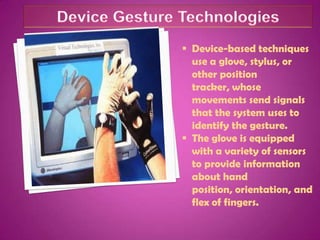

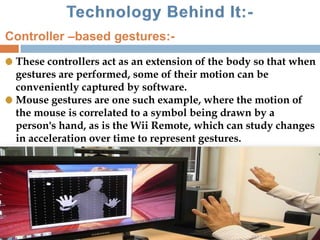

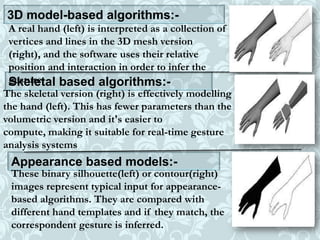

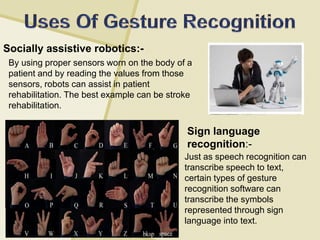

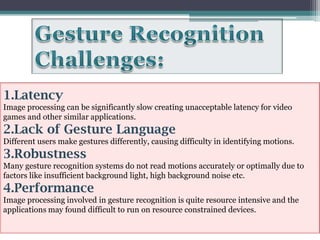

The document discusses the importance of gestures as a form of non-verbal communication, including their applications in military operations and human-robot interaction. It covers several kinds of gesture recognition, such as hand gesture recognition and sign language recognition, and the two main families of methods: device-based and vision-based techniques. It also examines the challenges that gesture recognition systems face, such as latency, variability in how gestures are performed, and performance limits in resource-constrained environments.