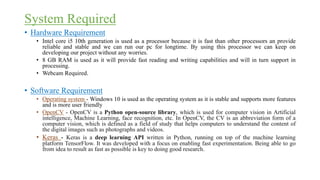

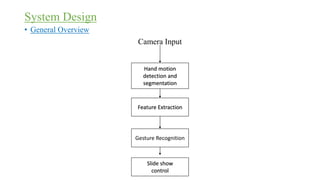

The document outlines a project to develop a real-time hand gesture recognition system that helps deaf-and-mute individuals communicate through sign language. It covers the system's hardware and software requirements, its design and implementation, and the future scope for improving its gesture recognition. The project's goal is to use technology to help deaf-and-mute individuals interact with the wider community.