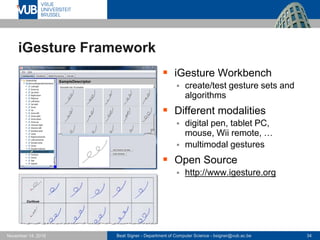

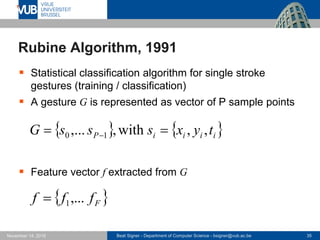

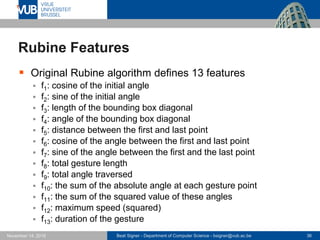

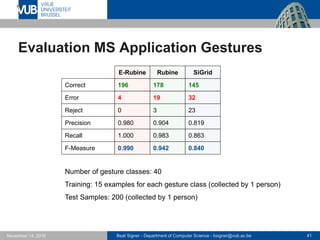

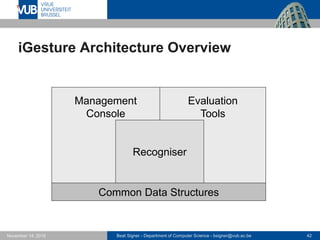

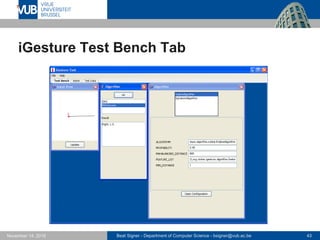

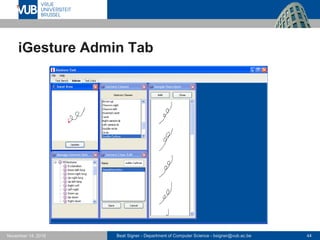

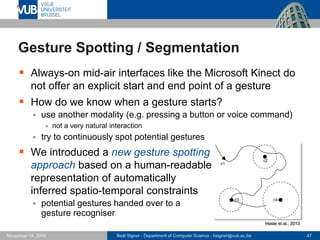

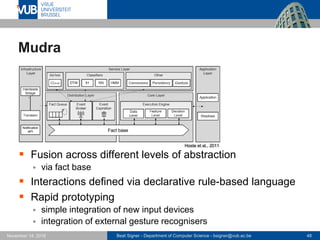

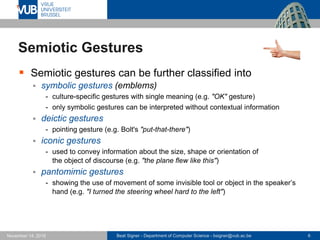

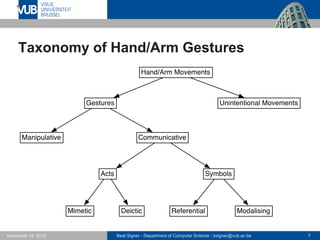

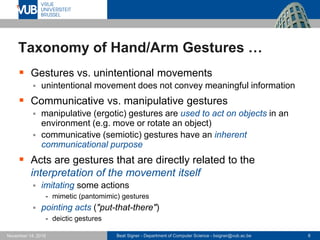

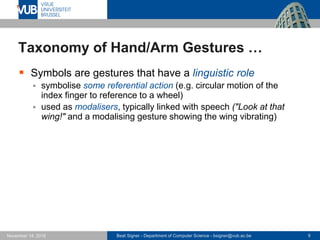

The document discusses gesture-based interactions in human-computer interfaces, detailing various types of gestures, including semiotic, ergotic, and epistemic gestures. It categorizes gestures further into symbolic, deictic, iconic, and pantomimic types while explaining gesture recognition technologies and algorithms like the Rubine algorithm and machine learning approaches. Additionally, it emphasizes the importance of designing intuitive and ergonomic gesture vocabularies for effective user interaction.

Beat Signer - Department of Computer Science - bsigner@vub.ac.be - November 14, 2016 - slide 26

"Fat Finger" Problem

The "fat finger" problem is based on two issues:

- the finger makes contact with a relatively large screen area, but only a single touch point is used by the system (e.g. the centre)
- users cannot see the currently computed touch point (occluded by the finger) and might therefore miss their target

Solutions:

- make elements larger or provide feedback during interaction
- adjust the touch point (based on user perception)
- use the iceberg targets technique
- …

[http://podlipensky.com/2011/01/mobile-usability-sliders/]

![Slide 26: "Fat Finger" Problem](https://image.slidesharecdn.com/lecture08gesturebasedinteraction-161114065501/85/Gesture-based-Interaction-Lecture-08-Next-Generation-User-Interfaces-4018166FNR-26-320.jpg)
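Two of the solutions listed on this slide, adjusting the touch point and using iceberg targets, can be illustrated with a minimal Python sketch. It assumes that "iceberg targets" means an invisible hit area larger than the visible element, and that touch point adjustment is a fixed offset applied to the reported contact centre. The `Rect` class, the function names, the 12 px margin and the 8 px upward offset are all hypothetical illustration values, not taken from the lecture or from any toolkit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    """Axis-aligned rectangle in screen pixels (origin at top-left)."""
    x: float
    y: float
    width: float
    height: float

    def contains(self, px: float, py: float) -> bool:
        return (self.x <= px <= self.x + self.width
                and self.y <= py <= self.y + self.height)

    def expanded(self, margin: float) -> "Rect":
        """Return a copy grown by `margin` pixels on every side."""
        return Rect(self.x - margin, self.y - margin,
                    self.width + 2 * margin, self.height + 2 * margin)


def adjust_touch_point(x: float, y: float, dx: float = 0.0, dy: float = -8.0):
    """Shift the reported touch point by a fixed offset.

    The default offset is an assumed value; a real system would calibrate
    it against where users perceive their touch to be.
    """
    return x + dx, y + dy


def hit_test(visible: Rect, x: float, y: float, hit_margin: float = 12.0) -> bool:
    """Iceberg-style test: accept touches inside an invisible, larger hit area."""
    return visible.expanded(hit_margin).contains(x, y)


button = Rect(x=100, y=100, width=40, height=20)
raw = (95.0, 130.0)                  # contact centre just outside the visible button
adjusted = adjust_touch_point(*raw)  # (95.0, 122.0)

print(button.contains(*raw))         # False: plain test on the visible bounds misses
print(hit_test(button, *adjusted))   # True: adjusted point falls in the enlarged hit area
```

The visible button stays small while its touchable area grows, so nearby touches still register without changing the layout. The trade-off is that enlarged hit areas of neighbouring elements may overlap, which this sketch does not resolve.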