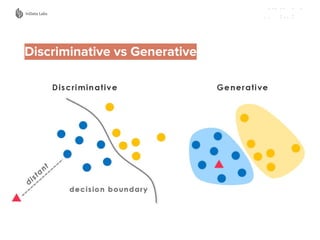

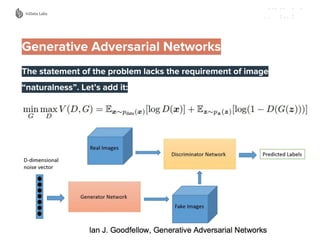

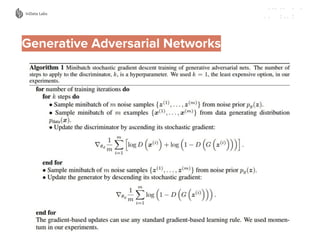

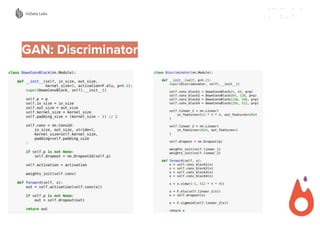

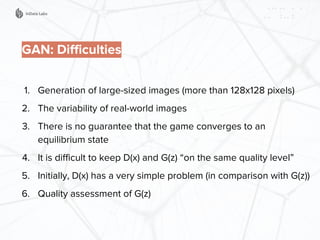

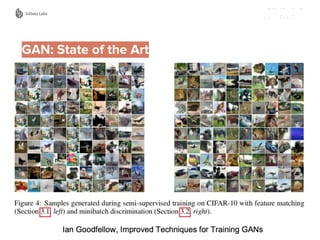

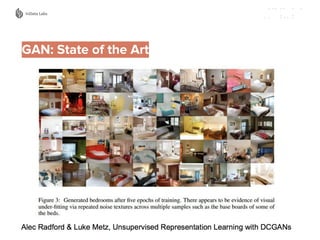

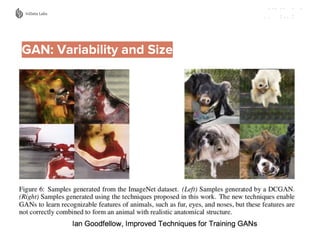

This document discusses generative modeling using convolutional neural networks (CNNs) and contrasts discriminative and generative models. It covers various techniques, challenges, and applications of generative adversarial networks (GANs), emphasizing their potential for generating photorealistic images and tackling real-world problems. Additionally, it highlights the evolution of CNN architectures and the importance of future improvements in the field.

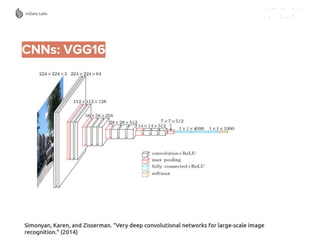

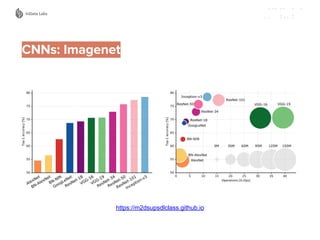

![CNNs: Architectures

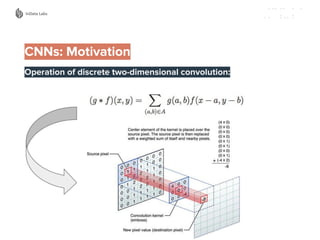

1. Typical architecture of Convolutional Neural Networks

2. Variations

3. Tendencies

A. Fully Convolutional Networks

B. Reduction of the number of parameters and computational complexity

INPUT→[[CONV→ReLU] * N→POOL?] * M→[FC→ReLU] * K→FC

ResNet / Inception / …](https://image.slidesharecdn.com/presentationforrigameetup2-170810080352/85/Generative-modeling-with-Convolutional-Neural-Networks-9-320.jpg)
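The layer pattern on this slide, INPUT→[[CONV→ReLU] * N→POOL?] * M→[FC→ReLU] * K→FC, can be unrolled mechanically into a flat sequence of layers. The following is a minimal sketch in plain Python; the function name `expand_cnn_pattern` and its `pool` flag are hypothetical names introduced here for illustration and do not come from the slides.

```python
def expand_cnn_pattern(n, m, k, pool=True):
    """Unroll INPUT->[[CONV->ReLU]*N->POOL?]*M->[FC->ReLU]*K->FC into layer names.

    n: CONV->ReLU pairs per block, m: number of blocks,
    k: hidden FC->ReLU pairs, pool: whether each block ends with POOL
    (the "?" in the pattern marks pooling as optional).
    """
    layers = ["INPUT"]
    for _ in range(m):
        layers += ["CONV", "ReLU"] * n
        if pool:
            layers.append("POOL")
    layers += ["FC", "ReLU"] * k
    layers.append("FC")  # final fully connected output layer
    return layers


print(" -> ".join(expand_cnn_pattern(n=2, m=1, k=1)))
# prints: INPUT -> CONV -> ReLU -> CONV -> ReLU -> POOL -> FC -> ReLU -> FC
```

Larger values of `n` stack more convolutions before each downsampling step, and `k=0` leaves only the final FC layer after the convolutional blocks.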

![GAN: Designing the D(x)

1. Normalize the input images to [-1.0, 1.0]

2. Should have fewer parameters than G(x)

3. Use Dropout/Spatial Dropout

4. 2x2 MaxPooling→Convolution2D + Stride = 2

5. ReLU→LeakyReLU/ELU (SELU?)

6. Adaptive L2 regularization

7. Label Smoothing

8. Instance Noise

9. Balancing the min-max game

https://github.com/soumith/ganhacks

Ian Goodfellow, Improved Techniques for Training GANs](https://image.slidesharecdn.com/presentationforrigameetup2-170810080352/85/Generative-modeling-with-Convolutional-Neural-Networks-37-320.jpg)
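A few of the discriminator tips above (items 1, 4, 5, and 7) reduce to simple arithmetic, sketched here in plain Python without a deep learning framework. All function names are hypothetical, and the constants (a LeakyReLU slope of 0.2, a smoothed real-label target of 0.9, 8-bit input pixels) are commonly used values assumed for illustration, not values stated on the slide.

```python
def normalize_pixels(pixels):
    """Tip 1: map 8-bit pixel values from [0, 255] to [-1.0, 1.0]."""
    return [p / 127.5 - 1.0 for p in pixels]


def conv_output_size(size, kernel, stride, padding=0):
    """Spatial output size of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


def leaky_relu(x, alpha=0.2):
    """Tip 5: LeakyReLU keeps a small slope for negative inputs."""
    return x if x > 0 else alpha * x


def smooth_labels(labels, real_target=0.9):
    """Tip 7: one-sided label smoothing; only the 'real' label (1.0) is softened."""
    return [real_target if y == 1.0 else y for y in labels]


print(normalize_pixels([0, 255]))   # [-1.0, 1.0]
print(leaky_relu(-1.0))             # -0.2
print(smooth_labels([1.0, 0.0]))    # [0.9, 0.0]

# Tip 4: a 3x3 convolution with stride 2 and padding 1 halves a 64-pixel
# side, just as 2x2 max pooling with stride 2 does, but with learned weights.
print(conv_output_size(64, kernel=2, stride=2))             # 32
print(conv_output_size(64, kernel=3, stride=2, padding=1))  # 32
```

In a real model these would be framework layers rather than hand-written functions; the sketch only shows what each tip does to the numbers.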