The document discusses abductive commonsense reasoning, focusing on two main tasks: abductive natural language inference (αNLI) and abductive natural language generation (αNLG). It presents a dataset for abductive reasoning in narrative text, including experiments and findings based on probabilistic models. Additionally, the document highlights various frameworks and related works aimed at improving the understanding and application of commonsense reasoning in natural language processing.

![Abductive Reasoning

Adopted from [1]](https://image.slidesharecdn.com/abductivecommonsensereasoning-200710125841/85/Abductive-commonsense-reasoning-4-320.jpg)

![A Probabilistic Framework for 𝛼𝑁𝐿𝐼

The 𝛼𝑁𝐿𝐼 task is to select the hypothesis $h^*$ that is most probable given the observations.

$h^* = \arg\max_{h_i} P(H = h_i \mid O_1, O_2)$

Rewriting the objective using Bayes' rule conditioned on $O_1$, we have:

$P(h_i \mid O_1, O_2) \propto P(O_2 \mid h_i, O_1)\, P(h_i \mid O_1)$

Illustration of the graphical models described in the probabilistic framework.

Adopted from [1]](https://image.slidesharecdn.com/abductivecommonsensereasoning-200710125841/85/Abductive-commonsense-reasoning-5-320.jpg)
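The proportionality on the slide above follows from Bayes' rule with $O_1$ kept as a conditioning variable throughout. A short sketch of the intermediate step, using only the symbols already defined on the slide:

```latex
\begin{align*}
P(h_i \mid O_1, O_2)
  &= \frac{P(O_2 \mid h_i, O_1)\, P(h_i \mid O_1)}{P(O_2 \mid O_1)} \\
  &\propto P(O_2 \mid h_i, O_1)\, P(h_i \mid O_1)
\end{align*}
```

The denominator $P(O_2 \mid O_1)$ does not depend on $h_i$, so dropping it leaves the arg max unchanged.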

![A Probabilistic Framework for 𝛼𝑁𝐿𝐼

(a) Hypothesis Only

• Strong assumption: the hypothesis is entirely independent of both observations, i.e. $(H \perp O_1, O_2)$.

• Maximize the marginal $P(H)$.

(b, c) First (or Second) Observation Only

• Weaker assumption: the hypothesis depends on only one of the first ($O_1$) or second ($O_2$) observation.

• Maximize the conditional probability $P(H \mid O_1)$ or $P(O_2 \mid H)$.

Adopted from [1]](https://image.slidesharecdn.com/abductivecommonsensereasoning-200710125841/85/Abductive-commonsense-reasoning-6-320.jpg)

![A Probabilistic Framework for 𝛼𝑁𝐿𝐼

(d) Linear Chain

• Uses both observations, but considers each observation’s influence on the hypothesis independently.

• The model assumes that the three variables $\langle O_1, H, O_2 \rangle$ form a linear Markov chain.

• The second observation is conditionally independent of the first, given the hypothesis, i.e. $(O_1 \perp O_2 \mid H)$.

• $h^* = \arg\max_{h_i} P(O_2 \mid h_i)\, P(h_i \mid O_1)$, where $O_1 \perp O_2 \mid H$

(e) Fully Connected

• Jointly models all three random variables.

• Combine information across both observations to choose the correct hypothesis.

• $h^* = \arg\max_{h_i} P(O_2 \mid h_i, O_1)\, P(h_i \mid O_1)$

Adopted from [1]](https://image.slidesharecdn.com/abductivecommonsensereasoning-200710125841/85/Abductive-commonsense-reasoning-7-320.jpg)
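The five graphical models on the two slides above differ only in which probability terms enter the score. A minimal Python sketch of that difference, assuming the per-hypothesis probabilities are already available (in practice they would come from a trained model). Every number and name below is hypothetical and chosen only for illustration:

```python
import math

# Hypothetical probabilities for two candidate hypotheses and one fixed
# (O1, O2) pair. All values are invented for illustration.
P_H = {"h1": 0.55, "h2": 0.45}            # P(h)
P_H_GIVEN_O1 = {"h1": 0.7, "h2": 0.3}     # P(h | O1)
P_O2_GIVEN_H = {"h1": 0.2, "h2": 0.6}     # P(O2 | h)
P_O2_GIVEN_H_O1 = {"h1": 0.1, "h2": 0.8}  # P(O2 | h, O1)

# One log-space scoring function per graphical model (a) to (e).
SCORERS = {
    "hypothesis_only": lambda h: math.log(P_H[h]),
    "first_observation_only": lambda h: math.log(P_H_GIVEN_O1[h]),
    "second_observation_only": lambda h: math.log(P_O2_GIVEN_H[h]),
    "linear_chain": lambda h: math.log(P_O2_GIVEN_H[h]) + math.log(P_H_GIVEN_O1[h]),
    "fully_connected": lambda h: math.log(P_O2_GIVEN_H_O1[h]) + math.log(P_H_GIVEN_O1[h]),
}


def choose(model):
    """Return the hypothesis with the highest score under the named model."""
    return max(sorted(P_H), key=SCORERS[model])


choices = {model: choose(model) for model in SCORERS}
for model, hypothesis in choices.items():
    print(f"{model}: {hypothesis}")
```

With these toy numbers, the models that ignore $O_2$ pick a different hypothesis from the ones that use it, which is the kind of disagreement the independence assumptions can produce.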

![𝛼𝑁𝐿𝐺 model

Given $h^+ = w_1^h \cdots w_l^h$, $O_1 = w_1^{o1} \cdots w_m^{o1}$, and $O_2 = w_1^{o2} \cdots w_n^{o2}$ as sequences of tokens,

the 𝛼𝑁𝐿𝐺 task can be modeled as $P(h^+ \mid O_1, O_2) = \prod_i P(w_i^h \mid w_{<i}^h, w_1^{o1} \cdots w_m^{o1}, w_1^{o2} \cdots w_n^{o2})$.

Optionally, the model can also be conditioned on background knowledge $\mathcal{K}$.

$\mathcal{L} = -\sum_{i=1}^{N} \log P(w_i^h \mid w_{<i}^h, w_1^{o1} \cdots w_m^{o1}, w_1^{o2} \cdots w_n^{o2}, \mathcal{K})$

Adopted from [1]](https://image.slidesharecdn.com/abductivecommonsensereasoning-200710125841/85/Abductive-commonsense-reasoning-9-320.jpg)
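The loss on the slide above is a token-level negative log-likelihood over the hypothesis tokens. A minimal sketch of the arithmetic, assuming the conditional probability of each hypothesis token has already been produced by some language model. The probabilities below are hypothetical placeholders, not outputs of any real model:

```python
import math


def nll_loss(token_probs):
    """Sum of -log P(w_i | w_<i, O1, O2) over the hypothesis tokens."""
    return -sum(math.log(p) for p in token_probs)


# Hypothetical per-token probabilities for a three-token hypothesis.
token_probs = [0.5, 0.25, 0.8]

loss = nll_loss(token_probs)
# Exponentiating the negated loss recovers the sequence probability,
# i.e. the product of the per-token conditionals.
sequence_prob = math.exp(-loss)
print(loss, sequence_prob)
```

Minimizing this loss is therefore equivalent to maximizing the probability the model assigns to the whole hypothesis given both observations.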

![ConceptNet [5]

• ConceptNet is a multilingual knowledge base

• It is constructed of:

• Nodes (a word or a short phrase)

• Edges (relation and weight)

Birds are not capable of … {bark, chew their food, breathe water}

• Related Works

• WordNet

• Microsoft Concept Net

• Google knowledge base

Adopted from [6]](https://image.slidesharecdn.com/abductivecommonsensereasoning-200710125841/85/Abductive-commonsense-reasoning-10-320.jpg)
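The node-and-edge structure described on the ConceptNet slide can be sketched as a small data structure. This is an illustrative in-memory model only, not the ConceptNet API. The `Edge` class, the `objects_of` helper, and the weights are assumptions, and the three edges mirror the "not capable of" example from the slide:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Edge:
    """One weighted, labeled edge between two nodes (words or short phrases)."""
    start: str
    relation: str
    end: str
    weight: float


# Edges mirroring the slide's example; the weights are invented.
EDGES = [
    Edge("bird", "NotCapableOf", "bark", 1.0),
    Edge("bird", "NotCapableOf", "chew their food", 1.0),
    Edge("bird", "NotCapableOf", "breathe water", 1.0),
]


def objects_of(edges, start, relation):
    """Return the end nodes reachable from `start` via `relation`."""
    return [e.end for e in edges if e.start == start and e.relation == relation]


not_capable = objects_of(EDGES, "bird", "NotCapableOf")
print(not_capable)
```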

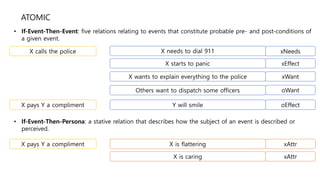

![ATOMIC [2]

Inference dimensions

1. xIntent: Why does X cause the event?

2. xNeed: What does X need to do before the event?

3. xAttr: How would X be described?

4. xEffect: What effects does the event have on X?

5. xWant: What would X likely want to do after the event?

6. xReaction: How does X feel after the event?

7. oReact: How do others feel after the event?

8. oWant: What would others likely want to do after the event?

9. oEffect: What effects does the event have on others?

If-Then Relation Types

• If-Event-Then-Mental-State

• If-Event-Then-Event

• If-Event-Then-Persona

Adopted from [2]](https://image.slidesharecdn.com/abductivecommonsensereasoning-200710125841/85/Abductive-commonsense-reasoning-11-320.jpg)

![ATOMIC

• If-Event-Then-Mental-State: three relations relating to the mental pre- and post-conditions of an event.

Example event: X compliments Y

• xIntent (likely intents of the event): X wants to be nice

• xReaction (likely (emotional) reactions of the event’s subject): X feels good

• oReaction (likely (emotional) reactions of others): Y feels flattered

Adopted from [2]](https://image.slidesharecdn.com/abductivecommonsensereasoning-200710125841/85/Abductive-commonsense-reasoning-12-320.jpg)
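The If-Event-Then-Mental-State example on the slide above can be held in a simple record that maps each inference dimension to its inferred text. A minimal sketch: the `EventInferences` class is hypothetical, and the dimension labels are copied as written on the slide rather than taken from any released ATOMIC file format:

```python
from dataclasses import dataclass, field

# Mental-state dimensions, labeled as on the slide.
MENTAL_STATE_DIMENSIONS = ("xIntent", "xReaction", "oReaction")


@dataclass
class EventInferences:
    """An event plus its if-then inferences, keyed by inference dimension."""
    event: str
    inferences: dict = field(default_factory=dict)

    def mental_states(self):
        """Return only the If-Event-Then-Mental-State inferences."""
        return {
            dim: self.inferences[dim]
            for dim in MENTAL_STATE_DIMENSIONS
            if dim in self.inferences
        }


example = EventInferences(
    event="X compliments Y",
    inferences={
        "xIntent": "X wants to be nice",
        "xReaction": "X feels good",
        "oReaction": "Y feels flattered",
    },
)
print(example.mental_states())
```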

![ATOMIC

Adopted from [2]](https://image.slidesharecdn.com/abductivecommonsensereasoning-200710125841/85/Abductive-commonsense-reasoning-14-320.jpg)

![COMET: COMmonsEnse Transformers for Automatic Knowledge Graph Construction [3]

Adopted from [3]](https://image.slidesharecdn.com/abductivecommonsensereasoning-200710125841/85/Abductive-commonsense-reasoning-15-320.jpg)

![COMET: COMmonsEnse Transformers for Automatic Knowledge Graph Construction

Adopted from [3]](https://image.slidesharecdn.com/abductivecommonsensereasoning-200710125841/85/Abductive-commonsense-reasoning-16-320.jpg)

![COMET: COMmonsEnse Transformers for Automatic Knowledge Graph Construction

Loss function

$\mathcal{L} = -\sum_{t=|s|+|r|}^{|s|+|r|+|o|} \log P(x_t \mid x_{<t})$

Input Template

Notation

• Natural language tuples in {s: subject, r: relation, o: object}

• Subject tokens: $X^s = x_0^s, \cdots, x_{|s|}^s$

• Relation tokens: $X^r = x_0^r, \cdots, x_{|r|}^r$

• Object tokens: $X^o = x_0^o, \cdots, x_{|o|}^o$

Adopted from [3]](https://image.slidesharecdn.com/abductivecommonsensereasoning-200710125841/85/Abductive-commonsense-reasoning-17-320.jpg)
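The loss on the slide above sums log-probabilities only over the object tokens, so the subject and relation tokens act purely as context. A minimal sketch of that masking, assuming 0-indexed positions with the object tokens occupying the last `len_o` positions of the concatenated sequence. The function name and all probabilities are hypothetical:

```python
import math


def object_only_loss(token_probs, len_s, len_r, len_o):
    """Negative log-likelihood restricted to the object-token positions.

    token_probs[t] stands for P(x_t | x_<t) over the concatenated
    subject, relation, and object tokens.
    """
    start = len_s + len_r
    end = start + len_o
    if len(token_probs) != end:
        raise ValueError("token_probs must cover subject, relation and object tokens")
    return -sum(math.log(p) for p in token_probs[start:end])


# Hypothetical sequence: 2 subject tokens, 1 relation token, 2 object tokens.
probs = [0.9, 0.9, 0.9, 0.5, 0.5]
loss = object_only_loss(probs, len_s=2, len_r=1, len_o=2)
print(loss)
```

Only the last two probabilities contribute here, so the subject and relation tokens could take any values without changing the loss.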

![Adversarial Filtering Algorithm

• A significant challenge in creating datasets is avoiding annotation artifacts.

• Annotation artifacts: unintentional patterns in the data that leak information about the target label.

In each iteration $i$,

• $M_i$: adversarial model

• $\mathcal{T}_i$: a random subset for training

• $\mathcal{V}_i$: validation set

• $h_k^+, h_k^-$: plausible and implausible hypotheses for an instance $k$.

• $\delta = \Delta_{M_i}(h_k^+, h_k^-)$: the difference in the model evaluation of $h_k^+$ and $h_k^-$.

With probability $t_i$, update instance $k$ that $M_i$ gets correct with a pair $(h^+, h^-) \in \mathcal{H}_k^+ \times \mathcal{H}_k^-$ of hypotheses that reduces the value of $\delta$, where $\mathcal{H}_k^+$ (resp. $\mathcal{H}_k^-$) is the pool of plausible (resp. implausible) hypotheses for instance $k$.

Adopted from [1]](https://image.slidesharecdn.com/abductivecommonsensereasoning-200710125841/85/Abductive-commonsense-reasoning-19-320.jpg)
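The update rule on the slide above can be sketched as a single filtering pass. This is a simplified illustration under several assumptions: a fixed lookup table `SCORES` stands in for the trained adversary $M_i$, training on $\mathcal{T}_i$ is omitted, and among the pairs that reduce $\delta$ the sketch picks the one with the smallest $\delta$, which is one possible choice and not necessarily the one used in [1]:

```python
import random

# Hypothetical adversary scores for four hypothesis strings.
SCORES = {"a": 0.9, "b": 0.1, "c": 0.6, "d": 0.5}


def delta(pos, neg):
    """Difference in the adversary's evaluation of a plausible/implausible pair."""
    return SCORES[pos] - SCORES[neg]


def filter_step(instances, t_i, rng):
    """One pass: with probability t_i, replace pairs the adversary gets right."""
    for inst in instances:
        current = delta(inst["pos"], inst["neg"])
        if current <= 0:
            continue  # the adversary already gets this instance wrong
        if rng.random() >= t_i:
            continue  # skip the update with probability 1 - t_i
        # Candidate pairs from the pools that reduce delta.
        pairs = [
            (p, n)
            for p in inst["pos_pool"]
            for n in inst["neg_pool"]
            if delta(p, n) < current
        ]
        if pairs:
            inst["pos"], inst["neg"] = min(pairs, key=lambda pn: delta(*pn))
    return instances


instances = [
    {"pos": "a", "neg": "b", "pos_pool": ["a", "c"], "neg_pool": ["b", "d"]}
]
updated = filter_step(instances, t_i=1.0, rng=random.Random(0))
print(updated[0]["pos"], updated[0]["neg"])
```

Repeating such passes with a retrained adversary pushes the dataset toward pairs the adversary cannot separate easily, which is how the procedure reduces annotation artifacts.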

![Performance of baselines (𝛼𝑁𝐿𝐼)

Adopted from [1]](https://image.slidesharecdn.com/abductivecommonsensereasoning-200710125841/85/Abductive-commonsense-reasoning-20-320.jpg)

![Performance of baselines (𝛼𝑁𝐿𝐼)

Adopted from [1]](https://image.slidesharecdn.com/abductivecommonsensereasoning-200710125841/85/Abductive-commonsense-reasoning-21-320.jpg)

![Performance of baselines (𝛼𝑁𝐿𝐺)

Adopted from [1]](https://image.slidesharecdn.com/abductivecommonsensereasoning-200710125841/85/Abductive-commonsense-reasoning-22-320.jpg)

![BERT-Score

Evaluating Text Generation with BERT (analogous to Inception Score, Fréchet Inception Distance, and Kernel Inception Distance in image generation)

Adopted from [4]](https://image.slidesharecdn.com/abductivecommonsensereasoning-200710125841/85/Abductive-commonsense-reasoning-23-320.jpg)

![BERT-Score

Adopted from [4]](https://image.slidesharecdn.com/abductivecommonsensereasoning-200710125841/85/Abductive-commonsense-reasoning-25-320.jpg)

![Abductive Commonsense Reasoning

1. $O_1 \nrightarrow h^-$: $h^-$ is unlikely to follow after the first observation $O_1$.

2. $h^- \nrightarrow O_2$: $h^-$ is plausible after $O_1$ but unlikely to precede the second observation $O_2$.

3. Plausible: $\langle O_1, h^-, O_2 \rangle$ is a coherent narrative and forms a plausible alternative, but it is less plausible than $\langle O_1, h^+, O_2 \rangle$.

Adopted from [1]

BERT performance](https://image.slidesharecdn.com/abductivecommonsensereasoning-200710125841/85/Abductive-commonsense-reasoning-26-320.jpg)

![Abductive Commonsense Reasoning

Adopted from [1]](https://image.slidesharecdn.com/abductivecommonsensereasoning-200710125841/85/Abductive-commonsense-reasoning-27-320.jpg)

![Abductive Commonsense Reasoning

Adopted from [1]](https://image.slidesharecdn.com/abductivecommonsensereasoning-200710125841/85/Abductive-commonsense-reasoning-28-320.jpg)

![Reference

[1] Chandra Bhagavatula et al., Abductive Commonsense Reasoning, ICLR 2020.

[2] Maarten Sap et al., ATOMIC: An Atlas of Machine Commonsense for If-Then Reasoning, arXiv:1811.00146

[3] Antoine Bosselut et al., COMET: Commonsense Transformers for Automatic Knowledge Graph Construction, ACL 2019.

[4] Tianyi Zhang et al., BERTScore: Evaluating Text Generation with BERT, ICLR 2020.

[5] Robyn Speer et al., ConceptNet 5.5: An Open Multilingual Graph of General Knowledge, arXiv:1612.03975

[6] MIT medialab, URL: https://conceptnet.io/](https://image.slidesharecdn.com/abductivecommonsensereasoning-200710125841/85/Abductive-commonsense-reasoning-29-320.jpg)