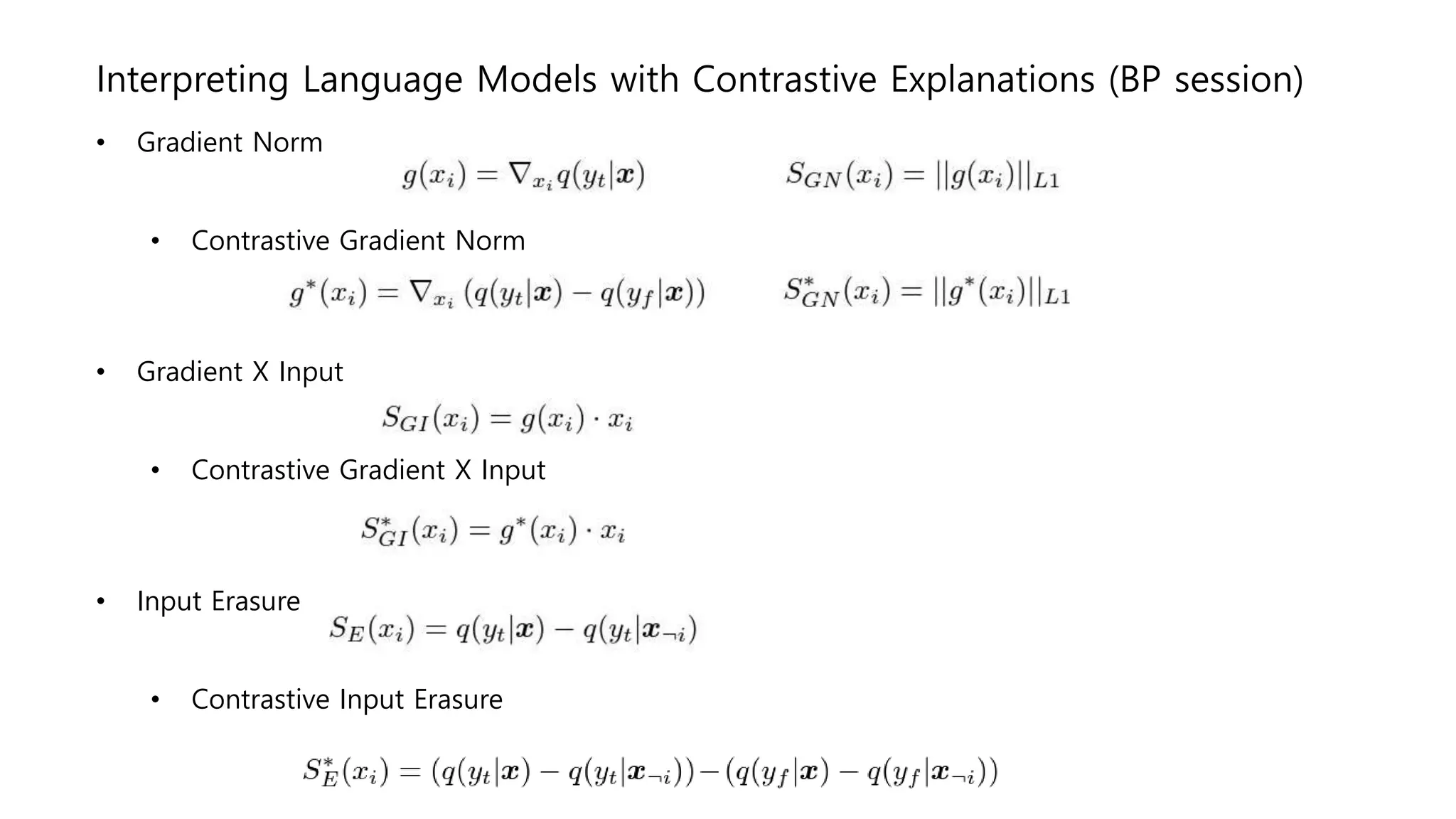

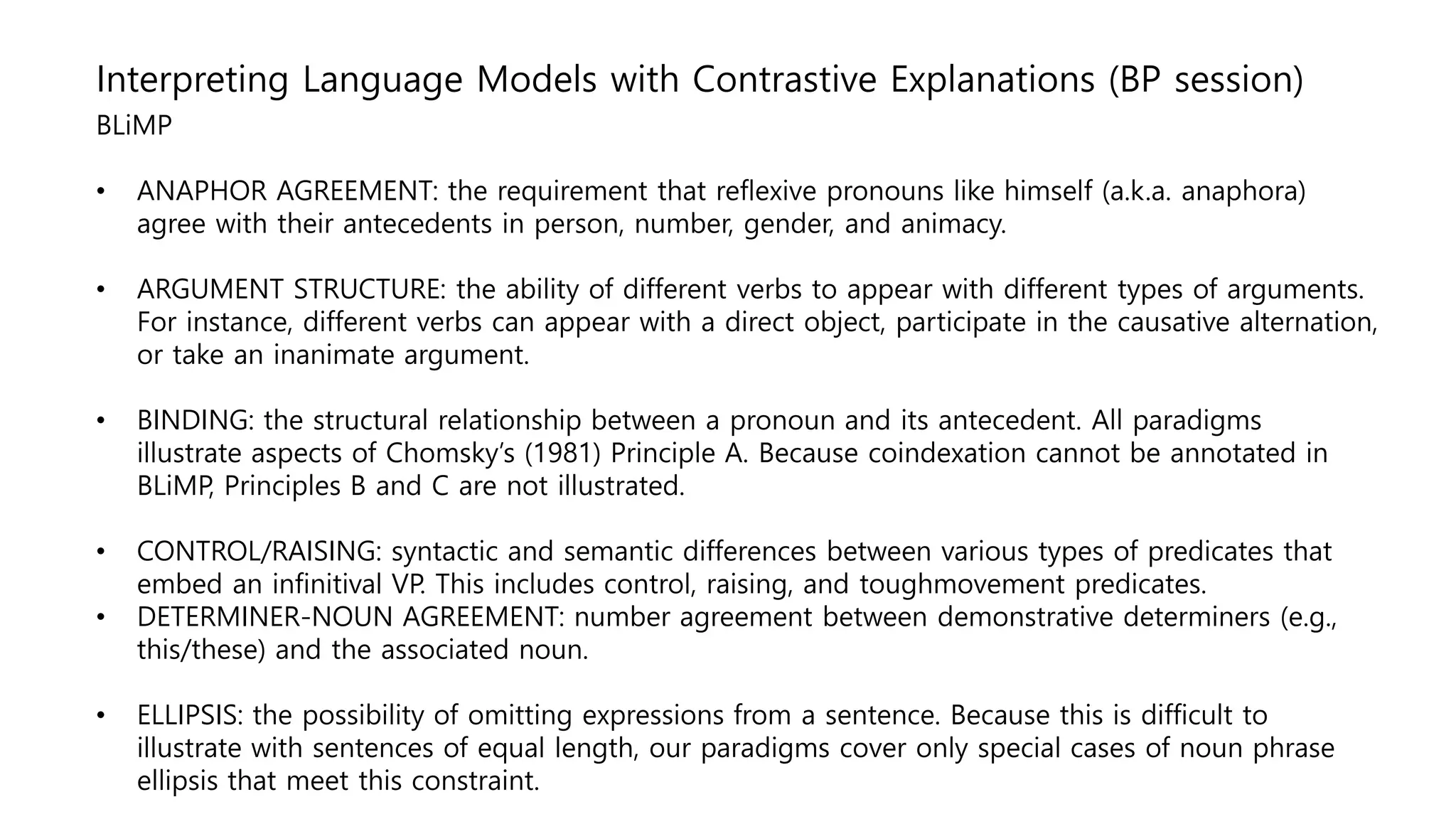

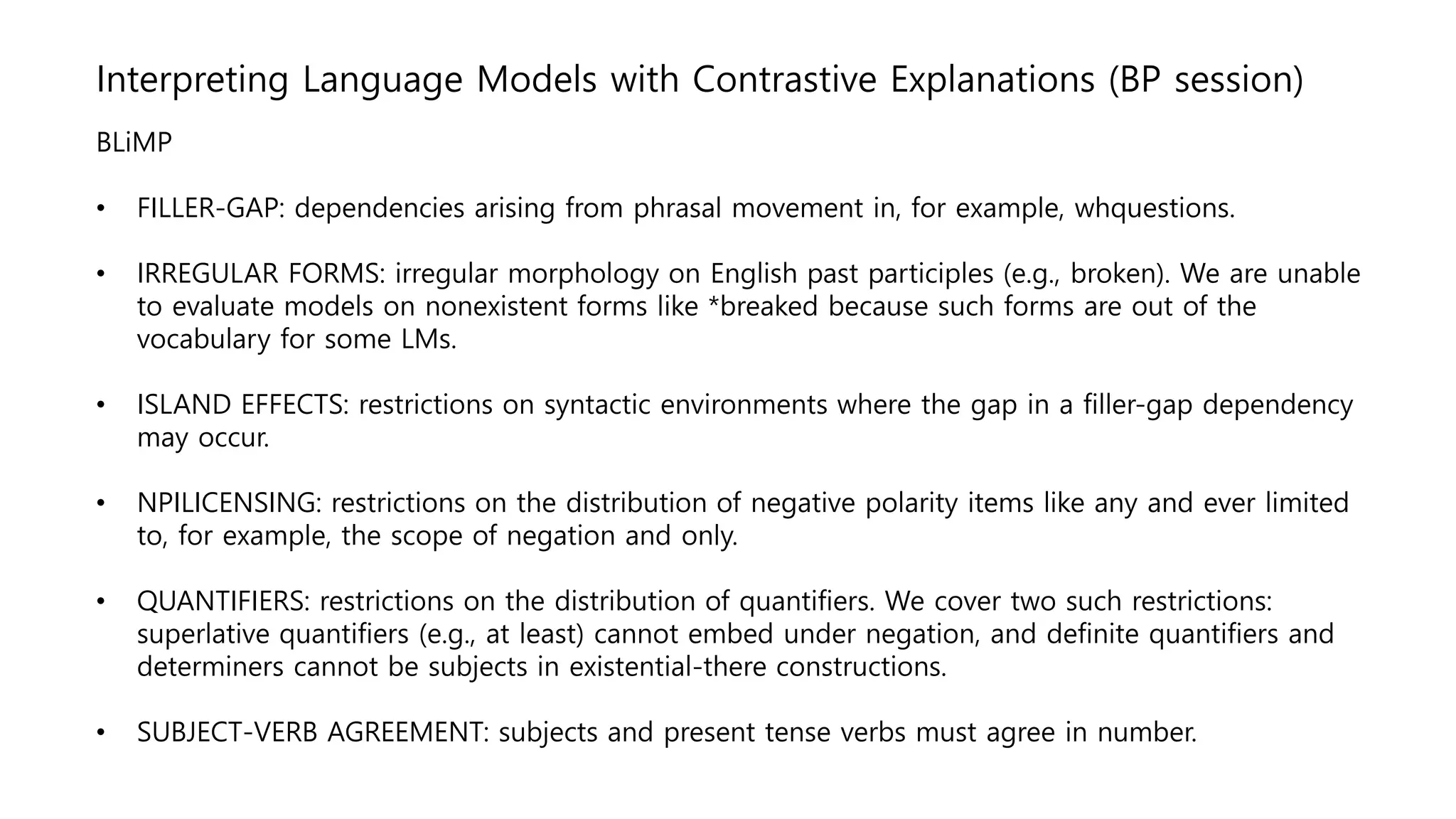

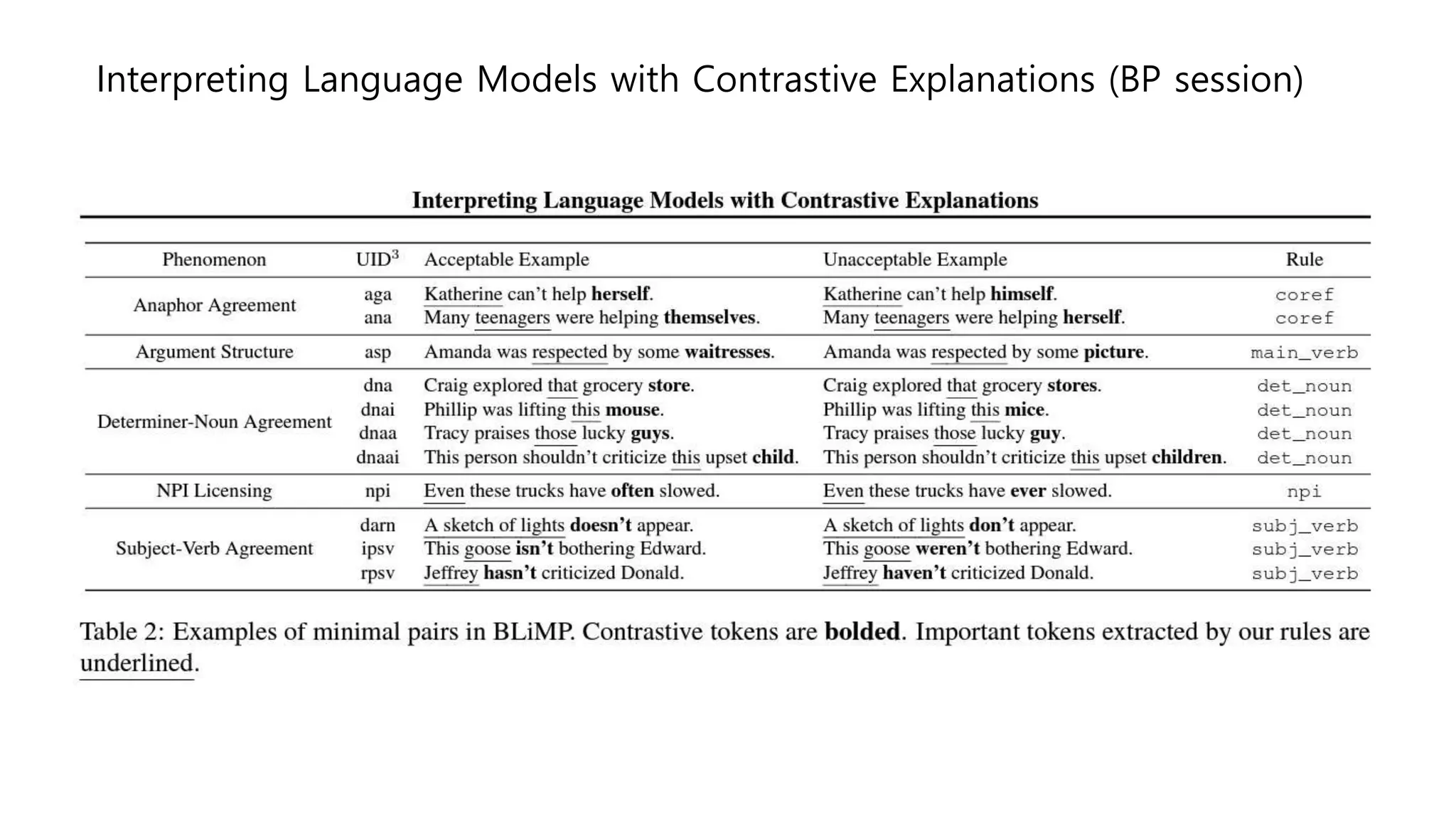

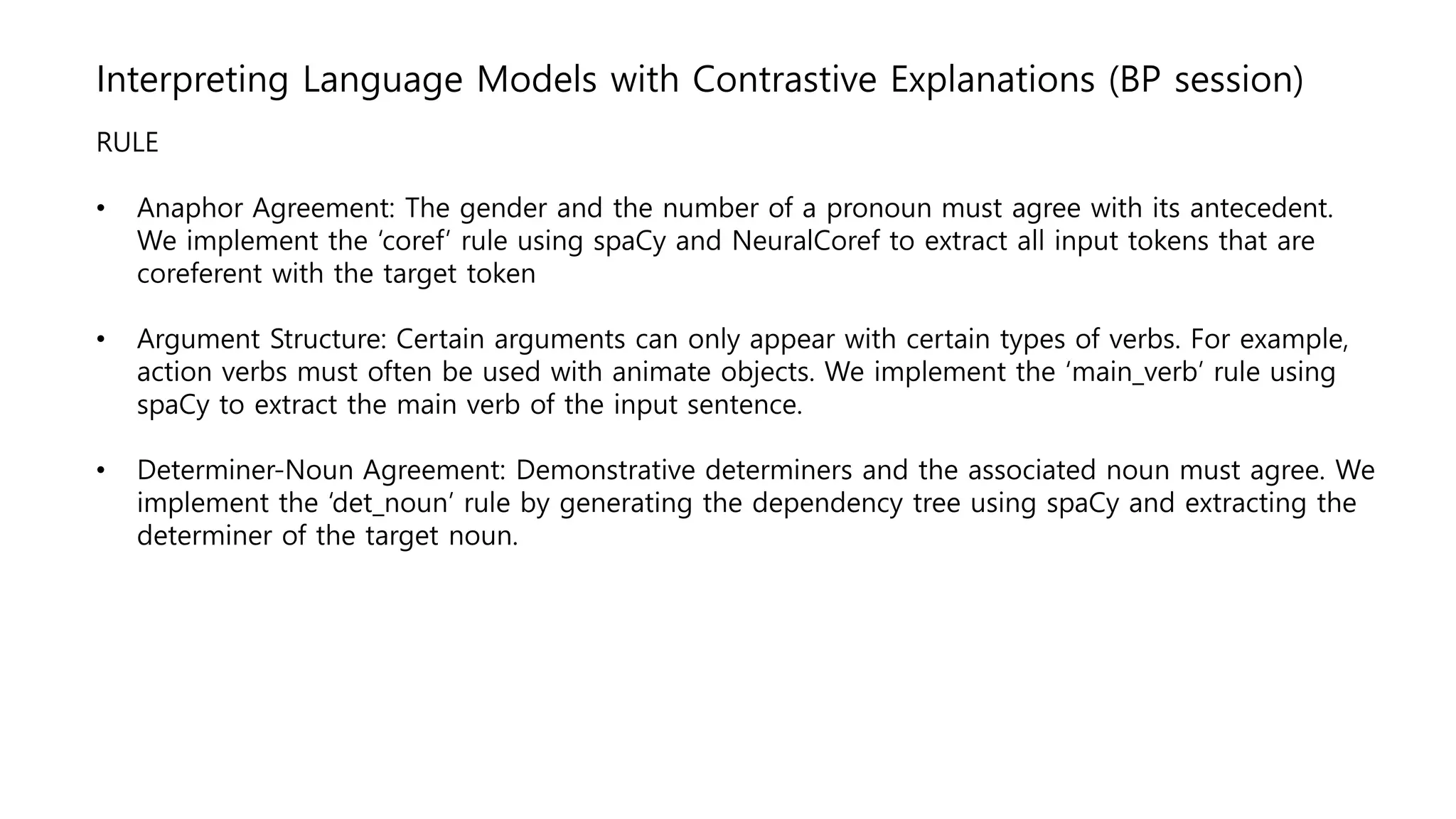

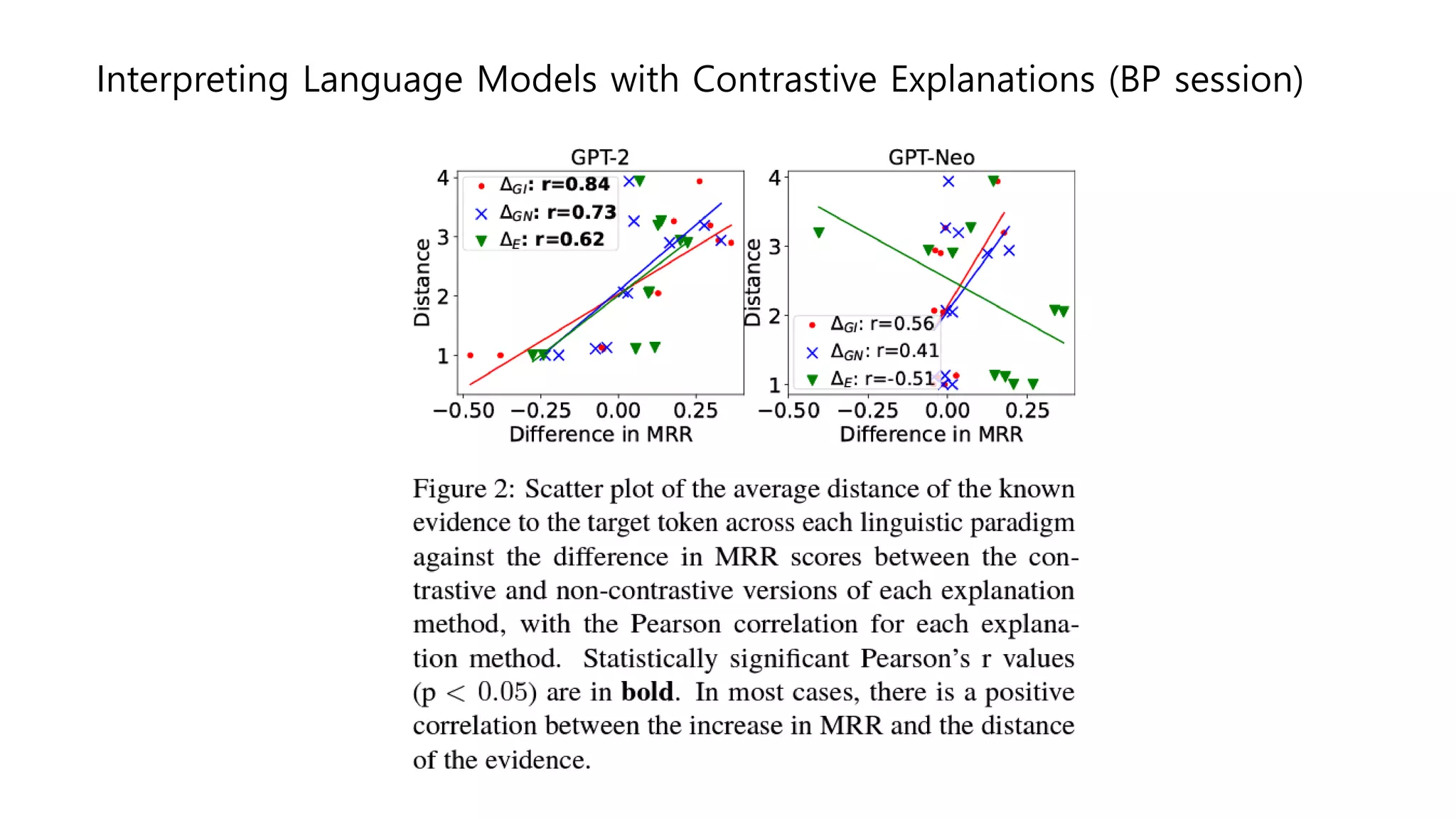

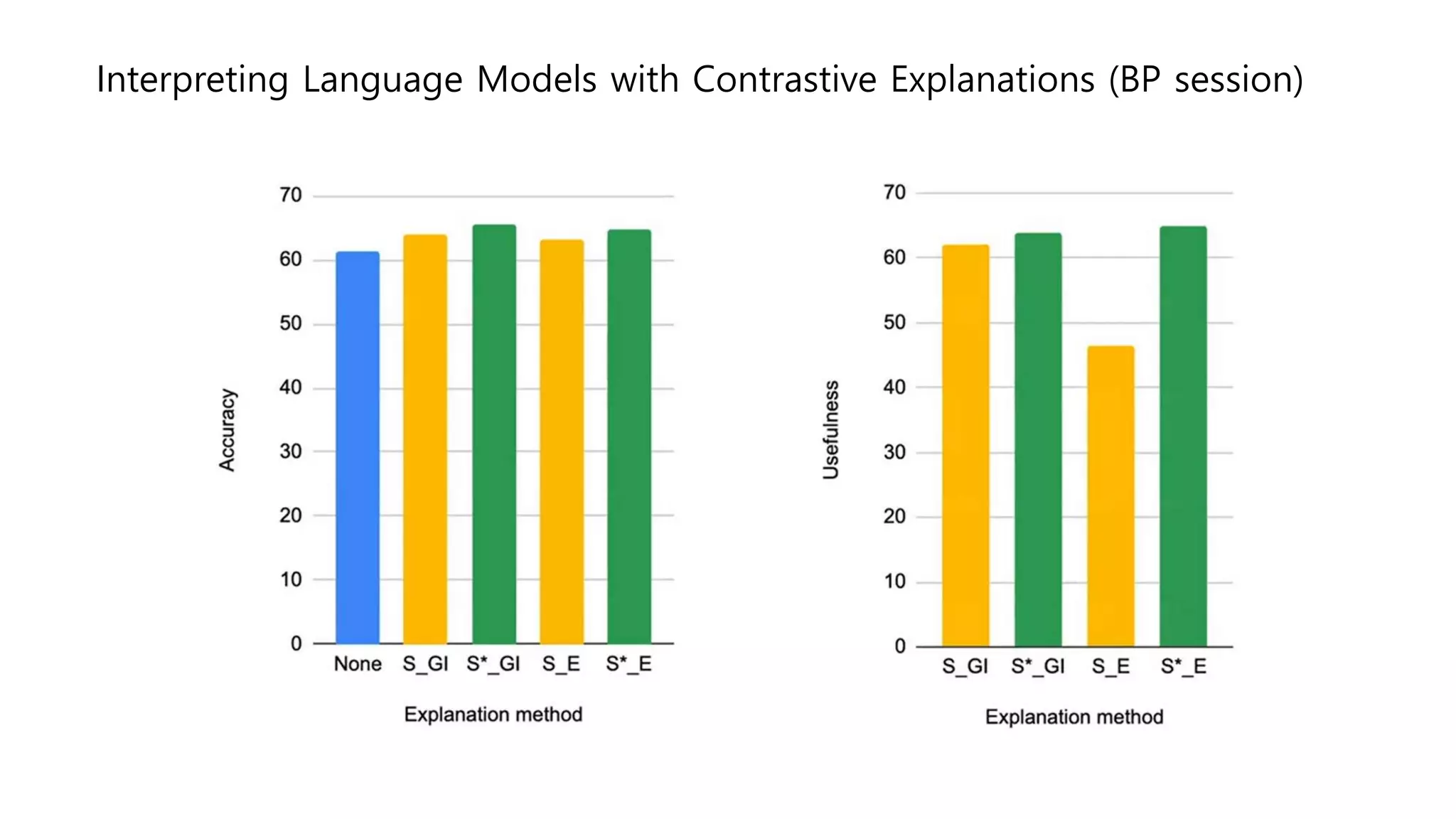

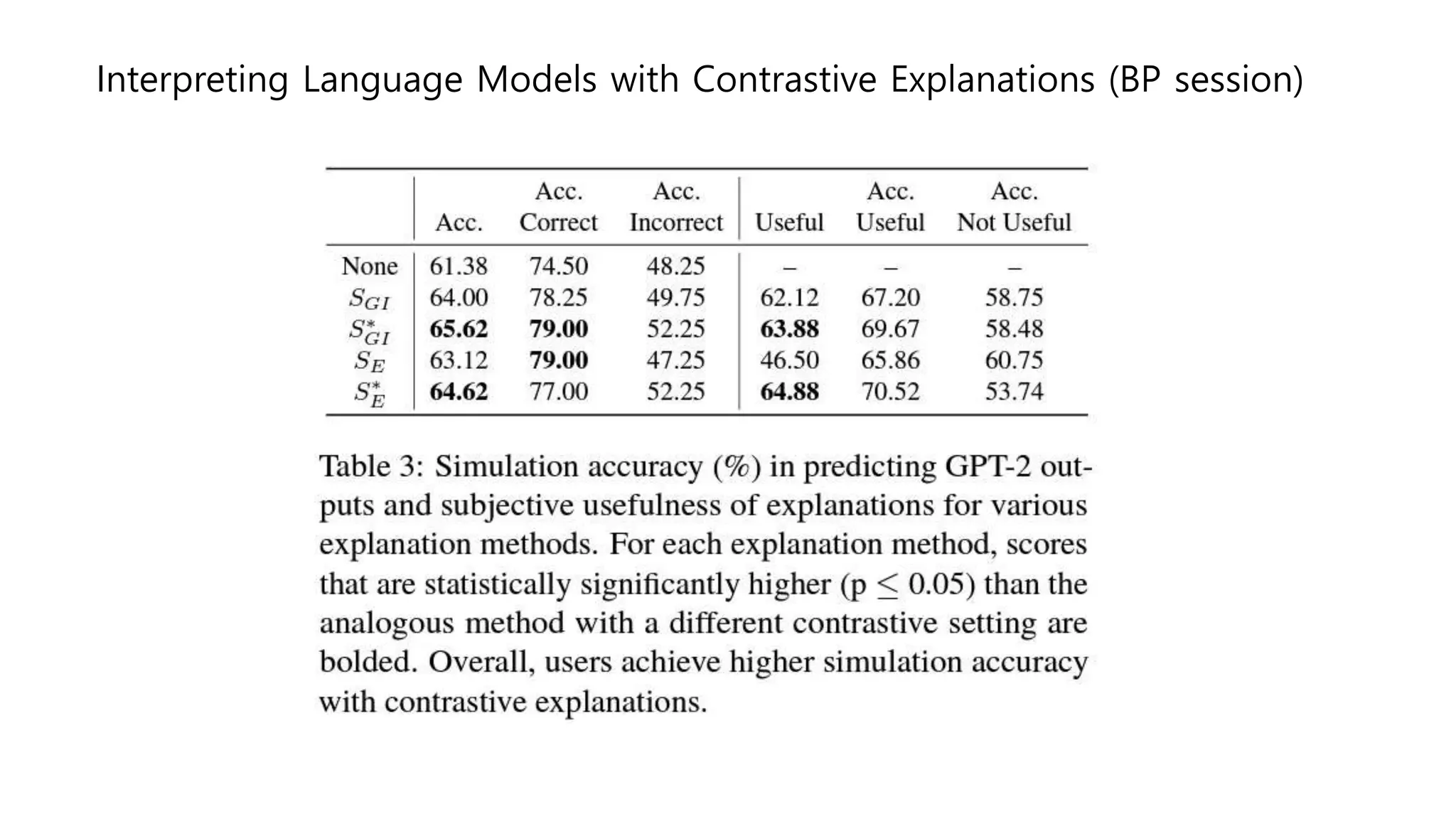

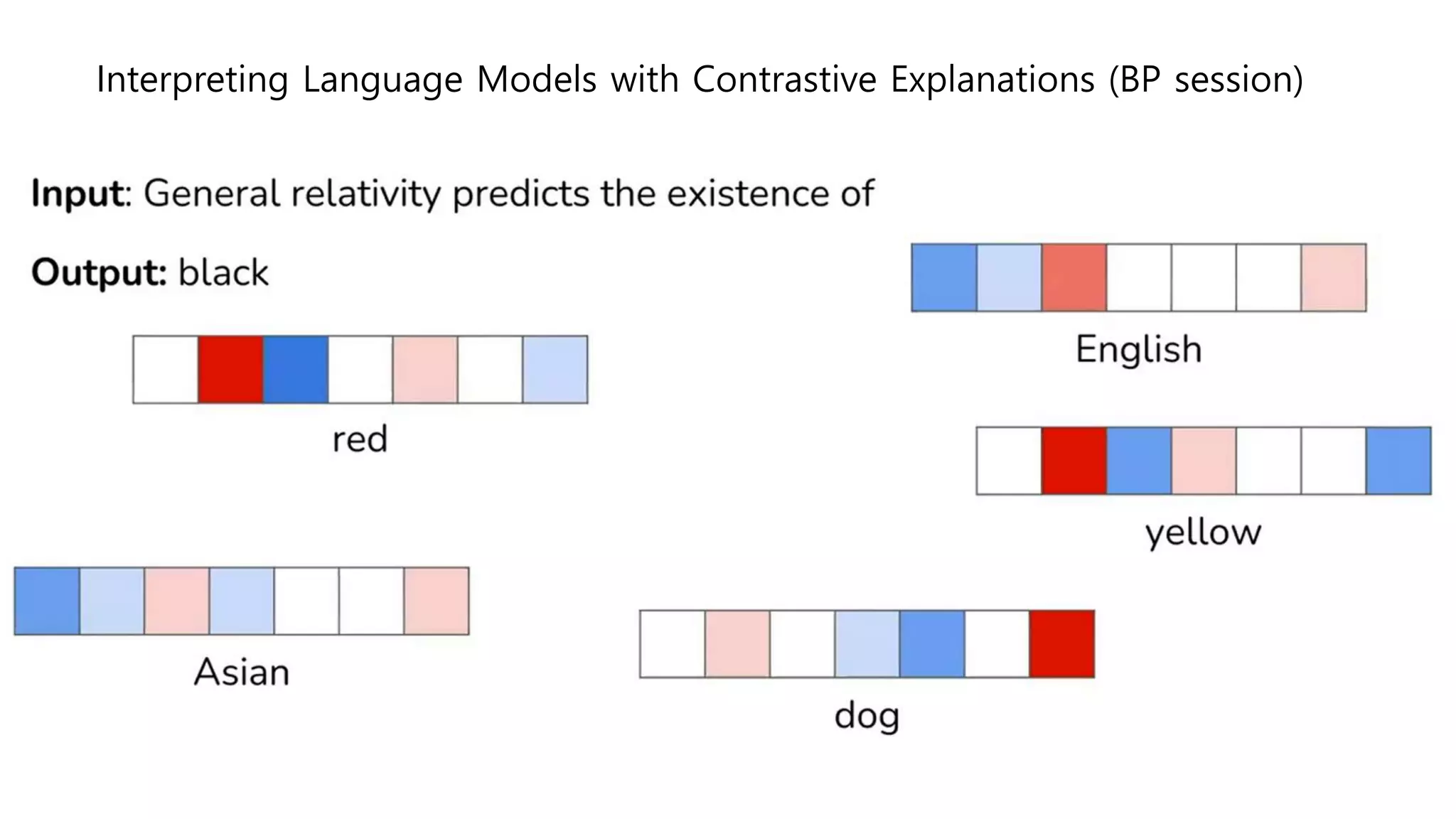

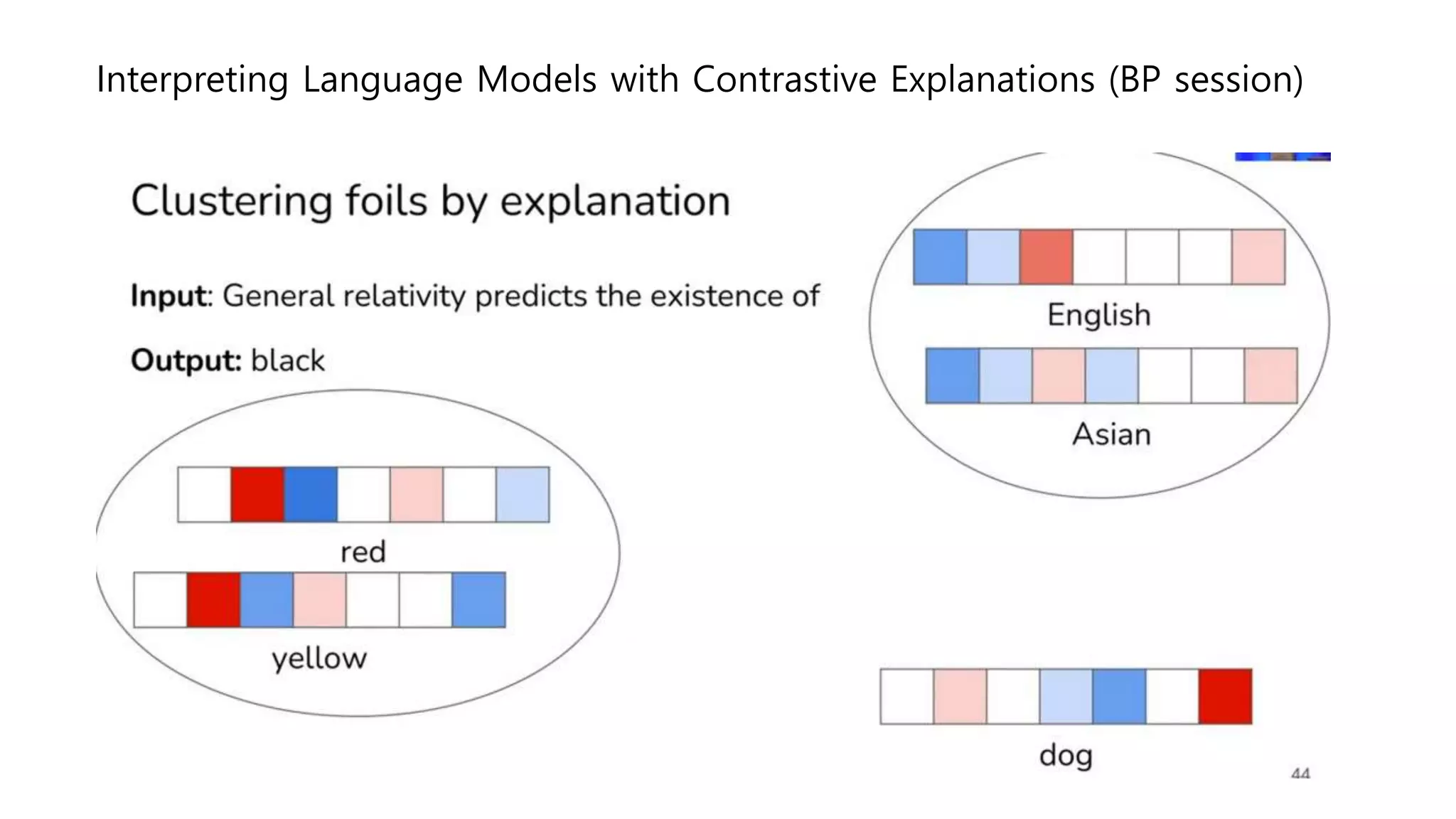

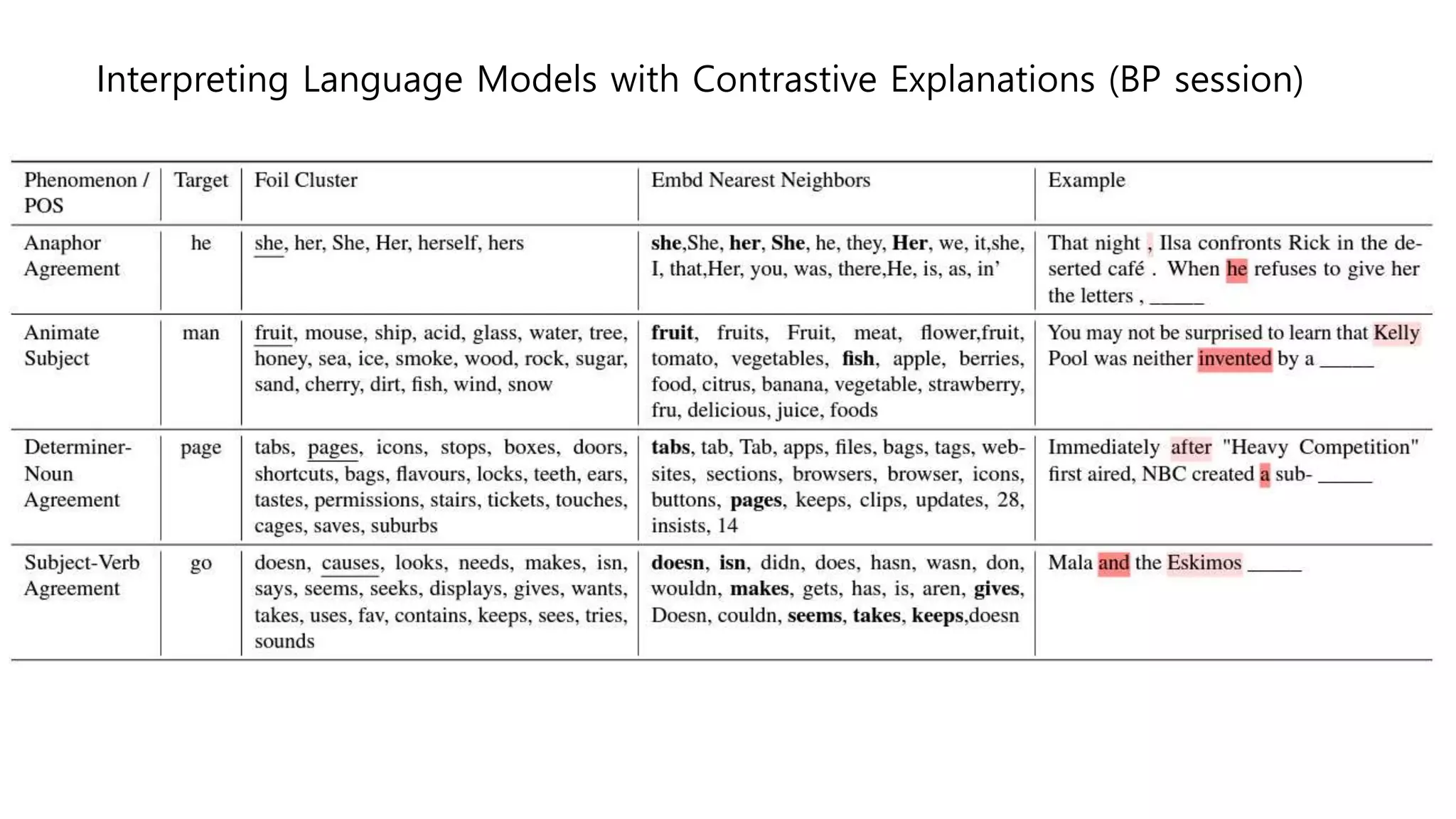

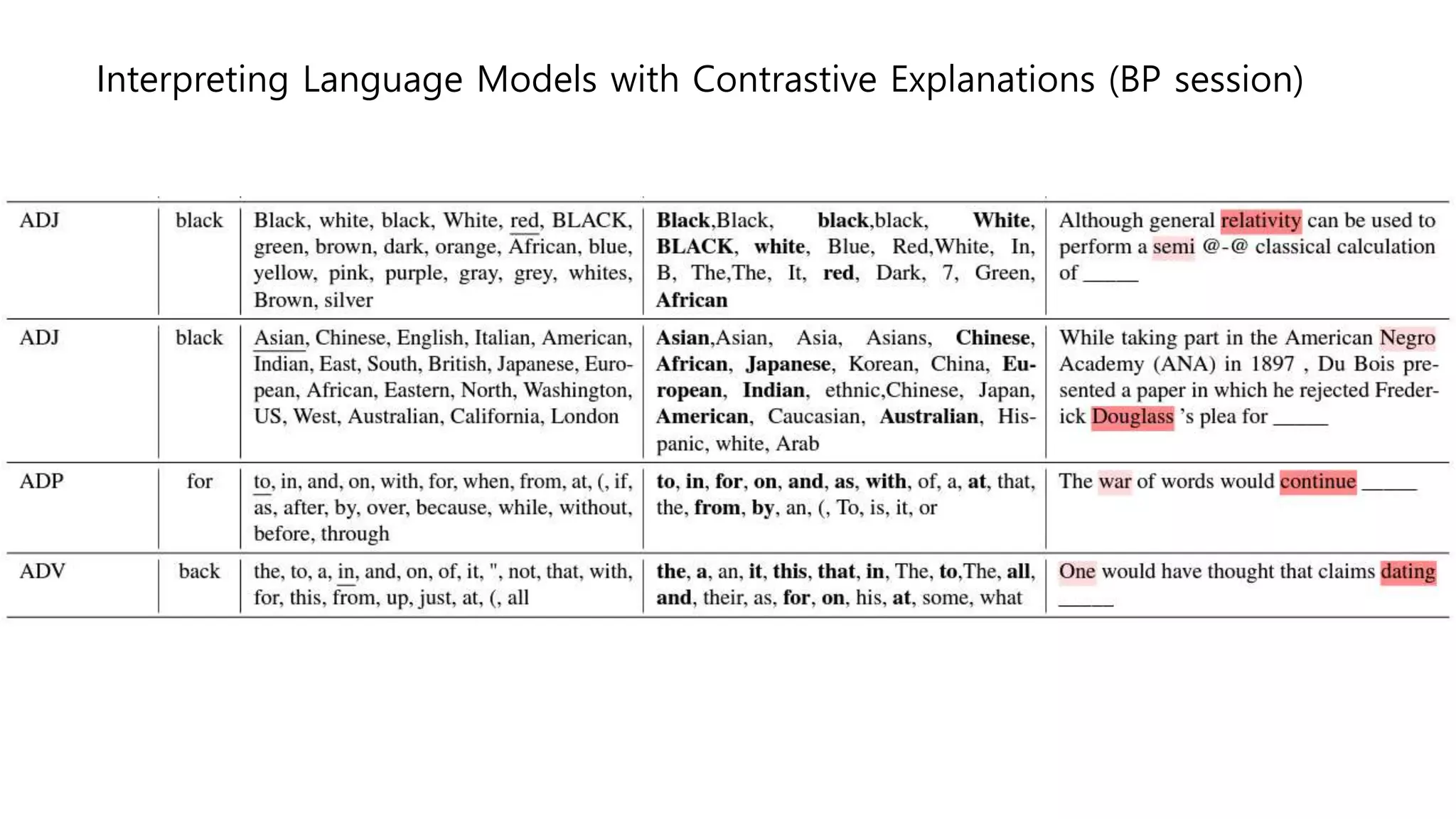

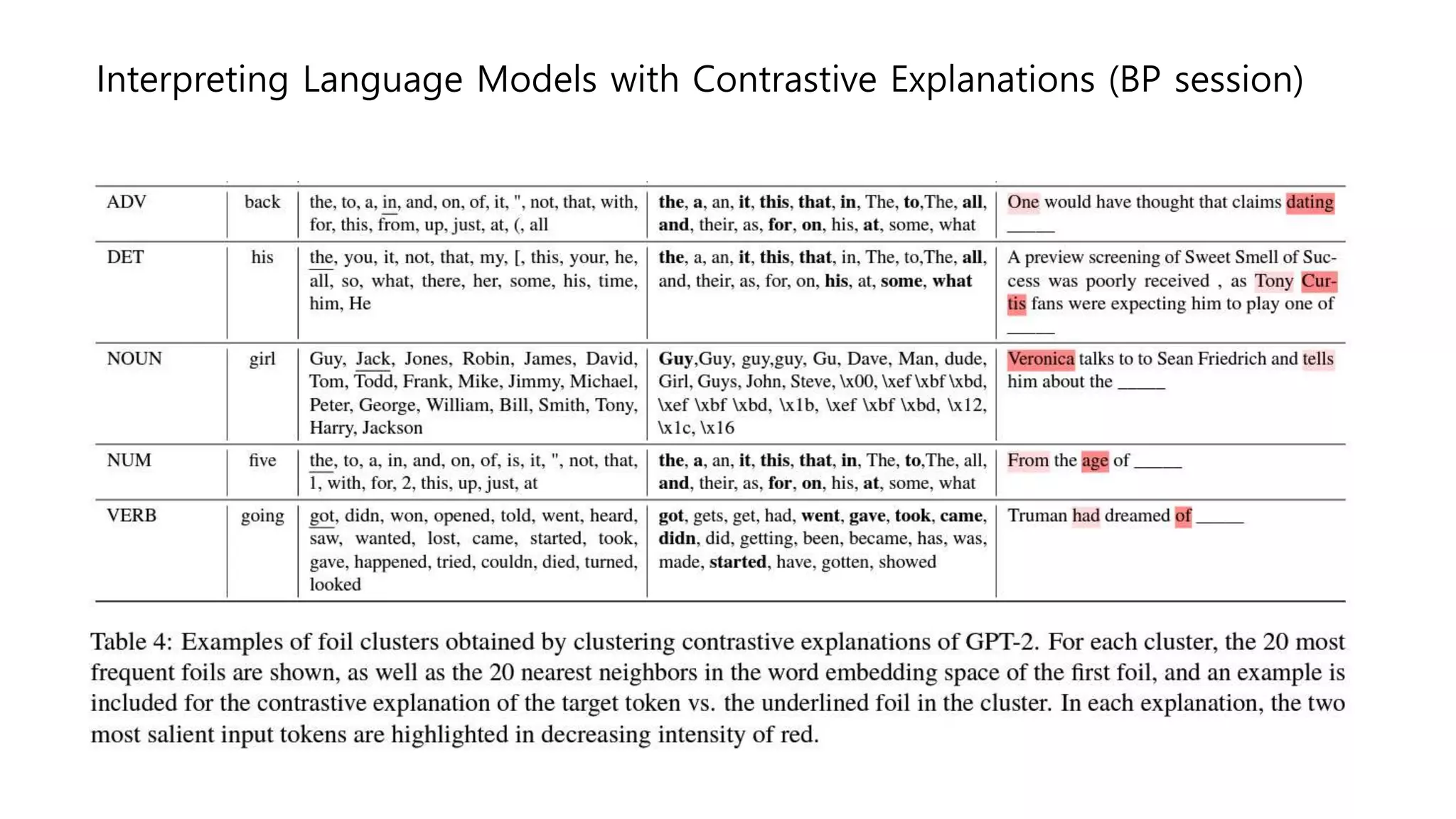

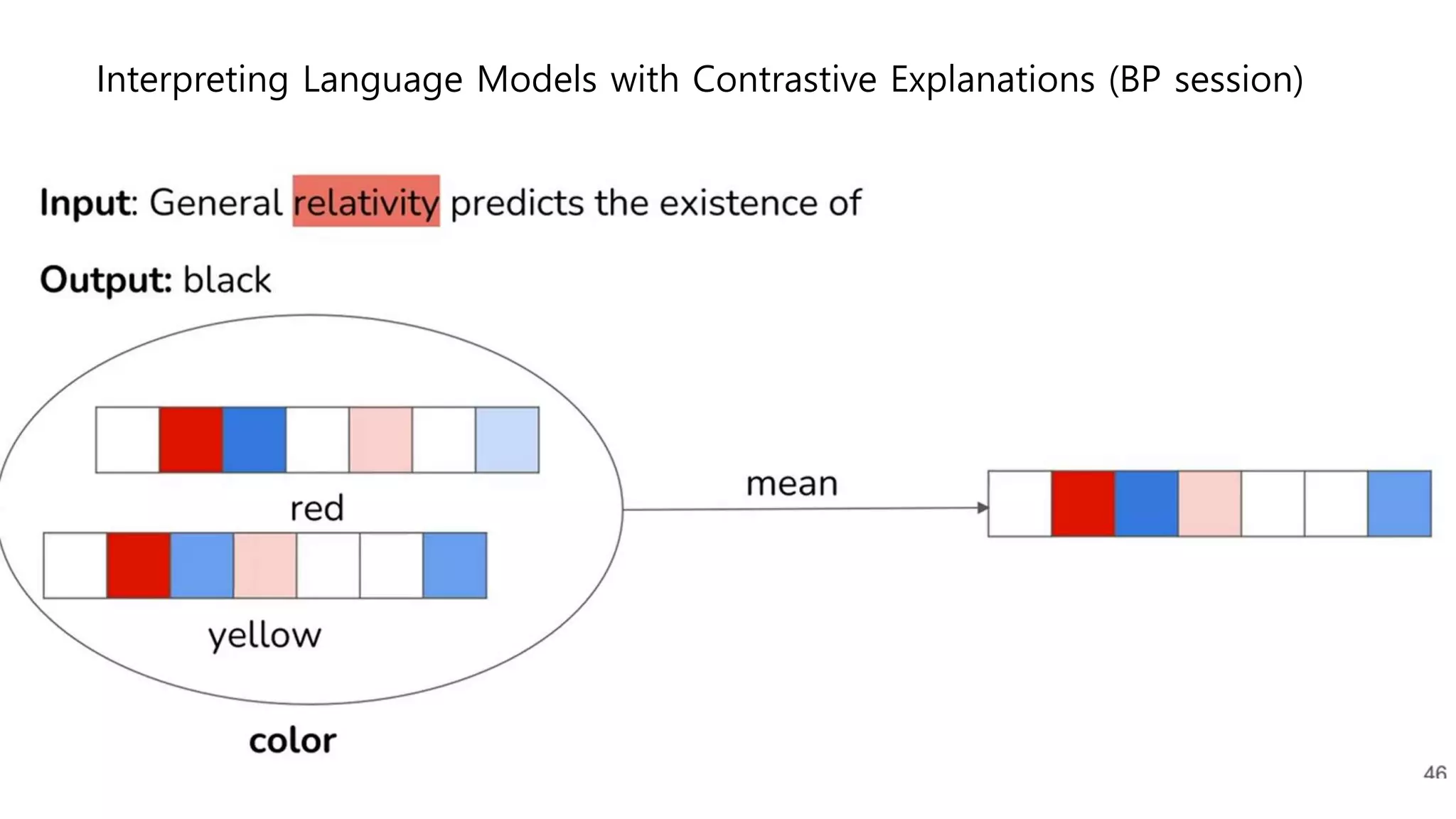

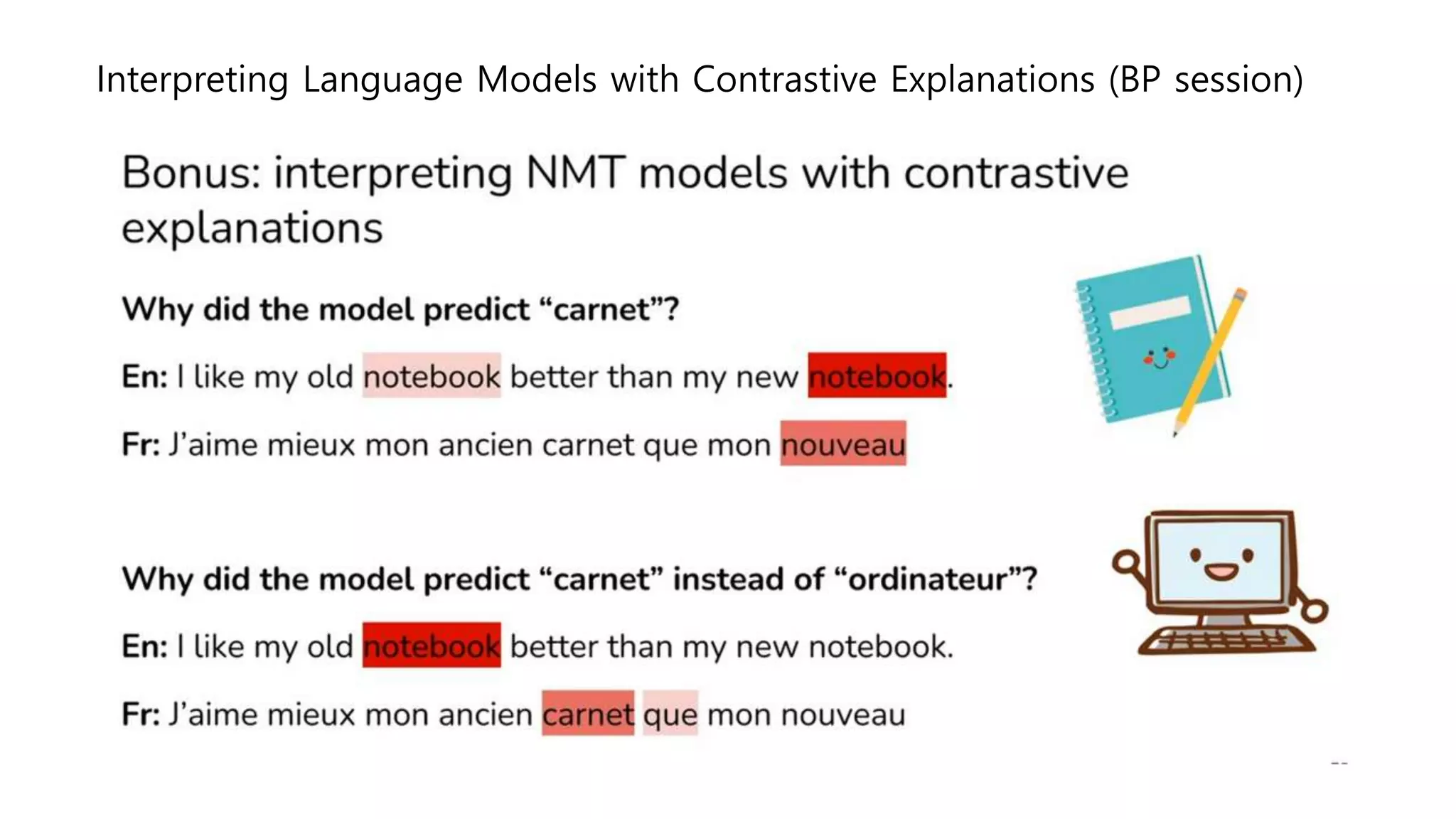

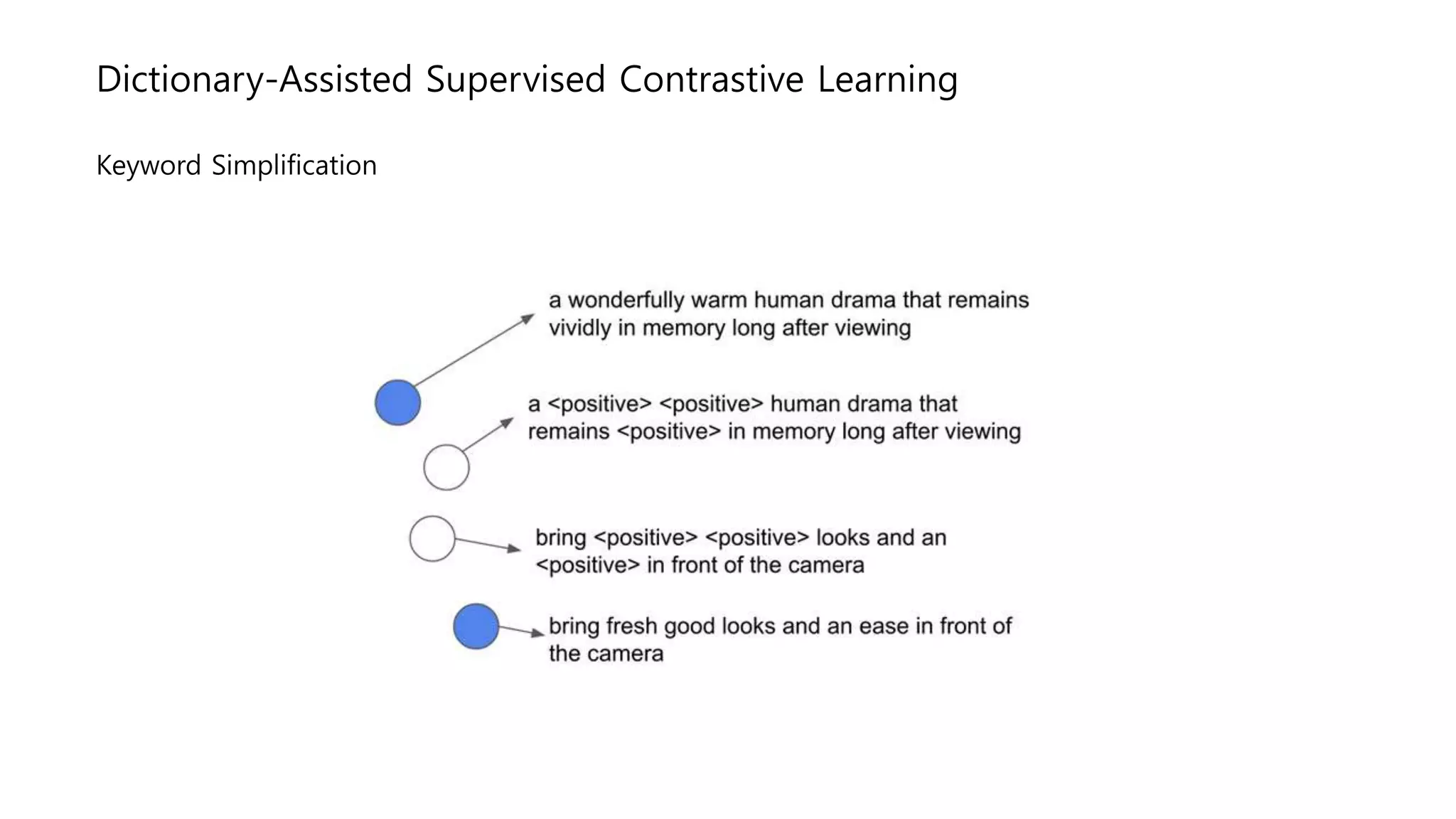

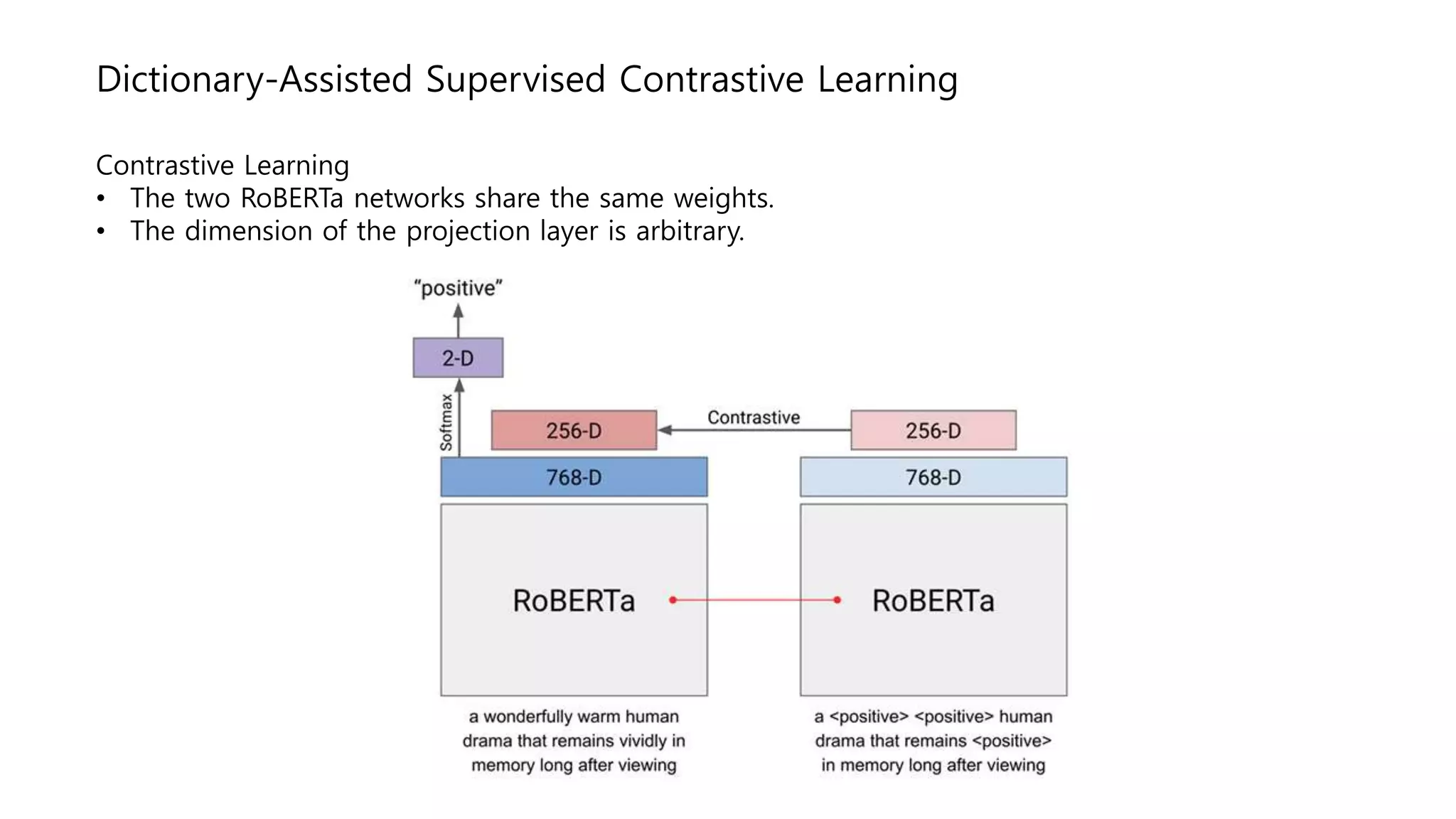

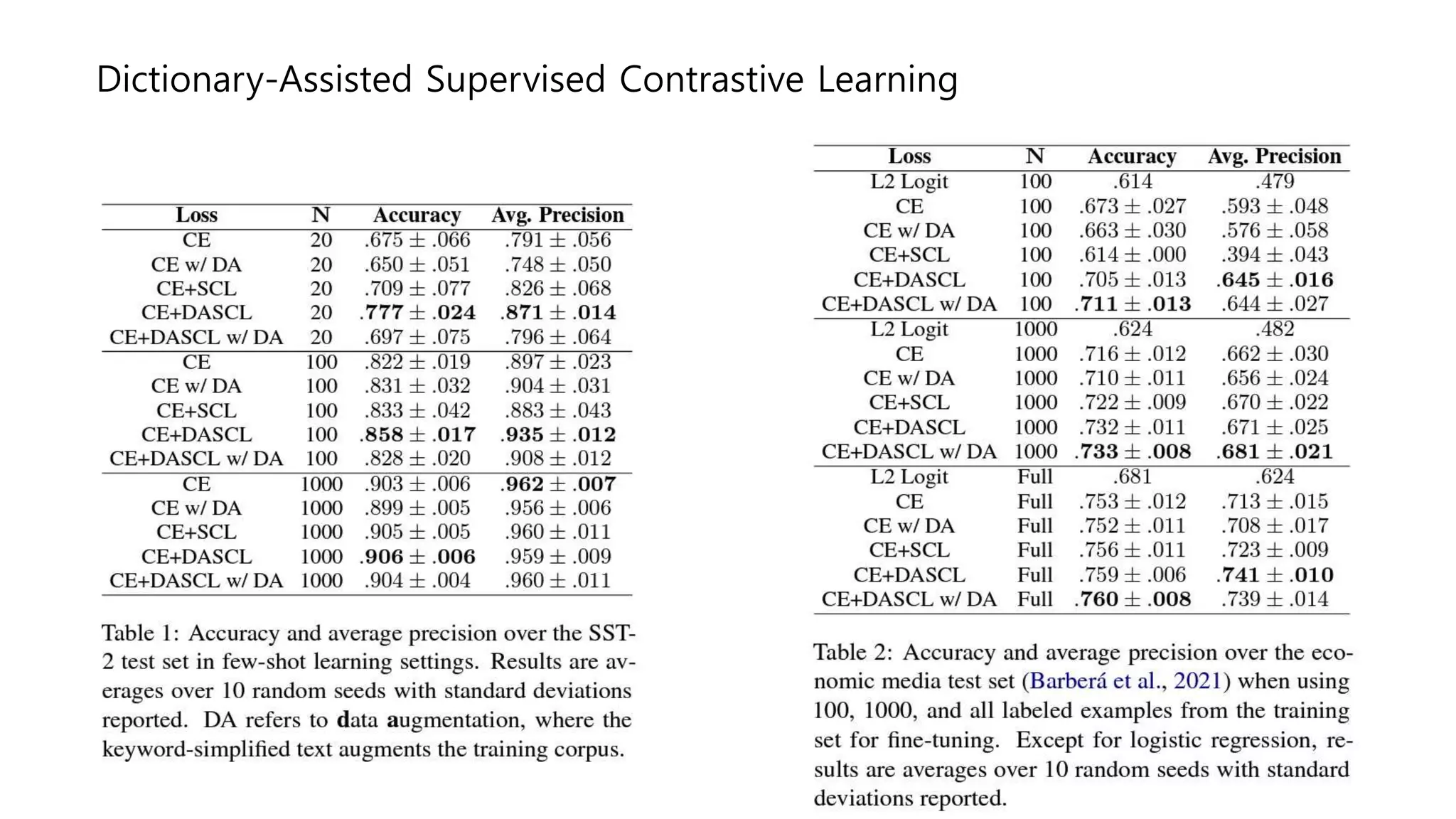

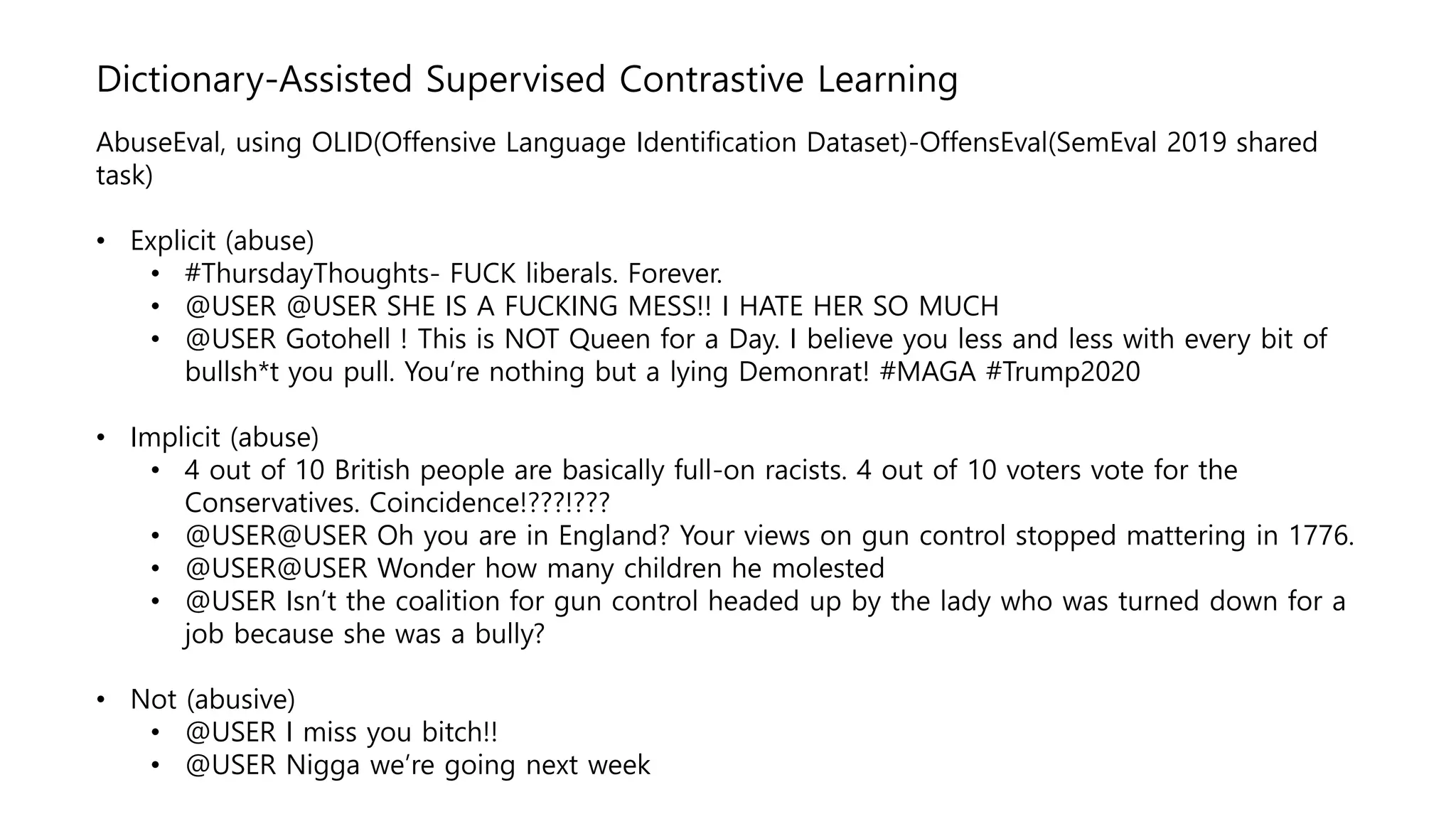

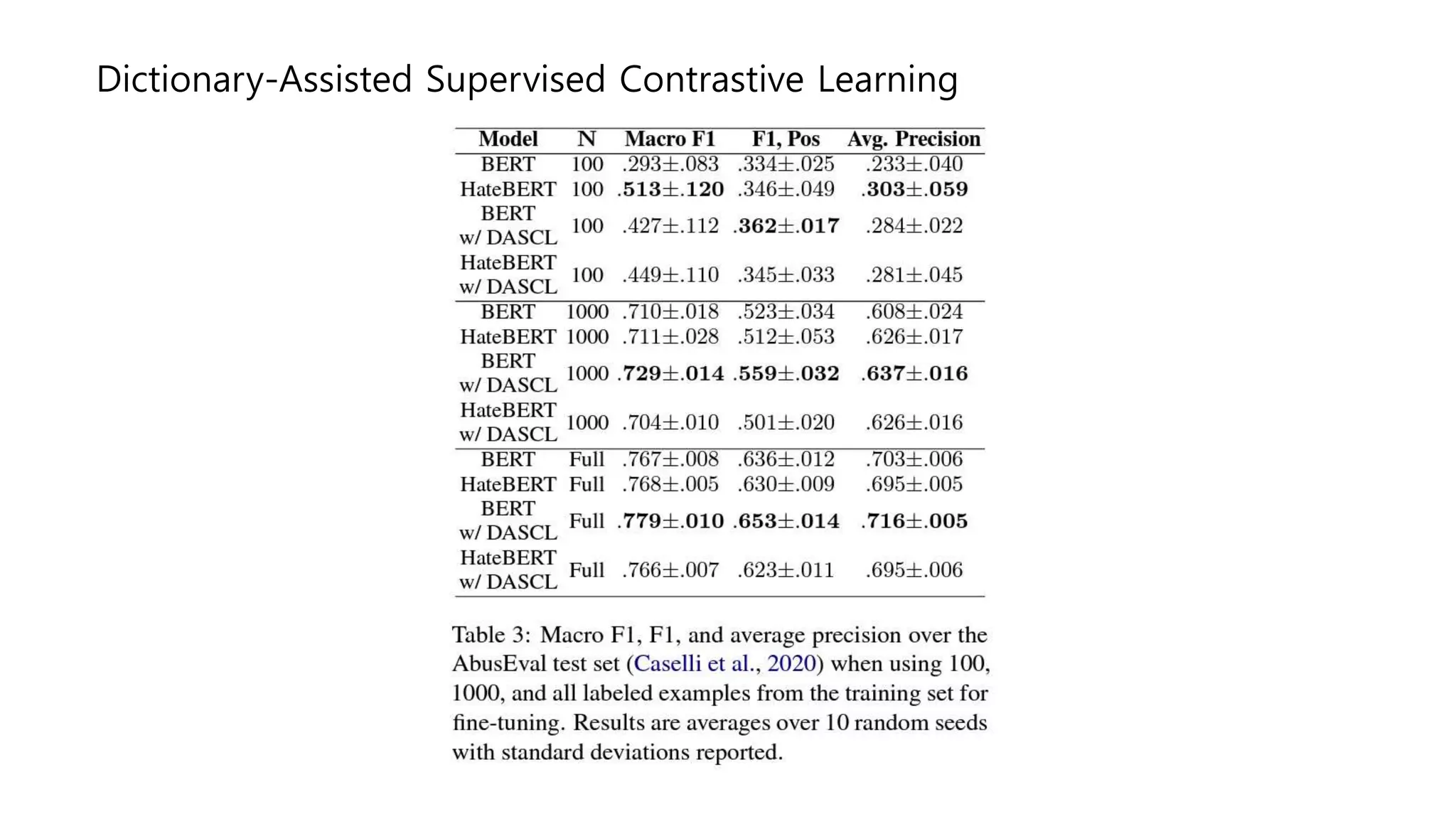

Dictionary-assisted supervised contrastive learning (DASCL) is a method that leverages specialized dictionaries when fine-tuning pretrained language models. It combines cross-entropy loss with a supervised contrastive learning objective to improve classification performance, particularly in few-shot learning settings. Evaluations on tasks such as sentiment analysis and abuse detection found that DASCL outperforms both cross-entropy alone and supervised contrastive learning without dictionaries. Interpretability techniques such as contrastive explanations can provide insight into why a model makes a prediction by asking why it chose one output rather than a specific alternative.
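To make the idea of combining the two objectives concrete, here is a minimal pure-Python sketch. It is not the DASCL implementation: the weighted sum with `lam`, the `temperature` value, and all function names are assumptions made for illustration, and the step where dictionaries are used to build the examples being contrasted is not shown.

```python
import math

def cross_entropy(logits, labels):
    """Mean softmax cross-entropy over a batch of logit vectors."""
    total = 0.0
    for row, y in zip(logits, labels):
        m = max(row)  # subtract the max for numerical stability
        log_z = m + math.log(sum(math.exp(v - m) for v in row))
        total += log_z - row[y]
    return total / len(labels)

def _normalize(v):
    """Scale a vector to unit length (zero vectors are left unchanged)."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]

def supervised_contrastive(embeddings, labels, temperature=0.1):
    """Supervised contrastive loss: examples sharing a label are positives."""
    z = [_normalize(v) for v in embeddings]
    n = len(z)
    total, anchors = 0.0, 0
    for i in range(n):
        positives = [j for j in range(n) if j != i and labels[j] == labels[i]]
        if not positives:
            continue  # an anchor without positives contributes nothing
        sims = {j: sum(a * b for a, b in zip(z[i], z[j])) / temperature
                for j in range(n) if j != i}
        m = max(sims.values())
        log_denom = m + math.log(sum(math.exp(s - m) for s in sims.values()))
        total += -sum(sims[j] - log_denom for j in positives) / len(positives)
        anchors += 1
    return total / anchors if anchors else 0.0

def combined_loss(logits, embeddings, labels, lam=0.5, temperature=0.1):
    """Assumed weighting: (1 - lam) * cross-entropy + lam * contrastive."""
    ce = cross_entropy(logits, labels)
    scl = supervised_contrastive(embeddings, labels, temperature)
    return (1.0 - lam) * ce + lam * scl
```

With `lam=0.0` this reduces to plain cross-entropy fine-tuning, and the contrastive term becomes small when embeddings of same-label examples are close together and far from other classes.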

![Interpreting Language Models with Contrastive Explanations (BP session)](https://image.slidesharecdn.com/2023emnlpdaysan-230704104602-3883bffd/75/2023-EMNLP-day_san-pptx-9-2048.jpg)

Common Approach

• Why did the LM predict [something]?

• Why did the LM predict “barking”?

• Input: Can you stop the dog from

• Output: barking

Contrastive explanations are more intuitive

• Why did the LM predict [target] instead of [foil]?

• Why did the LM predict “barking” instead of “crying”?

• Input: Can you stop the dog from

• Output: barking
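The target-versus-foil question can be illustrated with a small erasure-style sketch: remove each input token in turn and measure how much the gap between the target score and the foil score shrinks. Erasure is only one possible way to do this and is assumed here for illustration, not taken from the slide; `toy_score` and `TOY_WEIGHTS` are hypothetical stand-ins for a real language model's scores.

```python
def contrastive_erasure(tokens, score, target, foil):
    """Attribute score(target) - score(foil) to each token by erasing it."""
    def margin(toks):
        return score(toks, target) - score(toks, foil)

    base = margin(tokens)
    # A large value means the token supports the target over the foil.
    return [(tok, base - margin(tokens[:i] + tokens[i + 1:]))
            for i, tok in enumerate(tokens)]

# Hypothetical toy scorer: hand-picked (input token, candidate) weights.
TOY_WEIGHTS = {
    ("dog", "barking"): 2.0,
    ("dog", "crying"): 0.25,
    ("stop", "barking"): 0.5,
    ("stop", "crying"): 0.5,
}

def toy_score(tokens, candidate):
    """Sum the weights linking each input token to the candidate word."""
    return sum(TOY_WEIGHTS.get((t, candidate), 0.0) for t in tokens)

tokens = "Can you stop the dog from".lower().split()
attributions = contrastive_erasure(tokens, toy_score, "barking", "crying")
for tok, value in attributions:
    print(f"{tok:>5}: {value:+.2f}")
```

In this toy setup "dog" receives the largest contrastive score, while "stop" receives zero: it raises the score of both "barking" and "crying" equally, so it does not explain why one was chosen over the other.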