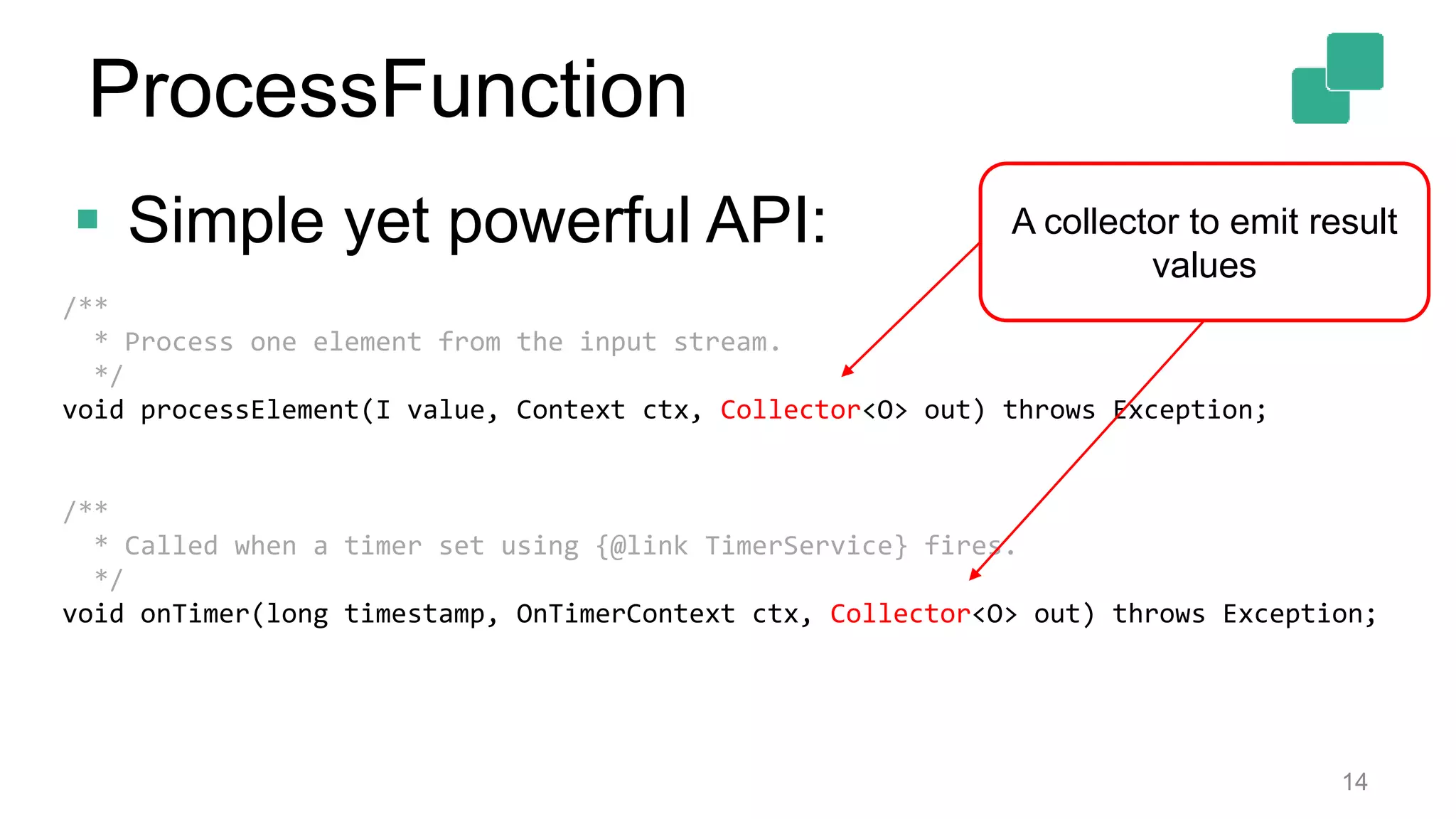

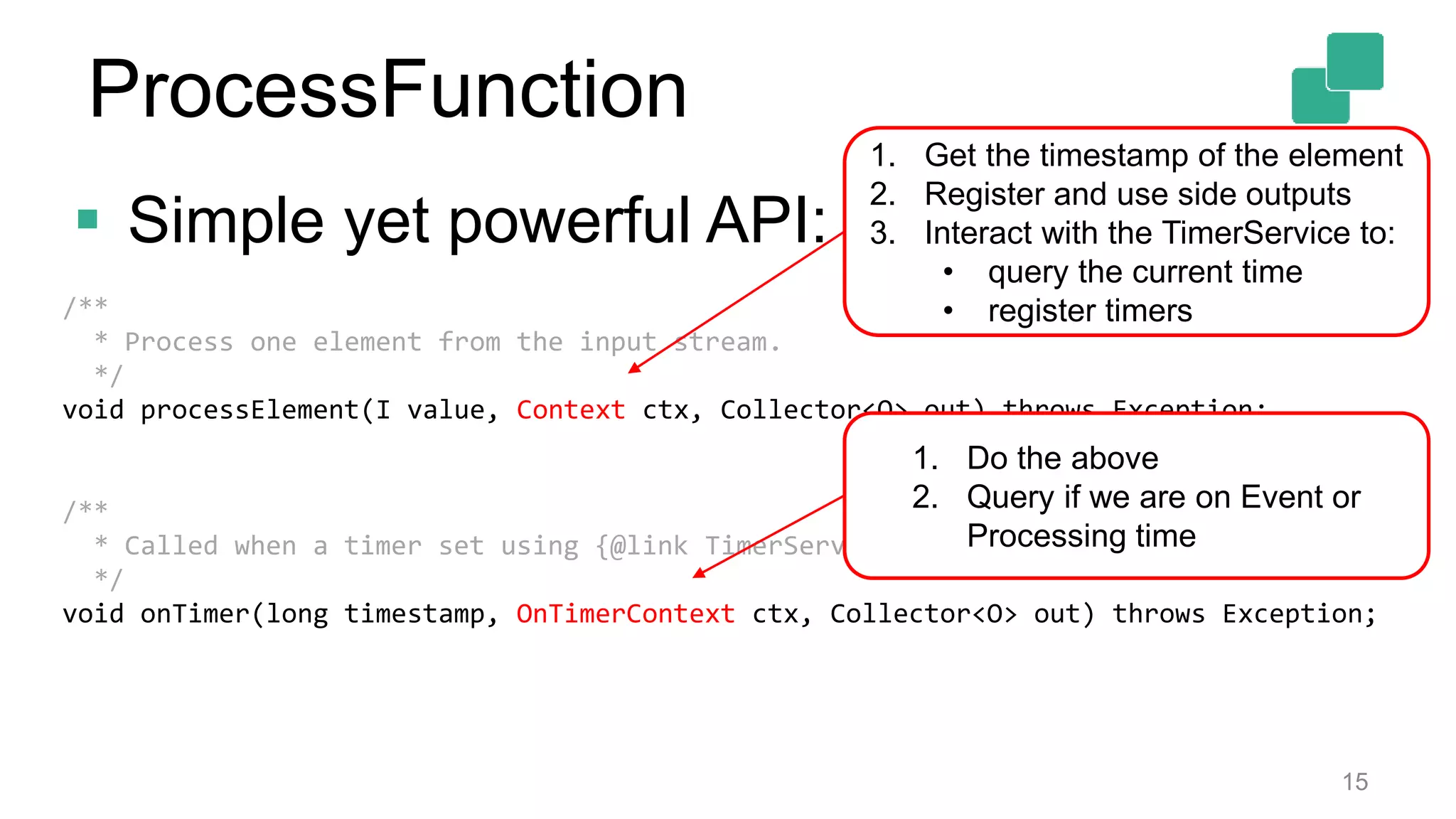

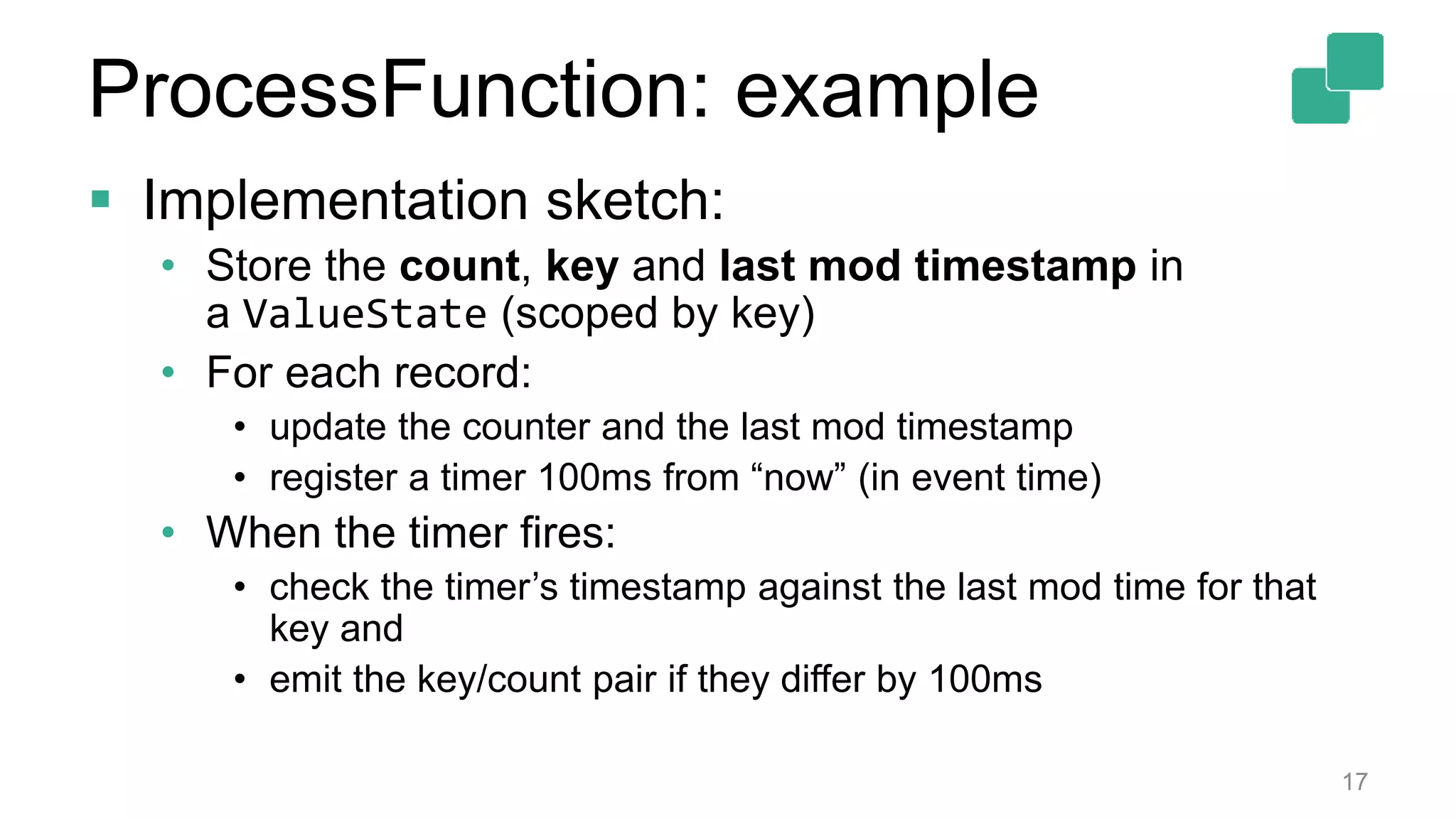

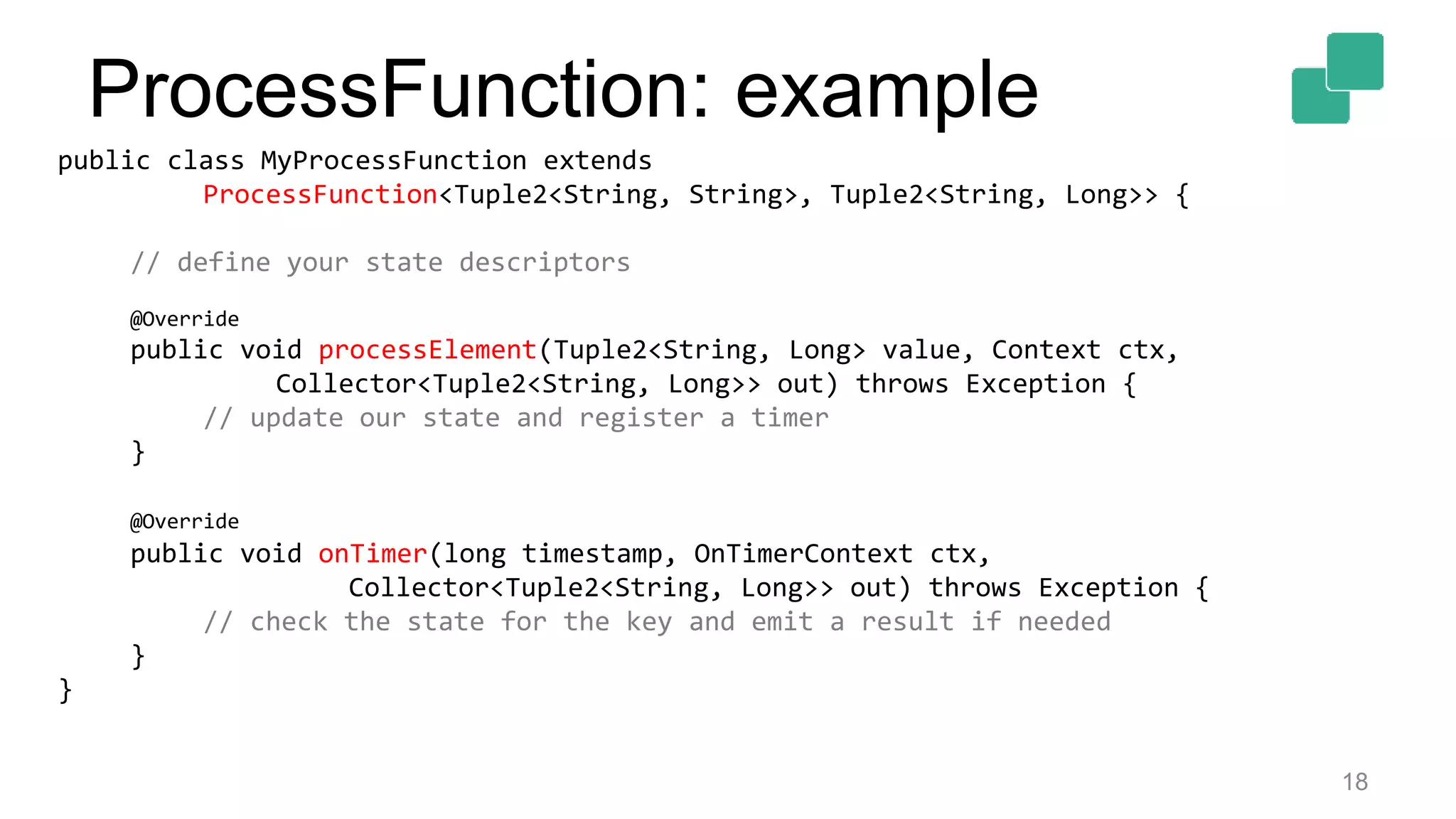

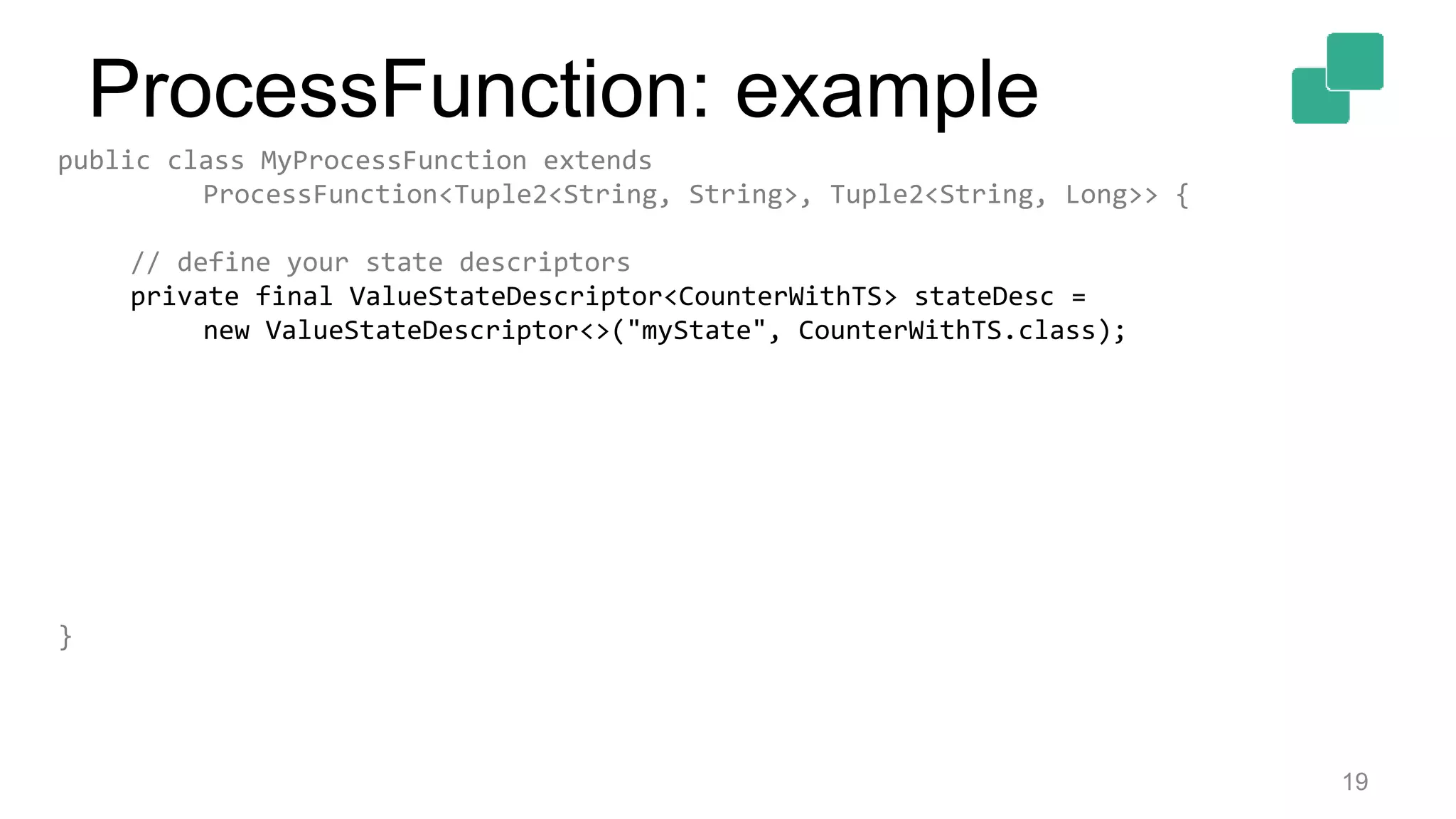

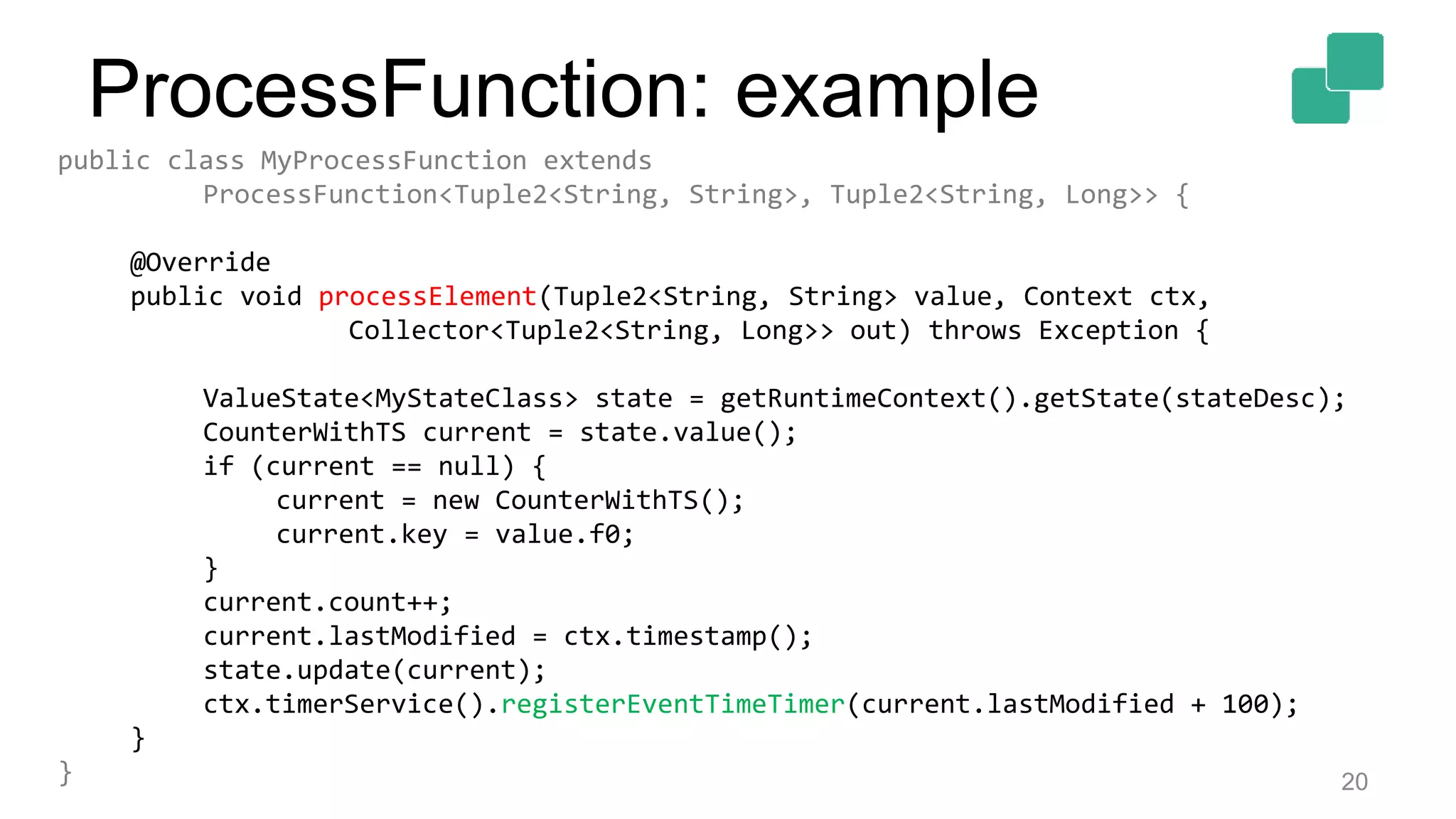

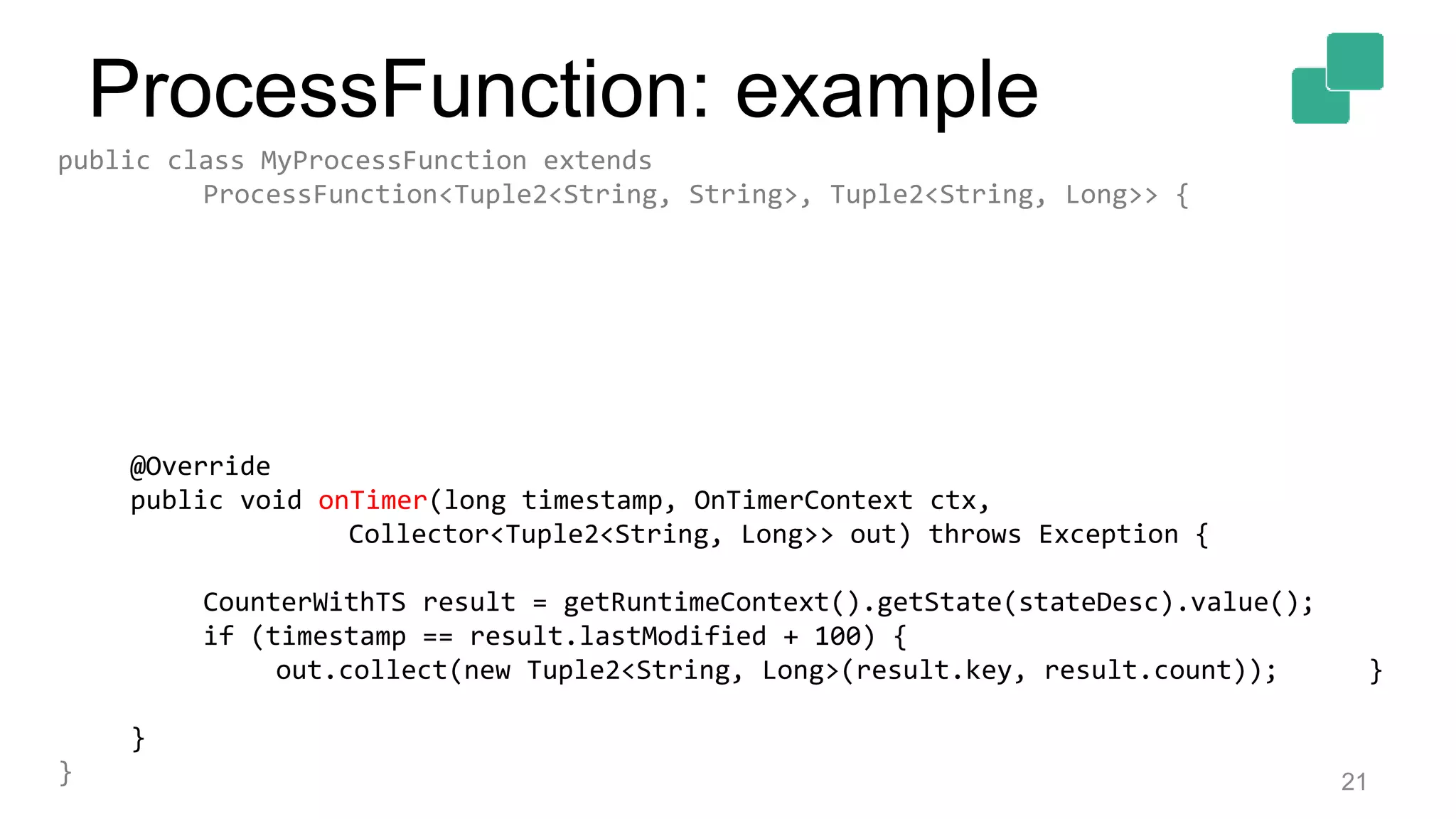

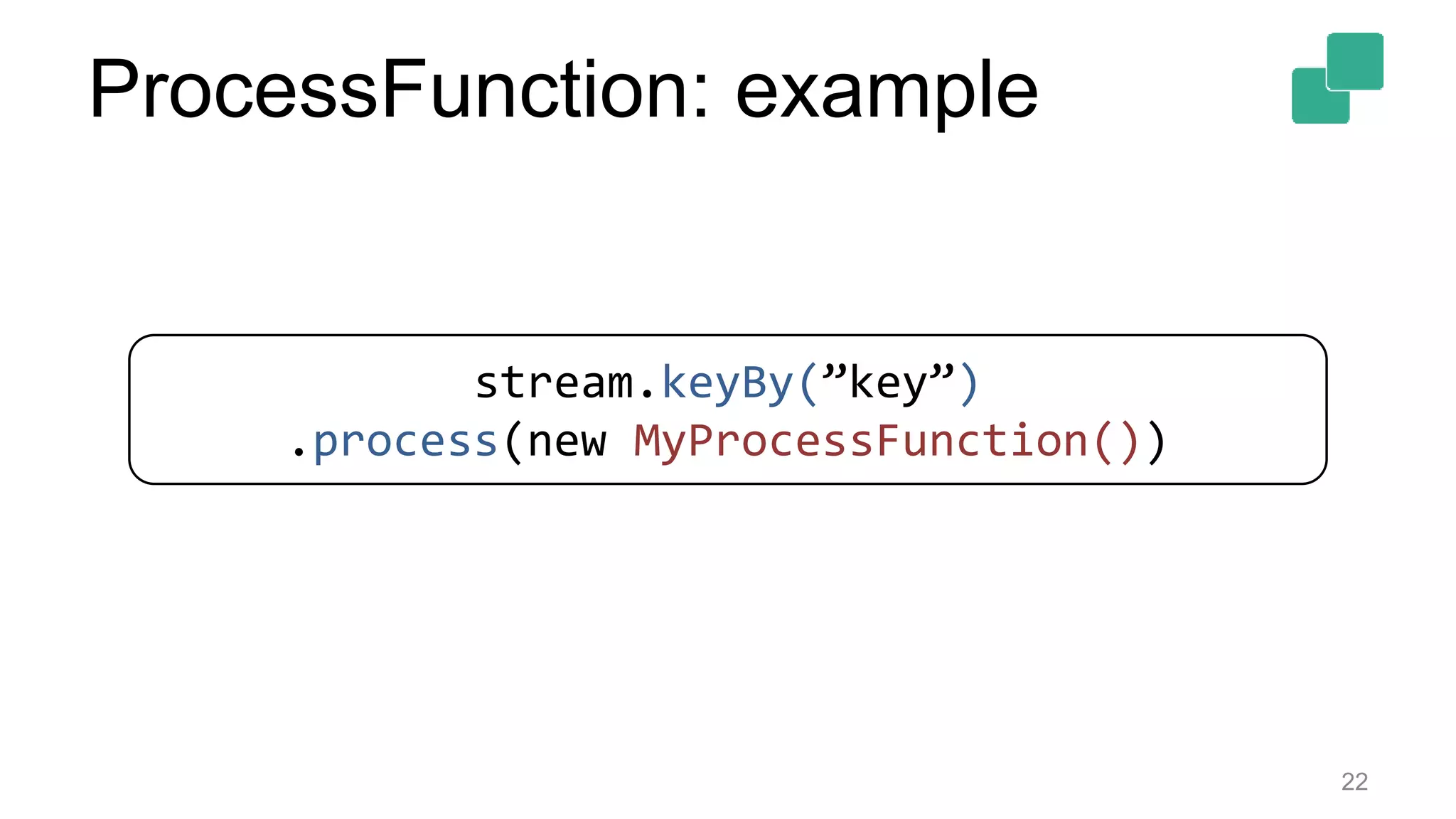

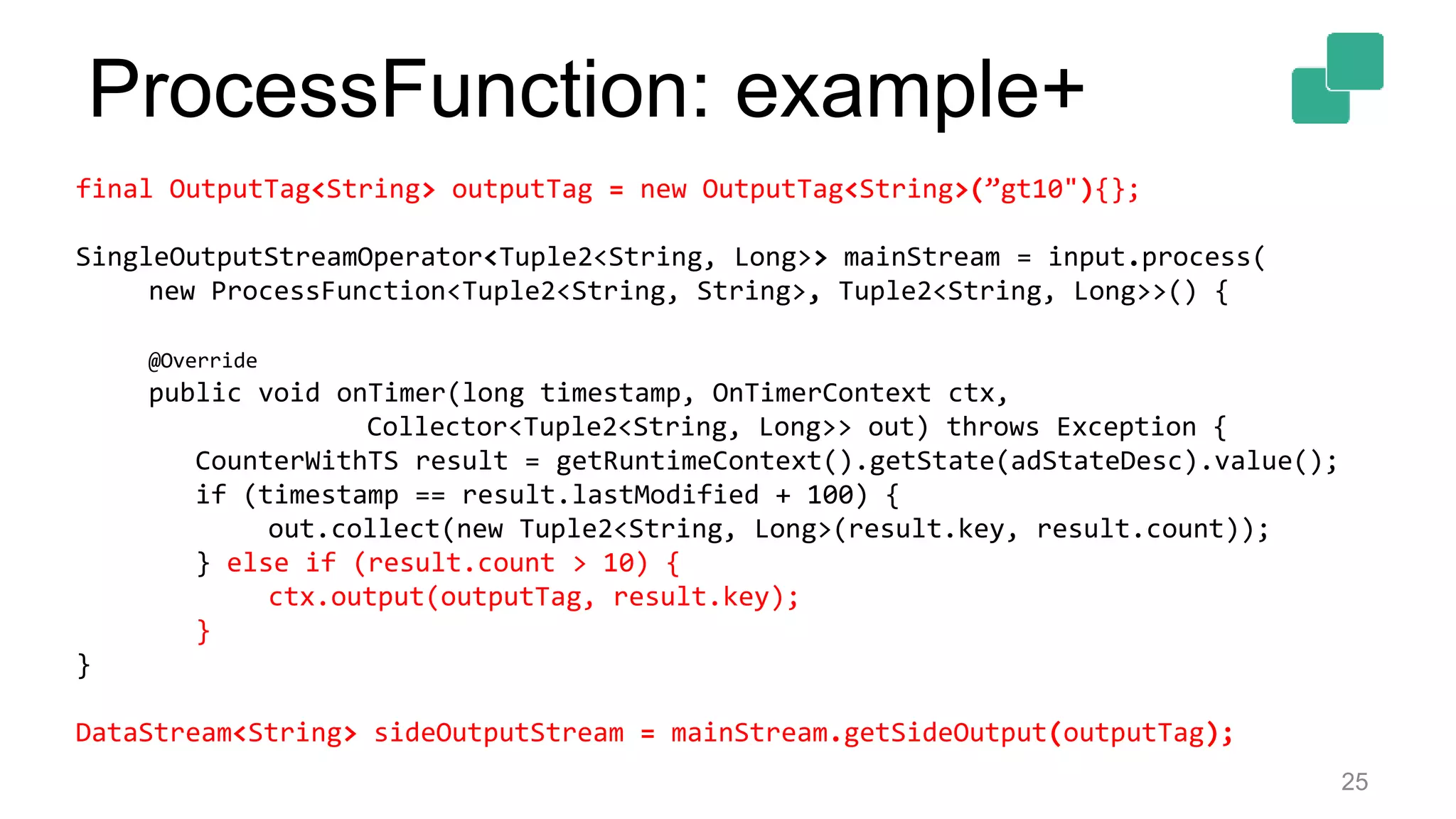

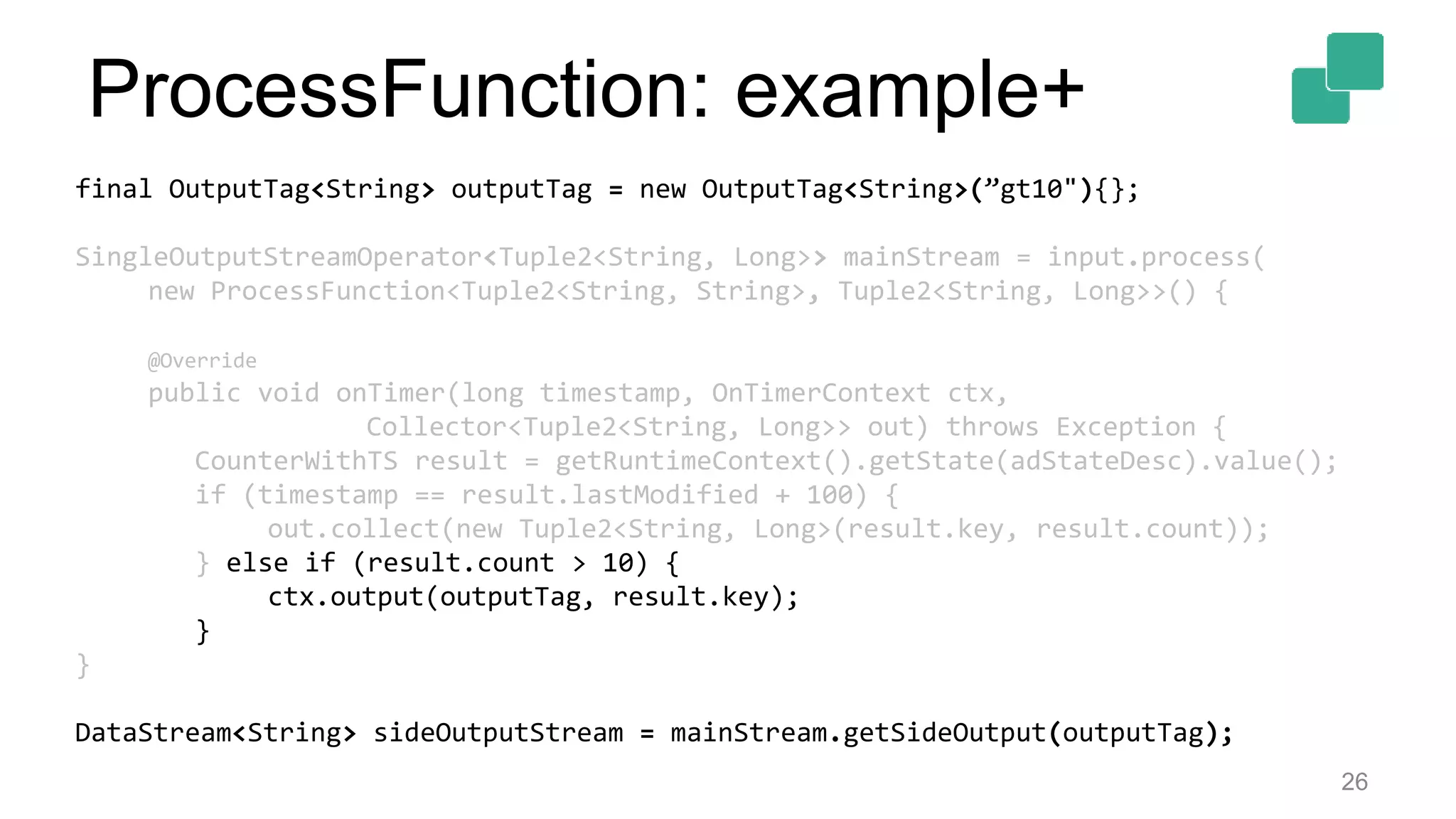

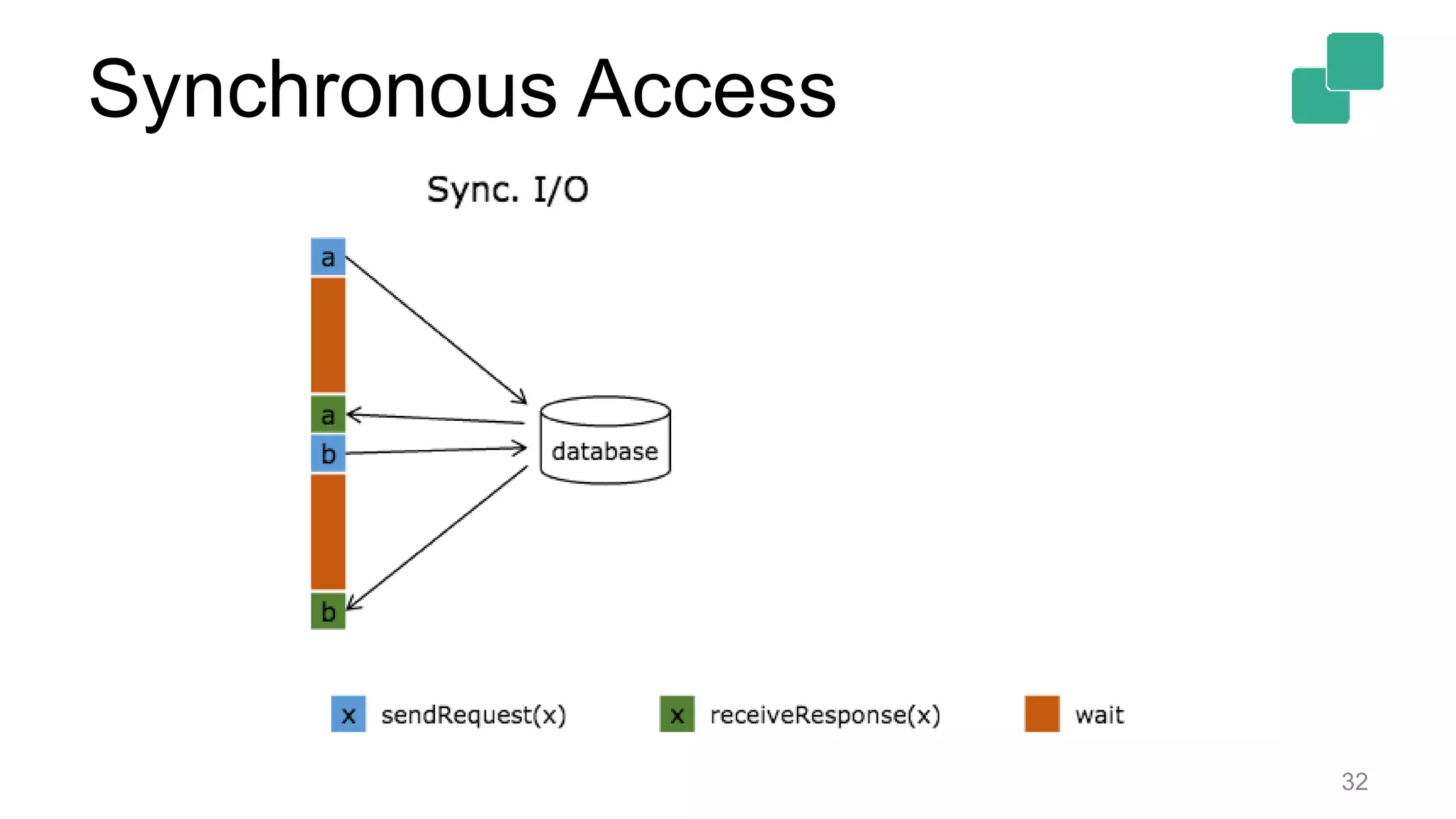

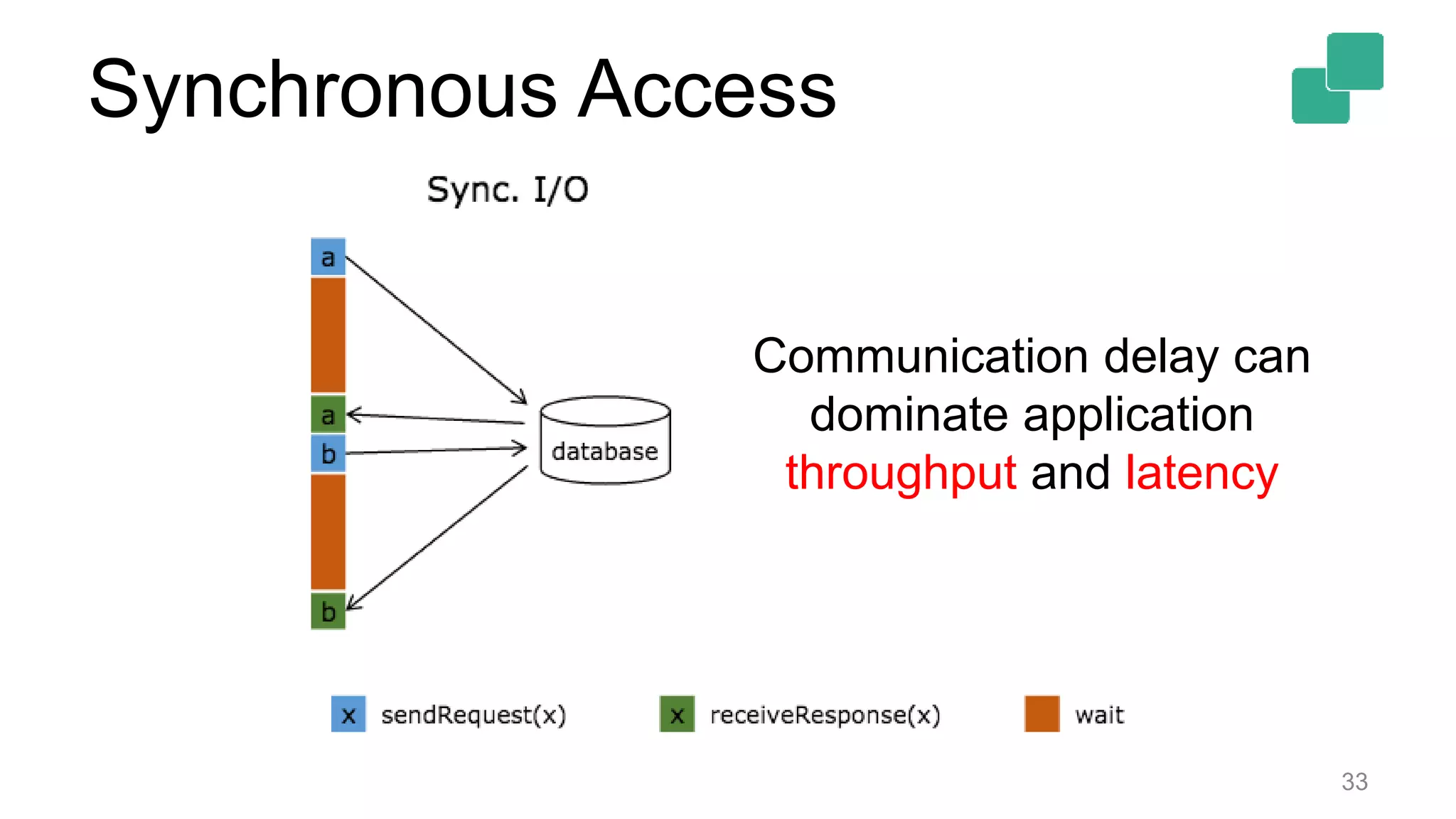

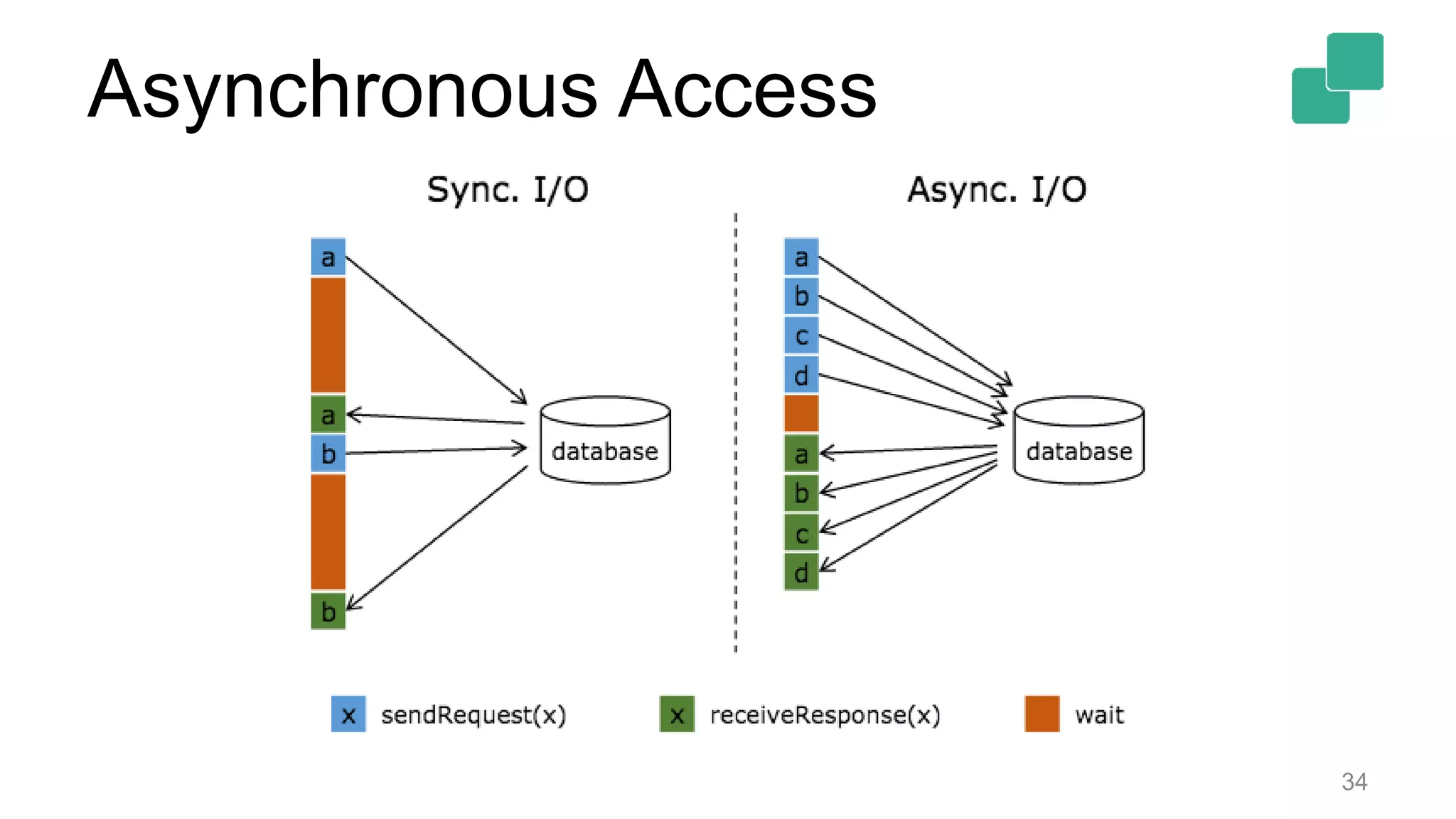

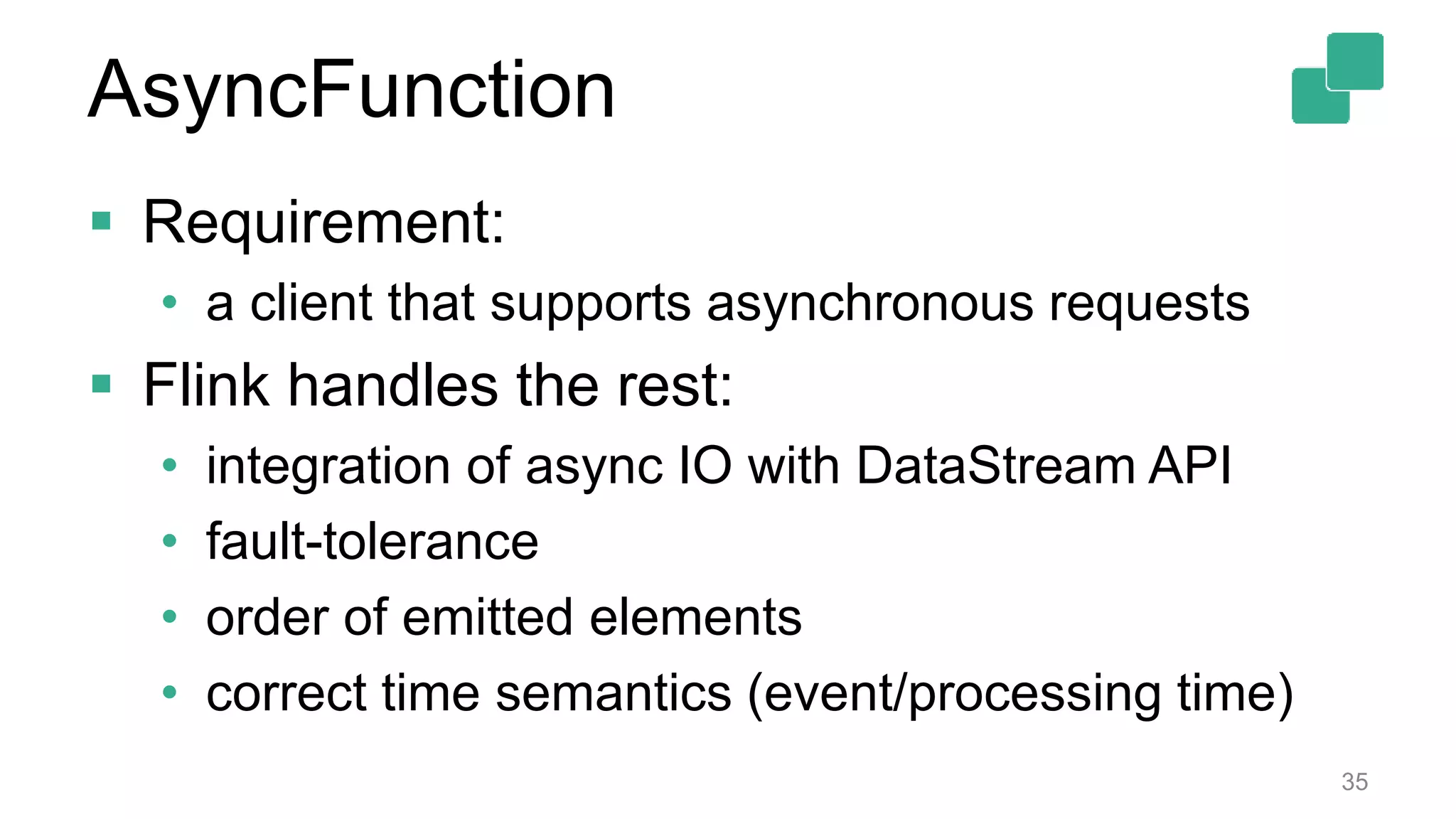

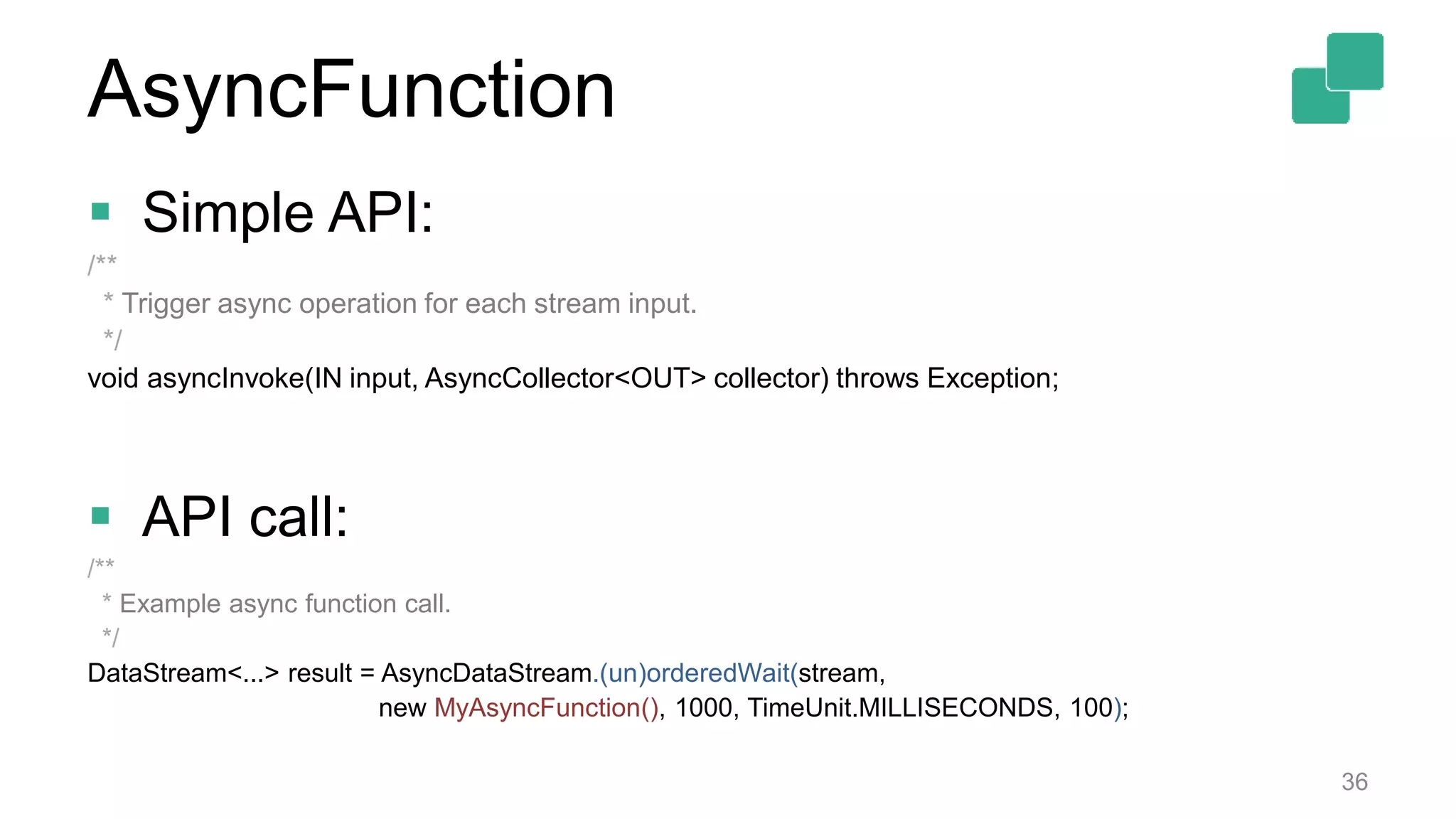

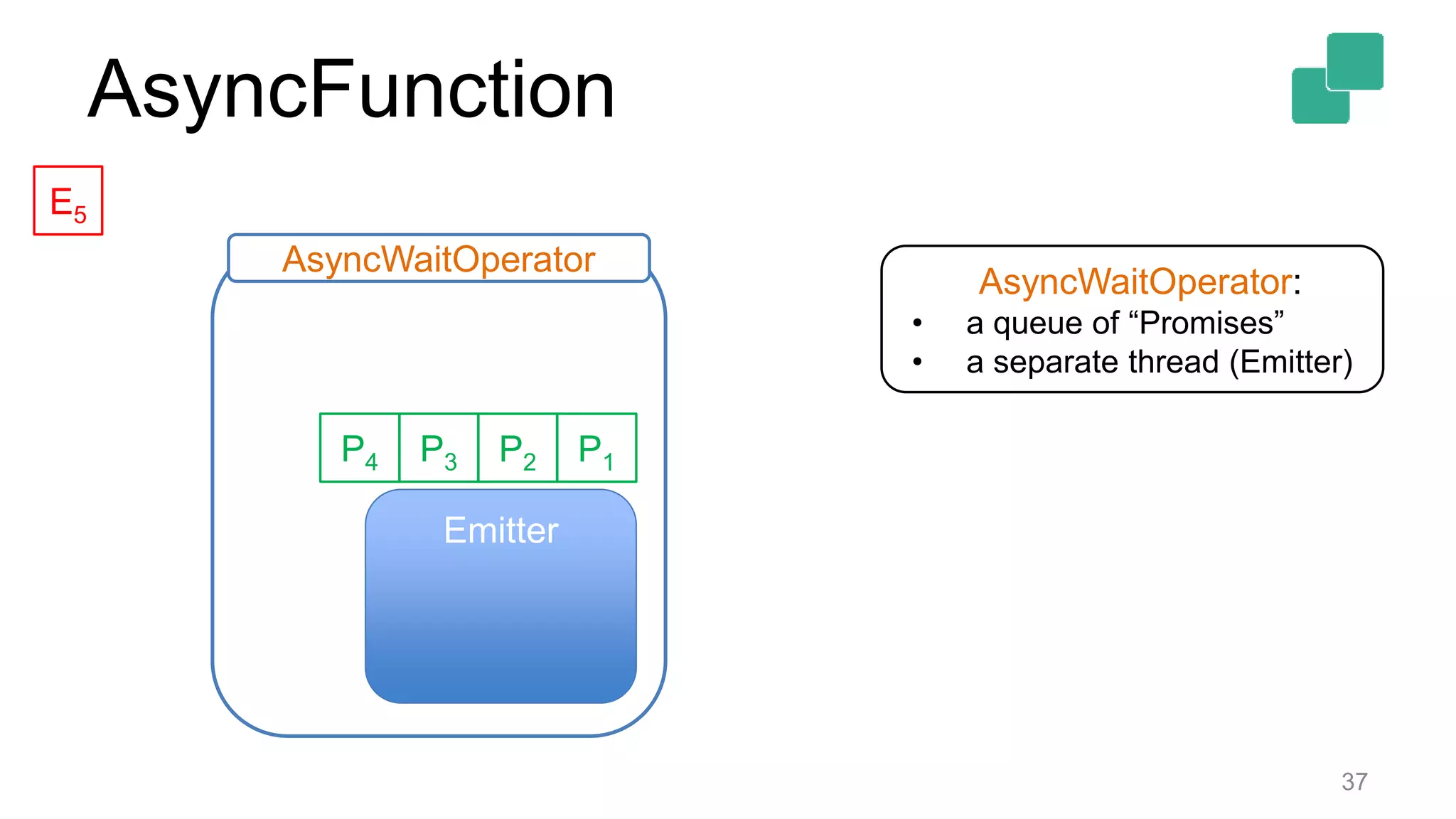

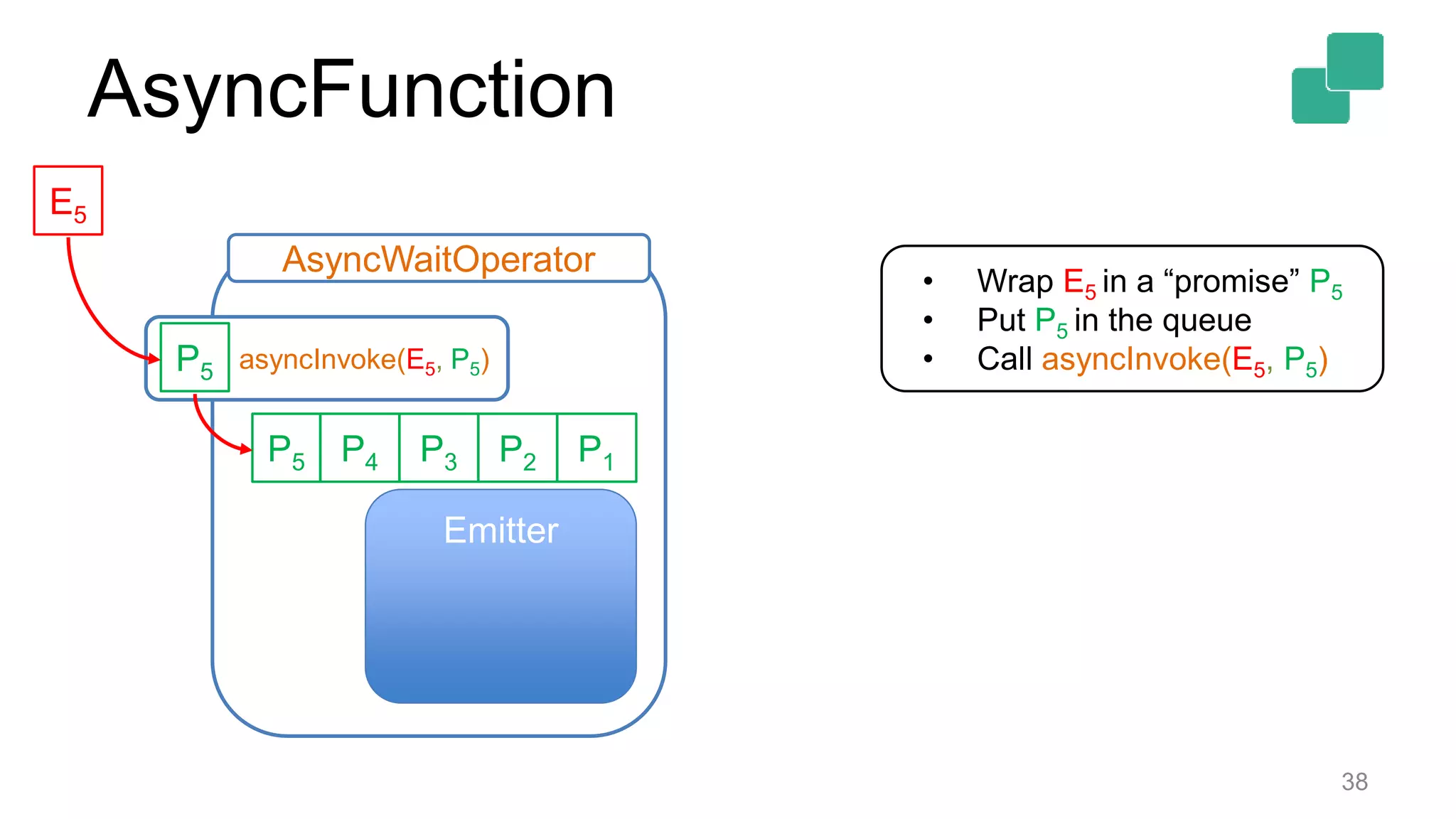

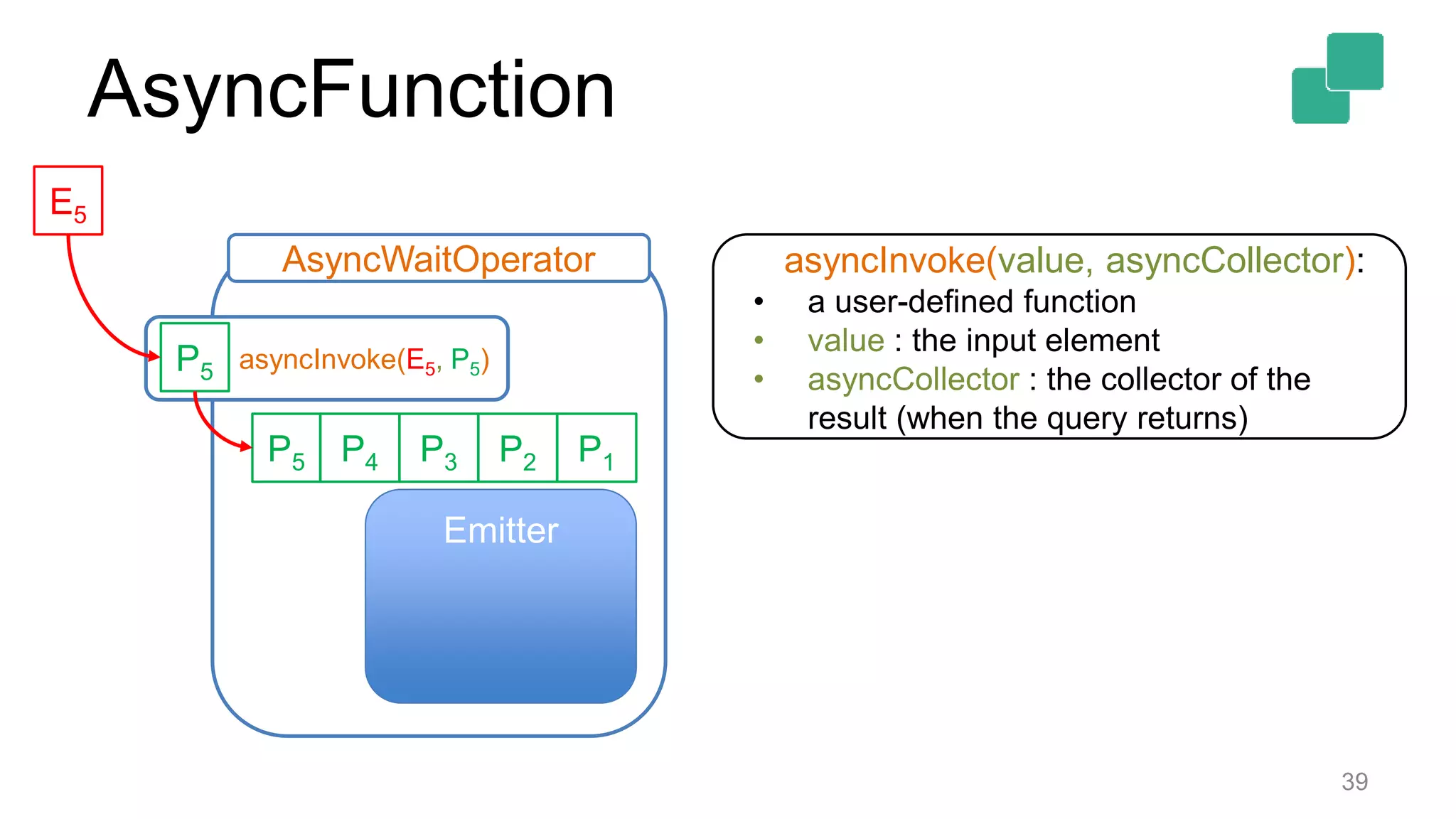

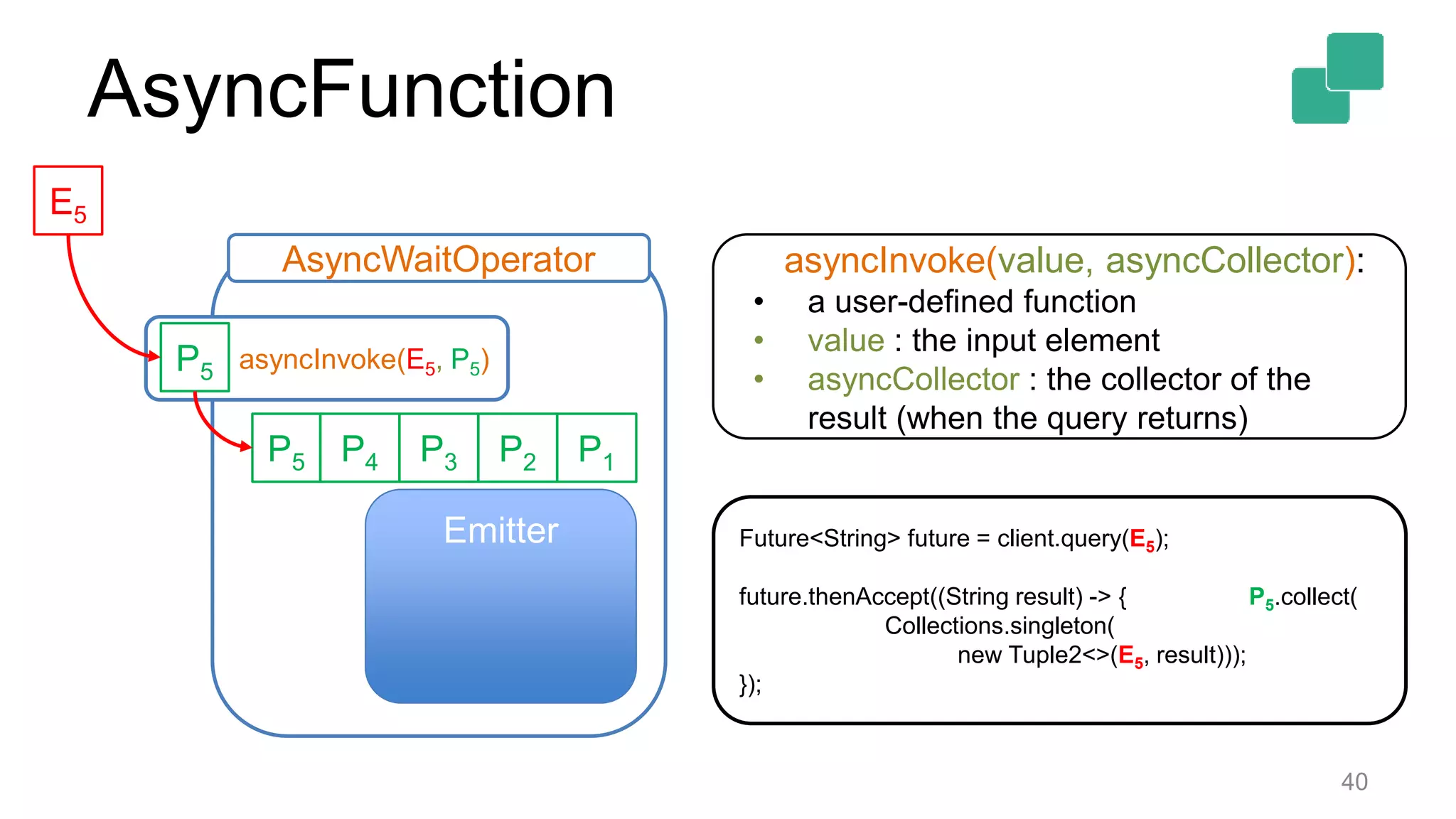

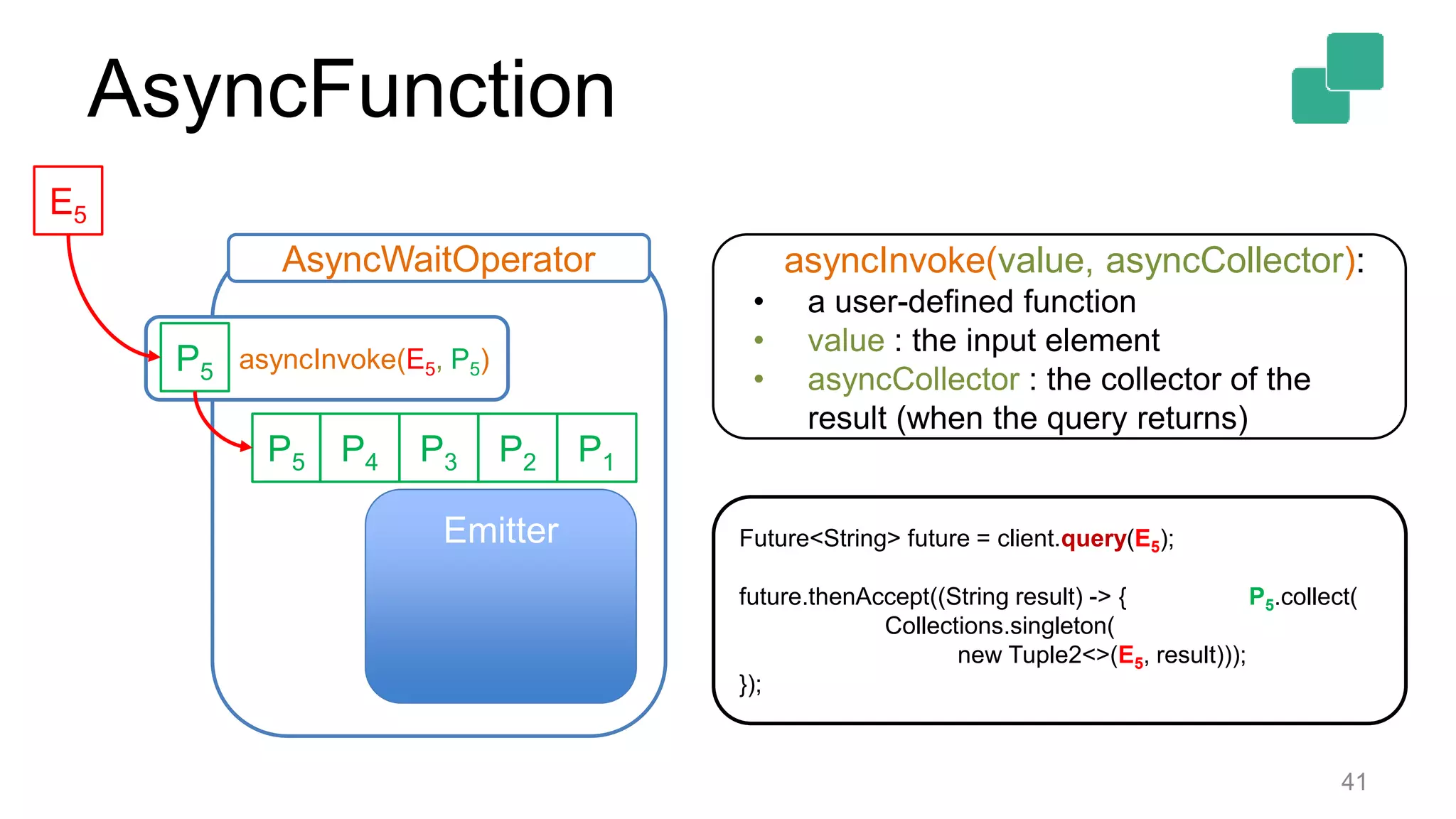

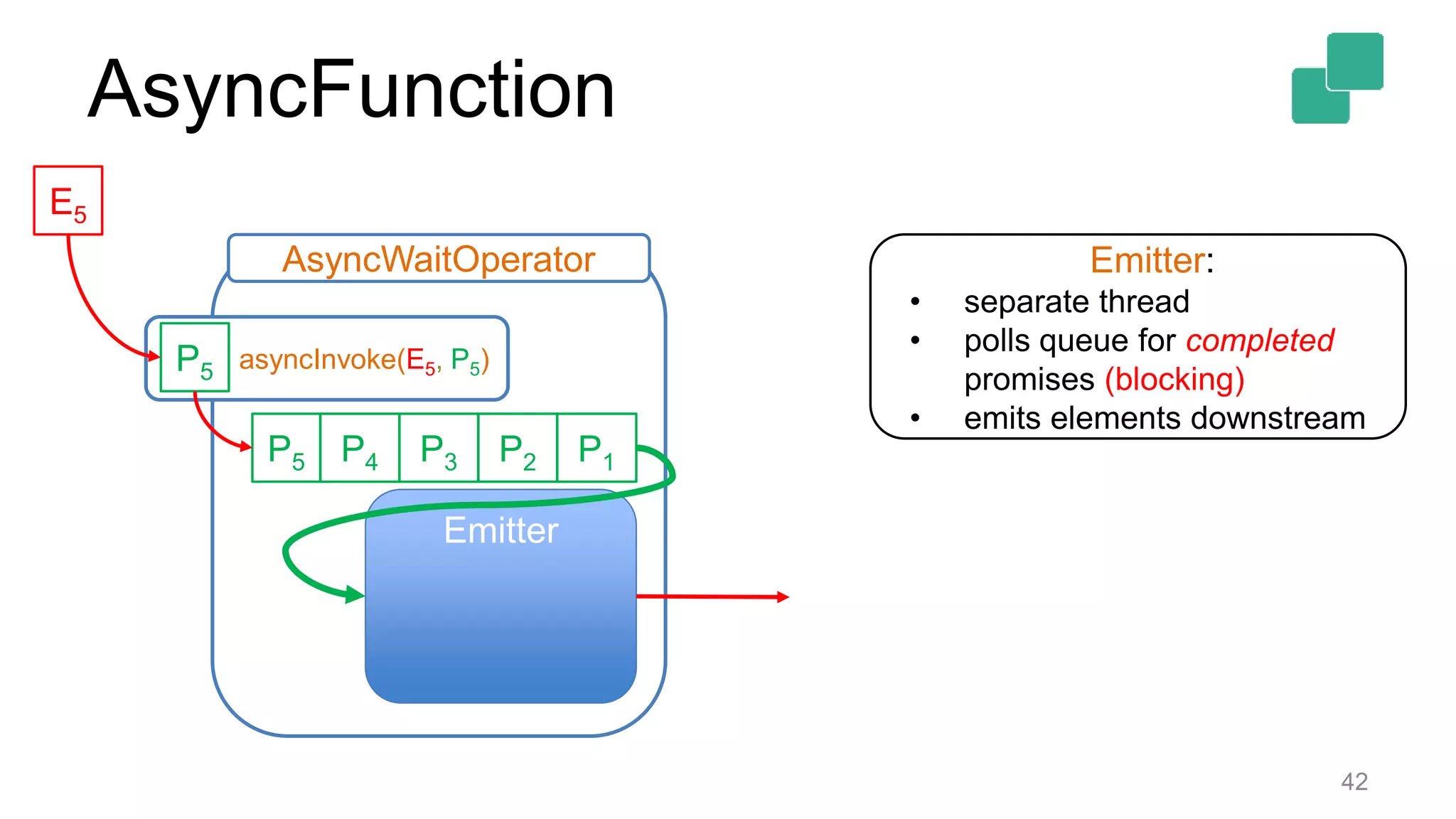

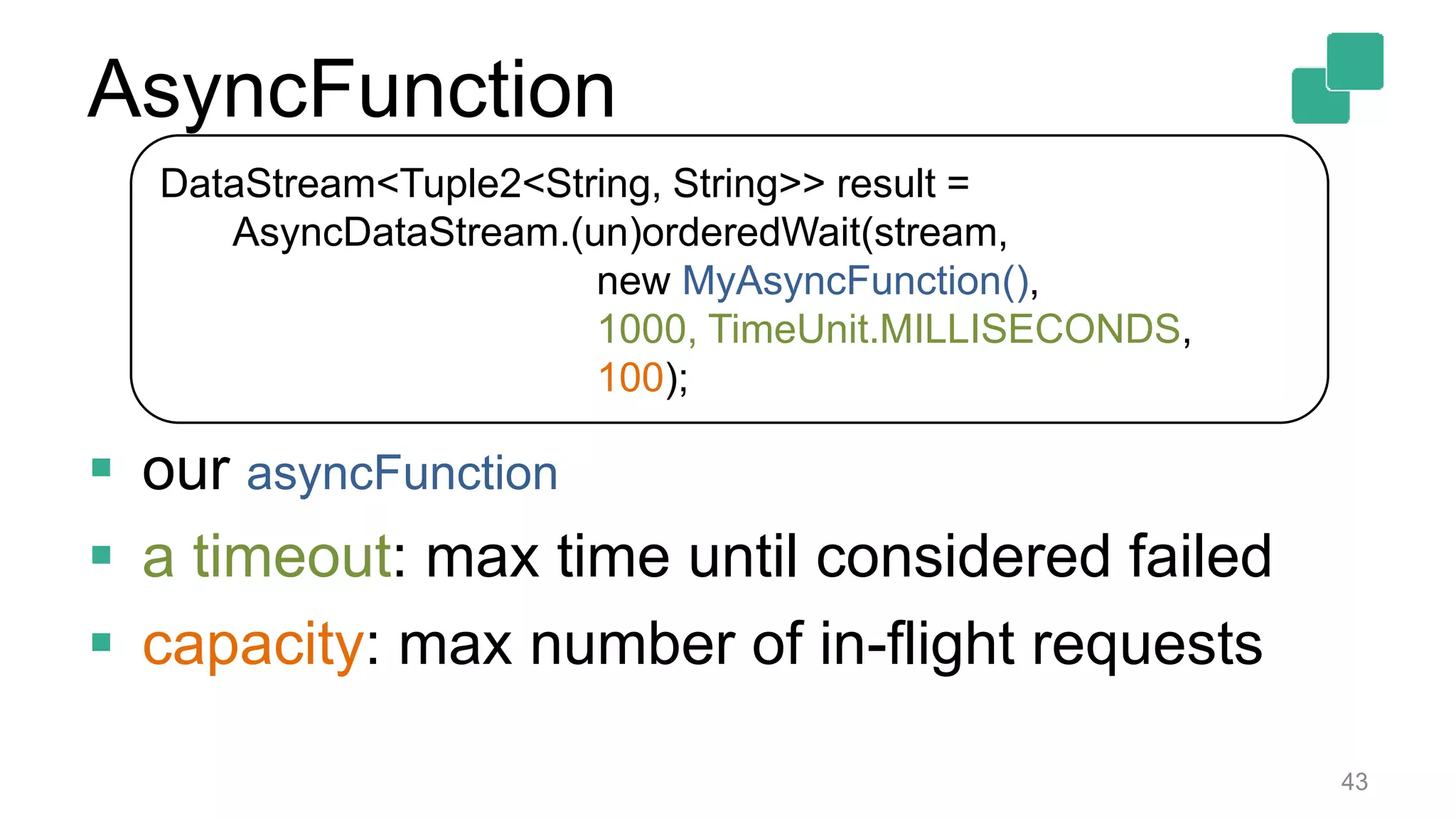

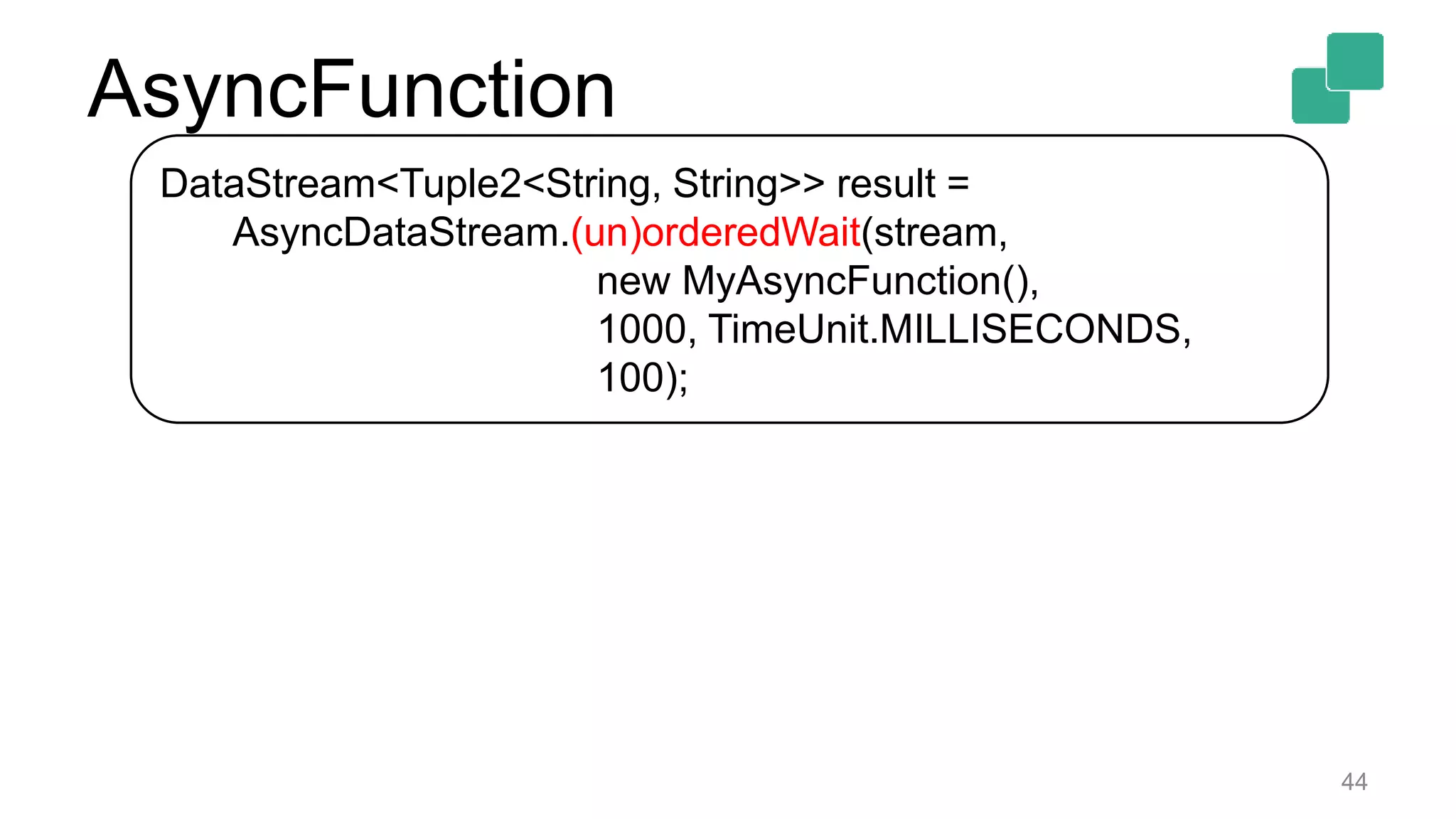

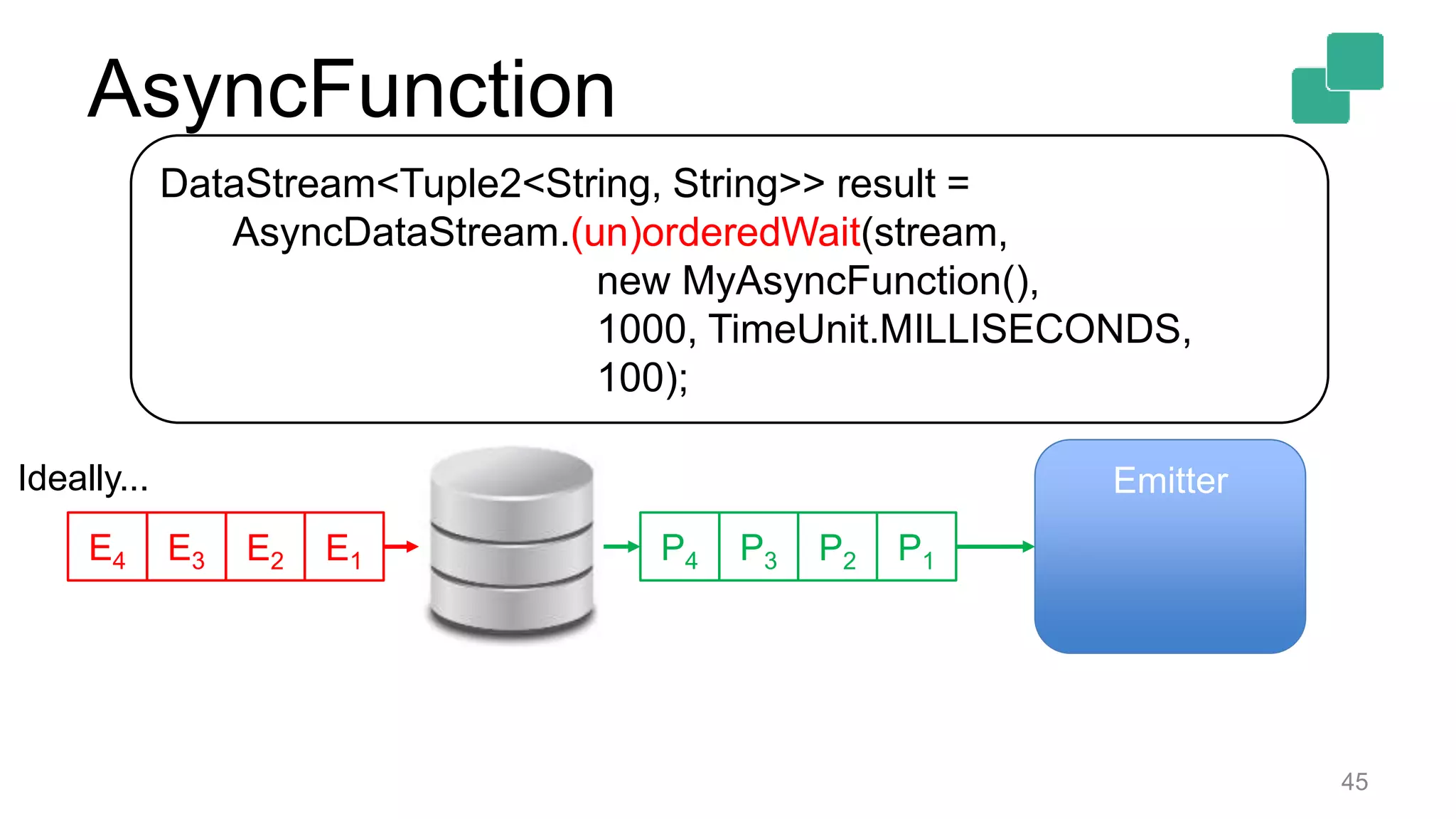

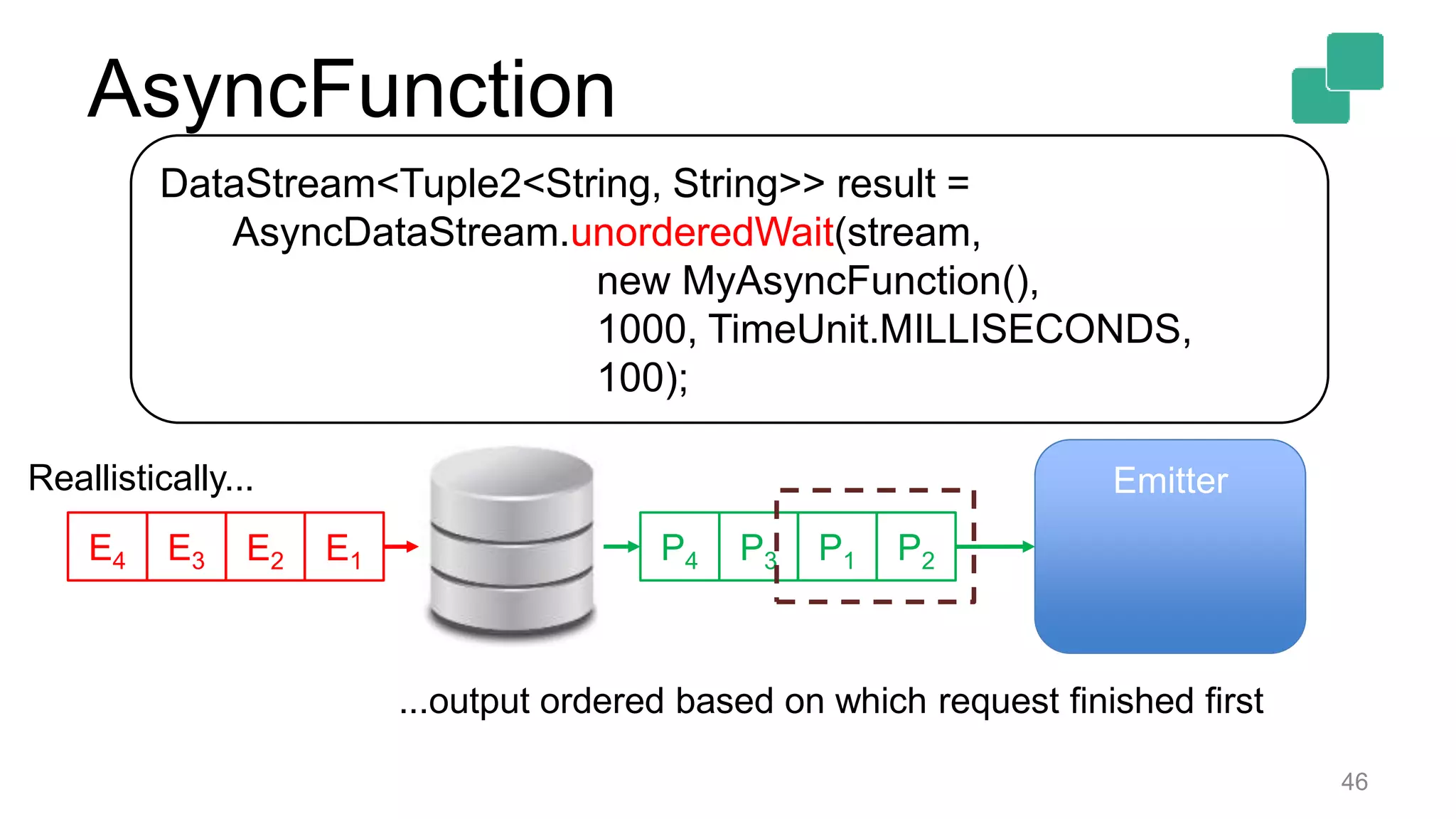

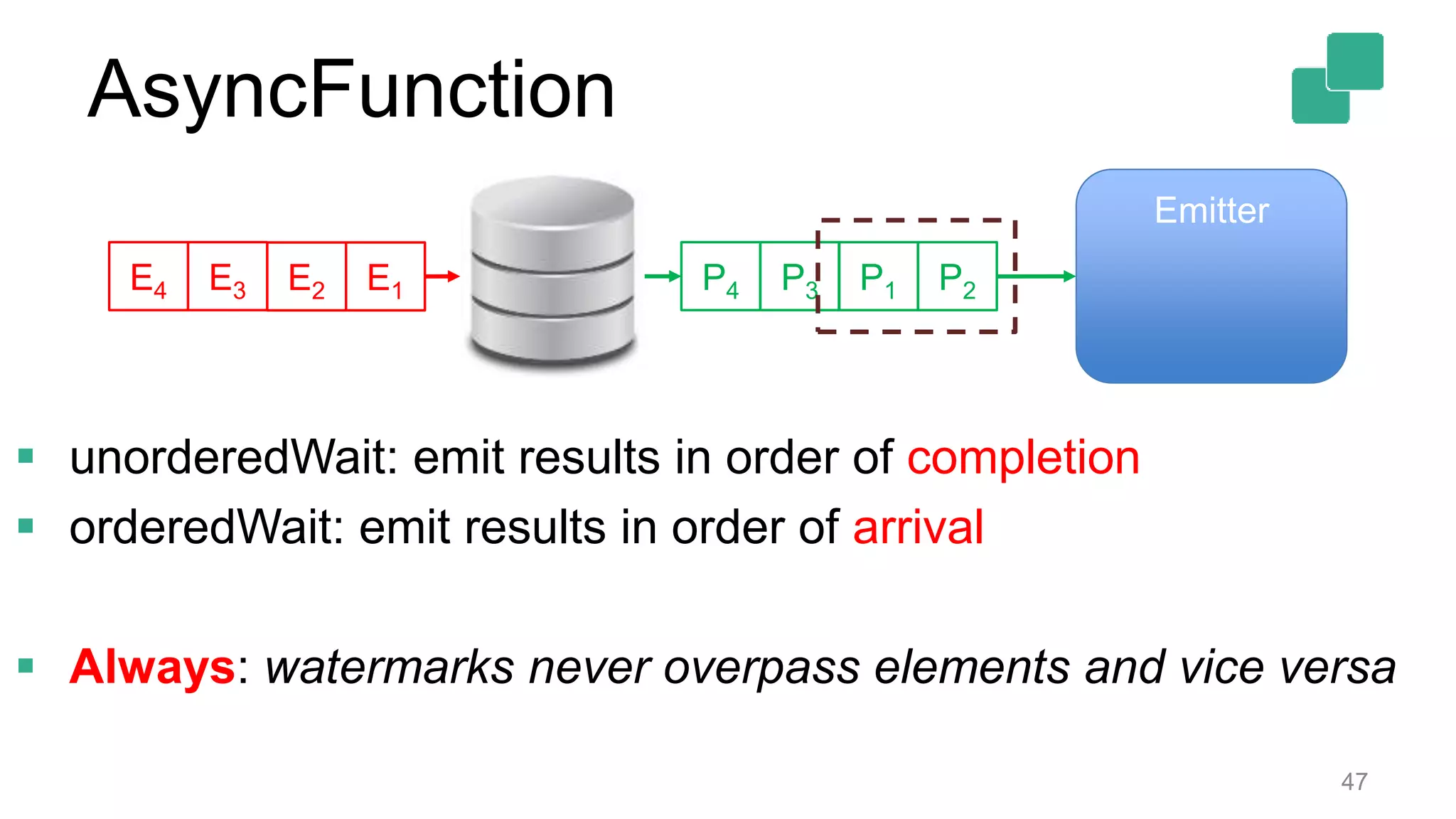

This document covers two extensions to Apache Flink's DataStream API: ProcessFunction, for low-level stream processing, and asynchronous I/O. The ProcessFunction examples show how to keep per-key state and register timers while handling a stream of events, including how to maintain a running count for each key and emit it as a result when a timer fires. The asynchronous I/O part outlines the structure of an asynchronous function and how it plugs into the DataStream API, so that requests to external systems do not block the operator while it waits for responses, which improves throughput.
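
The count-and-timer pattern summarized above can be sketched in plain Java. This is a minimal model of the logic only, not Flink code: the class `CountWithTimeoutModel`, its method signatures, the `emitted()` helper, and the 60-second timeout are all assumptions made for illustration. In Flink itself this logic would live in a ProcessFunction that uses keyed state and the timer service instead of the `HashMap` and the explicit `onTimer` calls shown here.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/**
 * Plain-Java model (hypothetical, not Flink API) of a function that counts
 * events per key and emits the count once a key has been idle for a timeout.
 */
final class CountWithTimeoutModel {
    // Assumed idle timeout; the source does not specify a value.
    static final long TIMEOUT_MS = 60_000L;

    /** Per-key state: running count and timestamp of the latest event. */
    static final class CountState {
        long count;
        long lastModified;
    }

    // Stands in for keyed state managed by the framework.
    private final Map<String, CountState> stateByKey = new HashMap<>();
    // Stands in for the output collector.
    private final List<String> emitted = new ArrayList<>();

    /**
     * Handles one event: updates the key's state and returns the timestamp
     * at which a timer should be registered for this key.
     */
    long processElement(String key, long eventTimestamp) {
        CountState state = stateByKey.computeIfAbsent(key, k -> new CountState());
        state.count++;
        state.lastModified = eventTimestamp;
        return eventTimestamp + TIMEOUT_MS;
    }

    /**
     * Handles a firing timer: emits the count only if no newer event has
     * arrived for the key since the timer was registered.
     */
    void onTimer(String key, long timerTimestamp) {
        CountState state = stateByKey.get(key);
        if (state != null && timerTimestamp == state.lastModified + TIMEOUT_MS) {
            emitted.add(key + "=" + state.count);
        }
    }

    List<String> emitted() {
        return emitted;
    }

    public static void main(String[] args) {
        CountWithTimeoutModel model = new CountWithTimeoutModel();
        long firstTimer = model.processElement("a", 1_000L);
        long secondTimer = model.processElement("a", 30_000L);
        model.onTimer("a", firstTimer);  // stale: a newer event arrived, nothing emitted
        model.onTimer("a", secondTimer); // key idle for the full timeout, count emitted
        System.out.println(model.emitted()); // prints [a=2]
    }
}
```

Comparing the timer's timestamp with `lastModified + TIMEOUT_MS` lets stale timers be ignored rather than cancelled: every event registers a new timer, and only the timer belonging to the most recent event produces output.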