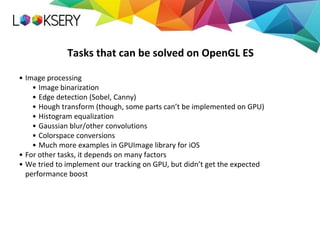

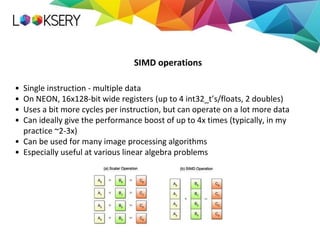

This document discusses optimizing computer vision algorithms on mobile platforms. It recommends first optimizing the algorithm itself before pursuing technical optimizations. Using SIMD instructions can provide a performance boost of up to 4x by processing multiple data elements simultaneously. Libraries can help with vectorization but may not be fully optimized; intrinsics provide more control but require platform-specific code. Handcrafting SIMD assembly code can yield the best performance but is also the most difficult. GPUs via OpenGL ES can provide over an order of magnitude speedup for tasks like image processing but come with limitations on mobile.

![• Sum of matrix rows

• Matrices are 128x128, test is repeated 10^5 times

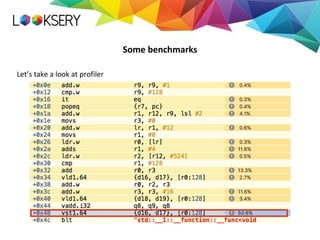

Some benchmarks

// Non-vectorized code

for (int i = 0; i < matSize; i++) {

for (int j = 0; j < matSize; j++) {

rowSum[j] += testMat[i][j];

}

}

// Vectorized code

for (int i = 0; i < matSize; i++) {

for (int j = 0; j < matSize; j += vectorSize) {

VectorType x = *(VectorType*)(testMat[i] + j);

VectorType y = *(VectorType*)(rowSum + j);

y += x;

*(VectorType*)(rowSum + j) = y;

}

}](https://image.slidesharecdn.com/lookseryeecvcv2-160712152221/85/Fedor-Polyakov-Optimizing-computer-vision-problems-on-mobile-platforms-10-320.jpg)

![Some benchmarks

0

1

2

3

4

5

6

7

8

9

10

Simple Vectorized Loop unroll

Time,s

int float short

Got another ~15%

for (int i = 0; i < matSize; i++) {

auto ptr = testMat[i];

for (int j = 0; j < matSize; j += 4 * xSize) {

auto ptrStart = ptr + j;

VT x1 = *(VT*)(ptrStart + 0 * xSize);

VT y1 = *(VT*)(rowSum + j + 0 * xSize);

y1 += x1;

VT x2 = *(VT*)(ptrStart + 1 * xSize);

VT y2 = *(VT*)(rowSum + j + 1 * xSize);

y2 += x2;

VT x3 = *(VT*)(ptrStart + 2 * xSize);

VT y3 = *(VT*)(rowSum + j + 2 * xSize);

y3 += x3;

VT x4 = *(VT*)(ptrStart + 3 * xSize);

VT y4 = *(VT*)(rowSum + j + 3 * xSize);

y4 += x4;

*(VT*)(rowSum + j + 0 * xSize) = y1;

*(VT*)(rowSum + j + 1 * xSize) = y2;

*(VT*)(rowSum + j + 2 * xSize) = y3;

*(VT*)(rowSum + j + 3 * xSize) = y4;

}

}](https://image.slidesharecdn.com/lookseryeecvcv2-160712152221/85/Fedor-Polyakov-Optimizing-computer-vision-problems-on-mobile-platforms-12-320.jpg)

![Some benchmarks

// Non-vectorized code

for (int i = 0; i < matSize; i++) {

for (int j = 0; j < matSize; j++) {

rowSum[i] += testMat[j][i];

}

}

// Vectorized, loop-unrolled code

for (int i = 0; i < matSize; i+=4 * xSize) {

VT y1 = *(VT*)(rowSum + i);

VT y2 = *(VT*)(rowSum + i + xSize);

VT y3 = *(VT*)(rowSum + i + 2*xSize);

VT y4 = *(VT*)(rowSum + i + 3*xSize);

for (int j = 0; j < matSize; j ++) {

x1 = *(VT*)(testMat[j] + i);

x2 = *(VT*)(testMat[j] + i + xSize);

x3 = *(VT*)(testMat[j] + i + 2*xSize);

x4 = *(VT*)(testMat[j] + i + 3*xSize);

y1 += x1;

y2 += x2;

y3 += x3;

y4 += x4;

}

*(VT*)(rowSum + i) = y1;

*(VT*)(rowSum + i + xSize) = y2;

*(VT*)(rowSum + i + 2*xSize) = y3;

*(VT*)(rowSum + i + 3*xSize) = y4;

}](https://image.slidesharecdn.com/lookseryeecvcv2-160712152221/85/Fedor-Polyakov-Optimizing-computer-vision-problems-on-mobile-platforms-14-320.jpg)