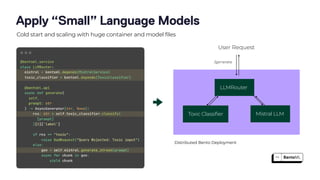

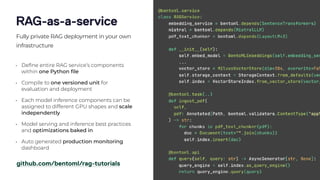

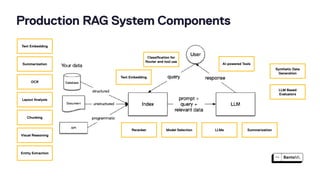

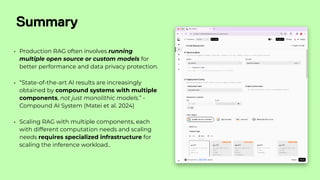

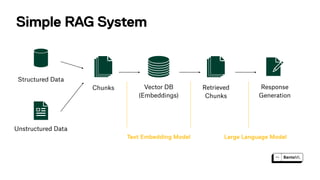

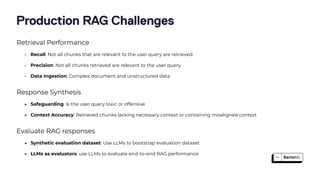

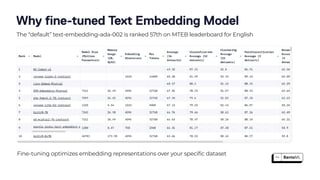

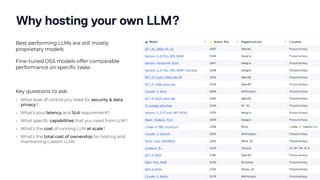

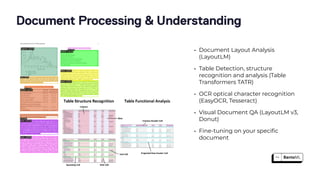

The document discusses the challenges of scaling retrieval-augmented generation (RAG) systems with custom AI models, along with best practices for doing so. It covers deploying inference APIs, optimizing model performance, and managing both structured and unstructured data. It emphasizes fine-tuning models for specific tasks and argues that inference workloads need specialized infrastructure to run efficiently.

![Slide: context-aware chunking, metadata extraction, and a fine-tuned reranker model](https://image.slidesharecdn.com/unstructureddatameetup-63chaoyuyangbentoml-240606180246-a166705f/85/Infrastructure-Challenges-in-Scaling-RAG-with-Custom-AI-models-8-320.jpg)

And many more..

Context-aware chunking and global-concept-aware chunking

Metadata: { datetime: "2024-04-11", product: "..", user_id: "..", sentiment: "positive", summary: "..", topics: [..] }

Text chunk: "I recently purchased the product and I'm extremely satisfied with it! …"

Metadata extraction for improved retrieval accuracy and additional context for response synthesis

Reranker model: fine-tuned with your dataset, generally performs ~10-30% better
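The slide pairs each text chunk with extracted metadata and points to a reranking step. A minimal sketch of how those pieces could fit together follows; it is an illustration, not the presenter's implementation. The `Chunk` class, the `matches` and `retrieve` helpers, the word-overlap scorer, and every sample value other than the `2024-04-11` date, the `positive` sentiment, and the visible part of the review text are assumptions made for this example. In a real pipeline the `scorer` argument would be an embedding similarity or a (possibly fine-tuned) reranker model.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class Chunk:
    """A text chunk plus the metadata extracted for it (hypothetical structure)."""
    text: str
    metadata: Dict[str, Any] = field(default_factory=dict)


def matches(chunk: Chunk, filters: Dict[str, Any]) -> bool:
    """Return True if the chunk satisfies every metadata filter.

    Scalar metadata must equal the filter value; list-valued metadata
    (such as topics) must contain it.
    """
    for key, wanted in filters.items():
        value = chunk.metadata.get(key)
        if isinstance(value, list):
            if wanted not in value:
                return False
        elif value != wanted:
            return False
    return True


def overlap_score(query: str, chunk: Chunk) -> float:
    """Toy relevance score: fraction of query words found in the chunk text.

    This is a stand-in for a real similarity or reranker model score.
    """
    query_words = set(query.lower().split())
    chunk_words = set(chunk.text.lower().split())
    if not query_words:
        return 0.0
    return len(query_words & chunk_words) / len(query_words)


def retrieve(
    query: str,
    chunks: List[Chunk],
    filters: Dict[str, Any],
    scorer: Callable[[str, Chunk], float] = overlap_score,
    top_k: int = 3,
) -> List[Chunk]:
    """Filter chunks by metadata first, then rank the survivors by score."""
    candidates = [c for c in chunks if matches(c, filters)]
    ranked = sorted(candidates, key=lambda c: scorer(query, c), reverse=True)
    return ranked[:top_k]


# Sample data: only the first date, the "positive" sentiment, and the first
# review text come from the slide; everything else is made up for illustration.
chunks = [
    Chunk(
        "I recently purchased the product and I'm extremely satisfied with it!",
        {"datetime": "2024-04-11", "sentiment": "positive", "topics": ["purchase"]},
    ),
    Chunk(
        "The product stopped working after a week.",
        {"datetime": "2024-04-12", "sentiment": "negative", "topics": ["defect"]},
    ),
    Chunk(
        "Shipping was fast and the product works well.",
        {"datetime": "2024-04-13", "sentiment": "positive", "topics": ["shipping"]},
    ),
]

results = retrieve("satisfied with the product", chunks, {"sentiment": "positive"})
for chunk in results:
    print(chunk.metadata["datetime"], chunk.text)
```

Filtering on metadata before scoring shrinks the candidate set, which is one way the extracted fields can improve retrieval accuracy. Because `scorer` is a plain callable, swapping the toy overlap function for a model-backed reranker would not change the rest of the flow.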