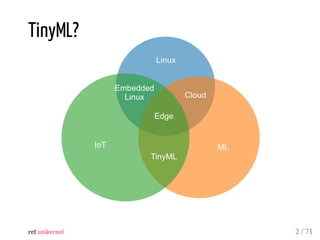

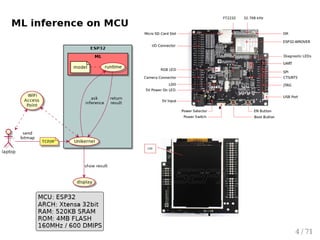

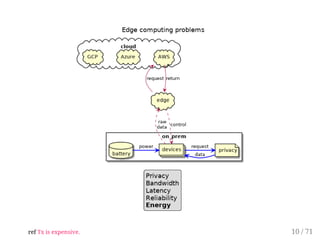

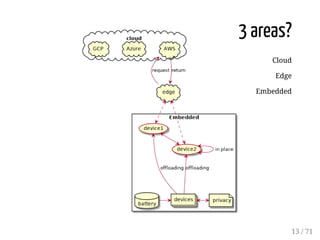

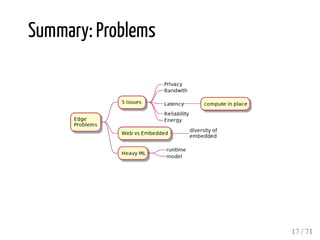

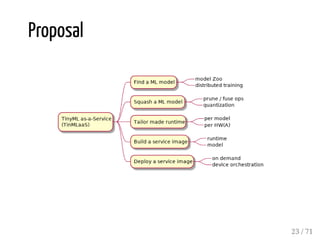

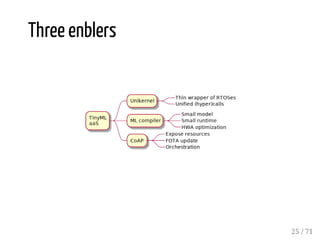

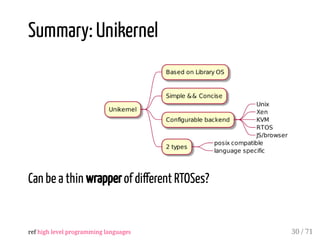

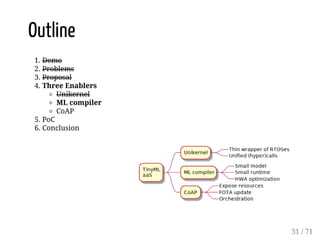

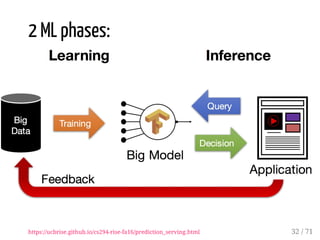

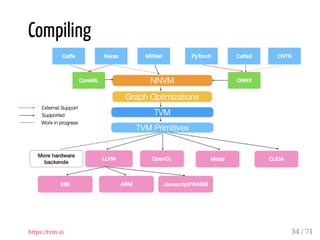

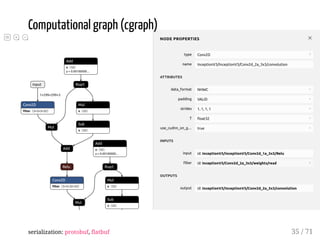

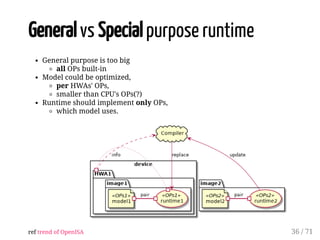

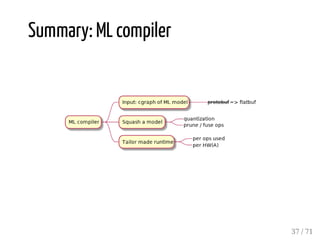

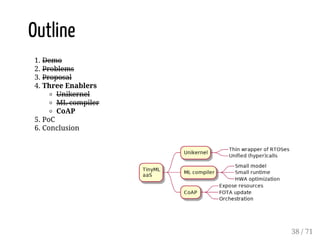

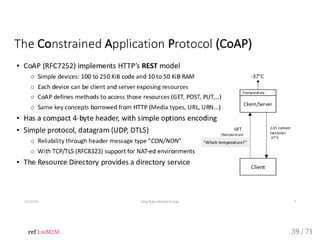

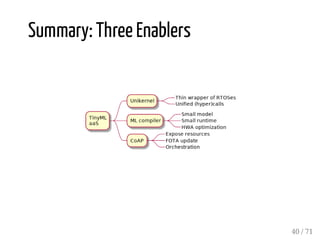

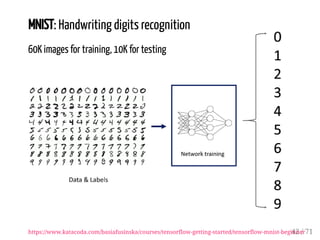

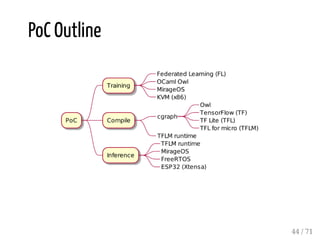

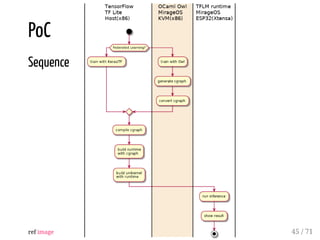

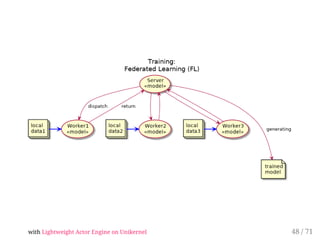

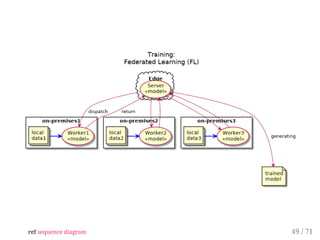

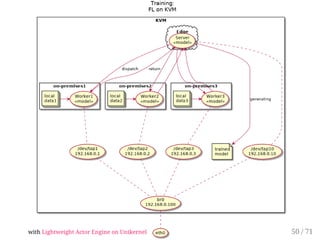

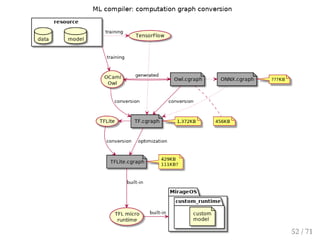

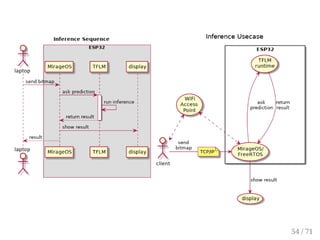

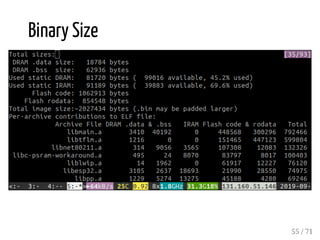

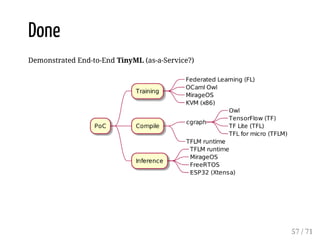

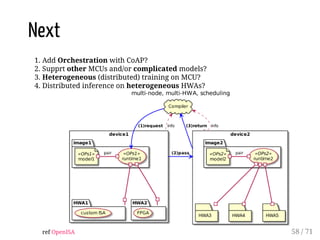

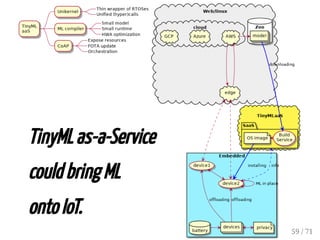

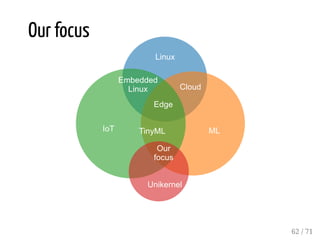

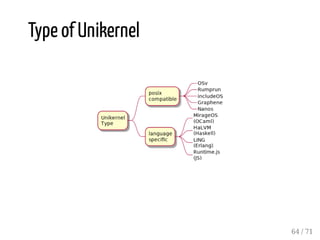

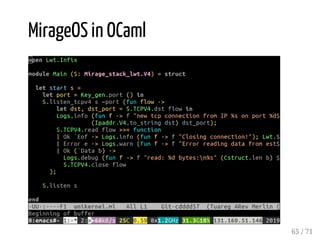

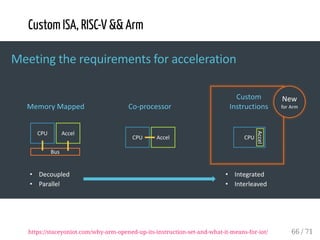

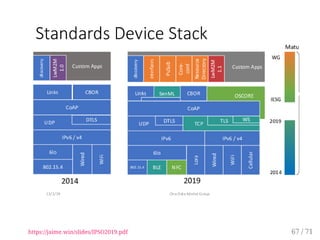

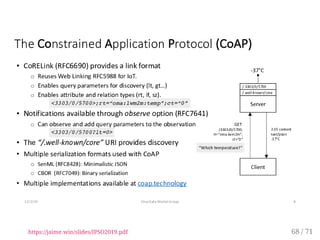

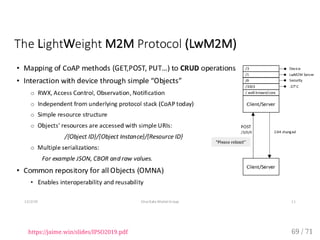

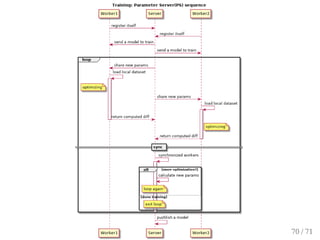

This document proposes combining unikernels with specialized machine learning compilers and runtimes to enable distributed machine learning on IoT devices. It demonstrates an end-to-end proof of concept for TinyML as a service: an MNIST model is trained, compiled to run on an ESP32 microcontroller, and used to perform inference on handwritten digits. Next steps include adding orchestration with CoAP, supporting more devices and more complex models, distributed training on microcontrollers, and distributed inference across heterogeneous hardware accelerators.
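
The summary names CoAP as the planned orchestration protocol but does not describe the messages involved. As a rough illustration only, the sketch below encodes a confirmable CoAP GET request following the RFC 7252 message layout (4-byte header, token, then Uri-Path options). The resource path `models/mnist`, the function name `encode_coap_get`, and the message ID and token values are hypothetical and are not taken from the proof of concept.

```python
import struct

# Constants from RFC 7252.
COAP_VERSION = 1
TYPE_CONFIRMABLE = 0
CODE_GET = 0x01          # request code 0.01 (GET)
OPTION_URI_PATH = 11     # option number for Uri-Path


def encode_coap_get(message_id: int, token: bytes, path: str) -> bytes:
    """Encode a confirmable CoAP GET for `path` (hypothetical helper).

    Simplification: only option deltas and lengths below 13 are handled,
    so each path segment must be at most 12 bytes long.
    """
    if len(token) > 8:
        raise ValueError("CoAP tokens are at most 8 bytes")

    # Byte 0: version (2 bits) | type (2 bits) | token length (4 bits).
    first = (COAP_VERSION << 6) | (TYPE_CONFIRMABLE << 4) | len(token)
    out = bytearray(struct.pack("!BBH", first, CODE_GET, message_id))
    out += token

    previous = 0  # option numbers are delta-encoded against the previous one
    for segment in path.strip("/").split("/"):
        if not segment:
            continue
        value = segment.encode("utf-8")
        delta = OPTION_URI_PATH - previous
        if delta > 12 or len(value) > 12:
            raise ValueError("this sketch only handles short options")
        out.append((delta << 4) | len(value))
        out += value
        previous = OPTION_URI_PATH
    return bytes(out)


# Hypothetical request asking a device for its deployed model resource.
request = encode_coap_get(0x1234, b"\xab", "models/mnist")
print(request.hex())
```

A real deployment would more likely rely on an existing CoAP library on both the orchestrator and the device; the hand-rolled encoder is shown only to make the compactness of the wire format visible, which is the property that makes CoAP attractive for constrained devices such as the ESP32.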