The document presents a study on faster practical block compression for rank/select dictionaries, which are crucial for analyzing large volumes of web log data. It introduces a chunkwise processing approach that reduces encoding and decoding time while keeping space usage competitive with existing methods. Experimental results on artificial data show that the proposed method is faster and more stable than the traditional blockwise and bitwise approaches.

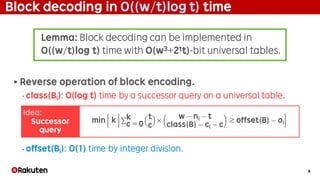

![2

Background

§ Compressed data structures in Web companies.

• Web companies generate massive amounts of logs in text format.

• Analyzing such huge logs is vital for our decision-making.

• Practical improvements of compressed data structures are important.

§ RRR compression [Raman, Raman, Rao, SODA’02]

• Basic building block in many compressed data structures.

• Rank/Select queries on compressed bit strings in constant time:

‣ $\mathrm{Rank}_b(B, i)$: Number of b’s in B’s prefix of length i.

‣ $\mathrm{Select}_b(B, i)$: Position of the i-th b in B.

B is an input bit string

b: a bit in {0, 1}](https://image.slidesharecdn.com/kenetayusakuspire172-180615030913/85/Faster-Practical-Block-Compression-for-Rank-Select-Dictionaries-2-320.jpg)
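Before looking at compression, a naive reference implementation pins down the two queries. This is a minimal Python sketch and not RRR: it scans the bit string in linear time rather than answering in constant time. The names `rank` and `select` and the conventions (0-based positions, i counted from 1 in select) are our assumptions.

```python
def rank(bits, b, i):
    """Rank_b(B, i): number of b's in the prefix B[0:i] of length i."""
    return sum(1 for x in bits[:i] if x == b)


def select(bits, b, i):
    """Select_b(B, i): 0-based position of the i-th b (i counted from 1)."""
    seen = 0
    for pos, x in enumerate(bits):
        if x == b:
            seen += 1
            if seen == i:
                return pos
    raise ValueError(f"B has fewer than {i} occurrences of {b}")


B = [1, 0, 1, 1, 0, 0, 1, 0]
print(rank(B, 1, 4))    # prefix 1,0,1,1 holds three 1s -> 3
print(select(B, 1, 3))  # ones sit at 0, 2, 3, 6 -> 3
print(select(B, 0, 2))  # zeros sit at 1, 4, 5, 7 -> 4
```

The two queries are near-inverses: `rank(B, b, select(B, b, i) + 1) == i` for every valid i.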

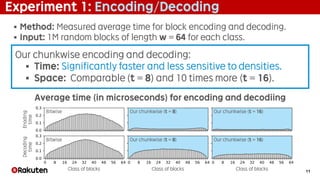

![3

RRR = Block compression + succinct index

§ Represents a block B of w bits as a pair (class(B), offset(B)).

• class(B): Number of ones in B.

• offset(B): Number of w-bit blocks of the same class as B that precede B in some fixed order (e.g., lexicographic order of bit strings).

§ $\log w$ bits for class(B) and $\log_2 \binom{w}{\mathrm{class}(B)}$ bits for offset(B).

§ Two practical approaches to block compression:

• Blockwise approach [Claude and Navarro, SPIRE’09]

• Bitwise approach [Navarro and Providel, SEA’12]](https://image.slidesharecdn.com/kenetayusakuspire172-180615030913/85/Faster-Practical-Block-Compression-for-Rank-Select-Dictionaries-3-320.jpg)
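The (class(B), offset(B)) pair under lexicographic order can be made concrete with a brute-force Python sketch. It computes offset(B) by enumerating all w-bit strings, so it is only practical for small w. The helper names `class_offset` and `encoded_bits` are hypothetical. The class is charged ⌈log2(w + 1)⌉ bits here (roughly log w) because it can take w + 1 values.

```python
from itertools import product
from math import comb


def class_offset(block):
    """(class(B), offset(B)) by brute force: offset(B) counts the w-bit
    strings of the same class that are lexicographically smaller than B."""
    b = tuple(block)
    cls = sum(b)
    offset = sum(1 for x in product((0, 1), repeat=len(b))
                 if sum(x) == cls and x < b)
    return cls, offset


def encoded_bits(block):
    """Bits for (class, offset): ceil(log2(w + 1)) for the class and
    ceil(log2 C(w, class)) for the offset."""
    w, cls = len(block), sum(block)
    # (n - 1).bit_length() == ceil(log2(n)) for integers n >= 1
    return w.bit_length(), (comb(w, cls) - 1).bit_length()


print(class_offset((0, 1, 1, 0)))    # 0011 and 0101 precede 0110 -> (2, 2)
print(encoded_bits([1] + [0] * 14))  # w = 15 with a single 1 -> (4, 4)
```

For w = 15 with a single 1, the pair takes 4 + 4 = 8 bits instead of 15, of which 4 are spent on the class.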

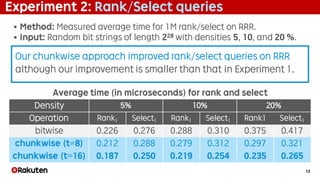

![4

Block compression in practice

1. Blockwise approach [Claude and Navarro, SPIRE’09]

Idea: $O(2^w w)$-bit universal tables.

Good: $O(1)$ time.

Bad: Low compression ratio.

§ The tables limit the use of larger w.

§ The log w bits for class(B) become non-negligible.

§ Ex) 25% overhead for w = 15.

2. Bitwise approach [Navarro and Providel, SEA’12]

Idea: $O(w^3)$-bit binomial coefficients.

Good: High compression ratio.

Bad: $O(w)$ time.

§ Count the bit strings lexicographically smaller than block B, bit by bit.

§ In practice, heuristics that encode and decode blocks with only a few ones in $O(1)$ time can be used.

Less flexible in practice](https://image.slidesharecdn.com/kenetayusakuspire172-180615030913/85/Faster-Practical-Block-Compression-for-Rank-Select-Dictionaries-4-320.jpg)
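The two approaches can be sketched side by side under lexicographic order. The bitwise encoder and decoder make one lookup per bit into a precomputed binomial table, giving $O(w)$ time with $O(w^2)$ entries of at most w bits each. The blockwise tables cover all $2^w$ blocks so that encoding and decoding become single lookups. This is an illustrative sketch with hypothetical names, not the cited authors' implementations. For brevity, the blockwise tables are filled using the bitwise encoder, and the few-ones heuristics mentioned above are omitted.

```python
from itertools import product


def binomial_table(w):
    """C[n][k] for 0 <= n, k <= w via Pascal's rule: O(w^2) entries."""
    C = [[0] * (w + 1) for _ in range(w + 1)]
    for n in range(w + 1):
        C[n][0] = 1
        for k in range(1, n + 1):
            C[n][k] = C[n - 1][k - 1] + C[n - 1][k]
    return C


def encode_bitwise(block, C):
    """Bitwise approach, O(w): count same-class strings that are
    lexicographically smaller, one binomial lookup per 1 bit."""
    w, k = len(block), sum(block)
    cls, offset = k, 0
    for j, bit in enumerate(block):
        if bit:
            # same prefix, a 0 at position j, and all k ones in the w - j - 1 later bits
            offset += C[w - j - 1][k]
            k -= 1
    return cls, offset


def decode_bitwise(w, cls, offset, C):
    """Inverse of encode_bitwise, one binomial lookup per bit."""
    bits, k = [], cls
    for j in range(w):
        n = C[w - j - 1][k]  # strings that put a 0 at position j
        if offset >= n:
            bits.append(1)
            offset -= n
            k -= 1
        else:
            bits.append(0)
    return tuple(bits)


def blockwise_tables(w, C):
    """Blockwise approach: universal tables over all 2^w blocks for O(1)
    lookups (filled here with the bitwise encoder for brevity)."""
    enc = {b: encode_bitwise(b, C) for b in product((0, 1), repeat=w)}
    dec = {pair: b for b, pair in enc.items()}
    return enc, dec


w = 10
C = binomial_table(w)
enc, dec = blockwise_tables(w, C)
print(len(enc), "blockwise table entries for w =", w)
```

The blockwise tables grow as $2^w$, so they stay small only for small w. The bitwise route needs just the $(w+1)^2$ binomials but pays one step per bit.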

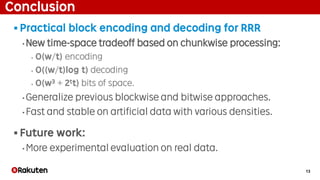

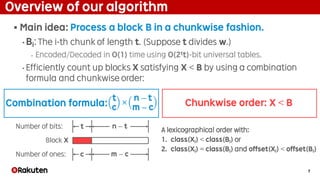

![5

Main result

§ Practical encoder/decoder for block compression

• Generalization of existing blockwise and bitwise approaches.

• Idea: chunkwise processing with multiple universal tables.

• Faster and more stable on artificial data.

| Method | Encode | Decode | Space (in bits) |
|---|---|---|---|
| Blockwise [Claude and Navarro, SPIRE’09] | $O(1)$ | $O(1)$ | $O(2^w w)$ |
| Bitwise [Navarro and Providel, SEA’12] | $O(w)$ | $O(w)$ | $O(w^3)$ |
| Chunkwise (this work) | $O(w/t)$ | $O((w/t) \log t)$ | $O(w^3 + 2^t t)$ |

This talk uses w and t for block and chunk lengths, respectively.](https://image.slidesharecdn.com/kenetayusakuspire172-180615030913/85/Faster-Practical-Block-Compression-for-Rank-Select-Dictionaries-5-320.jpg)
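To get a feel for the space column, the leading terms can be evaluated for concrete w and t. All constant factors are dropped, so the numbers indicate orders of magnitude only. The function name and the (w, t) pairs below are illustrative choices, not parameters taken from the talk.

```python
def dominant_terms(w, t):
    """Leading terms from the table above with constant factors dropped:
    (table bits, loop iterations per block encode)."""
    return {
        "blockwise": (2 ** w * w, 1),
        "bitwise": (w ** 3, w),
        "chunkwise": (w ** 3 + 2 ** t * t, w // t),
    }


for w, t in [(15, 5), (64, 8)]:
    for name, (bits, steps) in dominant_terms(w, t).items():
        print(f"w={w} t={t} {name}: ~{bits:.3g} table bits, {steps} encode steps")
```

With w = 64 and t = 8, the chunkwise tables stay near the bitwise $w^3$ term while encoding needs 8 chunk steps instead of 64 bit steps. The blockwise term is out of reach at that block length.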

![8

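Block encoding in O(w/t) time

Lemma: Block encoding can be implemented in $O(w/t)$ time with $O(w^3 + 2^t t)$-bit universal tables.

§ Split B into w/t chunks $B_0 B_1 \cdots B_{w/t-1}$ of t bits each, and list the blocks X of the same class as B in descending order of offset(X), from top to bottom.

§ $o_i = |\{X : X_0 \cdots X_{i-1} < B_0 \cdots B_{i-1}\}|$, where the prefix $B_0 \cdots B_{i-1}$ has $n_i$ bits and $c_i$ ones. The suffix $B_{i+1} \cdots B_{w/t-1}$ then has $w - n_i - t$ bits and $\mathrm{class}(B) - c_i - \mathrm{class}(B_i)$ ones.

§ The blocks counted in $o_{i+1}$ but not in $o_i$ satisfy $X_0 \cdots X_{i-1} = B_0 \cdots B_{i-1}$ and $X_i < B_i$, in one of two cases:

1. $\mathrm{class}(X_i) < \mathrm{class}(B_i)$. Idea: summation

$$\sum_{c=0}^{\mathrm{class}(B_i)-1} \binom{t}{c} \times \binom{w - n_i - t}{\mathrm{class}(B) - c_i - c}$$

2. $\mathrm{class}(X_i) = \mathrm{class}(B_i)$ and $\mathrm{offset}(X_i) < \mathrm{offset}(B_i)$. Idea: multiplication

$$\mathrm{offset}(B_i) \times \binom{w - n_i - t}{\mathrm{class}(B) - c_i - \mathrm{class}(B_i)}$$

§ Table sizes:

• $w - n_i - t$ is in $\{0, t, 2t, \ldots, (w/t)t = w\}$.

• $\mathrm{class}(B) - c_i - c$ ranges in $[0, w)$.

• $\mathrm{class}(B_i)$ ranges in $[0, t)$.

• Each value can be represented in w bits.

§ Hence the case-1 sums fit in a table of $O((w/t) \cdot w \cdot t)$ entries of w bits, i.e., $O(w^3)$ bits.](https://image.slidesharecdn.com/kenetayusakuspire172-180615030913/85/Faster-Practical-Block-Compression-for-Rank-Select-Dictionaries-8-320.jpg)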
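The lemma can be checked with a small Python sketch. It precomputes the case-1 sums per pair (r, m) = (w − n_i − t, class(B) − c_i) and then performs one summation lookup and one multiplication per chunk. Several details are assumptions not stated on the slide: chunk offsets follow lexicographic order within a chunk class, blocks are compared chunk by chunk on (class, offset), and all function names are hypothetical. The decoder is our own guess at how the $O((w/t) \log t)$ decoding bound could arise, using a binary search over the t + 2 prefix sums per chunk. The slides shown here do not describe decoding.

```python
from bisect import bisect_right
from itertools import product
from math import comb


def binom(n, k):
    """C(n, k), taken as 0 when k < 0 or k > n."""
    return comb(n, k) if 0 <= k <= n else 0


def chunk_table(t):
    """2^t-entry universal table: chunk -> (class, offset).
    ASSUMPTION: offsets within a chunk class follow lexicographic order."""
    table, seen = {}, [0] * (t + 1)
    for chunk in product((0, 1), repeat=t):  # yields chunks in lexicographic order
        c = sum(chunk)
        table[chunk] = (c, seen[c])
        seen[c] += 1
    return table


def sum_table(w, t):
    """S[(r, m)][cb] = sum over c < cb of C(t, c) * C(r, m - c), cb = 0..t+1,
    where r = w - n_i - t (suffix length) and m = class(B) - c_i."""
    return {(r, m): [sum(binom(t, c) * binom(r, m - c) for c in range(cb))
                     for cb in range(t + 2)]
            for r in range(0, w, t) for m in range(w + 1)}


def encode_chunkwise(block, t, chunks, S):
    """offset(B) with one chunk lookup, one sum lookup and one product per chunk."""
    w, cls = len(block), sum(block)
    offset, c_i = 0, 0
    for i in range(w // t):
        c, off = chunks[tuple(block[i * t:(i + 1) * t])]
        r, m = w - i * t - t, cls - c_i
        offset += S[(r, m)][c]           # case 1: class(X_i) < class(B_i)
        offset += off * binom(r, m - c)  # case 2: same class, smaller offset
        c_i += c
    return cls, offset


def decode_chunkwise(w, t, cls, offset, inverse, S):
    """ASSUMPTION: recover each chunk class by binary search over the prefix
    sums (O(log t) per chunk), then split the remainder with divmod."""
    bits, m = [], cls
    for i in range(w // t):
        r = w - i * t - t
        sums = S[(r, m)]
        c = bisect_right(sums, offset) - 1  # sums[c] <= offset < sums[c + 1]
        off, offset = divmod(offset - sums[c], binom(r, m - c))
        bits.extend(inverse[(c, off)])
        m -= c
    return tuple(bits)


def reference(w, t, chunks):
    """Brute force: rank all 2^w blocks within their class, comparing blocks
    chunk by chunk and chunks by (class, offset)."""
    def key(b):
        return [chunks[b[j:j + t]] for j in range(0, w, t)]

    ranks, seen = {}, [0] * (w + 1)
    for b in sorted(product((0, 1), repeat=w), key=key):
        ranks[b] = (sum(b), seen[sum(b)])
        seen[sum(b)] += 1
    return ranks


w, t = 12, 4
chunks = chunk_table(t)
inverse = {pair: chunk for chunk, pair in chunks.items()}
S = sum_table(w, t)
ref = reference(w, t, chunks)
bad = [b for b in ref
       if encode_chunkwise(b, t, chunks, S) != ref[b]
       or decode_chunkwise(w, t, *ref[b], inverse, S) != b]
print(len(ref), "blocks checked,", len(bad), "mismatches")
```

Python's big integers hide the w-bit word arithmetic here. A practical version would keep the sums and binomial coefficients in machine words.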