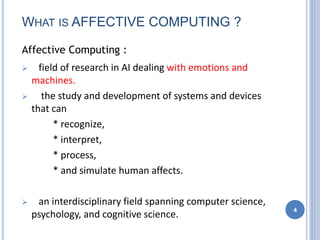

This document provides an overview of affective computing, the field of research concerned with developing systems that can recognize, interpret, process, and simulate human emotions. It discusses psychological theories of emotion, classes of facial expressions, components of emotions, models for representing emotions, and tools for measuring brain activity such as electroencephalography (EEG). It also covers applications of affective computing and areas of ongoing research, such as wearable devices that recognize affective patterns and robots that can express emotions.

![[A,V,S] emotion model](https://image.slidesharecdn.com/finalppt-141026010035-conversion-gate02/85/EMOTION-BASED-COMPUTING-9-320.jpg)

In the [A,V,S] emotion model, an emotion is represented as a 3-tuple [Arousal, Valence, Stance]:

- Arousal: the intensity with which the emotion is experienced, from fatigue at low arousal to surprise at high arousal.
- Valence: the discrimination between positive and negative experiences, from unhappiness at low valence to contentment at high valence.
- Stance: from stern at a closed stance to accepting at an open stance.
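The model defines three axes and the labels at each end, but it gives no numeric scale and no rule for naming a combined state. The Python sketch below stores one point in the [A,V,S] space and reports which poles it lies near. The `AVS` class, the `describe` function, the [-1, 1] range per axis, and the 0.5 cutoff are all illustrative assumptions, not part of the model as presented. Only the pole labels come from the slide.

```python
from dataclasses import dataclass


# Assumption: each axis is normalized to [-1.0, 1.0]; the model itself gives no scale.
@dataclass(frozen=True)
class AVS:
    arousal: float  # intensity of the experience: high -> surprise, low -> fatigue
    valence: float  # positive vs. negative experience: high -> content, low -> unhappiness
    stance: float   # open (positive) -> accepting, closed (negative) -> stern

    def __post_init__(self) -> None:
        # Reject points outside the assumed [-1, 1] range on any axis.
        for name in ("arousal", "valence", "stance"):
            value = getattr(self, name)
            if not -1.0 <= value <= 1.0:
                raise ValueError(f"{name}={value} outside [-1, 1]")


# Pole labels taken from the slide: (label at the low end, label at the high end).
POLES = {
    "arousal": ("fatigue", "surprise"),
    "valence": ("unhappiness", "content"),
    "stance": ("stern", "accepting"),
}


def describe(e: AVS, threshold: float = 0.5) -> list[str]:
    """Name the pole of every axis whose value reaches the (hypothetical) threshold."""
    labels = []
    for axis, (low, high) in POLES.items():
        value = getattr(e, axis)
        if value >= threshold:
            labels.append(high)
        elif value <= -threshold:
            labels.append(low)
    return labels


print(describe(AVS(arousal=0.9, valence=0.1, stance=-0.7)))  # ['surprise', 'stern']
```

Treating each axis as a signed value makes both ends of an axis one continuous dimension, which matches the slide's "high/low" and "open/closed" framing. A point with no axis past the cutoff, such as `AVS(0.0, 0.0, 0.0)`, gets no labels. Mapping a full 3-tuple to a named emotion would require reference points that the model as presented does not supply.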