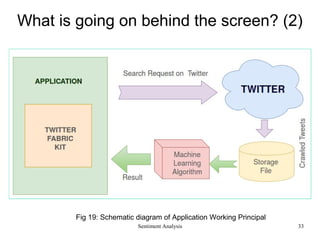

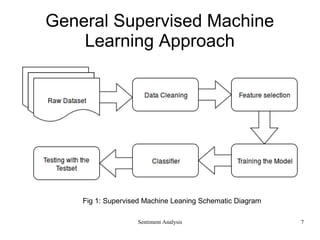

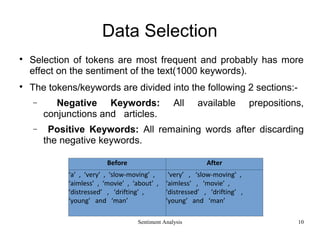

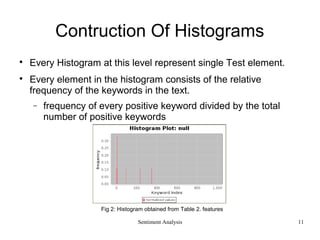

The document presents a comprehensive overview of sentiment analysis on Twitter, discussing its methodology, datasets, and various models such as k-nearest neighbors and recurrent neural networks. It emphasizes data preprocessing, cleaning steps, and the implementation of advanced models like LSTM for improved accuracy. The project highlights practical applications, future improvements, and references to related works in the field.

![Sentiment Analysis

Related Works(1)

Mehto A. proposed a ‘Lexicon based approach for Sentiment Analysis’

based on an aspect catalogue. [1]

The keywords present in aspect catalogue identified in those

sentences in which features of any product are mentioned.

Based on these sentences weighted features from the aspect

catalogue are summed up to find the sentiment of the text.

Nizam and AkÕn unsupervised learning for sentiment classification in

Turkish. [2]

Used tweet words as features and tweet data were clustered in

positive, negative and neutral labelled classes.

Then, this dataset is used to detect classification accuracy with NB,

DT and KNN algorithms.

3](https://image.slidesharecdn.com/abc-170609042314/85/Sentiment-Analysis-on-Twitter-3-320.jpg)

![Sentiment Analysis

Related Works(2)

In a recent work cited as “A New Approach to Target Dependent

Sentiment Analysis with Onto-Fuzzy Logic”.[3]

Hash-tagged words of twitter are given special preference for

determining sentiment of the text.

Hash-tags Category: Topic hash tags , sentiment hash-tags

and sentiment-topic hash tags .

Chikersal P. et. al. developed by Rule-based Classified

combining with Supervised Learning.[4]

The Support Vector Machine (SVM) is trained on semantic,

dependency, and sentiment lexicon based features. By this it

identify +ve, –ve & neutral keyword.

4](https://image.slidesharecdn.com/abc-170609042314/85/Sentiment-Analysis-on-Twitter-4-320.jpg)

![Recurrent Neural Network

Sentiment Analysis 20

• Recurrent Neural Network has the

histograms as inputs and it passes through

one hidden layer and finally output layer

gives the result

• Activation function tanh is used

• Weight and bias matrix continuously

updates matching the output in

subsequent steps (6000-10000)

•

• . varies [-1,1]

• Accuracy : above 75% (approx)

• Vanishing gradient problem

hW ,b( x )=f (WT

x )=f ( ∑

i=1

1000

Wi xi+b)

f ( x)=tanh ( x)=

e

x

−e

−x

ex

+e−x

Fig 8: Recurrent Neural Net](https://image.slidesharecdn.com/abc-170609042314/85/Sentiment-Analysis-on-Twitter-20-320.jpg)

![Long Short Term Memory(LSTM)

•Input gate layer

•Forget gate and tanh layer

•Cell state update layer

•Output layer

Sentiment Analysis 21

f t=σ(Wf .[ht−1 ,xt ]+bf )

it=σ(Wi .[ht−1 ,xt ]+bi )

~

Ct=tanh(WC.[ht−1,xt]+bc )

Ct=ft∗Ct−1+it∗

~

Ct

ot=σ(Wo[ht−1, xt ]+bo)

ht=ot *tanh(Ct )

Fig 9: RNN & LSTM unfolded [6]](https://image.slidesharecdn.com/abc-170609042314/85/Sentiment-Analysis-on-Twitter-21-320.jpg)

![LSTM Network Implementation

Input_1: Layer that represents a particular

input port in the network.

Embedding_1: Turn positive integers

(indexes) into dense vectors of fixed size,

with dropout rate 0.2.

LSTM_1: LSTM Layer. Dropout rates for

gate and itself is 0.2. Activation function

tanh is used.

Dense_1: Just your regular densely-

connected nn layer. Activation function

sigmoid is used.

Output_1: Layer that represents a

particular output port in the network.

Sentiment Analysis 22

Fig 10: LSTM Flowchart [7]](https://image.slidesharecdn.com/abc-170609042314/85/Sentiment-Analysis-on-Twitter-22-320.jpg)