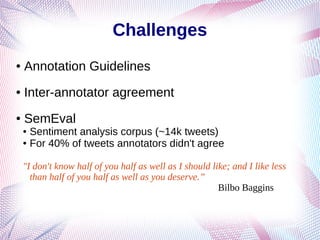

The document explores Natural Language Processing (NLP) and its applications, focusing on text classification and sentiment analysis with supervised machine learning. It covers feature engineering, linear and logistic regression, and the challenges specific to sentiment analysis, such as context dependence and annotation guidelines. It also discusses ways to represent text for classification, from one-hot encoding to word embeddings.

![Defining Features
● Each word: one-hot vector
  ● I = [0, 0, 0, 1, 0, 0, 0, …, 0]
  ● like = [1, 0, 0, 0, 0, 0, 0, …, 0]
  ● cookies = [0, 0, 0, 0, 0, 0, 1, …, 0]
  ● Number of dimensions = size of vocabulary
● Document: bag of words
  ● Word order is lost
  ● Word counts can replace the 0/1 entries
  ● Counts can be weighted by term frequency / inverse document frequency (TF-IDF)
"I like cookies" = [1, 0, 0, 1, 0, 0, 1, …, 0]](https://image.slidesharecdn.com/20150122-sentiment-150521210911-lva1-app6891/85/Sentiment-Analysis-26-320.jpg)
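The following is a minimal Python sketch of the representations on this slide. It assumes lowercasing and whitespace tokenization. The helper names `build_vocab`, `one_hot`, `bag_of_words`, and `tf_idf` are hypothetical. The vocabulary is sorted alphabetically, so the column indices will not match the slide's example vectors. The TF-IDF weighting uses idf = log(N / df), which is one common variant among several.

```python
import math


def build_vocab(docs):
    """Map each distinct lowercase token to a column index (sorted for reproducibility)."""
    tokens = sorted({tok for doc in docs for tok in doc.lower().split()})
    return {tok: i for i, tok in enumerate(tokens)}


def one_hot(word, vocab):
    """One-hot vector: all zeros except a 1 at the word's column."""
    vec = [0] * len(vocab)
    vec[vocab[word.lower()]] = 1
    return vec


def bag_of_words(doc, vocab, counts=False):
    """Bag of words: word order is discarded.

    counts=False -> 1 if the word occurs at all (as on the slide);
    counts=True  -> number of occurrences (term frequency).
    """
    vec = [0] * len(vocab)
    for tok in doc.lower().split():
        if tok not in vocab:  # out-of-vocabulary words are simply skipped
            continue
        idx = vocab[tok]
        vec[idx] = vec[idx] + 1 if counts else 1
    return vec


def tf_idf(docs, vocab):
    """Weight term counts by idf = log(N / df), down-weighting words found in many documents."""
    n_docs = len(docs)
    bows = [bag_of_words(doc, vocab, counts=True) for doc in docs]
    df = [sum(1 for bow in bows if bow[j] > 0) for j in range(len(vocab))]
    idf = [math.log(n_docs / d) if d else 0.0 for d in df]
    return [[count * w for count, w in zip(bow, idf)] for bow in bows]


if __name__ == "__main__":
    docs = ["I like cookies", "I like milk", "cookies and milk"]
    vocab = build_vocab(docs)
    print(vocab)
    print(bag_of_words("I like cookies", vocab))
```

With the three toy documents above, the vocabulary is `and, cookies, i, like, milk`. `bag_of_words("I like cookies", vocab)` gives `[0, 1, 1, 1, 0]`, and `"cookies like I"` produces the same vector, which is exactly the "word order is lost" point. In practice, scikit-learn's `CountVectorizer` and `TfidfVectorizer` provide these representations; note that `TfidfVectorizer` applies a smoothed idf by default.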

![Feature Engineering
● N-grams (as one-hot)
  ● Unigrams: I, like, cookies
  ● Bigrams: “I like” = [0, 0, 0, 0, 1, 0, …, 0]
  ● Trigrams: “I like cookies”
● Character n-grams (from “like”):
  ● li, ik, ke, lik, ike
● Dictionaries:
  ● Great value for sentiment analysis
  ● Very good for domain-specific text
  ● If the document contains any of {love, like, good, cool}, set this feature: [0, 0, 1, 0, …, 0]](https://image.slidesharecdn.com/20150122-sentiment-150521210911-lva1-app6891/85/Sentiment-Analysis-27-320.jpg)
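The next sketch shows how the feature types on this slide might be extracted and combined. It assumes lowercasing and whitespace tokenization, so punctuation is not handled. The function names are hypothetical, and `POSITIVE_WORDS` is simply the slide's example word set. Unlike the slide, which places the dictionary flag at column 2, this sketch appends it as one extra column after the n-gram columns.

```python
def word_ngrams(text, n):
    """All runs of n consecutive tokens, e.g. n=2 gives bigrams such as 'i like'."""
    tokens = text.lower().split()
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def char_ngrams(word, n):
    """All runs of n consecutive characters inside a single word."""
    return [word[i:i + n] for i in range(len(word) - n + 1)]


# Hypothetical sentiment dictionary: the example word set from the slide.
POSITIVE_WORDS = {"love", "like", "good", "cool"}


def dictionary_feature(text, lexicon=POSITIVE_WORDS):
    """1 if the document contains any dictionary word, else 0."""
    return int(any(tok in lexicon for tok in text.lower().split()))


def build_ngram_vocab(docs, max_n=3):
    """Give every word n-gram (n = 1..max_n) seen in the corpus its own column."""
    grams = {g for doc in docs for n in range(1, max_n + 1) for g in word_ngrams(doc, n)}
    return {g: i for i, g in enumerate(sorted(grams))}


def features(text, vocab, max_n=3):
    """One-hot n-gram columns followed by one extra dictionary column."""
    vec = [0] * len(vocab)
    for n in range(1, max_n + 1):
        for g in word_ngrams(text, n):
            if g in vocab:  # n-grams unseen in the corpus are dropped
                vec[vocab[g]] = 1
    return vec + [dictionary_feature(text)]
```

For example, `char_ngrams("like", 2)` returns `["li", "ik", "ke"]` and `char_ngrams("like", 3)` returns `["lik", "ike"]`, which are the character n-grams listed on the slide.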

![Still not convinced?
● Context issues
  ● Narrowing the domain helps
  ● “beer is cool” (positive) vs. “soup is cool” (negative)
  ● “No babies yet!”: praise for condoms, complaint about fertility drugs
  ● “Obama goes full Bush on Syria”
● User-generated content SUCKS!
  ● “Polynesian sauce from chik fila a be so bomb”
● Common sense
  ● “I tried the banana slicer and found it unacceptable. […] the slicer is curved from left to right. All of my bananas are bent the other way.”](https://image.slidesharecdn.com/20150122-sentiment-150521210911-lva1-app6891/85/Sentiment-Analysis-34-320.jpg)
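The following toy sketch illustrates why narrowing the domain helps with the “beer is cool” / “soup is cool” context issue. Both lexicons are hypothetical and written only for illustration. A single generic lexicon gives both sentences the same score, while per-domain lexicons let the same word carry opposite polarity. Word-level lexicons of this kind still do nothing for the common-sense case, such as the banana slicer review.

```python
# Hypothetical generic lexicon, reusing the slide's positive word set.
GENERIC_LEXICON = {"love": 1, "like": 1, "good": 1, "cool": 1}

# Hypothetical domain-specific lexicons: "cool" is praise for beer, criticism for soup.
DOMAIN_LEXICONS = {
    "beer": {"cool": 1, "cold": 1, "warm": -1},
    "soup": {"cool": -1, "cold": -1, "hot": 1},
}


def lexicon_score(text, lexicon):
    """Sum of word polarities; words missing from the lexicon count as 0."""
    return sum(lexicon.get(tok, 0) for tok in text.lower().split())


if __name__ == "__main__":
    for review, domain in [("beer is cool", "beer"), ("soup is cool", "soup")]:
        generic = lexicon_score(review, GENERIC_LEXICON)
        specific = lexicon_score(review, DOMAIN_LEXICONS[domain])
        print(review, generic, specific)
```

The generic lexicon scores both reviews as +1. The domain lexicons score the beer review as +1 and the soup review as -1.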