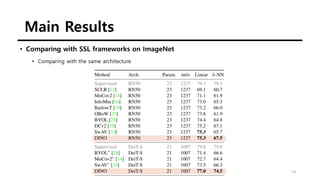

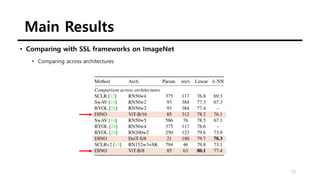

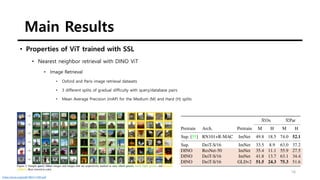

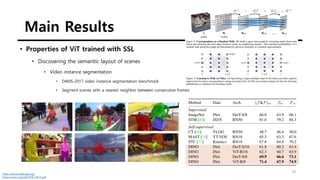

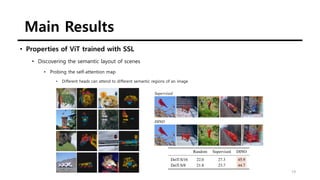

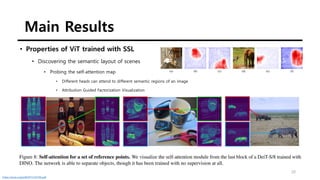

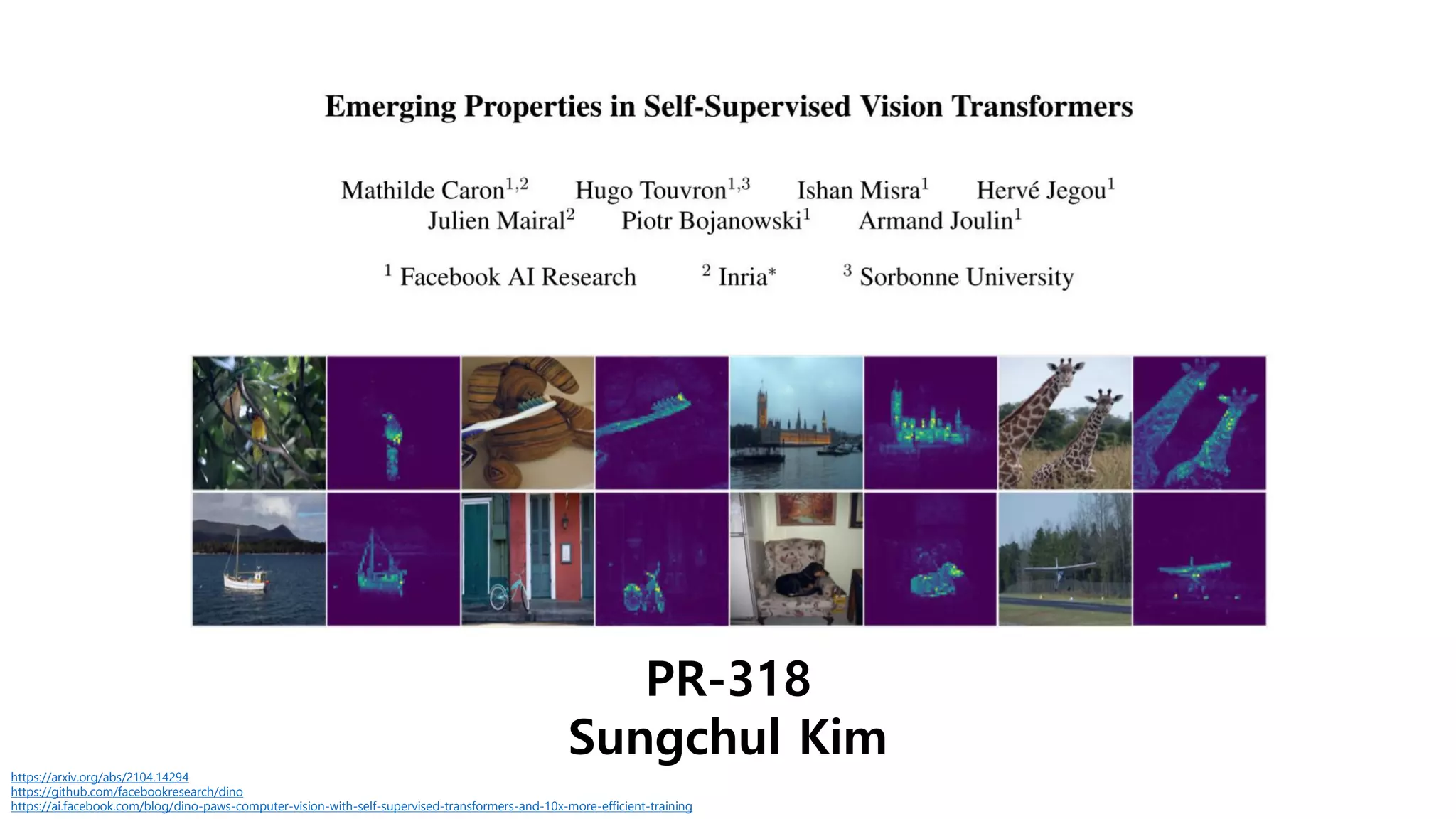

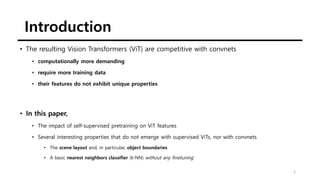

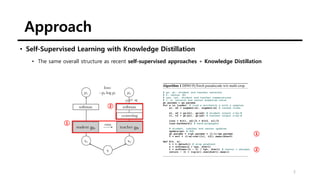

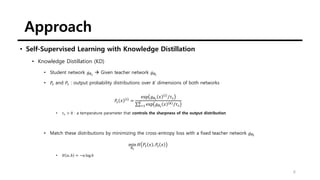

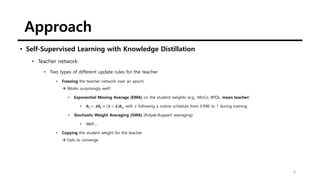

The document summarizes DINO, a self-supervised learning approach for vision transformers. DINO uses a teacher-student framework in which the teacher's output distribution supervises the student through knowledge distillation, with no labels involved. Two global views and several local views (crops) of an image are passed through the student, while only the global views are passed through the teacher. The student is trained so that its prediction for each view, local or global, matches the teacher's prediction for a different global view of the same image. DINO reports state-of-the-art ImageNet results under linear evaluation among self-supervised methods at the time of publication, and it transfers well to downstream tasks. Its self-attention maps also reveal object boundaries and semantic layout, even though no segmentation labels are used.
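The multi-view distillation objective described above can be sketched in plain Python. This is a minimal illustration, not the authors' implementation: the function names (`softmax`, `log_softmax`, `dino_loss`), the `center` vector, and the temperature defaults are assumptions made for this example, and real training would use a tensor library with gradients flowing only through the student.

```python
import math


def softmax(logits, temperature):
    """Temperature-scaled softmax over a list of logits (numerically stable)."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def log_softmax(logits, temperature):
    """Temperature-scaled log-softmax over a list of logits."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    log_z = m + math.log(sum(math.exp(s - m) for s in scaled))
    return [s - log_z for s in scaled]


def dino_loss(student_out, teacher_out, center,
              student_temp=0.1, teacher_temp=0.04):
    """Average cross-entropy between teacher and student over view pairs.

    student_out: one logit list per view, global views first, then local views.
    teacher_out: one logit list per global view, in the same order.
    center:      vector subtracted from teacher logits (assumed helper here).
    Temperatures are illustrative defaults, not values taken from the slides.
    """
    total, n_terms = 0.0, 0
    for t_idx, t_logits in enumerate(teacher_out):
        centered = [x - c for x, c in zip(t_logits, center)]
        target = softmax(centered, teacher_temp)  # sharpened teacher target
        for s_idx, s_logits in enumerate(student_out):
            if s_idx == t_idx:
                continue  # never compare a global view with itself
            log_p = log_softmax(s_logits, student_temp)
            total -= sum(t * lp for t, lp in zip(target, log_p))
            n_terms += 1
    return total / n_terms


# Toy example: 2 global + 2 local views, 3 output dimensions.
teacher = [[5.0, 0.0, 0.0], [5.0, 0.0, 0.0]]
center = [0.0, 0.0, 0.0]
agreeing_student = [[5.0, 0.0, 0.0]] * 4
disagreeing_student = [[0.0, 0.0, 5.0]] * 4

loss_agree = dino_loss(agreeing_student, teacher, center)
loss_disagree = dino_loss(disagreeing_student, teacher, center)
print(loss_agree < loss_disagree)
```

With two global views and two local views, each teacher output is compared against three student outputs, so the loss averages six cross-entropy terms. The student that agrees with the teacher gets the smaller loss.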

![Approach

11

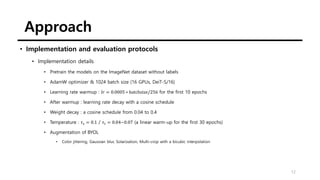

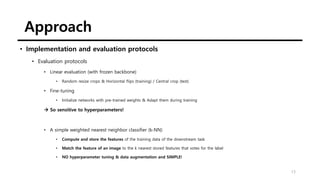

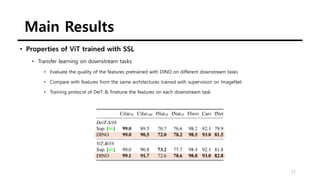

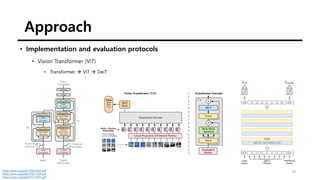

• Implementation and evaluation protocols

• Vision Transformer (ViT)

• N=16 (“/16”) or N=8 (“/8”)

• The patches → linear layer → a set of embeddings

• The class token [CLS] for consistency with previous works

• NOT attached to any label nor supervision](https://image.slidesharecdn.com/210516emergingpropertiesinself-supervisedvisiontransformers-210516144611/85/Emerging-Properties-in-Self-Supervised-Vision-Transformers-11-320.jpg)
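The patch pipeline on this slide (patches → linear layer → embeddings, plus a [CLS] token) can be sketched as follows. This is a simplified illustration under stated assumptions: a single-channel image stored as nested lists, a hypothetical weight matrix and bias standing in for the linear layer, and a 224×224 input resolution that is chosen for the example and is not given on the slide. The helper names are invented for this sketch.

```python
def patchify(image, patch_size):
    """Split an H x W image (list of rows) into flattened, non-overlapping
    patch_size x patch_size patches, in row-major order."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image size must be divisible by patch size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patches.append([image[r][c]
                            for r in range(top, top + patch_size)
                            for c in range(left, left + patch_size)])
    return patches


def linear_embed(patch, weight, bias):
    """Apply a linear layer: weight has one row per output dimension."""
    return [sum(w * x for w, x in zip(row, patch)) + b
            for row, b in zip(weight, bias)]


def build_tokens(image, patch_size, weight, bias, cls_token):
    """Return the token sequence: [CLS] followed by one embedding per patch."""
    embeddings = [linear_embed(p, weight, bias)
                  for p in patchify(image, patch_size)]
    return [cls_token] + embeddings


def sequence_length(image_size, patch_size):
    """Number of tokens for a square image: patches per side squared, plus [CLS]."""
    per_side = image_size // patch_size
    return per_side * per_side + 1


# Toy 4x4 image with 2x2 patches and a 2-dimensional embedding.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
weight = [[1, 0, 0, 0],   # picks the top-left pixel of each patch
          [0, 0, 0, 1]]   # picks the bottom-right pixel of each patch
bias = [0, 0]
cls_token = [9, 9]        # stands in for a learnable vector
tokens = build_tokens(image, 2, weight, bias, cls_token)
print(tokens)

# Assuming a 224x224 input: "/16" gives 14*14 patches, "/8" gives 28*28.
print(sequence_length(224, 16), sequence_length(224, 8))
```

Halving the patch size from 16 to 8 quadruples the number of patch tokens, which is why "/8" models are noticeably more expensive to run than "/16" models at the same input resolution.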