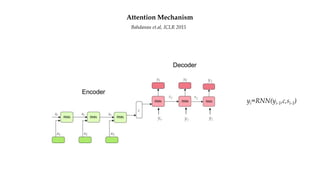

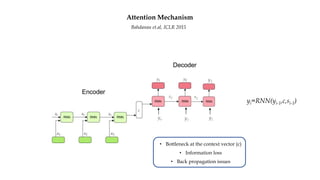

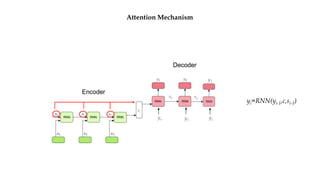

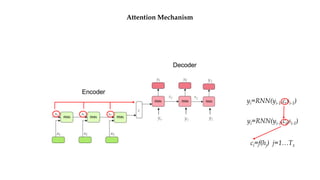

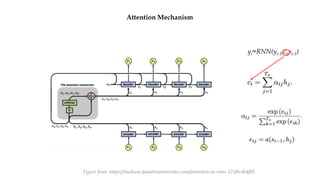

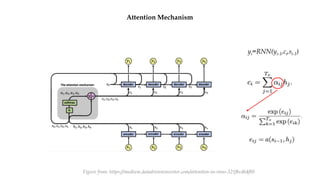

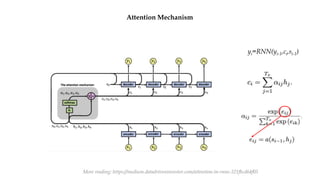

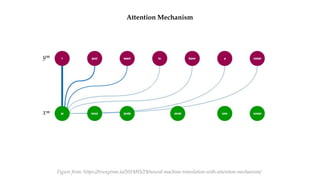

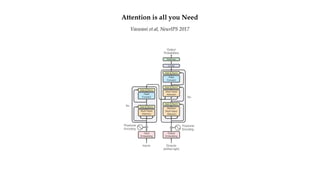

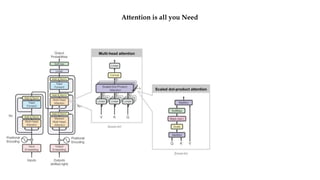

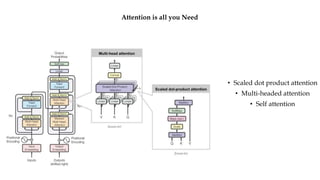

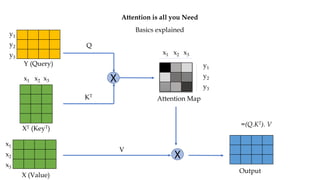

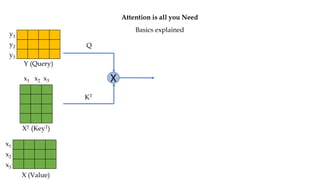

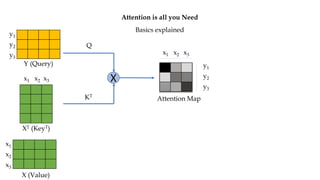

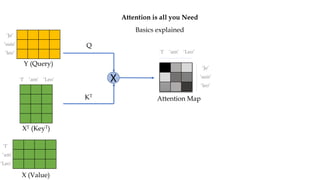

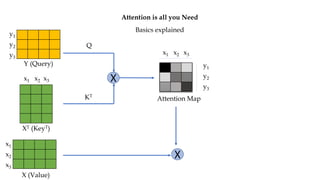

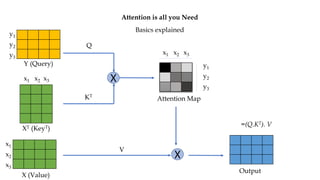

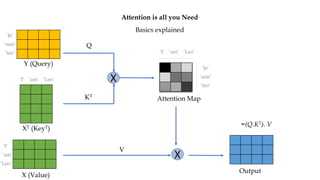

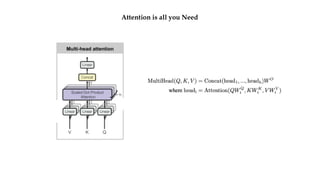

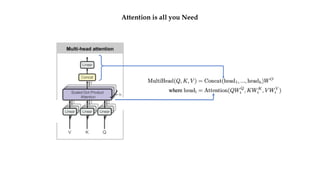

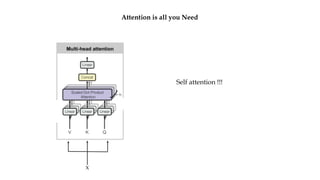

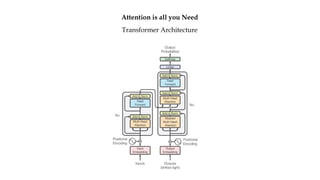

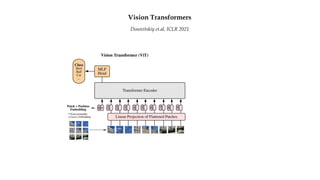

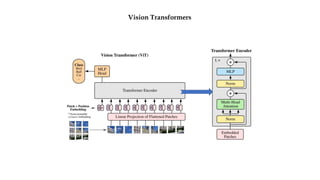

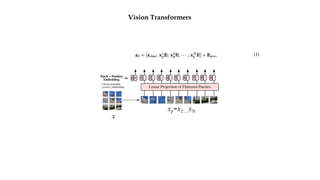

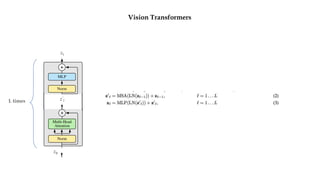

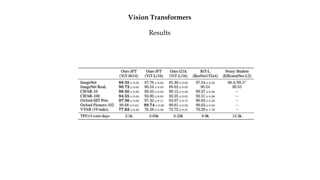

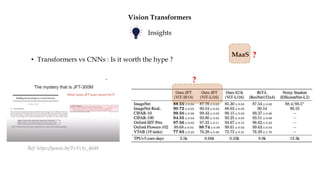

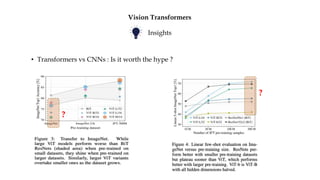

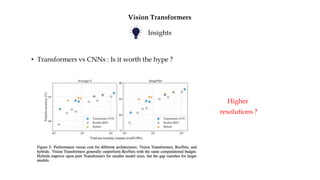

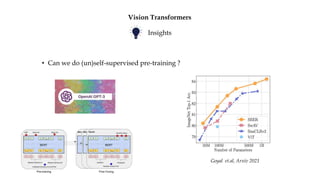

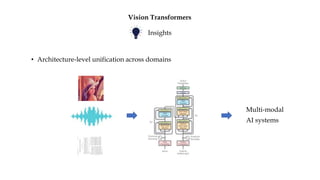

The document discusses visual transformers and attention mechanisms in computer vision. It summarizes recent work on applying transformers, originally developed for natural language processing, to vision tasks. This includes Vision Transformers, which treat an image as a sequence and apply self-attention to it. Along the way, the document reviews key papers on attention mechanisms and the Transformer architecture.
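To make the idea of treating an image as a sequence more concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not code from the slides: the helper names (`image_to_patches`, `self_attention`, `random_matrix`), the toy 8x8 grayscale image, the patch size of 4, and the embedding width `d_model = 8` are all hypothetical choices, and the weights are random rather than learned. The sketch cuts the image into non-overlapping patches, projects each flattened patch to a token, and runs one single-head scaled dot-product self-attention step over the tokens.

```python
import math
import random

def image_to_patches(image, patch_size):
    """Split an H x W grid of pixel values into a row-major sequence of flattened patches."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image sides must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [image[top + dy][left + dx]
                     for dy in range(patch_size)
                     for dx in range(patch_size)]
            patches.append(patch)
    return patches

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    """Numerically stable softmax over one row of scores."""
    peak = max(row)
    exps = [math.exp(value - peak) for value in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over a token sequence."""
    q, k, v = matmul(tokens, w_q), matmul(tokens, w_k), matmul(tokens, w_v)
    scale = math.sqrt(len(k[0]))
    k_transposed = [list(col) for col in zip(*k)]
    scores = matmul(q, k_transposed)
    # Each row of weights says how much one patch attends to every patch.
    weights = [softmax([s / scale for s in row]) for row in scores]
    return matmul(weights, v), weights

def random_matrix(rows, cols, rng):
    """Random stand-in for a learned weight matrix (assumption for illustration)."""
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
image = [[rng.random() for _ in range(8)] for _ in range(8)]  # toy 8x8 grayscale image
patches = image_to_patches(image, patch_size=4)               # 4 patches, 16 values each
d_model = 8
tokens = matmul(patches, random_matrix(16, d_model, rng))     # linear patch embedding
w_q, w_k, w_v = (random_matrix(d_model, d_model, rng) for _ in range(3))
output, weights = self_attention(tokens, w_q, w_k, w_v)
print(len(patches), len(patches[0]), len(output), len(output[0]))  # prints: 4 16 4 8
```

The sketch deliberately leaves out pieces that a full Vision Transformer is generally described as having, such as position embeddings, a class token, multiple attention heads, and the feed-forward layers. It only shows the core step the summary refers to: once the image is a sequence of patch tokens, self-attention lets every patch weigh every other patch.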