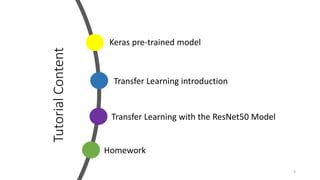

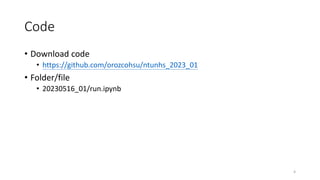

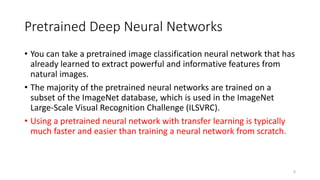

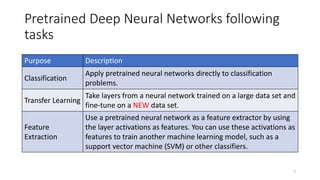

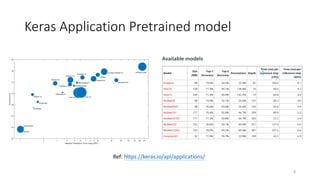

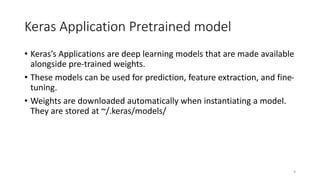

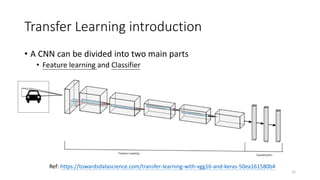

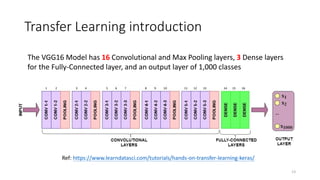

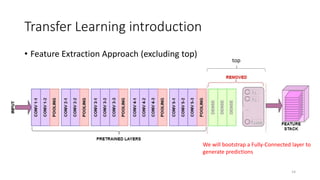

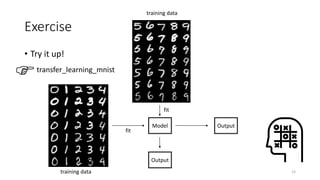

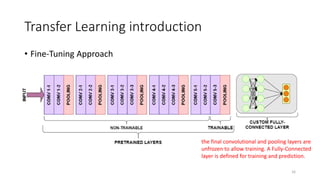

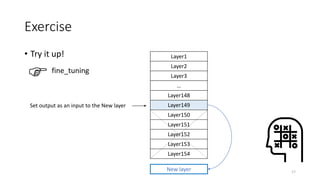

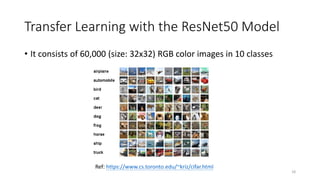

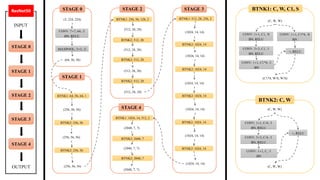

The document discusses transfer learning, particularly using pretrained models like ResNet50 and VGG16 for image classification tasks. It outlines the benefits of transfer learning, including faster model training and effective feature extraction from existing neural networks. It also provides practical resources, exercises, and code links to help practitioners implement transfer learning using Keras.
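
As a rough illustration of the feature-extraction approach summarized above, the sketch below reuses a pretrained ResNet50 as a frozen base and trains only a small classification head on top. It is a minimal sketch under stated assumptions, not the code the slides link to: the helper name `build_transfer_model`, the `num_classes` parameter, the 224x224 input size, and the choice of optimizer and loss are all hypothetical choices made for this example.

```python
from tensorflow import keras
from tensorflow.keras import layers
from tensorflow.keras.applications import ResNet50


def build_transfer_model(num_classes, weights="imagenet", input_shape=(224, 224, 3)):
    """Hypothetical helper: frozen ResNet50 base plus a trainable softmax head."""
    # include_top=False drops the original ImageNet classifier;
    # pooling="avg" turns the final feature maps into one vector per image.
    base = ResNet50(include_top=False, weights=weights,
                    input_shape=input_shape, pooling="avg")
    base.trainable = False  # keep the pretrained feature extractor fixed

    inputs = keras.Input(shape=input_shape)
    # training=False keeps the BatchNorm layers of the base in inference mode.
    features = base(inputs, training=False)
    outputs = layers.Dense(num_classes, activation="softmax")(features)

    model = keras.Model(inputs, outputs)
    # Assumed setup: integer class labels, hence the sparse loss.
    model.compile(optimizer="adam",
                  loss="sparse_categorical_crossentropy",
                  metrics=["accuracy"])
    return model
```

Because only the final `Dense` layer has trainable weights, each training step updates far fewer parameters than training the whole network would, which is where the faster training comes from. Input images would normally be passed through `keras.applications.resnet50.preprocess_input` before being fed to the model. Swapping in `VGG16` from the same `keras.applications` module should follow the same pattern, with its own `preprocess_input`.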